
Discretion in Hiring

Mitch Hoffman

Lisa B. Kahn

Danielle Li

University of Toronto

Yale University & NBER

Harvard University

March 18, 2015

PRELIMINARY & INCOMPLETE

Please do not cite or circulate without permission

Abstract

This paper examines whether and how firms should adopt job testing technologies.

If hiring managers have other sources of information about a worker’s quality (e.g.

interviews), then firms may want to allow managers to overrule test recommendations

for candidates they believe show promise. Yet if firms are concerned that managers

are biased or have misaligned objectives, it may be optimal to impose hiring rules

even if this means ignoring potentially valuable soft information. We evaluate the

staggered introduction of a job test across 130 locations of 15 firms employing service

sector workers. We show that testing improves the match-quality of hired workers, as

measured by their completed tenure, by about 14%. These gains largely come from

managers who follow test recommendations, as opposed to those who frequently make

exceptions to test recommendations. That is, when faced with similar applicant pools,

managers who make more exceptions systematically end up with workers with lower

tenure. In this setting, our results suggest that firms can improve productivity by

taking advantage of the verifiability of test scores to limit managerial discretion.

1 Introduction

Hiring the right workers is one of the most important and difficult problems that a firm

faces. Resumes, interviews, and other screening tools are often limited in their ability to

reveal whether a worker has the right skills or will be a good fit. In recent years, the rise

of “workforce analytics” has led to the development of new job tests based on gathering and

mining data on worker productivity for regularities that predict performance.1

Job testing has the potential to both improve information about the quality of job

candidates and to reduce agency problems between firms and their hiring managers. As

with interviews or referrals, job tests provide an additional signal of a worker’s quality. Yet,

unlike interviews and other subjective assessments, job tests provide information about the

quality of applicants that is potentially contractible: the firm can observe an applicant’s test

score just as easily as its hiring managers can. As a result, job tests make it easier for firms

to centralize hiring decisions.

This paper studies the impact of job testing on firm hiring policies. Specifically, we ask two

related questions: what is the impact of job testing on the quality of hired workers, and

to what extent should firms rely on job test recommendations? That is, should firms allow

managers the discretion to weigh job tests alongside interviews and other signals as they see

fit, or should they mandate a specific weight to be placed on job tests, thereby centralizing

authority? The latter allows firms to limit manager biases or mistakes, but at the expense

of ignoring potentially valuable local information that may not be contractible.2

To gain empirical traction on these questions, we analyze the introduction of a computerized job test across a group of firms employing low-skill service sector workers (our data

provider has requested anonymity, but these positions are similar to data entry or call center

work). Prior to the introduction of testing, our sample firms employed hiring managers who

conducted interviews and made hiring decisions. After the introduction of testing, hiring

1

See, for instance, a recent article in The Atlantic magazine: http://www.theatlantic.com/

magazine/archive/2013/12/theyre-watching-you-at-work/354681/.

2

See Dessein (2002), Alonso, Dessein, Matouschek (2008) and Rantakari (2008).


managers were also given access to a test recommendation for each worker: green (hire),

yellow (possibly hire), red (do not hire).3

We begin with a model describing how job testing impacts firm hiring. In our model,

firms make hiring decisions with the help of a potentially biased manager. The manager

observes a private signal of a job applicant’s match quality, but may also care about worker

characteristics that are unrelated to job performance. When job testing is introduced, the

firm acquires a second, verifiable, signal of the applicant’s quality. Firms may want to

provide test results to their hiring managers but allow them to make exceptions to test

recommendations if their other signals indicate that a candidate has promise. If, however,

the hiring manager’s beliefs or preferences are not fully aligned with those of the firm, then

it may be difficult for the firm to know whether the manager is recommending a candidate

with poor test scores because he or she has evidence to the contrary or because he or she

is simply mistaken or biased. In the latter case, the firm may prefer to limit discretion and

place more weight on test results, even if this means ignoring the private information of the

manager.

Our empirical work directly examines the implications of this model. We have two

primary findings. First, the introduction of job testing increases the match quality of workers

that firms are able to hire. We demonstrate this by examining the staggered introduction

of job testing across 130 locations of 15 sample firms. All else equal, we find that workers

hired with job testing stay at the job 14% longer than workers hired without. We provide

a number of tests in the paper to ensure that our results are not driven by the endogenous

adoption of testing or by other policies that firms may have concurrently implemented.

Second, we show that firms can improve the match quality of their workers by limiting

managerial discretion. We arrive at this conclusion by using information on the applicant

pool to compute the extent to which managers make exceptions to test recommendations

3

The test is an online questionnaire that consists of four modules: factual qualifications taken from a

candidate’s completed job application, a personality assessment, a cognitive skills assessment, and a series

of multiple choice questions about how the applicant would handle scenarios she might encounter on the

job. Our data provider then uses a proprietary algorithm to aggregate this information into a single test

recommendation.


(e.g. hiring a yellow when a green applicant goes unhired). If managers are acting in the best

interest of the firm, then they should only make exceptions when their other signals indicate

that a candidate will be productive: this would generate a positive relationship between the

number of exceptions and the quality of hires. Yet, we show that the exercise of discretion

is strongly correlated with worse outcomes, even after controlling for a variety of location,

applicant pool, and cohort effects. That is, even when faced with applicant pools that are

identical in terms of test results, managers that make more exceptions systematically hire

workers with worse match quality. Our model shows that this negative correlation implies

that firms could improve productivity by placing more weight on the recommendations of

the job test.

As data analytics becomes more frequently applied to human resource management decisions, it becomes increasingly important to understand how these new technologies impact

the organizational structure of the firm and the efficiency of worker-firm matches. Ours is

the first paper to provide evidence that managers do not optimally weigh information when

making hiring decisions and to offer evidence that job testing technologies may be able to

provide a contractual solution. We contribute to a small, but growing literature on the impact of screening technologies on the quality of hires.4 Our paper is most closely related to

Autor and Scarborough (2008), which provides the first estimate of the impact of job testing

on worker performance. The authors evaluate the introduction of a job test in retail trade,

with a particular focus on whether testing will have a disparate impact on minority hiring.

Our paper, by contrast, studies the implications of job testing on the allocation of authority

within the firm. In particular, most studies of hiring focus on improving information about

potential hires. In addition to documenting this fact, our paper provides direct evidence that

screening technologies can help resolve agency problems by improving information symmetry, and thereby relaxing contracting constraints. In this spirit, our paper is also related to

4

Burks et al. (2014) and Pallais and Sands (2013), for instance, provide empirical evidence on the value

of referrals in the hiring process.


papers on bias, discretion, and rule-making in other settings.5 Our work is also relevant to

a broader literature on hiring and employer learning.6 Hiring is made even more challenging

because firms must often entrust these decisions to managers who may be biased or exhibit

poor judgment.7

The remainder of this paper proceeds as follows. Section 2 describes the setting and

data. Section 3 presents a model of hiring with both hard and soft signals of quality. Section

4 evaluates the impact of testing on the quality of hires, and Section 5 evaluates the role of

discretion in test adoption. Section 6 concludes.

2 Setting and Data

Over the past decades, firms have increasingly incorporated testing into their hiring

practices. One explanation for this shift is that the increasing power of data analytics has

made it easier to look for regularities that predict worker performance. We obtain data from

an anonymous consulting firm that follows such a model. We hereafter term this firm the

“data firm.”

The primary product offered by the data firm is a test designed to predict performance

for a particular job in the low-skilled service sector. Our data agreement prevents us from

revealing the exact nature of the job, but it is conducted in a non-retail environment and is

similar to data entry work or telemarketing. Applicants are administered an online questionnaire consisting of a large battery of personality, cognitive skills, and job scenario questions.

5

Paravisini and Schoar (2012) find that credit scoring technology aligns loan officer incentives and improves

lending performance. Li (2012) documents an empirical tradeoff between expertise and bias among grant

selection committees.

6

A central literature in labor economics emphasizes that imperfect information generates substantial

problems for allocative efficiency in the labor market. The canonical work of Jovanovic (1979) points out

that match quality is an experience good that is likely difficult to discern before a worker has started the job.

The employer learning literature, beginning with empirical work by Farber and Gibbons (1996) and Altonji

and Pierret (2001), provides evidence that firms likely learn about a worker’s general productivity as a worker

gains experience in the labor market, and Kahn and Lange (2014) show that this learning continues to be

important even late into a worker’s lifecycle because their productivity constantly evolves. This literature

suggests imperfect information is a substantial problem facing those making hiring decisions. Finally, Oyer

and Schaefer (2011) note in their handbook chapter that hiring remains an important open area of research.

7

This notion stems from the canonical principal-agent problem, for instance as in Aghion and Tirole

(1997). In addition, many other models of management focus on moral hazard problems generated when a

manager is allocated decision rights.

This firm then matches these responses with subsequent performance in order to identify

the questions or sets of questions that are the most predictive of future workplace success in

this setting. These correlations are then aggregated by a proprietary algorithm to deliver a

single green, yellow, red job test score.

Our data firm provides its services to many clients (hereafter, “client firms”). We have

personnel records for 15 such client firms, with workers dispersed across 130 locations in the

U.S. Before partnering with our data firm, client firms kept records for each hired worker,

consisting of start and stop dates, the reason for the exit, some information about job

function, and location.8 Each client firm shared these records with our data firm, once a

partnership was established.9 From this point, our data firm keeps records of all applicants,

their test scores, and the unique ID of the hiring manager responsible for a given applicant,

in addition to the personnel records (exactly as described above) for hired workers.

In the first part of this paper, we examine the impact of testing technology on worker

productivity. For any given client firm, testing was rolled out gradually at roughly the

location level. However, because of practical considerations in the adoption process, not all

workers in a given location and time period share the same testing status. That is, in a

given month some applicants in a location may have test scores, while others do not.10 We

therefore impute a location-specific date of testing adoption. Our preferred metric for the

date of testing adoption is the first date in which at least 50% of workers hired in that month

and location have a test score. Once testing is adopted at a location, based on our definition,

8

The information on job function is related to the type of service provided by the worker, details of which

are difficult to elaborate on without revealing more about the nature of the job.

9

One downside of the pre-testing data is that they are collected idiosyncratically across client firms. For

some clients, we believe we have a stock-sampling problem: when firms began keeping track of these data,

they retrospectively added in start dates for anyone currently working. This generates a survivor-bias for

workers employed when data collection began. We infer the date when data collection began as the first

recorded termination date. Workers hired before this date may have only been a selected sample of the

full set of hires, those that survived up to that date. We drop these workers from our sample, but have

experimented with including them along with flexible controls for being "stock sampled" in our regressions.

10

We are told by the data firm, however, that the intention of clients was to bring testing into a location

at the same time for everyone in that location.


we impose that testing is thereafter always available.11 We also report specifications in which

testing adoption is defined as the first month in which any hire has a test score, as well as

individual-level specifications in which our explanatory variable is an indicator for whether

a specific individual receives testing.

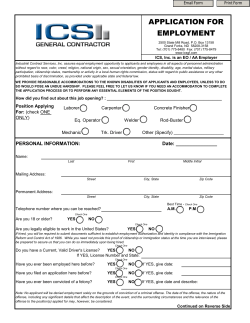

Table 1 provides sample characteristics. Across our whole sample period we have nearly

300,000 hires; two-thirds of these were observed before testing was introduced and one-third

were observed after, based on our preferred imputed definition of testing. Once we link

applicants to the hiring manager responsible for them (only after testing), we have 585 such

hiring managers (or “recruiters”) in our data. Post-testing, when we have information on

applicants as well as hires, we have nearly 94,000 hires and 690,000 applicants who were not

hired.

We will find it useful to define an “applicant pool” as a group of workers under consideration for a job at the same location, month and with the same manager. We restrict to

months in which at least one worker was hired. We allow non-hired applicants to be under

consideration for up to 4 months, from their application date.12 From Table 1, we have 4,529

such applicant pools in our data consisting of, on average, 268 applicants. On average, 14%

of workers in a given pool are hired.

Table 1 also shows the distribution of test scores and the associated hire probabilities.

Roughly 40% of all applicants receive a “green”, while “yellow” and “red” candidates make

up roughly 30% each. The test score is predictive of whether or not an applicant is hired.

Greens and yellows are hired at a rate of roughly 20%, while only 8% of reds are hired. Still,

it is very common for the test to be “over-ruled”. In three-quarters of the pools a yellow is hired

when a green also applied. In nearly a third of the pools, a red was hired when greens or

yellows were available. These summary statistics foreshadow substantial variation in testing

adoption that we exploit later.

11

This fits patterns in the data, for example, that most locations weakly increase the share of applicants

that are tested throughout our sample period.

12

We observe in our post-testing data that over 90% of hired workers are hired within 4 months of the

date they first submitted an application.

3 Model

We formalize the hiring decisions of a firm that has two sources of information about

the quality of job candidates. The first is information from interviews conducted by a hiring

manager. During these interviews, the manager receives a private and unverifiable signal

of the applicant’s quality. The firm can also obtain information from job testing. This

information is observable and verifiable to both the firm and the manager.

The introduction of job testing can thus improve hiring in two ways. First, it adds

new information, which should result in better hiring decisions. Second, unlike the soft

information a manager gleans during an interview, the test is observable and verifiable. It

therefore gives firms the option to implement hiring rules based on the test. The firm then

has the following decision: should it delegate hiring authority to the manager, or make these

decisions centrally?

Allowing the manager discretion over hiring decisions could result in more productive

hires because the manager can incorporate both the interview and test information, but it

can also leave the firm vulnerable to any potential managerial bias. The firm can instead

impose rules based solely on the public signal, removing any scope for managerial bias, but

also using only a subset of available information.

The following model formalizes this intuition and describes when discretion is optimal.

From this, we generate the empirical predictions we then take to our data.

3.1

Setup

A mass one of applicants apply for job openings within a firm. The firm’s payoff from hiring worker $i$ is given by the worker’s match quality, $a_i$, which is unobserved by all parties but whose distribution is known: $a \sim N(0, \sigma_0^2)$. The firm’s objective is to hire a proportion, $W$, of workers that maximizes expected match quality. The firm employs hiring managers whose interests are imperfectly aligned with those of the firm. In particular, a manager’s expected payoff from hiring worker $i$ is given by:

$$U_i = (1 - k) a_i + k b_i.$$

In addition to valuing match quality, managers also receive an idiosyncratic payoff $b_i$, which they value with weight $k$, assumed to fall between 0 and 1. We assume that $a \perp b$.

The additional quality, $b$, can be thought of in two ways. First, it may capture idiosyncratic preferences of the manager for workers in certain demographic groups or with

similar backgrounds (same alma mater, for example). Second, b can represent beliefs that

the manager has about worker quality that are untrue. For example, the manager may genuinely have the same preferences as the firm but draw incorrect inferences from his or her

interview.13

The manager privately observes information about $a_i$ and $b_i$. First, the manager observes a noisy signal, $s_i$, of match quality:

$$s_i = a_i + \epsilon_i$$

where $\epsilon_i \sim N(0, \sigma_\epsilon^2)$ is independent of $a_i$ and $b_i$. The parameter $\sigma_\epsilon^2 \in \mathbb{R}_+ \cup \{\infty\}$ measures the

level of the manager’s information. A manager with perfect information on $a_i$ has $\sigma_\epsilon^2 = 0$,

while a manager with no private information has $\sigma_\epsilon^2 = \infty$.

We assume for simplicity that $b_i$ is perfectly observed by the hiring manager, while the

firm observes neither b nor k, the degree to which the manager’s incentives are misaligned

with those of the firm. The parameter k measures the manager’s bias. This captures the

extent to which the variability of his or her hiring preferences is determined by idiosyncratic

13

If a manager’s mistakes were random noise, we can always separate the posterior belief over worker

ability into a component related to true ability, and an orthogonal component resulting from their error,

which fits our assumed form for managerial utility.

factors rather than those preferred by the firm. An unbiased manager has $k = 0$, while a

manager who makes decisions entirely based on idiosyncratic factors corresponds to $k = 1$.

A manager’s type is defined by the pair $(k, 1/\sigma_\epsilon^2)$, corresponding to the bias and precision

of private information.

Partway through our sample, a public signal of match quality ($a$) becomes available in

the form of a test score, $t$. Let $t \in \{G, Y\}$; a share of workers $p_G$ are type $G$, and a share

$p_Y = 1 - p_G$ are type $Y$.14 Type $G$ workers have higher mean match quality: $a_i \mid t \sim N(\mu_a^t, \sigma_a^2)$

with $\mu_a^G > \mu_a^Y$.15

In this paper, we consider the following two hiring policies that firms may wish to adopt

with the introduction of testing:

1. Discretion: Using both the test and private signals, the manager picks any W share of

candidates she or he chooses.

2. No Discretion: The firm hires workers using only information from the test score, with

no role for the manager.

Discretion is the status quo before testing, and also, we believe, after. Figure 3 demonstrates that the vast majority of hiring managers make exceptions to test recommendations

at some point, indicating that they are unlikely to be bound by formal rules. The optimal

policy in this case is a threshold rule such that a manager hires a worker if and only if the

expected value of $U_i$ conditional on $s_i$, $b_i$, and $t$ is at least $\bar{U}$.

No discretion is a policy that is only made possible with the introduction of testing

and corresponds to complete centralization of the hiring decision. The firm’s optimal policy

under no discretion is to hire in order of the test score, randomizing within Y or G groups

to resolve indifferences.
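The two policies can be illustrated with a small Monte Carlo sketch. Everything numerical here is an assumption chosen for illustration rather than a value from the paper: the group means `mu_green` and `mu_yellow`, the normal distribution and variance of $b_i$, the sample size, and the function name `simulate_policies`. The manager is assumed to form $E[a_i \mid s_i, t]$ by standard normal updating and to hire the top $W$ share by expected utility.

```python
import random
from statistics import mean


def simulate_policies(k, sigma_eps, n=20000, hire_share=0.14, p_green=0.4,
                      mu_green=0.5, mu_yellow=-0.5, sigma_a=1.0, sigma_b=1.0,
                      seed=0):
    """Return mean match quality of hires under (discretion, no discretion)."""
    rng = random.Random(seed)
    # Weight a Bayesian manager places on the private signal relative to the test mean.
    weight = sigma_a ** 2 / (sigma_a ** 2 + sigma_eps ** 2)
    applicants = []
    for _ in range(n):
        green = rng.random() < p_green
        mu = mu_green if green else mu_yellow
        a = rng.gauss(mu, sigma_a)              # true match quality
        s = a + rng.gauss(0.0, sigma_eps)       # manager's private signal
        b = rng.gauss(0.0, sigma_b)             # idiosyncratic payoff (assumed normal)
        expected_a = mu + weight * (s - mu)     # E[a | s, t]
        utility = (1 - k) * expected_a + k * b  # E[U | s, b, t]
        applicants.append((utility, a, green))
    n_hire = int(hire_share * n)
    # Discretion: the manager hires the top W share ranked by expected utility.
    by_utility = sorted(applicants, key=lambda x: x[0], reverse=True)
    discretion = mean(a for _, a, _ in by_utility[:n_hire])
    # No discretion: applicants arrive in random order, so taking the first
    # n_hire greens is a random draw among greens (W < p_G is assumed).
    greens = [a for _, a, green in applicants if green]
    no_discretion = mean(greens[:n_hire])
    return discretion, no_discretion


unbiased = simulate_policies(k=0.0, sigma_eps=1.0)
fully_biased = simulate_policies(k=1.0, sigma_eps=1.0)
```

Under these assumed parameters, an unbiased and informed manager outperforms the test-only rule, while a manager who hires purely on $b_i$ does worse than it, which is the tradeoff between information and bias described above.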

14

We assume that $W < p_G$, i.e., there are always enough type $G$ applicants to meet the hire rate requirement. This matches our data well, where the average hire rate is 14% and the share of applicants with a G

score is 40%. We furthermore assume that $t \perp \epsilon_i$.

15

This distribution results from Bayesian updating on $a$ based on the prior and a continuous, normally

distributed signal, t. The posterior mean conditional on the signal is a weighted average of the prior and the

new signal. Under the normality assumptions, the posterior variance takes the same value, regardless of the

signal.


The firm may also consider a hybrid policy, such as requiring managers to hire lexicographically by the public test score before choosing their preferred candidates. We

focus on the two extreme cases to illustrate the tradeoff between information and bias, and

because they are optimal for some salient cases.

3.2 Model Predictions

Our goal in this paper is to better understand optimal hiring policy, given that the introduction of testing allows for the possibility of centralization. We first generate a number of

predictions for the discretion hiring policy. These will help build intuition for the trade-offs

inherent to such a policy. They are also useful because discretion is the default policy before

testing and, so far, likely after it as well.

We then move to predictions about the optimal hiring policy as a function of managerial

information and bias. All proofs are in the appendix.

Proposition 3.1 Holding managerial type $(k, 1/\sigma_\epsilon^2)$ constant, $E[a \mid \text{Hire}]$ is weakly greater with

the test signal than without.

Intuitively, the test must weakly improve hires because it may add new information. If

there is no new information (or when $k = 1$) then managers are free to ignore it and be at

least as well off as before.

Proposition 3.2 The average match quality of hires is decreasing in managerial bias, $k$,

and weakly increasing in the precision of the manager’s private information, $1/\sigma_\epsilon^2$.

Both of these results are intuitive. When k is lower, the manager focuses more on

maximizing a, while when k is higher, the manager focuses more on maximizing b at the

expense of $a$. Similarly, $1/\sigma_\epsilon^2$ is the precision of the manager’s private information about

match quality. As long as the manager cares about $a$ ($k \neq 1$), this increased precision allows

him or her to make better hiring decisions.


Our model shows that testing can improve hires under discretion, but there is a tradeoff. Managers can widen the information set with their private signal on match quality, $s_i$,

but exploiting this information leaves the firm prone to managerial bias, $k$. This tradeoff

is difficult to assess empirically since we do not observe managerial quality. However, we do

observe the choice set (in the form of applicant test scores) available to managers when they

made hiring decisions.

We define a hiring decision as an “exception” if the worker would not have been hired

under the rules-based regime: that is, every time a Y worker is hired when a G worker is

available but not hired. Exceptions are important because they drive differences in outcomes

between discretion-based and no-discretion hiring policies. Put simply, if managers never

made any exceptions, then it would not matter whether or not firms granted them discretion.
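As a concrete illustration of this definition, the sketch below counts exceptions within a single applicant pool. It assumes a simplified rule, namely that a hire is an exception whenever some applicant with a strictly better test color in the same pool went unhired; the function name `count_exceptions` and the `(score, hired)` record layout are hypothetical, and the measure used in the empirical sections may be constructed differently.

```python
def count_exceptions(pool):
    """Count hires made while a strictly better-scored applicant went unhired.

    `pool` is a list of (score, hired) pairs with score in
    {"green", "yellow", "red"} and hired a bool.
    """
    rank = {"green": 0, "yellow": 1, "red": 2}  # lower rank = better recommendation
    unhired = [rank[score] for score, hired in pool if not hired]
    if not unhired:
        return 0  # everyone was hired, so no one was passed over
    best_unhired = min(unhired)
    return sum(1 for score, hired in pool if hired and rank[score] > best_unhired)


# Hypothetical pools.
one_exception = count_exceptions(
    [("green", True), ("green", False), ("yellow", True), ("red", False)]
)
no_exception = count_exceptions(
    [("green", True), ("yellow", True), ("red", False)]
)
two_exceptions = count_exceptions(
    [("green", False), ("yellow", True), ("red", True)]
)
```

In the first pool the yellow hire is an exception because a green applicant was passed over; in the second pool every hire outranks every unhired applicant, so a rules-based regime would have made the same choices.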

Proposition 3.3 The probability of an exception is increasing in both managerial bias, $k$,

and the precision of the manager’s private information, $1/\sigma_\epsilon^2$.

Intuitively, managers make more exceptions when they have better information about

a because they then put less weight on the test. Managers also make more exceptions when

their preferences are more misaligned (k is larger), because they focus more on maximizing

other qualities, b.

Across a sufficiently large number of applicants, the probability of an exception, which we denote $R$, is driven by the quality of a manager’s overall information, $\sigma_\epsilon^2$, and by the strength of his or her idiosyncratic preferences, $k$; the same probability of exceptions can be generated by different combinations of these parameters. Let $a(R)^D$ denote the expected quality of hires under discretion, conditional on the information and biases being such that $R(\sigma_\epsilon^2, k) = R$. We may also write $a(R)^{ND}$: this is the expected quality of hires under no discretion, given a manager with $R(\sigma_\epsilon^2, k) = R$. Because that manager is never able to actually use discretion, $a(R)^{ND}$ is constant for all values of $R$.

Taken together, Propositions 3.2 and 3.3 show that while R is increasing in both managerial bias and the value of the manager’s private information, the match quality of hires

is only increasing in exceptions when exceptions are driven by the precision of private information. Thus, we can infer whether exceptions are being driven by bias or by information

by examining the relationship between exceptions $R$ and match quality of hires, $a(R)^D$.

Proposition 3.4 If the quality of hired workers is decreasing in the exception rate, $\partial a(R)^D / \partial R < 0$, then firms can improve outcomes by eliminating discretion: $a(R)^D \leq a(R)^{ND}$.

To see this, consider a manager who makes no exceptions even when given discretion: $R(\sigma_\epsilon^2, k) = 0$. Across a large number of applicants, this only occurs if this manager has no information and no bias. Following from Proposition 3.1, the quality of hires by this type of manager is the same as the quality of workers that would be hired using the test signal only: that is, $a(0)^D = a(R)^{ND}$ for all $R$. If $\partial a(R)^D / \partial R < 0$, then the quality of hired workers under discretion is lower for all exception rates, meaning that firms are better off centralizing hiring decisions, even if it comes at the expense of reduced information.

The intuition behind this result is as follows: the exceptions that managers make are

driven both by bias and by information. If exception rates are positively correlated with

outcomes, it means that exceptions are primarily being made because managers are better

informed. The opposite is true if there is a negative correlation between exceptions and

outcomes.
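The logic of inferring bias versus information from the sign of the exception-quality relationship can be illustrated by simulation. The sketch below is built on assumptions that are not in the paper: the group means, the normal distribution of $b_i$, the sample size, and the function name `exception_rate_and_quality` are all hypothetical choices. It compares managers who differ only in bias with managers who differ only in signal precision.

```python
import random
from statistics import mean


def exception_rate_and_quality(k, sigma_eps, n=20000, hire_share=0.14, seed=1):
    """Simulate one manager; return (exception rate R, mean quality of hires)."""
    # Assumed parameters: share of greens, group means, and standard deviations.
    p_green, mu, sigma_a, sigma_b = 0.4, {True: 0.5, False: -0.5}, 1.0, 1.0
    rng = random.Random(seed)
    weight = sigma_a ** 2 / (sigma_a ** 2 + sigma_eps ** 2)
    pool = []
    for _ in range(n):
        green = rng.random() < p_green
        a = rng.gauss(mu[green], sigma_a)       # true match quality
        s = a + rng.gauss(0.0, sigma_eps)       # private signal
        b = rng.gauss(0.0, sigma_b)             # idiosyncratic payoff
        expected_a = mu[green] + weight * (s - mu[green])
        pool.append(((1 - k) * expected_a + k * b, a, green))
    hires = sorted(pool, key=lambda x: x[0], reverse=True)[: int(hire_share * n)]
    # With W < p_G some green always goes unhired, so every yellow hire is an exception.
    rate = mean(0.0 if green else 1.0 for _, _, green in hires)
    quality = mean(a for _, a, _ in hires)
    return rate, quality


# Same precision, different bias: more exceptions come with worse hires.
r_low_bias, q_low_bias = exception_rate_and_quality(k=0.0, sigma_eps=1.0)
r_high_bias, q_high_bias = exception_rate_and_quality(k=0.8, sigma_eps=1.0)

# Same (zero) bias, different precision: more exceptions come with better hires.
r_noisy, q_noisy = exception_rate_and_quality(k=0.0, sigma_eps=3.0)
```

In this illustration, raising $k$ increases the exception rate while lowering the quality of hires, whereas sharpening the private signal increases both. A negative relationship between exceptions and quality across managers is therefore what one would expect if bias, rather than information, is the main source of variation.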

3.3 Empirical Predictions

To summarize, our model makes the following empirically testable predictions:

1. The introduction of testing improves the match quality of hired workers.

2. If the quality of workers is decreasing in the exception rate, then no discretion improves

match quality relative to discretion.

In practice, managers may differ not only in their information or bias parameters, but

also in the applicant pool that they face ($\mu_a^G, \mu_a^Y, \sigma_a^2, W, p_Y, p_G$). If managers face systematically different applicant pools, then this may impact our ability to ascribe differences in

the correlation between exceptions and realized match-quality to differences in the value of

information versus bias. Sections 4 and 5 address how we deal with these issues empirically.

4 The Impact of Testing

4.1 Empirical Strategy

We first analyze the impact of testing on worker productivity, exploiting the gradual

roll-out in testing across locations and over time. We examine the impact of the job testing

using difference-in-difference regressions of the form:

$$\text{Outcome}_{lt} = \alpha_0 + \alpha_1 \text{Testing}_{lt} + \delta_l + \gamma_t + \text{Controls} + \epsilon_{lt} \qquad (1)$$

Equation (1) compares outcomes for workers hired with and without job testing. We

regress a productivity outcome ($\text{Outcome}_{lt}$) for workers hired to a location $l$, at time $t$, on

an indicator for whether testing was available at that location at that time ($\text{Testing}_{lt}$) and

controls. As mentioned above, the location-time-specific measure of testing availability is

preferred to using an indicator for whether an individual was tested (though we also report

results with this metric), because of concerns that an applicant’s testing status is correlated

with his or her perceived quality. We thus estimate these regressions at the location-time

(month by year) level, the level of variation underlying our key explanatory variable. The

outcome measure is the average outcome for workers hired to the same location at the same

time.

All regressions include a complete set of location ($\delta_l$) and month by year of hire ($\gamma_t$)

fixed effects. They control for time-invariant differences across locations within our client

firms, as well as for cohort and macroeconomic effects that may impact job duration. In

some specifications, we augment this specification with controls for client-firm by year fixed

effects. These control for any changes to HR or other policies that our client firms may be

making in conjunction with the implementation of job testing. For example, our client firms


may be implementing job testing precisely because they are facing problems with retention,

or because they are attempting to improve their workplace. Our estimates of the effect of

job testing, then, may reflect the net effect of all these changes. Including client-year fixed

effects means that we compare locations in the same firm in the same year, some of which

receive job testing sooner than others. The identifying assumption in this specification is

that, within a firm, locations that receive testing sooner vs. later were on parallel trends

before testing.

To account for the possibility that this is not the case, some of our specifications also

include additional controls for location-year specific time trends. These account for broad

trends that may impact worker retention. These include, for instance, changes in local labor

market conditions. Finally, some of our specifications also include detailed controls for the

composition of the applicant pool at a location, after testing is implemented: fixed effects

for the number of green, yellow, and red applicants, respectively. We use these controls to

proxy for any time-varying, location-specific changes to the desirability of a location that

are not captured by the location-year time trends. In all specifications, standard errors are

clustered at the location-level to account for correlated observations within a location over

time.
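
As an illustration of the fixed effects specification described above, the following Python sketch computes the coefficient on the testing indicator by two-way demeaning a balanced location-by-period panel. It is a minimal sketch under stated assumptions, not the estimation code behind the reported results: the function names and the toy panel are hypothetical, the panel is assumed to be balanced, and the additional controls and location-clustered standard errors are omitted.

```python
def two_way_demean(values):
    """Remove location and period means from a balanced panel.

    values: dict mapping (location, period) -> float, with every
    location observed in every period (balanced panel assumption).
    """
    locations = sorted({loc for loc, _ in values})
    periods = sorted({per for _, per in values})
    grand = sum(values.values()) / len(values)
    loc_mean = {l: sum(values[(l, t)] for t in periods) / len(periods)
                for l in locations}
    per_mean = {t: sum(values[(l, t)] for l in locations) / len(locations)
                for t in periods}
    return {(l, t): v - loc_mean[l] - per_mean[t] + grand
            for (l, t), v in values.items()}


def fe_coefficient(outcome, testing):
    """OLS slope of the demeaned outcome on the demeaned testing indicator."""
    y = two_way_demean(outcome)
    x = two_way_demean(testing)
    sxx = sum(x[k] ** 2 for k in x)
    sxy = sum(x[k] * y[k] for k in x)
    return sxy / sxx


# Hypothetical toy panel: staggered adoption, no noise, true effect 0.14.
adoption_period = {"A": 2, "B": 4, "C": 99}   # "C" never adopts in sample
location_effect = {"A": 4.0, "B": 4.5, "C": 5.0}
testing, outcome = {}, {}
for loc in adoption_period:
    for t in range(6):
        testing[(loc, t)] = 1.0 if t >= adoption_period[loc] else 0.0
        outcome[(loc, t)] = location_effect[loc] + 0.05 * t + 0.14 * testing[(loc, t)]

alpha1 = fe_coefficient(outcome, testing)
```

In this noise-free example the location effects and the common period effect are absorbed by the demeaning, so `alpha1` recovers the assumed effect of 0.14 up to floating point error.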

Our primary outcome measure, $\text{Outcome}_{lt}$, is the log of the mean length of completed

job spells for workers hired to firm-location l, at time t. We focus on this outcome for

several reasons. The length of a job spell is a measure that both theory and the firms in

our study agree is important. Many canonical models of job search, e.g. Jovanovic (1979),

predict a positive correlation between match quality and job duration. Moreover, our client

firms employ low-skill service sector workers, a segment of the labor market that experiences

notoriously high turnover. Figure 1 shows a histogram of job tenure for completed spells

(75% of the spells in our data). The median worker (solid red line) stays only 99 days, or

just over 3 months. Twenty percent of hired workers leave after only a month. As a result,

hiring and training make up a substantial part of the labor costs in our client firms. Job

duration is also a measure that has been used previously in the literature, for example by


Autor and Scarborough (2008), who also focus on a low-skill service sector setting (retail).

Finally, job duration can be measured reliably for the majority of workers in our sample.
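
The construction of the location-month variables can be sketched in Python as follows. This is an illustrative sketch only: the function name, the tuple layout, and the toy records are hypothetical, and the rule for breaking ties when exactly half of a cell's hires were tested is an assumption. The testing indicator follows the definition used in the results (whether the median hire in a location-month was tested).

```python
import math
from collections import defaultdict
from statistics import mean, median


def location_month_cells(hires):
    """Aggregate individual hires to location-month cells.

    hires: iterable of (location, month, completed_days, tested) tuples,
    restricted to completed spells.
    """
    groups = defaultdict(list)
    for location, month, days, tested in hires:
        groups[(location, month)].append((days, tested))

    cells = {}
    for key, rows in groups.items():
        mean_days = mean(d for d, _ in rows)
        median_tested = median(1 if t else 0 for _, t in rows)
        cells[key] = {
            # Outcome: log of the mean completed spell length in the cell.
            "log_mean_duration": math.log(mean_days),
            # Testing: 1 if the median hire was tested (ties count as
            # tested; this tie rule is an assumption of the sketch).
            "testing": 1 if median_tested >= 0.5 else 0,
        }
    return cells


# Hypothetical records for two cells.
example = [
    ("A", "2012-01", 100, False),
    ("A", "2012-01", 200, True),
    ("A", "2012-01", 300, True),
    ("B", "2012-01", 50, False),
]
cells = location_month_cells(example)
```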

4.2

Results

Table 2 reports regression results for the log duration of completed job spells. We

later report results for several duration-related outcomes that do not restrict the sample

to completed spells. Of the 271,393 hired workers that we observe in our sample, 75%, or

202,977 workers, have completed spells, with an average spell lasting 202 days. The key

explanatory variable is whether or not the median hire at this location-time was tested.

In the baseline specification (Column 1) we find that employees hired with the assistance

of job testing stay, on average, 27% longer, significant at the 5% level. A potential concern

is that the introduction of job testing may be proxying for other coincident changes in

management practices at a firm. To account for this possibility, Column 2 introduces client

firm by year fixed effects to control for implementation of new strategies and HR policies at

the firm level.16 Doing so reduces our estimated coefficient to 18%, which is no longer statistically significant.

Column 3 introduces location-specific time trends to account for the possibility that locations

may be in general improving or worsening, or local labor market conditions may be trending

in a particular direction. Adding these controls changes our estimate to 14% and greatly

reduces our standard errors.

Finally, in Column 4, we include controls for the quality of the applicant pool. Because these variables are defined only after testing, these controls should be thought of as

interactions between composition and the post-testing indicator. With these controls, the

coefficient $\alpha_1$ on $\text{Testing}_{lt}$ is the impact of the introduction of testing, for locations that

end up receiving similarly qualified applicants. This controls for confounding changes in

applicant quality across locations, but it also controls for any potential screening effect of

testing. If testing itself improves the quality of applicants, for instance by deterring the least

qualified candidates from applying, then we would not capture this effect. In practice, our

16 In interviews, our data firm has indicated that it was not aware of any other client-specific policy changes.

results indicate that the estimated impact of testing increases only slightly with these controls. Our most

thorough set of controls indicates that tested applicants stay 14.2% longer than untested

applicants. The addition of these controls increases our standard errors, and these results

are no longer significant. Because these controls leave our coefficient estimates essentially unchanged, we

continue to use Column 3 as our preferred specification for the remainder of this section. The

range of our estimates is in line with previous estimates in Autor and Scarborough

(2008).

Table 3 examines robustness to defining testing at the individual level. For these specifications we regress an individual’s job duration (conditional on completion) on whether or

not the individual was tested. Because these specifications are at the individual level, our

sample size increases from 4,401 location-months to 202,728 individual hiring events. Using

these same controls, we find numerically similar estimates. The one exception is Column 4,

which is now significant and larger: a 22.8% increase. From now on, we continue with our

preferred metric of testing adoption (whether the median worker was tested), from Table 2.

Table 4 explores other duration-related outcomes. For each hired worker, we measure

whether they stay at least three, six, or twelve months, for the set of workers who are not

right-censored.17 We aggregate this variable to measure the proportion of hires in a location-cohort that meet each duration milestone. We also report results for mean completed spells

in days. Table 4 reports our estimates of the impact of testing on these variables, using both

our baseline set of controls as well as our full set (Columns 1 and 4 of Table 2). We find that

testing increases the probability that a worker reaches specific tenure milestones; the bulk of

our estimated effect operates on the 6-to-12 month survival margins. Testing increases the

proportion of applicants persisting longer than 6 months by 5.7 percentage points or about

12 percent (Column 6). Column 8 shows that testing increases persistence past a year by

8.1 percentage points, or 18 percent.
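
The milestone outcomes can be illustrated with a short Python sketch. It is a sketch under stated assumptions rather than the procedure used for Table 4: the function name and the toy records are hypothetical, and milestones are approximated in days (for example, 90 days for three months). A worker enters the denominator only if the hire date is at least the milestone length before the end of data collection, so that right-censored workers are excluded.

```python
from datetime import date, timedelta


def milestone_share(hires, data_end, milestone_days):
    """Share of non-right-censored hires who stay at least milestone_days.

    hires: list of (hire_date, exit_date) pairs; exit_date is None for
    workers still employed at the end of data collection.
    Returns None if no hire in the cell is eligible.
    """
    eligible = [(h, e) for h, e in hires
                if h + timedelta(days=milestone_days) <= data_end]
    if not eligible:
        return None
    reached = sum(1 for h, e in eligible
                  if e is None or (e - h).days >= milestone_days)
    return reached / len(eligible)


# Hypothetical cohort with data collection ending on 2013-12-31.
data_end = date(2013, 12, 31)
cohort = [
    (date(2013, 1, 1), date(2013, 3, 1)),   # left after 59 days
    (date(2013, 1, 1), None),               # still employed
    (date(2013, 11, 15), None),             # hired too late: excluded
    (date(2013, 2, 1), date(2013, 8, 1)),   # left after 181 days
]
share_3m = milestone_share(cohort, data_end, 90)
share_6m = milestone_share(cohort, data_end, 180)
```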

Our findings indicate that the introduction of job testing leads to a 14% improvement

in the duration of hires. This effect comes primarily from increasing retention beyond 6

17 That is, a worker will be included in this metric if his or her hire date was at least three, six, or twelve months, respectively, before the end of data collection.

months. This increase in productivity may be driven by several mechanisms. Most intuitively, the adoption of job testing may improve the productivity of hires at a location by

providing managers with new information about the quality of job candidates, or by making

this information more salient. However, it might also be the case that testing was not randomly assigned across locations or was accompanied by other changes that enhanced worker

productivity. In this subsection, we address these alternative explanations.

Controls for location fixed effects and location-specific time trends rule out the possibility that our results are driven by unobserved location characteristics that are either fixed

or that vary smoothly with time. For example, if testing was introduced first to the best

(or worst) locations or the locations that were on an upward (or downward) trajectory, our

controls would absorb these effects. However, it may still be that testing was introduced in

locations that were expected to become better in a manner uncorrelated with observables.

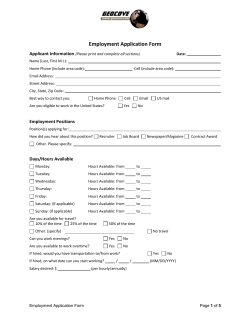

Figure 2 provides further evidence that the introduction of testing is not correlated with

location-specific characteristics that could also impact worker duration. Here we plot event

studies where we estimate the treatment impact of testing by quarter, from 12 quarters before

testing to 12 quarters after testing, using our baseline set of controls. The top left panel

shows the event study for log length of completed tenure spells as the outcome measure.

The figure shows that locations that will obtain testing within the next few months look

very similar to those that will not (either because they have already received testing or will

receive it later). After testing is introduced, however, we begin to see large differences. The

treatment effect of testing appears to grow over time, suggesting that hiring managers and

other participants might take some time to learn how to use the test effectively. Event studies

for our 3, 6, and 12 month persistence outcomes look quite similar. For these outcomes, we

do not see any evidence of the slight pretrend present in the completed tenure graph.
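
The event-time variable underlying such an event study can be sketched in Python. This is a hypothetical illustration: the function name is an assumption, and binning observations outside the 12-quarter window into the endpoint quarters is an assumption of the sketch rather than a documented feature of the figures.

```python
def event_quarter(hire_ym, testing_ym, window=12):
    """Quarter of hire relative to the introduction of testing.

    hire_ym, testing_ym: (year, month) tuples. Quarter 0 covers the
    first three months of testing; quarter -1 covers the three months
    immediately before. Values beyond the window are binned at the
    endpoints (an assumption of this sketch).
    """
    months = (hire_ym[0] - testing_ym[0]) * 12 + (hire_ym[1] - testing_ym[1])
    quarter = months // 3  # floor division keeps pre-period quarters negative
    return max(-window, min(window, quarter))
```

Interacting indicators for each value of `event_quarter` with the baseline controls would then trace out the quarter-by-quarter treatment effects.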

Our results are robust to controls for client-by-year effects. We can thus rule out that

discrete changes at the client firm-level drive our results. For example, if a client firm

continued to make improvements to its human resource policies across all locations after

testing was introduced, these changes would be picked up by our controls. Finally, our


results are robust to the inclusion of controls for the quality and size of the applicant pool

a manager chooses from. This indicates that testing improves the ability of managers to

identify productive workers, rather than exclusively altering the quality of the applicant

pool.

Finally, we look for evidence that color score is indeed predictive of performance, by

comparing job durations for green, yellow, and red hires. A simple model of hiring would

predict that a firm equates productivity of the marginal green, yellow, and red hire. Thus

we should see identical outcomes for the marginal hires from each color score. However,

it should still be the case (if the test yields valuable information) that on average greens

outperform yellows, who outperform reds.

Across a range of specifications, Table 5 shows that both green and yellow workers

perform better relative to red workers. Greens, on average, stay roughly 17.7% longer than

reds, while yellows stay 12.1% longer. These differences are statistically significant at the

1% level, as are the differences between greens and yellows. These results are based on the

selected sample of hired workers; if managers use other signals to make hiring decisions, then

a red or yellow worker would only be hired if her unobserved quality were particularly high.

As such, we might expect the unconditional performance difference between green, yellow,

and red applicants to be even larger.

5

Rules versus Discretion

The evidence presented in Section 4 demonstrates that the introduction of testing improves the quality of hires. Our results suggest that this effect is driven by managers' improved ability to identify productive workers, rather than by changes in the applicant pool alone. How should firms

use this information, given that their managers often have their own unverifiable observations

about a candidate? Should they use job test recommendations to centralize hiring practices

or grant managers discretion in how to use the test?


5.1

Empirical Strategy

To assess this, we take advantage of our ability to measure how often managers make

exceptions to test recommendations. As we highlighted in the theory section, a manager

makes an exception to a test recommendation because (s)he either 1) has other information

suggesting that the applicant will be successful, or 2) has preferences or beliefs that are not

fully aligned with those of the firm. In the first case, the firm will want the manager to

exercise discretion, while in the other case, the firm would prefer that the manager follow

test recommendations.

We do not directly observe a manager’s bias, nor the quality of his/her private signal.

However, as described in Proposition 3.4, we can infer whether exceptions are driven

primarily by information or bias by examining the relationship between exceptions and

match quality. A negative relationship between exceptions and the quality of hires tells us that

managers tend to use discretion to support candidates for whom they are favorably biased,

rather than candidates for whom they have superior private information. This decreases the

quality of hires and firms can improve performance by removing discretion.

To implement this test empirically, we first define an exception rate. Recall that an

individual worker is hired as an exception if there are unhired workers in the same applicant

pool with higher test scores. A worker with a score of yellow is considered an exception if

there are any unhired greens; similarly, a hired red worker is considered an exception if there

are any unhired yellow or green workers remaining in the same applicant pool. We thus

define an exception rate at the applicant pool (manager-location-month) level:

$$\text{Exception Rate}_{mlt} = \frac{N^{h}_{y} \cdot N^{nh}_{g} + N^{h}_{r} \cdot \left(N^{nh}_{g} + N^{nh}_{y}\right)}{\text{Maximum \# of Exceptions}} \qquad (2)$$

For a manager $m$ at a location $l$ in a month $t$, $N^{h}_{color}$ is the number of hired applicants of a given color in this pool and $N^{nh}_{color}$ is the number of applicants of a given color who are not hired from this pool. The numerator of $\text{Exception Rate}_{mlt}$ counts the number of exceptions (or “order violations”) a manager makes when hiring.


The number of exceptions in a pool depends both on the manager’s choices and on

the type of applicants and the number of slots the manager needs to hire. For example, if

all workers in a pool are green then, mechanically, this pool cannot have any exceptions.

Similarly, if the manager hires all available applicants, then there can also be no exceptions.

To isolate the portion of variation in exceptions that is driven by managerial decisions, we

normalize the number of order violations by the maximum number of violations that could

occur, given the applicant pool that the recruiter faces and the number of hires.
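
To make the exception rate concrete, the following Python sketch computes the number of order violations and normalizes it by the maximum attainable in a pool. The function names are hypothetical, and the way the denominator is obtained here (a brute-force search over every feasible split of the same number of hires across colors) is an assumption of the sketch, since the text does not spell out how the maximum is computed. Pools in which no exception is possible return `None`.

```python
def order_violations(hired, pool):
    """Numerator of the exception rate.

    hired, pool: dicts with keys 'g', 'y', 'r' giving the number of
    hired applicants and total applicants of each color.
    """
    not_hired = {c: pool[c] - hired[c] for c in pool}
    return (hired["y"] * not_hired["g"]
            + hired["r"] * (not_hired["g"] + not_hired["y"]))


def max_violations(pool, n_hires):
    """Largest number of order violations attainable with n_hires hires."""
    best = 0
    for g in range(min(pool["g"], n_hires) + 1):
        for y in range(min(pool["y"], n_hires - g) + 1):
            r = n_hires - g - y
            if r <= pool["r"]:
                candidate = {"g": g, "y": y, "r": r}
                best = max(best, order_violations(candidate, pool))
    return best


def exception_rate(hired, pool):
    """Order violations as a share of the maximum possible (None if 0/0)."""
    denominator = max_violations(pool, sum(hired.values()))
    if denominator == 0:
        return None  # e.g. an all-green pool, or every applicant hired
    return order_violations(hired, pool) / denominator


# Hypothetical pool: 3 greens, 2 yellows, 1 red; one green and one yellow hired.
pool = {"g": 3, "y": 2, "r": 1}
hired = {"g": 1, "y": 1, "r": 0}
rate = exception_rate(hired, pool)
```

In this example the hired yellow is an exception relative to the two unhired greens, giving 2 violations out of a maximum of 7 (attained by hiring one yellow and the red), so the rate is 2/7.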

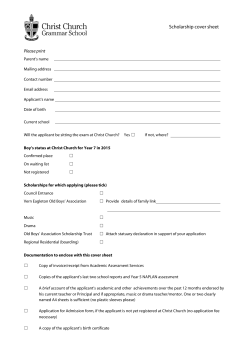

Most of our client firms told their managers that job test recommendations were informative and should be used in making hiring decisions. Following that, many firms gave

managers discretion over how to use the test, though some locations strongly discouraged

managers from hiring red candidates. Despite this fairly uniform advice, we see substantial variation in the extent to which managers follow test recommendations when making

hiring decisions. Figure 3 shows histograms of the exception rate, at the application pool

level, as well as aggregated to the manager and location levels. The top panels show unweighted distributions, while the bottom panels show distributions weighted by the number

of applicants.

In all sets of figures, the median exception rate is about 20% of the maximal number

of possible exceptions. While there are many pools for which we see very few or even zero

exceptions, this appears to be driven by pool level variation in candidates, rather than stable

preferences for test-following within a manager or locations: almost all hiring managers make

exceptions at some point. The same holds location-wide.

Given this measure of exceptions, we would like to examine the correlation between

exception rates and the realized match quality of hires. We first estimate the following

regression:

$$\text{Duration}_{mlt} = a_0 + a_1 \, \text{Exception Rate}_{mlt} + X_{mlt}\gamma + \delta_l + \delta_t + \epsilon_{mlt} \qquad (3)$$

Equation (3) examines the relationship between pool-level (manager m, location l, time

t) exception rates and duration outcomes for workers hired from that pool, on the post-testing sample of application pools. We normalize $\text{Exception Rate}_{mlt}$ to be mean zero and

standard deviation one, which will help us to interpret regression coefficients. The coefficient

$a_1$ measures the relationship between a one standard deviation change in the extent to which

managers exercise discretion and the subsequent match quality of those hires.

The goal of Equation (3) is not to identify the causal impact of exceptions. Rather,

guided by the model in Section 3, we would like to use the correlation between exceptions and

outcomes to infer whether managers are primarily driven by bias or by private information.

As a result, we seek to isolate variation in the exception rate that is driven purely by

the manager’s type (his or her specific bias, $k$, and information $\sigma_a^2$), as opposed to some

other unobserved characteristics of the applicant pool. Our identifying assumption is that,

conditional on observables, a manager’s information or preferences are not correlated with

either the types of applicant pools that they face or the desirability of the locations that

they work at.

In general, there are two potential sources of confounding variation. The first comes

from systematic differences in how managers are assigned to locations, or clients, or applicant

pools. We may be concerned, for instance, that some locations are inherently less desirable

than others. These locations attract lower quality managers and lower quality applicants. In

this case, managers may make more exceptions because they are biased, and hired workers

may have lower tenures, but both facts are driven by unobserved location characteristics.

Our inference in this case would be complicated by the fact that the desirability of the

location generates a correlation between the quality of managers and the quality of applicants.

The second source of contaminating variation comes from purely idiosyncratic variation at

the applicant pool level. For example, imagine a pool with a particularly weak draw of green

candidates. In this case, we may expect a manager to make more exceptions. Yet, because

the green workers in this pool are weak, it may also separately be the case that the match


quality of workers may be lower. In this case, a manager is using his or her discretion to

improve match quality, but exceptions will still be correlated with poor outcomes.

As described in Section ??, we estimate multiple specifications that include location and

time fixed effects, client-year fixed effects, location-specific linear time trends, and detailed

controls for the quality and number of applicants in an application pool. This controls

for systematic differences that may drive both exception rates and worker outcomes. A

downside of this approach, however, is that it increases the extent to which our identification

is driven by pool to pool variation in the idiosyncratic quality of applicants; this would tend

to exacerbate the second source of confounding variation.

To address these concerns together, we also examine whether the impact of the introduction of testing differs by exception rates:

$$\text{Duration}_{mlt} = b_0 + b_1 \, \text{Testing}_{lt} \times \text{Exception Rate}_{mlt} + b_2 \, \text{Testing}_{lt} + X_{mlt}\gamma + \delta_l + \delta_t + \epsilon_{mlt} \qquad (4)$$

Equation (4) studies how the impact of testing, as described in Section 4, differs when

managers make exceptions. Because exception rates are not defined in the pre-testing period

(there are no test scores in the pre-period), there is no main effect of exceptions in the pre-testing period, beyond that which is absorbed by the location fixed effects $\delta_l$. If $b_1 < 0$, this

indicates that the impact of testing is smaller for cohorts of hired workers from application

pools in which managers exercised more discretion, relative to the pre-period baseline for

that location. Examining the difference in difference by exception rate allows us to use

pre-period observations for a location to control for systematic differences. By controlling

for, say, location fixed effects, across a wider time period, the remaining variation used to

identify b1 is not as subject to small sample idiosyncratic variation in the applicant pool.
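
The regressor in Equation (4) can be illustrated with a small Python sketch. The function name and the dictionary layout are hypothetical, and the use of the population standard deviation is an assumption. The exception rate is standardized on the post-testing pools only, and the interaction is set to zero in the pre-testing period, where no exception rate is defined.

```python
from statistics import mean, pstdev


def interaction_terms(pools):
    """Build Testing x standardized Exception Rate for each pool.

    pools: list of dicts with keys 'testing' (0 or 1) and
    'exception_rate' (float, or None before testing).
    """
    post_rates = [p["exception_rate"] for p in pools if p["testing"] == 1]
    mu, sd = mean(post_rates), pstdev(post_rates)
    terms = []
    for p in pools:
        if p["testing"] == 1:
            z = (p["exception_rate"] - mu) / sd  # mean 0, sd 1 post-testing
        else:
            z = 0.0  # exception rate undefined before testing
        terms.append(p["testing"] * z)
    return terms


# Hypothetical pools: one pre-testing, two post-testing.
example_pools = [
    {"testing": 0, "exception_rate": None},
    {"testing": 1, "exception_rate": 0.1},
    {"testing": 1, "exception_rate": 0.3},
]
terms = interaction_terms(example_pools)
```

Because the standardized rate has mean zero in the post-testing sample, the coefficient on the testing indicator alone is read as the effect of testing at the average exception rate in this sketch.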

Another way to approach this is to aggregate our observations in order to reduce noise.

We also report versions of Equations (3) and (4) in which we examine exceptions and worker


outcomes at the location-time level, as opposed to at the pool level. In Section 5.3, we

describe additional robustness checks.

5.2

Results

Figure 4 plots the raw correlation between the exception rate in a pool and the log

completed duration of workers hired from that pool. In the first panel, each applicant pool

has the same weight; in the second, pools are weighted by the inverse variance of their

pre-period mean, which takes into account their size and the confidence of our estimates.

In both cases, we see a negative correlation between the extent to which managers exercise

discretion by hiring exceptions, and the match quality of those hires, as measured by the

mean log of their completed durations.

Table 6 presents the regression analogue to this figure, using two measures of the exception rate: a standardized exception rate with mean 0 and standard deviation 1 (Columns

1 and 2), or an indicator variable for whether that applicant pool had above or below median exceptions (Columns 3 and 4). Columns 1 and 3 report specifications with our baseline

set of controls—location and time fixed effects only—while Columns 2 and 4 report results

with our full set of controls. Column 1 indicates that a one standard deviation increase in

exception rates is associated with 3.1% lower completed tenure for that group. This figure

is insignificant, but including additional location-year trends and client-year controls, as reported in Column 2, increases the magnitude of our estimate to 3.8%, significant at the 5% level. We find a

similar pattern using an indicator for high exception rates; we find that this is associated

with a 3.5% decrease in tenure.

Table 7 examines how the impact of testing varies by the extent to which managers

make exceptions. The explanatory variable in Columns 1 and 2 is the interaction between

an applicant pool’s post-testing standardized exception rate and an indicator for being in

the post-testing period. The coefficient on the main effect of testing represents the impact

of testing for a location with the median number of exceptions. In Column 1, we find that

locations with median exception rates post testing experience a 27.7% increase in duration


as a result of the implementation of testing. Each standard deviation increase in exception

rates lowers the impact of testing by 10.1 percentage points, or about a third of the total

effect at the median. Column 2 includes additional controls and this modulates our effect:

we find that the median location experiences a 21.7% increase in duration of hires as a result

of testing, but that each standard deviation increase in exceptions decreases this effect by

4.8 percentage points. Figure 5 plots the location-specific treatment effect of testing by a

location’s average exception rate and shows a negative relationship.

The controls included in Columns 2 and 4 of Tables 6 and 7 are important for addressing

several alternative explanations. For example, if a location has a more difficult time enticing

green applicants to accept job offers, they would be recorded as having a higher exception

rate and lower retention, even if managers are behaving optimally from the firm’s perspective.

The inclusion of location fixed effects, client-year effects, and location-time trends controls

for many unobserved determinants of a location’s desirability (changing firm HR policies,

local labor market conditions, overall location quality, etc.), that may confound our findings.

Columns 2 and 4 of Tables 6 and 7 also include detailed controls for both the size and

the quality of the applicant pool by including three sets of fixed effects describing the number

of green, yellow, and red applicants (these variables are set to 0 before testing). With these

controls, our identification comes from comparing outcomes of hires across managers who

make different numbers of exceptions when facing identical applicant pools. Holding constant

the application pool additionally controls for the desirability of a location as well as for the

information about worker quality that is conveyed by the job test. Given this, differences

in exception rates should be driven by a manager’s own weighting of his or her private

preferences and private information. If managers were making these decisions optimally

from the firm’s perspective, we should not expect to see the workers they hire perform

systematically worse. In both Tables 6 and 7, we see that this is not the case: workers hired

from cohorts with more exceptions do worse.

Finally, another concern is that our results are identified from small sample changes

in the quality of applicants over time. Appendix Tables A1 and A2 report our main results, aggregated to the location level. Our results are qualitatively similar, although some

coefficients lose significance.

5.3

Additional Robustness Checks

In this section, we address several specific concerns about our identification strategy.

Our results in Table 7 show that the impact of testing is smaller when managers make

more exceptions. We would expect this result if managers were biased: ignoring test information and hiring personally favored applicants would increase exception rates and yield no

improvement in the quality of hires. A concern with interpreting our results in this way is

that it is also possible that managers with better information may benefit less from testing.

Locations with more exceptions may be those in which managers have always had better

information; in that case, these locations would also experience fewer gains from testing, but

not because centralization would perform better than discretion.

If our results were driven by the fact that better informed managers make more exceptions, then it should be the case that exceptions are correct: a yellow hired as an exception

should perform better than a green who is not hired. In practice, it is not possible to compare

the performance of workers hired as exceptions against those who were passed up, because

we cannot generally observe performance for this latter group. We approximate this by comparing the performance of yellow workers hired as exceptions against green workers from the

same applicant pool who are not hired that month, but who subsequently begin working in

a later month. If it’s the case that managers are making exceptions to increase the match

quality of workers, then the exception yellows should have longer completed tenures than the

“passed over” greens. Table 8 shows that is not the case. The first panel compares the tenures

of workers who are either yellows who are hired as exceptions, or greens who are initially

passed over, but then subsequently hired. The omitted group is the yellow exceptions. The

second panel of Table 8 repeats this exercise, comparing red workers hired as exceptions (the

omitted group), against passed over yellows and passed over greens. In both cases, workers

hired as exceptions have shorter tenures. Column 3 of the first panel indicates, comparing


workers from the same applicant pool, that passed over greens stay 7.8% longer than yellows

hired as exceptions. In all specifications, we include hire month fixed effects to account for

different actual start dates. Column 3 of the second panel indicates that exception reds are

even worse: passed over greens stay 17.1% longer and passed over yellows stay 11.2% longer.

One alternative explanation is that applicants with higher test scores were not initially

passed up, but instead were initially unavailable because of better outside options. This

would explain why workers with higher test scores eventually perform better when it looks

as though they were not first choice. However, given the nature of the job, and the fact that

hire rates are low for all types of workers, we believe that most applicants would be unwilling

to pass up the chance to work if employment is offered. Table A3 provides some evidence

that workers with a longer gap between their application and hire dates are not better. To

show this, we compare, within test recommendation colors, the duration of workers who had

to wait one, two, or three months before starting to the duration of workers who were able

to start immediately. We find no statistically significant results and, if anything, workers

who had to wait longer were slightly worse.

Another potential explanation for our findings is that the validity of the test varies

by location. Applicants who receive a green rating may be higher quality workers with

better outside options. In less desirable locations, this may mean that green workers are

more likely to leave for better jobs. A manager attempting to maximize job retention may

optimally decide to make exceptions in order to hire lower ranked workers with lower outside

options. In this case, a negative correlation between exceptions and performance would

not necessarily imply that firms could improve productivity by relying more on tests. We

examine this by comparing the relative performance of green, yellow, and red workers by

pre-testing durations. If it were the case that green workers were more likely to leave less

desirable locations, then we would expect yellow workers to have higher tenures in those

locations. Table 9 shows that this is unlikely to be the case: we find that hired green

workers have longer duration than hired yellow or red (the omitted category) workers. This

is true regardless of a location’s pre-period desirability. Appendix Table A4 repeats this


exercise, splitting the sample by high and low exception rate in the post period. Again,

there is no difference in the relative performance of hired greens, yellows, and reds across

high and low exception locations.

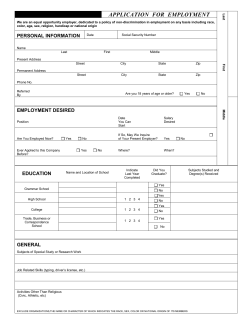

Finally, it is possible that locations with more exceptions were also undesirable for

other reasons that may independently impact worker tenure. While it is not possible to fully

test for this, Figure 6 shows that there is no statistically significant relationship between a

location’s exception rate and pre testing measures of log duration, or mean tenure exceeding

three, six, or twelve months.

6

Conclusion

We evaluate the introduction of a hiring test across a number of firms and locations in

a low-skill service sector industry. Exploiting variation in the timing of adoption across locations within firms, we show that testing increases the durations of hired workers by about 14%. We

then document substantial variation in how managers use job test recommendations. Some

locations tend to hire applicants with the best test scores while other locations make many

more exceptions. Across a range of specifications, we show that the exercise of discretion is

associated with worse outcomes.

If managers make exceptions when they correctly believe that applicants will be better,

we would not expect there to be a systematic, negative correlation between the extent to

which managers make exceptions and the quality of their hires. Our results instead suggest

that managers underweight the job test relative to what the firm may prefer. They also suggest

that firms may want to take advantage of the verifiability of job test scores to impose hiring

rules that centralize hiring authority.

These findings highlight the role new technologies can play in solving agency problems in the workplace through contractual solutions. As workforce analytics becomes an

increasingly important part of human resource management, more work needs to be done to

understand how such technologies interact with organizational structure and the allocation


of decision rights within the firm. This paper takes an important step towards understanding

and quantifying these issues.

References

[1] Aghion, P. and J. Tirole (1997), “Formal and Real Authority in Organizations,” The

Journal of Political Economy, 105(1).

[2] Altonji, J. and C. Pierret (2001), “Employer Learning and Statistical Discrimination,”

Quarterly Journal of Economics, 116(1): pp. 313-350.

[3] Alonso, Dessein, Matouschek (2008)

[4] Autor, D. and D. Scarborough (2008), “Does Job Testing Harm Minority Workers?

Evidence from Retail Establishments,” Quarterly Journal of Economics, 123(1): pp.

219-277.

[5] Burks, S., B. Cowgill, M. Hoffman, and M. Housman (2013), “The Facts About Referrals:

Toward an Understanding of Employee Referral Networks,” mimeo University of

Toronto.

[6] Dessein, W. (2002) “Authority and Communication in Organizations," Review of Economic Studies. 69, pp. 811-838.

[7] Farber, H. and R. Gibbons (1996), “Learning and Wage Dynamics,” Quarterly Journal

of Economics, 111: pp. 1007-1047.

[8] Jovanovic, Boyan (1979), "Job Matching and the Theory of Turnover," The Journal of

Political Economy, 87(October), pp. 972-90.

[9] Kahn, Lisa B. and Fabian Lange (2014), “Employer Learning, Productivity and the

Earnings Distribution: Evidence from Performance Measures,” Review of Economic

Studies, forthcoming.

[10] Koenders, K. and R. Rogerson (2005), “Organizational Dynamics Over the Business

Cycle: A View on Jobless Recoveries,” Federal Reserve Bank of St. Louis Review.

29

[11] Lazear, Edward (2000), “Performance Pay and Productivity,” American Economic Review, 90(5), p. 1346-1461.

[12] Li, D. (2012), “Expertise and Bias in Evaluation: Evidence from the NIH” mimeo Harvard University.

[13] Oyer, P. and S. Schaefer (2011), “Personnel Economics: Hiring and Incentives,” in the

Handbook of Labor Economics, 4B, eds. David Card and Orley Ashenfelter, pp.

1769-1823.

[14] Pallais, A. and E. Sands, “Why the Referential Treatment? Evidence from Field Experiments on Referrals,” mimeo Harvard University.

[15] Paravisini, D. and A. Schoar (2013) “The Incentive Effect of IT: Randomized Evidence

from Credit Committees” NBER Working Paper #19303.

[16] Rantakari, H. (2008) “Governing Adaptation,” Review of Economic Studies. 75, pp.

1257?1285

[17] Riviera, L. (2014) “Hiring as Cultural Matching: The Case of Elite Professional Service

Firms.” American Sociological Review. 77: 999-1022

30

Figure 1: Distribution of Completed Spells

[Figure: "Distribution of Length of Completed Job Spells". x-axis: Days of Tenure (0 to 1000); y-axis: Density. Red line = mean, black line = median. Omits 4% of observations with durations over 1000 days.]

Notes: Figure 1 plots the distribution of completed job spells at the individual level.

Figure 2: Event Study of Duration Outcomes

[Figure: four event-study panels: "Impact of Testing Availability on Tenure Past 3 Months", "Impact of Testing Availability on Tenure Past 6 Months", "Impact of Testing Availability on Tenure Past 12 Months", and "Impact of Testing Availability on" (title incomplete). x-axis: Quarters from Introduction of Testing (-10 to 10); y-axis: Coefficient. Dashed lines = 90% CI.]

Notes: These figures plot the coefficient on the impact of testing by quarter. The underlying estimating equation is given by

$$\log(\text{Duration})_{lt} = \alpha_0 + \alpha_1 \text{Testing}_{lt} + \delta_l + \gamma_t + \epsilon_{lt}.$$

This regression does not control for location-specific time trends; if those are present, they would be visible in the figure.
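As an illustration only, the estimating equation in the notes to Figure 2 can be sketched in Python on simulated data. Everything below is hypothetical: the panel is balanced, and the adoption dates, fixed effects, noise level, and true effect of 0.2 are made up for the example; none of it is the paper's data or code. The sketch recovers a single coefficient on the testing indicator by removing location and period means, which is equivalent to including location and period fixed effects only when the panel is balanced.

```python
import random
from collections import defaultdict


def two_way_demean(values, locations, periods):
    """Subtract location and period means, then add back the grand mean.

    In a balanced panel this sweeps out the location and period effects exactly.
    """
    loc_sum, loc_n = defaultdict(float), defaultdict(int)
    per_sum, per_n = defaultdict(float), defaultdict(int)
    for v, loc, t in zip(values, locations, periods):
        loc_sum[loc] += v
        loc_n[loc] += 1
        per_sum[t] += v
        per_n[t] += 1
    grand = sum(values) / len(values)
    return [
        v - loc_sum[loc] / loc_n[loc] - per_sum[t] / per_n[t] + grand
        for v, loc, t in zip(values, locations, periods)
    ]


def twfe_coefficient(log_duration, testing, locations, periods):
    """Regress demeaned log duration on the demeaned testing indicator."""
    y = two_way_demean(log_duration, locations, periods)
    d = two_way_demean(testing, locations, periods)
    return sum(yi * di for yi, di in zip(y, d)) / sum(di * di for di in d)


def simulate_balanced_panel(n_locations=20, n_periods=12, effect=0.2, seed=0):
    """Hypothetical data: staggered adoption, location and period effects, noise."""
    rng = random.Random(seed)
    loc_fe = [rng.gauss(0.0, 0.5) for _ in range(n_locations)]
    per_fe = [rng.gauss(0.0, 0.2) for _ in range(n_periods)]
    log_duration, testing, locations, periods = [], [], [], []
    for loc in range(n_locations):
        adoption = 3 + (loc % 7)  # assumed adoption period, staggered across locations
        for t in range(n_periods):
            treated = 1.0 if t >= adoption else 0.0
            noise = rng.gauss(0.0, 0.02)
            log_duration.append(4.0 + effect * treated + loc_fe[loc] + per_fe[t] + noise)
            testing.append(treated)
            locations.append(loc)
            periods.append(t)
    return log_duration, testing, locations, periods


alpha_1 = twfe_coefficient(*simulate_balanced_panel())
print(f"estimated coefficient on testing: {alpha_1:.3f}")
```

With an unbalanced panel, or to trace out quarter-by-quarter coefficients as in the figure, one would instead estimate the regression with explicit dummy variables in a statistics package.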

Figure 3: Variation in Application Pool Exception Rate

[Figure: "Distribution of Exception Rate", six panels: Pool Level, Manager Level, and Location Level, each shown Unweighted and Weighted by N Applicants. Red line = mean, black line = median.]

Notes: These figures plot the distribution of the exception rate, as defined by Equation (2) in Section 5. The leftmost panel presents results at the applicant pool level (defined to be a manager–location–month). The middle panel aggregates these data to the manager level and the rightmost panel aggregates further to the location level.
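The aggregation described in these notes can be sketched as follows. This is a minimal illustration under stated assumptions: the pool-level exception rates are taken as given (their definition, Equation (2), is not reproduced here), the field names and numbers are hypothetical, and the location-level figure is computed as an average over pools, which is one reading of "aggregates further".

```python
from collections import defaultdict


def aggregate_rates(pools, key, weighted=False):
    """Average pool-level exception rates within each group named by `key`.

    With weighted=True, each pool counts in proportion to its number of applicants.
    """
    numerator = defaultdict(float)
    denominator = defaultdict(float)
    for pool in pools:
        weight = pool["n_applicants"] if weighted else 1.0
        numerator[pool[key]] += weight * pool["exception_rate"]
        denominator[pool[key]] += weight
    return {group: numerator[group] / denominator[group] for group in numerator}


# Hypothetical applicant pools (each pool is one manager-location-month).
pools = [
    {"manager": "m1", "location": "A", "n_applicants": 100, "exception_rate": 0.10},
    {"manager": "m1", "location": "A", "n_applicants": 300, "exception_rate": 0.30},
    {"manager": "m2", "location": "A", "n_applicants": 200, "exception_rate": 0.20},
    {"manager": "m3", "location": "B", "n_applicants": 50, "exception_rate": 0.40},
]

print(aggregate_rates(pools, "manager"))
print(aggregate_rates(pools, "manager", weighted=True))
print(aggregate_rates(pools, "location"))
print(aggregate_rates(pools, "location", weighted=True))
```

In this toy example the weighted average for manager m1 exceeds the unweighted one because the larger pool has the higher exception rate, which is the kind of difference the weighted and unweighted panels are meant to show.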

Figure 4: Exception Rate vs. Post-testing Match Quality

[Figure: two scatter plots with fitted lines, titled "Unweighted" and "FEs weighted by location". x-axis: Violation Rate (0 to .5); y-axis: Log(Complete Tenure) -- Post-Testing Mean.]

Notes: Each dot represents a given location. The y-axis is the mean log completed tenure at a given location after the introduction of testing; the x-axis is the average exception rate across all application pools associated with a location. The first panel presents unweighted correlations; the second panel weights by the number of applicants to a location.

Figure 5: Exception Rate vs. Impact of Testing

[Figure: two scatter plots with fitted lines, titled "Unweighted" and "FEs weighted by inverse variance". x-axis: Violation Rate (0 to .5); y-axis: Log(Complete Tenure) -- Treatment.]

Notes: Each dot represents a given location. The y-axis is the location-specific estimated coefficient on the introduction of testing; the x-axis is the average exception rate across all application pools associated with a location. The first panel presents unweighted correlations; the second panel weights by the inverse variance of the error associated with estimating that location’s treatment effect.
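To make the weighting concrete, the following sketch fits a line through location-level points with and without inverse-variance weights. The numbers are invented for illustration and the function name is hypothetical; this is not the paper's estimation code. Each weight is taken to be one over the squared standard error of a location's estimated treatment effect, so imprecisely estimated locations pull less on the fitted line.

```python
def weighted_line_fit(x, y, weights=None):
    """Fit y = intercept + slope * x by (weighted) least squares."""
    if weights is None:
        weights = [1.0] * len(x)
    total = sum(weights)
    x_bar = sum(w * xi for w, xi in zip(weights, x)) / total
    y_bar = sum(w * yi for w, yi in zip(weights, y)) / total
    sxy = sum(w * (xi - x_bar) * (yi - y_bar) for w, xi, yi in zip(weights, x, y))
    sxx = sum(w * (xi - x_bar) ** 2 for w, xi in zip(weights, x))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Hypothetical location-level data: exception rate, estimated effect, standard error.
exception_rate = [0.0, 0.1, 0.2, 0.3, 0.4]
treatment_effect = [0.5, 0.4, 0.3, 0.2, 1.0]  # last location is a noisy outlier
std_error = [0.1, 0.1, 0.1, 0.1, 1.0]

inverse_variance = [1.0 / se ** 2 for se in std_error]

_, slope_unweighted = weighted_line_fit(exception_rate, treatment_effect)
_, slope_weighted = weighted_line_fit(exception_rate, treatment_effect, inverse_variance)
print(f"unweighted slope: {slope_unweighted:.3f}")
print(f"inverse-variance weighted slope: {slope_weighted:.3f}")
```

In this made-up example the single noisy location flips the sign of the unweighted slope, while the weighted fit stays close to the relationship among the precisely estimated locations.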

Figure 6: Exception Rate vs. Pre-testing Match Quality

[Figure: scatter plots with fitted lines for four outcomes, Log(Complete Tenure), Tenure Past 3 Months, Tenure Past 6 Months, and Tenure Past 12 Months, each shown "Unweighted" and "FEs weighted by inverse variance". x-axis: Violation Rate (0 to .5); y-axis: Pre-Testing Mean of the outcome.]

Notes: Each dot represents a given location. The y-axis reports mean duration variables at a given location prior to the introduction of testing; the x-axis is the average exception rate across all application pools associated with a location. The first panel presents unweighted correlations; the second panel weights by the inverse variance of the error associated with estimating that location’s treatment effect, to remain consistent with Figure 5.

Table 1: Summary Statistics

                                        All          Location       Location
                                                     Pre-Testing    Post-Testing
Sample Coverage
  # Locations                           131          116            111
  # Hired Workers                       270,086      176,390        93,696
  # Applicants                          1,132,757
  # Recruiters                          555
  # Applicant Pools                     4,209

Worker Performance
  Mean Completed Spell (Days)           198.1        233.6          116.8
    (Std. Dev.)                         (267.9)      (300.8)        (139.7)
  % > 3 Months                          0.62         0.66           0.53
                                        (0.49)       (0.47)         (0.50)
  % > 6 Months                          0.46         0.50           0.35
                                        (0.50)       (0.50)         (0.48)
  % > 12 Months                         0.31         0.35           0.19
                                        (0.46)       (0.48)         (0.39)

Applicant Pool Characteristics (unweighted averages)
  # Applicants                          268
  # Hired                               22.2
  Share Green Applicants                0.47
  Share Yellow Applicants               0.32
  Share of Greens Hired                 0.22
  Share of Yellows Hired                0.18
  Share of Reds Hired                   0.08

Notes: The sample includes all non stock-sampled workers. Only information on hired workers is available prior to the introduction of testing. Post-testing, there is information on applicants and hires. Post-testing is defined at the location-month

level as the first month in which 50% of hires had test scores, and all months thereafter. An applicant pool is
