Verifiable Differential Privacy

Arjun Narayan

Andreas Haeberlen

privacy.cis.upenn.edu

{narayana,ahae}@cis.upenn.edu

Ariel Feldman

What is the Problem?

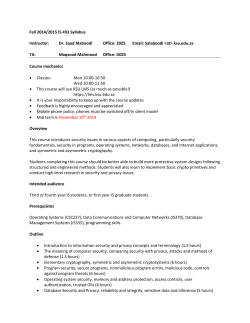

Differential Privacy

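[Figure: subjects give their data to curators (doctors, airlines); an analyst queries the curators' databases and a publication reaches the readers. Example airline records: Doris, Elbonia; Hank, Elbonia; Bob, Paris. Example medical records: Bob, Cancer; Doris, Malaria; Hank, Malaria; Greg, HIV.]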

2. Sometimes queries span multiple private databases, and existing solutions don’t work.

Privacy requirements can be very strict: medical or census data is extremely sensitive.

Insight: Use Set Intersections

Some Joins can be rewritten as set intersections.

SELECT COUNT(X) FROM HOSPITAL JOIN AIRLINE
WHERE Destination = 'Elbonia' AND Diagnosis = 'Malaria'
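A minimal Python sketch of this rewriting, using toy tables modeled on the poster's running example. The dictionaries and the helper `select_names` are illustrative assumptions, not part of any system described here. The sketch computes the JOIN count as the size of a set intersection and, for comparison, as a nested-loop join. No privacy protection is applied.

```python
# Toy tables (assumed for illustration): name -> attribute value.
airline = {"Doris": "Elbonia", "Hank": "Elbonia", "Emil": "Vegas", "Bob": "Paris"}
hospital = {"Bob": "Cancer", "Doris": "Malaria", "Hank": "Malaria", "Greg": "HIV"}


def select_names(table, value):
    """Return the set of names whose attribute equals `value`."""
    return {name for name, attr in table.items() if attr == value}


# Each database answers a local question about its own rows.
went_to_elbonia = select_names(airline, "Elbonia")
had_malaria = select_names(hospital, "Malaria")

# The JOIN count is the size of the intersection of the two answers.
count_by_intersection = len(went_to_elbonia & had_malaria)

# Reference: the same count computed as an explicit nested-loop join.
count_by_join = sum(
    1
    for a_name, destination in airline.items()
    for h_name, diagnosis in hospital.items()
    if a_name == h_name and destination == "Elbonia" and diagnosis == "Malaria"
)

print(count_by_intersection, count_by_join)
```

Both counts agree on this data because each name appears at most once per table; with repeated keys the join would count pairs rather than distinct names.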

Verifiable Computing

Insight: Verifiable computing allows us to generate zero-knowledge proofs of correct execution.

[Figure: one database answers "Who went to Elbonia?" (Doris, Hank, Charlie) and the other answers "Who had Malaria?" (Doris, Hank, Alice). The intersection is Doris and Hank, so the count passed on for publication is 2.]

We modify existing private set intersection algorithms [Freedman et al. 2004] to run our queries in a differentially private fashion.
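The core idea behind polynomial-based private set intersection can be sketched in a few lines of Python. One party encodes its set as the roots of a polynomial, and an element of the other party's set is in the intersection exactly when the polynomial evaluates to zero on it. This sketch works in the clear: the actual protocol evaluates the polynomial under homomorphic encryption, and the differentially private modifications are not shown. The modulus and all function names are assumptions for illustration.

```python
P = 2_147_483_647  # prime modulus (2**31 - 1); elements must lie in [0, P)


def poly_from_roots(roots, p=P):
    """Coefficients (lowest degree first) of prod (x - r) mod p."""
    coeffs = [1]
    for r in roots:
        nxt = [0] * (len(coeffs) + 1)
        for i, c in enumerate(coeffs):
            nxt[i] = (nxt[i] - r * c) % p      # contribution of -r * c * x^i
            nxt[i + 1] = (nxt[i + 1] + c) % p  # contribution of c * x^(i+1)
        coeffs = nxt
    return coeffs


def evaluate(coeffs, x, p=P):
    """Horner evaluation of the polynomial at x, mod p."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % p
    return acc


def intersection_size(a_ids, b_ids):
    """Count elements of b_ids that are roots of A's polynomial."""
    coeffs = poly_from_roots(set(a_ids))
    return sum(1 for b in set(b_ids) if evaluate(coeffs, b) == 0)


print(intersection_size([11, 22, 33], [22, 33, 44]))
```

Because the arithmetic is over a prime field, the product of the factors is zero only when one factor is zero, so there are no false matches for inputs in the stated range.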

Challenge: We need to ensure that

Proof

Challenge: Verifying Results

Privacy requirements hinder key elements of the scientific process: reproducibility and verifiability.

Idea 1: Give all the data to a trusted party ("Trusty Tim")

Idea 2: Use Secure Multiparty Computation

Idea 3: Use PDDP [NSDI 2012]

Solution: Publish differentially private results, so individuals are protected.

Systems like Fuzz and PINQ exist.

Rewriting JOIN queries

SELECT NOISY COUNT(A.ssn) FROM A, B
WHERE (A.ssn = B.ssn OR A.id = B.id) AND A.diagnosis = 'malaria'

Rewriting Fuzz Programs

Key idea: 1) Rewrite Fuzz programs into circuits that are private. 2) Verify the circuit was computed properly using Pantry.

Challenge: Performance.

Solution: Exploit the structure of typical programs to parallelize.

[Figure: two operator trees over tables A and B. Original non-private query: a count |⋅| over the join conditions A.ssn=B.ssn and A.id=B.id, combined with ∪, with the selection σ diag='malaria'. Rewritten query using private primitives: three intersection counts |∩| over the projections π ssn, π id, and π ssn,id, combined with + and −.]

A single JOIN query may use multiple set intersections.
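As a sketch of why several intersections are needed, the Python below counts the rows of a JOIN with an OR condition by inclusion-exclusion over three set intersections, then checks the result against a nested-loop join. It assumes that `ssn` and `id` are each unique within a table, uses made-up rows, and omits both the noise and the cryptography; the helper names are hypothetical.

```python
# Made-up rows; ssn and id are assumed unique within each table.
A = [
    {"ssn": 1, "id": 10, "diagnosis": "malaria"},
    {"ssn": 2, "id": 20, "diagnosis": "malaria"},
    {"ssn": 3, "id": 30, "diagnosis": "cancer"},
    {"ssn": 4, "id": 40, "diagnosis": "malaria"},
]
B = [
    {"ssn": 1, "id": 10},  # matches an A row on both ssn and id
    {"ssn": 2, "id": 99},  # matches on ssn only
    {"ssn": 7, "id": 40},  # matches on id only
    {"ssn": 3, "id": 30},  # matches a non-malaria row
]


def project(rows, cols):
    """Set of tuples of the given columns (a projection)."""
    return {tuple(row[c] for c in cols) for row in rows}


def count_with_intersections(a_rows, b_rows):
    """|match on ssn| + |match on id| - |match on both|."""
    selected = [r for r in a_rows if r["diagnosis"] == "malaria"]
    by_ssn = len(project(selected, ["ssn"]) & project(b_rows, ["ssn"]))
    by_id = len(project(selected, ["id"]) & project(b_rows, ["id"]))
    by_both = len(project(selected, ["ssn", "id"]) & project(b_rows, ["ssn", "id"]))
    return by_ssn + by_id - by_both


def count_with_join(a_rows, b_rows):
    """Reference: count matching (a, b) pairs with a nested loop."""
    return sum(
        1
        for a in a_rows
        for b in b_rows
        if (a["ssn"] == b["ssn"] or a["id"] == b["id"])
        and a["diagnosis"] == "malaria"
    )


print(count_with_intersections(A, B), count_with_join(A, B))
```

The subtraction removes pairs that match on both columns, which would otherwise be counted twice.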

Ask me for details!

[Figure: diagram with the labels over_40, sample, count, and Commitments.]

generating proofs is efficient, and that the proof objects preserve privacy.

[Figure: data sets feeding map tiles.]

How it works: Add carefully calculated noise to query results.

Result: Provable privacy guarantees. Get to use the data!

Key idea: To protect individuals, it is okay to answer only queries that are limited to aggregates.

How does it work?

It adds carefully calculated noise to the query result.
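One standard way to calculate such noise is the Laplace mechanism. The poster does not say which mechanism is used, so treat that choice, the `epsilon` parameter, and the function name as assumptions. A count changes by at most 1 when one individual is added or removed, so the noise scale is 1/epsilon. This is a sketch for intuition only; floating-point samplers like this one are not safe for production privacy systems.

```python
import random


def noisy_count(true_count, epsilon, rng=None):
    """Return true_count plus Laplace noise with scale 1/epsilon.

    The difference of two independent exponential samples with rate
    epsilon is Laplace-distributed with mean 0 and scale 1/epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Example: the exact answer 2 is never released, only a noisy version.
rng = random.Random(0)  # fixed seed so the sketch is repeatable
print(noisy_count(2, epsilon=1.0, rng=rng))
```

A smaller `epsilon` gives stronger privacy and noisier answers; the noise averages out to zero, so aggregate conclusions remain usable.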

Existing differential privacy runtimes like PINQ

and Fuzz are limited to a single database.

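[Figure: the same setting with the data split across two curators. One database holds travel records (Doris, Elbonia; Hank, Elbonia; Emil, Vegas; Bob, Paris) and the other holds medical records (Bob, Cancer; Doris, Malaria; Hank, Malaria; Greg, HIV). Roles shown: Subjects, Curator, Analyst, Publication, Readers.]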

Trusted party: What if we don’t have one?

Secure Multiparty Computation: It will take years.

PDDP: Not designed for JOIN queries.

Noise gives a fraudulent analyst plausible deniability when forging data. Privacy restrictions mean we cannot say “show me the data”.

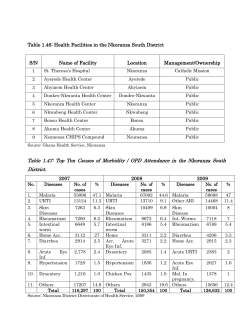

How does DJoin perform?

Simple queries on three databases with 15,000 rows each take between 1 and 7.5 hours.

Previous best was Secure Multiparty Computation, i.e., years.

How does it perform?

Our example queries on databases with 65k rows took under two hours to generate private results and proofs, but the computation is highly parallelizable.

[Figure: bar chart for queries Q1 to Q5; y-axis ticks from 0 to 500.]

First distributed differentially private system capable of executing JOIN queries.

Scalable: All operations parallelize very well.

Extensible to multi-way JOINs (≥3 parties).

[Figure: bar chart for the Over_40, Webserver, Census, and K-means programs; y-axis ticks from 0 to 120. A separate diagram shows Map tiles, Reduce tiles, and a Noising tile. Recovered axis label: Completion time (minutes).]

Some scientific studies require private data to prove their hypotheses.

“Is there a Malaria epidemic in Elbonia?”

Challenge: Distributed Data

Key idea: To protect individuals, it is okay to answer only questions that are about aggregates.

1. Useful information exists in various databases today, but getting access is hard because the data is private.

Antonis Papadimitriou

Proof generation time (minutes)

Verification takes seconds.
