Hawkes processes and applications in neuroscience and genomics


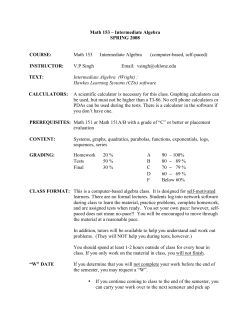

Patricia Reynaud-Bouret

CNRS, Université de Nice Sophia Antipolis

Journées Aussois 2015, Modélisation Mathématique et Biodiversité

P.Reynaud-Bouret

Hawkes

Aussois 2015

1 / 129


Table of Contents

1. Point process and Counting process
   - Introduction
   - Poisson process
   - More general counting process and conditional intensity
   - Classical statistics for counting processes
2. Multivariate Hawkes processes and Lasso
3. Probabilistic ingredients
4. Back to Lasso
5. PDE and point processes

Point process and Counting process

Introduction

Definition

Point process

N = random countable set of points of X (usually $\mathbb{R}$).

$N_A$ = number of points of N in A, and $dN_t = \sum_{T \in N} \delta_T$.

$\int f(t)\,dN_t = \sum_{T \in N} f(T)$

If $X = \mathbb{R}_+$ and there is no accumulation, the counting process is $N_t = N_{[0,t]}$.
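The definitions above can be made concrete with a small sketch. It assumes a finite realisation of a point process on $\mathbb{R}_+$ stored as a list of times; the function names and the sample points are illustrative and not part of the talk.

```python
from bisect import bisect_right


def counting_process(points, t):
    """N_t = N_[0,t]: number of points of N in [0, t]."""
    return bisect_right(sorted(points), t)


def integrate_against_dN(f, points):
    """Integral of f(t) dN_t, i.e. the sum of f(T) over the points T of N."""
    return sum(f(T) for T in points)


# Hypothetical realisation of a point process on R+ (illustrative values).
points = [0.5, 1.2, 3.0]

n_at_1_2 = counting_process(points, 1.2)  # the points 0.5 and 1.2 lie in [0, 1.2]
total = integrate_against_dN(lambda t: 2.0 * t, points)
```

Storing the points sorted once and reusing `bisect_right` would be the natural choice if $N_t$ has to be evaluated at many times t.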

Examples

- disintegration of radioactive atoms
- date / duration / position of phone calls
- date / position / magnitude of earthquakes
- position / size / age of trees in a forest
- discovery time / position / size of petroleum fields
- breakdowns
- time of the event "a bee came out of its hive", "a monkey climbed in the tree", "an agent buys a financial asset", "someone clicks on the website", ...

Everything has been recorded, and these quantities are probably linked through a model. There is no reason at all for them to be identically distributed and/or independent (not i.i.d.).

Life times

Historically, the first clear records of statistics start with Graunt in 1662 and his life table.

NB: at the same time, Pascal and Fermat started Probability ... Halley, Huygens and many others also recorded life tables.

"Why are people dying?" → record of the times of death in London at that time.

Even if the life times are considered i.i.d., the interesting quantity is the hazard rate q(t), with

$q(t)\,dt \simeq P(\text{die just after } t \mid \text{alive at } t)$

If f is the density, $q(t) = f(t)/S(t)$, with $S(t) = \int_t^{+\infty} f(s)\,ds$.

- q constant: no "aging", no memory → exponential variable
- q increases: better young than old
- q decreases: better old than young

[Figure: classical shape of q for human mortality, as a function of age.]

Not even clearly i.i.d.: people may move out before the disease, may contaminate each other, etc. → what is the interesting quantity?
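As a minimal sketch of the relation $q(t) = f(t)/S(t)$, the hazard rate can be evaluated for two textbook life-time laws. The exponential law (rate 2) and the Weibull law (shape 2, scale 1) are illustrative choices, not examples taken from the talk.

```python
import math


def hazard(f, S, t):
    """Hazard rate q(t) = f(t) / S(t), with f the density and S the survival function."""
    return f(t) / S(t)


# Exponential law with rate lam: constant hazard (no aging, no memory).
lam = 2.0
exp_f = lambda t: lam * math.exp(-lam * t)
exp_S = lambda t: math.exp(-lam * t)

# Weibull law with shape k = 2 and scale 1: the hazard k * t**(k - 1) increases.
k = 2.0
weib_f = lambda t: k * t ** (k - 1) * math.exp(-t ** k)
weib_S = lambda t: math.exp(-t ** k)

times = (0.1, 1.0, 3.0)
exp_hazards = [hazard(exp_f, exp_S, t) for t in times]
weib_hazards = [hazard(weib_f, weib_S, t) for t in times]
```

The first list stays equal to the rate, while the second grows with t, which matches the "better young than old" case.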

Neuroscience and neuronal unitary activity

Neuronal data and Unitary Events

Genomics and Transcription Regulatory Elements

Poisson process

Definition

Poisson processes

- for every integer n and all disjoint measurable subsets $A_1, \dots, A_n$ of X, $N_{A_1}, \dots, N_{A_n}$ are independent random variables.
- for every measurable subset A of X, $N_A$ obeys a Poisson law with a parameter depending on A and denoted $\ell(A)$.

If $X = \mathbb{R}$ and $\ell([0, t]) = \lambda t$, then

$P(N_t = 0) = P(T_1 > t) = e^{-\lambda t}$

→ the first time is an exponential variable.

All "intervals" are i.i.d. and exponentially distributed.

No memory ⟺ independence from the past.
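Since all intervals are i.i.d. exponential, a homogeneous Poisson process on the line can be simulated by adding up exponential variables. The sketch below assumes a constant rate and a finite observation window; the function name, the seed and the numerical values are illustrative.

```python
import random


def simulate_homogeneous_poisson(rate, horizon, rng):
    """Points of a Poisson process with l([0, t]) = rate * t, observed on [0, horizon]."""
    points = []
    t = 0.0
    while True:
        t += rng.expovariate(rate)  # next i.i.d. exponential interval
        if t > horizon:
            return points
        points.append(t)


rng = random.Random(2015)  # arbitrary seed, for reproducibility only
points = simulate_homogeneous_poisson(rate=5.0, horizon=200.0, rng=rng)
mean_gap = points[-1] / len(points)  # average interval, expected close to 1 / rate
```

The number of simulated points is itself a Poisson variable with parameter `rate * horizon`, in line with the definition.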

If λ not constant

If $\ell([0, t]) = \int_0^t \lambda(s)\,ds$, let N be a Poisson process in $\mathbb{R}_+^2$ and keep the abscissae of its points lying under the graph of λ.

[Figure: points of the Poisson process in the plane together with the curve λ; the number of points in a region is a Poisson variable whose parameter is the area of the region; points under the curve are accepted, points above it are rejected.]

If λ(.) is bounded by M, points only need to be drawn in the strip of height M: those under the curve are accepted, the others are rejected.
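The accept/reject picture can be sketched as follows, assuming a deterministic intensity bounded by a known constant M. The intensity $1 + \sin(t)$, the bound 2 and the function name are illustrative assumptions.

```python
import math
import random


def simulate_inhomogeneous_poisson(intensity, bound, horizon, rng):
    """Thinning: keep the abscissae of the points of a planar Poisson process
    on [0, horizon] x [0, bound] that fall under the graph of `intensity`."""
    accepted = []
    t = 0.0
    while True:
        t += rng.expovariate(bound)   # abscissa of the next candidate point
        if t > horizon:
            return accepted
        y = rng.uniform(0.0, bound)   # ordinate, uniform on [0, bound]
        if y <= intensity(t):         # under the curve: accepted
            accepted.append(t)


rng = random.Random(11)  # arbitrary seed
points = simulate_inhomogeneous_poisson(
    intensity=lambda t: 1.0 + math.sin(t),  # hypothetical intensity, bounded by 2
    bound=2.0,
    horizon=500.0,
    rng=rng,
)
```

The expected number of accepted points is $\int_0^{500} (1 + \sin s)\,ds$, about 502 here; a tighter bound M rejects fewer candidates.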

More general counting process and conditional intensity

Conditional intensity

Need of the same sort of interpretation as the hazard rate, but given "the Past"!

$$\underbrace{dN_t}_{\substack{\text{Nbr observed points}\\ \text{in } [t,\,t+dt]}} \;=\; \underbrace{\overbrace{\lambda(t)}^{\text{intensity}}\,dt}_{\substack{\text{Expected number}\\ \text{given the past before } t}} \;+\; \underbrace{\text{noise}}_{\substack{\text{martingale}\\ \text{differences}}}$$

λ(t)

- = instantaneous frequency
- = random, depends on the Past (previous points ...)
- = characterizes the distribution when it exists

NB: if λ(t) is deterministic → Poisson process

Thinning

Let N be a Poisson process in $\mathbb{R}_+^2$.

[Figure: thinning construction of a point process of intensity λ(t) from the points of N.]

λ(t) is predictable, i.e. it depends on the past only and can be drawn from left to right if one discovers one point at a time. It is usually left continuous ("c-à-g", for "continu à gauche").

For simulation (Ogata's thinning), λ(t) needs to be bounded as long as no new point appears.

One life time

N = {T}, T of hazard rate q.

$P(T > t) = \exp\left(-\int_0^t q(s)\,ds\right) = S(t)$

$\lambda(t) = q(t)\,\mathbf{1}_{T \ge t}$

Poissonian interaction

Parents: $U_i$ either (uniform) i.i.d. or a (homogeneous) Poisson process.

Each parent gives birth according to a Poisson process of intensity h with origin at $U_i$.

Process of the children?

$\lambda(t) = \sum_{i:\,U_i < t} h(t - U_i)$

With some orphans of rate ν?

$\lambda(t) = \nu + \sum_{i:\,U_i < t} h(t - U_i)$
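The intensity of the children, with or without orphans, is a direct sum over the parents already born. In the sketch below the exponential interaction function h and the parent times are hypothetical values chosen for illustration.

```python
import math


def children_intensity(t, parents, h, nu=0.0):
    """lambda(t) = nu + sum over the parents U_i < t of h(t - U_i)."""
    return nu + sum(h(t - u) for u in parents if u < t)


def h(delay):
    """Hypothetical interaction function: exponential decay (illustrative choice)."""
    return math.exp(-delay)


parents = [1.0, 2.0, 4.0]
without_orphans = children_intensity(3.0, parents, h)       # parents at 1 and 2 contribute
with_orphans = children_intensity(3.0, parents, h, nu=0.5)  # adds the orphan rate nu
```

Given the parents, this intensity is deterministic, so the children form a Poisson process conditionally on the $U_i$.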

Hawkes process: linear case

A self-exciting process introduced by Hawkes in the 70's to model earthquakes.

Ancestors appear at rate ν.

Each point gives birth according to h, and so on (aftershocks).

$$\lambda(t) = \nu + \sum_{T < t} h(t - T) = \nu + \int_{-\infty}^{t^-} h(t - u)\,dN_u$$
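A linear Hawkes process can be simulated by thinning. The sketch below is one possible implementation under explicit assumptions that are not in the slides: an exponential interaction function $h(u) = \alpha\beta e^{-\beta u}$ (so that $\int h = \alpha$), no point before time 0, and illustrative parameter values. With this h the intensity decreases between points, so its current value is a valid bound until the next point.

```python
import math
import random


def hawkes_intensity(t, points, nu, h):
    """lambda(t) = nu + sum over the points T < t of h(t - T)."""
    return nu + sum(h(t - T) for T in points if T < t)


def simulate_hawkes_exp(nu, alpha, beta, horizon, rng):
    """Thinning for h(u) = alpha * beta * exp(-beta * u), empty past before 0."""
    points = []
    t = 0.0
    excitation = 0.0  # sum of h(t - T) over past points, value just after time t
    while True:
        bound = nu + excitation            # valid until the next accepted point
        wait = rng.expovariate(bound)
        t += wait
        if t > horizon:
            return points
        excitation *= math.exp(-beta * wait)  # decay of the past contributions
        if rng.random() * bound <= nu + excitation:
            points.append(t)                # accepted: a new point is born
            excitation += alpha * beta      # jump h(0) of the intensity


rng = random.Random(7)  # arbitrary seed
points = simulate_hawkes_exp(nu=1.0, alpha=0.5, beta=2.0, horizon=500.0, rng=rng)
lam = hawkes_intensity(2.0, [1.0], 1.0, lambda u: 0.5 * 2.0 * math.exp(-2.0 * u))
```

With these values the long-run rate is expected to be close to $\nu / (1 - \alpha) = 2$ points per unit of time.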

Hawkes and modelling

- models any sort of self contamination (earthquakes, clicks, etc.)
- can be marked: position or magnitude of earthquakes, neuron on which the spike happens (see later)
- can therefore also model interaction (see later)
- generally, modellers "want" stationarity: if $\int h < 1$, the branching procedure ends ⟺ existence of a stationary version.
- for genomics and neuroscience, inhibition is needed: it is possible to use

$$\lambda(t) = \Phi\left(\int_{-\infty}^{t^-} h(t - u)\,dN_u\right),$$

with Φ 1-Lipschitz positive and h of any sign. Stationarity condition: $\int |h| < 1$. In particular, $\Phi = (.)_+$, but loss of the branching structure (?)
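The nonlinear intensity with $\Phi = (.)_+$ and the condition $\int |h| < 1$ can be checked numerically. This is only a sketch: the inhibitory function $h(u) = -0.8\,e^{-u}$, the constant `baseline` added inside Φ (it does not appear in the formula above) and the Riemann-sum integration are assumptions made for illustration.

```python
import math


def positive_part(x):
    """Phi = (.)_+, which is 1-Lipschitz and nonnegative."""
    return max(x, 0.0)


def nonlinear_intensity(t, points, h, phi=positive_part, baseline=0.0):
    """lambda(t) = phi(baseline + sum over the points T < t of h(t - T)).
    `baseline` is a hypothetical constant drive, not part of the slide formula."""
    return phi(baseline + sum(h(t - T) for T in points if T < t))


def l1_norm(h, upper, steps=100_000):
    """Midpoint Riemann sum approximating the integral of |h| over [0, upper]."""
    dx = upper / steps
    return sum(abs(h((i + 0.5) * dx)) for i in range(steps)) * dx


def h(u):
    """Hypothetical inhibitory interaction function (negative everywhere)."""
    return -0.8 * math.exp(-u)


norm = l1_norm(h, upper=50.0)                                     # close to 0.8 < 1
inhibited = nonlinear_intensity(1.5, [1.0], h, baseline=1.0)      # reduced by the past point
silenced = nonlinear_intensity(1.1, [1.0], h, baseline=0.5)       # clipped to 0 by (.)_+
```

The clipping at 0 is what breaks the branching interpretation: a negative contribution cannot be read as a number of children.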

Classical statistics for counting processes

Likelihood

Informally, the likelihood of the observation N should give the probability to see (something near) N. Formally, it is somehow the density with respect to (here) Poisson, but without depending on the intensity of the reference Poisson process.

If $N = \{t_1, \dots, t_n\}$, we want, for $N'$ of intensity $\lambda(.)$ observed on $[0, T]$,

$$P(N' \text{ has } n \text{ points, each } T_i \text{ in } [t_i - dt_i, t_i])$$

$$= P(\text{no point in } [0, t_1 - dt_1],\ 1 \text{ point in } [t_1 - dt_1, t_1],\ \dots,\ \text{no point in } [t_n, T])$$

Likelihood (2)

$$P(\text{no point in } [t_n, T] \mid \text{past at } t_n) = e^{-\int_{t_n}^{T} \lambda(s)\,ds}$$

Likelihood (3)

$$P(1 \text{ point in } [t_n - dt_n, t_n] \mid \text{past at } t_n - dt_n) \simeq \lambda(t_n)\,dt_n\, e^{-\int_{t_n - dt_n}^{t_n} \lambda(s)\,ds}$$

Likelihood (4)

$$\begin{aligned}
&P(\text{no point in } [0, t_1 - dt_1],\ 1 \text{ point in } [t_1 - dt_1, t_1],\ \dots,\ \text{no point in } [t_n, T]) \\
&= E\left[\mathbf{1}_{\text{no point in } [0, t_1 - dt_1]} \cdots \mathbf{1}_{1 \text{ point in } [t_n - dt_n, t_n]} \times P(\text{no point in } [t_n, T] \mid \text{past at } t_n)\right] \\
&= E\left[\mathbf{1}_{\text{no point in } [0, t_1 - dt_1]} \cdots \mathbf{1}_{1 \text{ point in } [t_n - dt_n, t_n]}\, e^{-\int_{t_n}^{T} \lambda(s)\,ds}\right] \\
&= E\left[\mathbf{1}_{\text{no point in } [0, t_1 - dt_1]} \cdots P(1 \text{ point in } [t_n - dt_n, t_n] \mid \text{past at } t_n - dt_n) \times e^{-\int_{t_n}^{T} \lambda(s)\,ds}\right] \\
&\simeq E\left[\mathbf{1}_{\text{no point in } [0, t_1 - dt_1]} \cdots \mathbf{1}_{\text{no point in } [t_{n-1}, t_n - dt_n]} \times \lambda(t_n)\,dt_n\, e^{-\int_{t_n - dt_n}^{T} \lambda(s)\,ds}\right]
\end{aligned}$$

Likelihood and log-likelihood

(Pseudo)Likelihood

$$\prod_i \lambda(t_i)\, e^{-\int_0^T \lambda(s)\,ds}$$

The dependence on the past is not explicit (e.g. the past before 0 for Hawkes).

Log-likelihood

$$\ell_\lambda(N) = \int_0^T \log(\lambda(s))\,dN_s - \int_0^T \lambda(s)\,ds$$
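As an illustration, the log-likelihood $\sum_i \log \lambda(t_i) - \int_0^T \lambda(s)\,ds$ can be computed in closed form for a linear Hawkes intensity. The sketch assumes an exponential interaction function $h(u) = \alpha\beta e^{-\beta u}$ and no point before 0; both are modelling choices made here, not statements from the slides.

```python
import math


def hawkes_exp_loglik(points, horizon, nu, alpha, beta):
    """Log-likelihood on [0, horizon] for
    lambda(t) = nu + sum_{T < t} alpha * beta * exp(-beta * (t - T)),
    assuming an empty past before 0 and sorted points."""
    log_term = 0.0
    excitation = 0.0   # sum of h(t - T) over earlier points, updated recursively
    previous = 0.0
    for t in points:
        excitation *= math.exp(-beta * (t - previous))
        log_term += math.log(nu + excitation)   # log lambda(t_i), left limit
        excitation += alpha * beta
        previous = t
    # Integral of lambda over [0, horizon], computed exactly for this h.
    compensator = nu * horizon + sum(
        alpha * (1.0 - math.exp(-beta * (horizon - t))) for t in points
    )
    return log_term - compensator


poisson_case = hawkes_exp_loglik([0.5, 1.5], horizon=2.0, nu=3.0, alpha=0.0, beta=1.0)
hawkes_case = hawkes_exp_loglik([1.0, 2.0], horizon=3.0, nu=1.0, alpha=0.5, beta=1.0)
```

With `alpha=0.0` the expression reduces to the Poisson log-likelihood $n \log \nu - \nu T$, which gives a quick sanity check.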

Maximum likelihood estimator

If there is a model for λ → $\lambda_\theta$, $\theta \in \mathbb{R}^d$, how to choose the best θ according to the observed data N on [0, T]?

[Figure: log-likelihood of the n observed points as a function of θ.]

Choose the θ that makes the observation most likely: MLE = $\hat{\theta} = \arg\max_\theta \ell_{\lambda_\theta}(N)$.

Maximum likelihood estimator (2)

If d, the number of unknown parameters, is fixed, one can usually show nice asymptotic properties (in T ...):

- consistency ($\hat{\theta} \to \theta$)
- asymptotic normality
- efficiency (smallest asymptotic variance)

(cf. Andersen, Borgan, Gill and Keiding)

In practice, T and d are fixed → if d is large with respect to T, i.e. in the non-asymptotic regime, overfitting.
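A minimal sketch of the maximisation, in the simplest possible model: a homogeneous Poisson process with unknown rate θ, for which $\ell_{\lambda_\theta}(N) = n \log \theta - \theta T$ and the maximiser is n / T. The grid search and the numbers are illustrative; a real Hawkes model would need a numerical optimiser over all its parameters.

```python
import math


def poisson_loglik(rate, n_points, horizon):
    """Log-likelihood of a constant intensity `rate` for n_points observed on [0, horizon]."""
    return n_points * math.log(rate) - rate * horizon


def grid_mle(loglik, grid):
    """Crude MLE: the grid value with the largest log-likelihood."""
    return max(grid, key=loglik)


n_points, horizon = 42, 10.0                 # hypothetical observation
grid = [0.01 * k for k in range(1, 2001)]    # candidate rates in (0, 20]
theta_hat = grid_mle(lambda r: poisson_loglik(r, n_points, horizon), grid)
closed_form = n_points / horizon             # exact maximiser, 4.2 here
```

The grid estimate agrees with the closed form up to the grid step.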

Goodness-of-fit test

If $\Lambda_t = \int_0^t \lambda(s)\,ds$, then

- $\Lambda_t$ is a nondecreasing, predictable process and its (pseudo)inverse exists.
- It is the compensator of the counting process $N_t$, i.e. $(N_t - \Lambda_t)_t$ is a martingale.
- $N_{\Lambda_t^{-1}}$ is a Poisson process of intensity 1.

→ test that N (observed) has the desired intensity λ.
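One way to turn the time change into a test, sketched under assumptions: rescale the observed points by the candidate compensator and compare the gaps between rescaled points to the Exp(1) law with a Kolmogorov-Smirnov distance. The simulated data (intensity $\lambda(t) = 2t$, so $\Lambda_t = t^2$), the competing constant-intensity model and the function names are illustrative choices, not the procedure of the talk.

```python
import math
import random


def ks_distance_to_exp1(gaps):
    """Kolmogorov-Smirnov distance between the empirical law of the gaps and Exp(1)."""
    xs = sorted(gaps)
    n = len(xs)
    distance = 0.0
    for i, x in enumerate(xs):
        cdf = 1.0 - math.exp(-x)
        distance = max(distance, cdf - i / n, (i + 1) / n - cdf)
    return distance


def rescaled_gaps(points, compensator):
    """Gaps between the rescaled points Lambda(t_i), starting from Lambda(0) = 0."""
    rescaled = [compensator(t) for t in points]
    return [b - a for a, b in zip([0.0] + rescaled[:-1], rescaled)]


# Hypothetical data: Poisson process with lambda(t) = 2t on [0, horizon].
rng = random.Random(0)  # arbitrary seed
horizon = 100.0
points, s = [], 0.0
while True:
    s += rng.expovariate(1.0)      # unit-rate Poisson point in rescaled time
    if s > horizon ** 2:
        break
    points.append(math.sqrt(s))    # back to the original time through Lambda^{-1}

d_true = ks_distance_to_exp1(rescaled_gaps(points, lambda t: t ** 2))
rate = len(points) / horizon       # wrong model: constant intensity
d_wrong = ks_distance_to_exp1(rescaled_gaps(points, lambda t: rate * t))
```

The distance stays small under the true compensator and is clearly larger under the constant-intensity model; turning it into a p-value would require the Kolmogorov-Smirnov distribution, which is left out of this sketch.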

Multivariate Hawkes processes and Lasso

Table of Contents

1. Point process and Counting process
2. Multivariate Hawkes processes and Lasso
   - A model for neuroscience and genomics
   - Parametric estimation
   - What can we do against overfitting? (Adaptation)
   - Lasso criterion
   - Simulations
   - Real data analysis
3. Probabilistic ingredients
4. Back to Lasso
5. PDE and point processes

A model for neuroscience and genomics

Biological framework

[Figure: (a) One neuron; (b) Connected neurons]

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

without synchronization

potentiel d’action

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

28 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration

with synchronization

seuil d’excitation

du neurone

potentiel de repos

du neurone

diff´

erentes

dendrites

signaux d’entr´

ees

P.Reynaud-Bouret

Hawkes

Aussois 2015

29 / 129

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Synaptic integration with synchronization

[Figure: synaptic integration at a neuron. Labels: input signals, different dendrites, resting potential of the neuron, excitation threshold of the neuron, action potential]

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Point process and genomics

There are several "events" of different types on the DNA that may "work" together in synergy.

Motifs = words in the DNA alphabet {a, c, t, g}.

How can statisticians suggest functional motifs based on the statistical properties of their occurrences?

Unexpected frequency → Markov models (for a review, see Reinert, Schbath, Waterman (2000))

Poor or rich regions → scan statistics (see, for instance, Robin and Daudin (1999) or Stefanov (2003))

If two motifs are part of a common biological process, the space between their occurrences (not necessarily consecutive) should be somehow fixed → favored or avoided distances (Gusto and Schbath (2005)): pairwise study.
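The pairwise study of distances can be illustrated with a minimal sketch. It assumes a DNA sequence given as a plain string and exact motif matching; the function names `motif_positions` and `distance_counts`, the toy sequence and the two motifs are all hypothetical, chosen only for illustration.

```python
from collections import Counter

def motif_positions(sequence: str, motif: str) -> list[int]:
    """Return the 0-based start positions of all (possibly overlapping) occurrences."""
    positions = []
    start = sequence.find(motif)
    while start != -1:
        positions.append(start)
        start = sequence.find(motif, start + 1)
    return positions

def distance_counts(pos_a, pos_b, max_dist):
    """Count pairs (x in pos_a, y in pos_b) with 0 < y - x <= max_dist, by distance.

    The pairs are not restricted to consecutive occurrences.
    """
    counts = Counter()
    for x in pos_a:
        for y in pos_b:
            d = y - x
            if 0 < d <= max_dist:
                counts[d] += 1
    return counts

# Toy sequence (made up): each motif becomes a point process on the line.
dna = "acgtacgttgacacgt"
pos_acg = motif_positions(dna, "acg")
pos_tga = motif_positions(dna, "tga")
dist = distance_counts(pos_acg, pos_tga, max_dist=10)
```

A distance that is over-represented in `dist` would be a candidate "favored" distance; deciding whether it is significant is the statistical question addressed in the rest of the talk.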

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Why real multivariate point processes in genomics?

TRE

Transcription Regulatory Elements = "everything" that may enhance or repress gene expression

promoter, enhancer, silencer, histone modifications, replication origin on the DNA... They should interact, but how? Can we have a statistical guess?

There are methods (ChIP-chip experiments, ChIP-seq experiments) where, after preprocessing the data, one has access to the (almost exact) positions of several types of TREs at one time, and this under different experimental conditions (ENCODE).

On the real line = DNA if the 3D structure of the DNA is negligible (typically interaction range between points ≤ 10 kb)

If the real structure matters → 3D point processes on graphs... (??)

Why just DNA? RNA, etc.

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Point processes and conditional intensity

$$\underbrace{dN_t}_{\substack{\text{number of observed points}\\ \text{in } [t,\,t+dt]}} \;=\; \underbrace{\overbrace{\lambda(t)}^{\text{intensity}}\,dt}_{\substack{\text{expected number}\\ \text{given the past before } t}} \;+\; \underbrace{\text{noise}}_{\substack{\text{martingale}\\ \text{differences}}}$$

$\lambda(t)$ = instantaneous frequency = random, depends on previous points

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

Local independence graphs (Didelez (2008))

[Figure: three example graphs on the nodes 1, 2, 3]

If one is able to infer a local independence graph, then we may have access to "functional connectivity".

→ needs a "model".

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

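Multivariate Hawkes processes

[Figure: three spike trains N1, N2, N3 along the time axis t; the past points (x) of N1, N2 and N3 contribute to the intensity of N1 through the interaction functions h 1->1, h 2->1 and h 3->1]

$$\lambda^{(1)}(t) \;=\; \underbrace{\nu}_{\text{spontaneous}} \;+\; \underbrace{\sum_{\substack{T<t\\ T \text{ point of } N_1}} h_{1\to 1}(t-T)}_{\text{self-interaction}} \;+\; \underbrace{\sum_{m\neq 1}\ \sum_{\substack{T<t\\ T \text{ point of } N_m}} h_{m\to 1}(t-T)}_{\text{interaction}}$$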
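The intensity formula above can be evaluated directly from the spike trains. The following is a minimal sketch, assuming box-shaped interaction functions; the helper names `hawkes_intensity` and `box`, the spike times and the kernel heights are hypothetical and chosen only for illustration.

```python
def hawkes_intensity(t, nu, spike_trains, kernels):
    """lambda(t) = nu + sum over processes m and points T < t of kernels[m](t - T)."""
    total = nu
    for m, points in spike_trains.items():
        h = kernels[m]
        total += sum(h(t - T) for T in points if T < t)
    return total

def box(height, width):
    """Interaction function equal to `height` on (0, width] and 0 elsewhere."""
    return lambda s: height if 0 < s <= width else 0.0

# Made-up spike trains for N1, N2, N3 and interaction functions h_{m -> 1}.
spike_trains = {1: [0.10, 0.50], 2: [0.45], 3: [0.30]}
kernels = {1: box(2.0, 0.1), 2: box(5.0, 0.1), 3: box(0.0, 0.1)}

# Spontaneous rate 1, plus one recent point of N1 (+2) and one of N2 (+5).
lam = hawkes_intensity(0.52, nu=1.0, spike_trains=spike_trains, kernels=kernels)
```

Here the kernel from N3 is identically zero, which corresponds to a missing edge 3 → 1 in the local independence graph.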

Multivariate Hawkes processes and Lasso

A model for neuroscience and genomics

More formally

Only excitation (all the $h_\ell^{(r)}$ are positive): for all $r$,

$$\lambda^{(r)}(t) = \nu_r + \sum_{\ell=1}^{M} \int_{-\infty}^{t-} h_\ell^{(r)}(t-u)\,dN_u^{(\ell)}.$$

Branching / cluster representation; stationary process if the spectral radius of the matrix $\left(\int h_\ell^{(r)}(t)\,dt\right)_{\ell,r}$ is < 1.

Interaction, for instance (in general any 1-Lipschitz function, Brémaud and Massoulié 1996):

$$\lambda^{(r)}(t) = \left(\nu_r + \sum_{\ell=1}^{M} \int_{-\infty}^{t-} h_\ell^{(r)}(t-u)\,dN_u^{(\ell)}\right)_+.$$

Exponential (multiplicative shape, but no guarantee of a stationary version...):

$$\lambda^{(r)}(t) = \exp\left(\nu_r + \sum_{\ell=1}^{M} \int_{-\infty}^{t-} h_\ell^{(r)}(t-u)\,dN_u^{(\ell)}\right).$$
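The stationarity condition in the excitation-only case can be checked numerically. The sketch below assumes the matrix of integrals $\int h_\ell^{(r)}(t)\,dt$ is already available and has nonnegative entries; the function name `spectral_radius` and the 2 x 2 example matrix are hypothetical. It uses plain power iteration rather than a library eigenvalue routine.

```python
def spectral_radius(matrix, n_iter=500):
    """Estimate the spectral radius of a square matrix with nonnegative entries.

    Power iteration is run on (matrix + I): the shift makes the dominant
    eigenvalue unique in modulus, and it equals spectral_radius + 1.
    """
    n = len(matrix)
    shifted = [[matrix[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    x = [1.0] * n
    growth = 1.0
    for _ in range(n_iter):
        y = [sum(shifted[i][j] * x[j] for j in range(n)) for i in range(n)]
        growth = max(y)              # x has maximum 1, so this estimates rho(shifted)
        x = [v / growth for v in y]  # renormalize so that max(x) == 1
    return growth - 1.0

# Made-up integrals of the interaction functions for M = 2 processes.
integrals = [[0.5, 0.2],
             [0.1, 0.3]]
rho = spectral_radius(integrals)
is_stationary = rho < 1.0
```

For this example the exact value is $0.4 + \sqrt{0.12}/2 \approx 0.573$, so the condition holds.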

Multivariate Hawkes processes and Lasso

Parametric estimation

Previous works

Maximum likelihood estimates (Ogata, Vere-Jones, etc., mainly for seismology; Chornoboy et al. for neuroscience; Gusto and Schbath for genomics)

Parametric tests for the detection of edges + maximum likelihood + exponential formula + spline estimation (Carstensen et al., in genomics)

Main problem: not enough spikes! So either over-fitting because d (number of parameters) is too large, or bad estimation if d is too small (see later).

Also, MLEs are not that easy to compute (EM algorithm, etc.) ≠ least-squares if linear parametric model.

Multivariate Hawkes processes and Lasso

Parametric estimation

Another parametric method: Least-squares

Observation on [0, T]

A good estimate of the parameters should correspond to an intensity candidate close to the true one.

Hence one would like to minimize $\|\eta-\lambda\|^2 = \int_0^T [\eta(t)-\lambda(t)]^2\,dt$ in $\eta$, or equivalently $-2\int_0^T \eta(t)\lambda(t)\,dt + \int_0^T \eta(t)^2\,dt$.

But $dN_t$ randomly fluctuates around $\lambda(t)\,dt$ and is observable.

Hence minimize

$$\gamma(\eta) = -2\int_0^T \eta(t)\,dN_t + \int_0^T \eta(t)^2\,dt,$$

for a model $\eta = \lambda_a(t)$.

If multivariate, minimize $\sum_m \gamma_m(\eta^{(m)})$.
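As a sanity check of the contrast, consider the simplest model, a constant intensity $\eta \equiv c$: then $\gamma(c) = -2cN_{[0,T]} + c^2T$, which is minimized at $c = N_{[0,T]}/T$. The sketch below evaluates $\gamma$ numerically; the function name `ls_contrast`, the midpoint-rule integration and the spike times are assumptions made for illustration.

```python
def ls_contrast(eta, points, T, n_steps=1000):
    """gamma(eta) = -2 * sum_i eta(T_i) + integral_0^T eta(t)^2 dt.

    The integral against dN_t is an exact sum over the observed points;
    the dt-integral is approximated by a midpoint rule (an approximation).
    """
    dt = T / n_steps
    integral = sum(eta((i + 0.5) * dt) ** 2 for i in range(n_steps)) * dt
    return -2.0 * sum(eta(t) for t in points if 0 <= t <= T) + integral

# Made-up observation: 4 points on [0, 4], so the best constant is 4 / 4 = 1.
points = [0.5, 1.0, 1.5, 3.0]
T = 4.0
gamma_at = {c: ls_contrast(lambda t, c=c: c, points, T) for c in (0.5, 1.0, 1.5)}
```

With these numbers $\gamma(c) = -8c + 4c^2$, so the contrast is lowest at $c = 1$, the empirical rate.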

Multivariate Hawkes processes and Lasso

Parametric estimation

Least-square contrast on a toy model

Let [a, b] be an interval of $\mathbb{R}_+$.

$$\eta(t) = \sum_{T\in N} \alpha\,\mathbf{1}_{a\le t-T\le b} = \alpha N_{[t-b,\,t-a]}$$

There is only one parameter α → minimizing γ

$$\gamma(\eta) = -2\alpha\int_0^T N_{[t-b,\,t-a]}\,dN_t + \alpha^2\int_0^T N_{[t-b,\,t-a]}^2\,dt$$

Minimizing γ over the single parameter α gives

$$\hat\alpha = \frac{\int_0^T N_{[t-b,\,t-a]}\,dN_t}{\int_0^T N_{[t-b,\,t-a]}^2\,dt}$$

The numerator is the number of pairs of points with delay in [a, b].
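The toy estimator can be computed exactly from the spike times, since $N_{[t-b,\,t-a]}$ is piecewise constant in $t$. The following is a minimal sketch assuming $0 < a < b$ and at least one point falling in some window (otherwise the denominator is zero); the function name `toy_alpha_hat` and the spike times are hypothetical.

```python
def toy_alpha_hat(points, a, b, T):
    """Least-squares estimate of alpha in eta(t) = alpha * N[t-b, t-a] on [0, T]."""
    def count(t):
        # Number of points T_i with a <= t - T_i <= b.
        return sum(1 for p in points if a <= t - p <= b)

    # Numerator: integral of N[t-b,t-a] dN_t = number of pairs with delay in [a, b].
    numerator = sum(count(t) for t in points if 0 <= t <= T)

    # Denominator: N[t-b,t-a] only changes at the breakpoints T_i + a and T_i + b,
    # so its square is integrated exactly, piece by piece.
    breaks = sorted({0.0, float(T)}
                    | {p + s for p in points for s in (a, b) if 0 < p + s < T})
    denominator = 0.0
    for left, right in zip(breaks, breaks[1:]):
        mid = 0.5 * (left + right)
        denominator += count(mid) ** 2 * (right - left)
    return numerator / denominator

# Made-up data: only the pair (1.0, 1.5) has a delay in [0.25, 1.0].
alpha_hat = toy_alpha_hat([1.0, 1.5, 3.0], a=0.25, b=1.0, T=5.0)
```

In this example the numerator is 1 and the denominator is 2.75, so $\hat\alpha = 1/2.75 \approx 0.364$.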

Multivariate Hawkes processes and Lasso

Parametric estimation

Full multivariate processes and linear parametrisation

$$\lambda^{(r)}(t) = \nu^{(r)} + \sum_{\ell=1}^{M}\ \sum_{T<t,\ T \in N^{(\ell)}} h_\ell^{(r)}(t-T).$$

+ Piecewise constant model with parameter a

By linearity,

$$\lambda^{(r)}(t) = (Rc_t)'\,a,$$

with $Rc_t$ being the renormalized instantaneous count given by

$$(Rc_t)' = \left(1,\ \delta^{-1/2}(c_t^{(1)})',\ \dots,\ \delta^{-1/2}(c_t^{(M)})'\right),$$

and with $c_t^{(\ell)}$ being the vector of instantaneous counts with delay of $N_\ell$, i.e.

$$(c_t^{(\ell)})' = \left(N^{(\ell)}_{[t-\delta,\,t)},\ \dots,\ N^{(\ell)}_{[t-K\delta,\,t-(K-1)\delta)}\right).$$
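The vector $Rc_t$ is straightforward to build from the spike trains. This is a minimal sketch of the definitions above; the function names `delayed_counts` and `renormalized_counts`, the spike times and the parameter vector `a` are hypothetical.

```python
def delayed_counts(points, t, delta, K):
    """c_t: counts in [t - k*delta, t - (k-1)*delta) for k = 1..K."""
    return [sum(1 for p in points if t - k * delta <= p < t - (k - 1) * delta)
            for k in range(1, K + 1)]

def renormalized_counts(spike_trains, t, delta, K):
    """Rc_t = (1, delta^{-1/2} c_t^{(1)}, ..., delta^{-1/2} c_t^{(M)})."""
    vec = [1.0]
    for points in spike_trains:
        vec.extend(c / delta ** 0.5 for c in delayed_counts(points, t, delta, K))
    return vec

# Made-up example with M = 2 processes, K = 2 bins of width delta = 0.25.
trains = [[0.5, 0.875], [0.625]]
rc_t = renormalized_counts(trains, t=1.0, delta=0.25, K=2)

# Candidate intensity (Rc_t)' a for a hypothetical parameter vector a,
# whose first coordinate plays the role of the spontaneous rate.
a = [0.5, 0.1, 0.0, 0.2, 0.3]
intensity = sum(x * y for x, y in zip(rc_t, a))
```

The vector has dimension $1 + MK$, which is why the number of parameters grows quickly with the number of processes.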

Multivariate Hawkes processes and Lasso

Parametric estimation

A heuristic for the least-square estimator

Informally, the link between the point process and its intensity can be written as

$$dN^{(r)}(t) \simeq (Rc_t)'\,a_*^{(r)}\,dt + \text{noise}.$$

Let

$$G = \int_0^T Rc_t\,(Rc_t)'\,dt,$$

the integrated covariation of the renormalized instantaneous count.

$$b^{(r)} := \int_0^T Rc_t\,dN^{(r)}(t) \simeq G\,a_*^{(r)} + \text{noise},$$

where in b lies again the number of couples with a certain delay (cross-correlogram).

Multivariate Hawkes processes and Lasso

Parametric estimation

Least-square estimate

$$G = \int_0^T Rc_t\,(Rc_t)'\,dt, \qquad b^{(r)} := \int_0^T Rc_t\,dN^{(r)}(t)$$

Least-square estimate

$$\hat a^{(r)} = G^{-1}\,b^{(r)},$$

→ simpler formula than Maximum Likelihood Estimators for similar properties (except efficiency).
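The estimate $\hat a^{(r)} = G^{-1}b^{(r)}$ can be sketched end to end on a tiny example. The code below assumes that $G$ is invertible and approximates the integral defining $G$ by a midpoint rule (exact here because the grid is aligned with the bins); the function names `rc`, `gram_and_b` and `solve` and the spike times are hypothetical.

```python
def rc(spike_trains, t, delta, K):
    """Renormalized instantaneous count Rc_t as a flat list."""
    vec = [1.0]
    for points in spike_trains:
        for k in range(1, K + 1):
            count = sum(1 for p in points
                        if t - k * delta <= p < t - (k - 1) * delta)
            vec.append(count / delta ** 0.5)
    return vec

def gram_and_b(spike_trains, r, delta, K, T, n_steps):
    """G by a midpoint rule over [0, T]; b^{(r)} as an exact sum over points of N^{(r)}."""
    d = 1 + len(spike_trains) * K
    dt = T / n_steps
    G = [[0.0] * d for _ in range(d)]
    for i in range(n_steps):
        v = rc(spike_trains, (i + 0.5) * dt, delta, K)
        for p in range(d):
            for q in range(d):
                G[p][q] += v[p] * v[q] * dt
    b = [0.0] * d
    for t in spike_trains[r]:
        if 0 <= t <= T:
            b = [x + y for x, y in zip(b, rc(spike_trains, t, delta, K))]
    return G, b

def solve(A, y):
    """Solve A x = y by Gauss-Jordan elimination with partial pivoting."""
    n = len(y)
    M = [row[:] + [y[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(M[i][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for i in range(n):
            if i != col:
                f = M[i][col] / M[col][col]
                M[i] = [u - f * v for u, v in zip(M[i], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

# Made-up univariate example: two points, one bin of width 0.25, T = 2.
G, b = gram_and_b([[0.5, 0.75]], r=0, delta=0.25, K=1, T=2.0, n_steps=16)
a_hat = solve(G, b)
```

Here $G = \begin{pmatrix}2 & 1\\ 1 & 2\end{pmatrix}$ and $b = (2, 2)'$, so both coordinates of $\hat a$ equal $2/3$. The same $G$ serves every $r$; only $b^{(r)}$ changes.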

Multivariate Hawkes processes and Lasso

Parametric estimation

What gain w.r.t. the cross-correlogram?

[Figure: graph on the nodes 1, 2, 3 with two edges, each labelled 5 ms]

Only 2 non-zero interaction functions out of 9

[Figure: three histograms of dist2 for the pairs 1→2, 2→3 and 1→3; x-axis from −0.03 to 0.03, y-axis: Frequency]

[Figure: three panels showing the interaction (y-axis, from 0 to 4) for 1→2, 2→3 and 1→3; x-axis from 0.000 to 0.030]

Multivariate Hawkes processes and Lasso

What can we do against over fitting ? (Adaptation)

Adaptive statistics

If no model is known, one usually wants to consider the largest possible model (piecewise constant with hundreds of parameters, etc.)

If one uses MLE or OLS, each parameter estimate has a variance ≃ 1/T

If there are d parameters, global variance ≃ d/T

Over-fitting!

Multivariate Hawkes processes and Lasso

What can we do against over fitting ? (Adaptation)

Penalty and parameter choices

[Figure: least-squares contrast, penalty, and penalized criterion (contrast + penalty) plotted against the model complexity (dimension), with three models marked 1, 2, 3]

Multivariate Hawkes processes and Lasso

What can we do against over fitting ? (Adaptation)

Previous works

log-likelihood penalized by AIC (Akaike Information Criterion) (Ogata, Vere-Jones, Gusto and Schbath): choose the model with d parameters that optimizes the penalized criterion $\ell(\lambda_{\hat\theta_d}) + d$

Generally works well if few models and largest dimension fixed whereas T → ∞

least-squares + $\ell_0$ penalty (RB and Schbath): $\gamma(\lambda_{\hat\theta_d}) + \hat c\,d$ with $\hat c$ data-driven. Proof that if $\hat c > \kappa$, it works even if d grows with T (moderately, log(T) with d)

→ possible mathematically to search for the "zeros"

Problem: only for univariate, because computational time and memory size are awfully large!

→ convex criterion
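The $\ell_0$-type selection rule amounts to comparing penalized contrasts across candidate dimensions. The sketch below only illustrates that comparison; the function name `select_dimension`, the contrast values and the constant `c` are made up, and in practice the contrasts would come from fitting each model.

```python
def select_dimension(contrasts, c):
    """Return the dimension d minimizing contrast(d) + c * d."""
    return min(contrasts, key=lambda d: contrasts[d] + c * d)

# Hypothetical least-squares contrasts for three nested models:
# the contrast always decreases with d, the penalty compensates.
contrasts = {1: -10.0, 10: -20.0, 270: -25.0}
best_d = select_dimension(contrasts, c=0.5)
unpenalized_d = select_dimension(contrasts, c=0.0)
```

Without a penalty the largest model always wins, which is the over-fitting described above. The cost of this approach is that every candidate model has to be fitted, which is what makes it impractical beyond the univariate case.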

Multivariate Hawkes processes and Lasso

What can we do against over fitting ? (Adaptation)

Other works

Maximum likelihood + exponential formula + $\ell_1$ "group Lasso" penalty (Pillow et al. in neuroscience), but no mathematical proof

Thresholding + tests for very particular bivariate models, oracle inequality (Sansonnet)

Multivariate Hawkes processes and Lasso

Lasso criterion

ℓ1 penalty

with N.R. Hansen (Copenhagen), and V. Rivoirard (Dauphine) (2012)

The Lasso criterion can be expressed independently for each sub-process by:

(Lasso = Least Absolute Shrinkage And Selection Operator, introduced by Tibshirani (1996))

Lasso criterion

$$\hat a^{(r)} = \operatorname{argmin}_a \left\{\gamma_T(\lambda_a^{(r)}) + \mathrm{pen}(a)\right\} = \operatorname{argmin}_a \left\{-2a'b^{(r)} + a'Ga + 2(d^{(r)})'|a|\right\}$$

with d′ |a| =