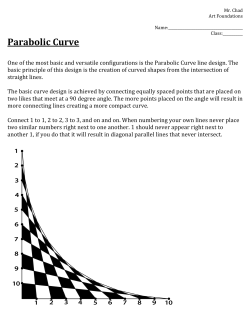

The British Society for the Philosophy of Science