On the Sample Size of Randomized MPC for Chance-Constrained Systems with Application to Building Climate Control

Xiaojing Zhang, Sergio Grammatico, Georg Schildbach, Paul Goulart and John Lygeros

Abstract: We consider Stochastic Model Predictive Control (SMPC) for constrained linear systems with additive disturbances, under affine disturbance feedback (ADF) policies. One approach to solving the chance-constrained optimization problem associated with the SMPC formulation is randomization, where the chance constraints are replaced by a number of sampled hard constraints, each corresponding to a disturbance realization. The ADF formulation leads to a quadratic growth in the number of decision variables with respect to the prediction horizon, which in turn results in a quadratic growth in the sample size. This leads to computationally expensive problems whose solutions are conservative in terms of both cost and violation probability. We address these limitations by establishing a bound on the sample size that scales linearly in the prediction horizon. The new bound is obtained by explicitly bounding the maximum number of active constraints, leading to significant advantages in terms of both computational time and conservatism of the solution. The efficacy of the new bound relative to the existing one is demonstrated on a building climate control case study.

I. INTRODUCTION

Model Predictive Control (MPC) is a powerful control design methodology for systems subject to state and input constraints [1]. By predicting the future behavior of the plant, MPC is able to incorporate feedforward information in the control design, for example in the form of predictions or reference trajectories to be tracked. At each sampling time, MPC solves a finite horizon optimal control problem (FHOCP) and implements the first element of the optimal control sequence.

One way of designing controllers is to assume an exact model of the plant and perfect evolution of the states according to that model. In practice, however, disturbances arise from both model uncertainty and prediction errors. Prediction errors, for example, are a major challenge in building climate control [2], [3], where the goal is to control the comfort dynamics in a building; here, disturbances stem from the uncertainty in weather and room occupancy. Within the MPC literature, two main methods have been studied to address uncertainty: robust and stochastic MPC. Robust MPC computes a control law that guarantees constraint satisfaction for all possible disturbance realizations [4]. Although successful in many cases, this strategy may lead to conservative controllers that exhibit poor performance in practice. This is due to the need to over-approximate the uncertainty set to obtain a tractable problem formulation.

The authors are with the Automatic Control Laboratory, Department of Electrical Engineering and Information Technology, Swiss Federal Institute of Technology Zurich (ETH Zurich), 8092 Zurich, Switzerland. {xiaozhan, grammatico, schildbach, pgoulart, lygeros}@control.ee.ethz.ch.

This research was partially funded by an RTD grant from Swiss NanoTera.ch under the project HeatReserves.

These limitations are partially overcome by Stochastic MPC (SMPC) [5], where the constraints of the FHOCP are interpreted probabilistically via chance constraints, allowing for a (small) constraint violation probability. Unfortunately, chance-constrained FHOCPs are in general non-convex and require the computation of multidimensional integrals. Hence, SMPC is computationally intractable for many applications.

Randomized MPC (RMPC) [6] is a recent method to approximate SMPC problems. It is computationally tractable without being limited to specific probability distributions. At every time step, the chance-constrained FHOCP is solved via the scenario approach [7]–[9], a randomized technique for solving convex chance-constrained optimization problems. The basic idea is to replace the chance constraints with a finite number of sampled constraints, each corresponding to an independently sampled disturbance realization. The sample size is chosen so that, with high confidence, the violation probability of the solution of the sampled FHOCP remains small.

An important challenge for the practical application of RMPC is its requirement for a large sample size, even for relatively small systems. Since each sample generates a constraint, the resulting sampled FHOCP becomes computationally expensive to solve, even though it is convex. Moreover, the bound on the sample complexity established in [8], [9] is tight only for the class of “fully supported” problems, yet many MPC problems do not fall into this category. This typically leads to solutions that are conservative in terms of both cost and empirical violation probability.

The authors in [10] try to alleviate this conservatism by using a combination of randomized and robust optimization, which can be successfully applied to SMPC with open-loop control. However, when the same approach is applied to closed-loop policies using affine disturbance feedback (ADF) [11], the solution usually becomes more conservative than with the standard RMPC approach. Unfortunately, for ADF policies the standard RMPC approach is expensive to solve in practice, because the number of decision variables grows quadratically in the prediction horizon. This results in a quadratic growth of the number of sampled constraints, making RMPC with ADF almost impossible to solve in practice, even though the problem remains convex.

In this paper we attempt to overcome these limitations by establishing a novel upper bound on the sample size for RMPC based on ADF policies. Instead of growing quadratically, our bound on the sample size grows linearly in the prediction horizon. This is achieved by exploiting structural properties of the constraints in the sampled FHOCP. We apply this improved bound to a building control problem, where the sample size is reduced significantly. We restrict attention to RMPC for linear systems and refer to [12] for the case of nonlinear control-affine systems, based on the non-convex scenario-approach results in [13], [14].

In Section II we formulate the Stochastic MPC problem. Section III summarizes the standard RMPC approach. We describe the proposed methodology in Section IV, whereas Section V discusses numerical results for the building control case study. Conclusions are drawn in Section VI. The proofs are given in the Appendix.

II. MPC PROBLEM DESCRIPTION

A. Dynamics, constraints and control objective

We consider the following discrete-time affine system subject to additive disturbances:
$$x^+ = Ax + Bu + Vv + Ew, \tag{1}$$
where $x \in \mathbb{R}^{n_x}$ is the state vector, $x^+ \in \mathbb{R}^{n_x}$ the successor state, $u \in \mathbb{R}^{n_u}$ the input vector, $v \in \mathbb{R}^{n_v}$ a vector that is known a priori, and $A$, $B$, $V$ and $E$ are matrices of appropriate dimensions. The vector $w \in \mathbb{R}^{n_w}$ models the stochastic disturbance. If $N$ is the prediction horizon and $w_k$, for $k \in \{0, \dots, N-1\}$, is the disturbance at the $k$th step, we define the “full-horizon” disturbance as $\mathbf{w} := [w_0, \dots, w_{N-1}]$. We assume that $\mathbf{w}$ is distributed according to a probability measure $\mathbb{P}$. The distribution itself need not be known explicitly, but we require that independent samples can be drawn from it. In practical applications, the samples could arise from historical data. Note that the $w_k$ at different times inside $\mathbf{w}$ need not be independent and identically distributed (i.i.d.).

We assume that at each prediction step $k \in \{1, \dots, N\}$, the state $x_k$ is subject to polyhedral constraints which may be violated with a probability no greater than $\epsilon_k \in (0, 1)$. They can be expressed as
$$\mathbb{P}[F x_k \le f] \ge 1 - \epsilon_k, \tag{2}$$
where $F \in \mathbb{R}^{n_f \times n_x}$, $f \in \mathbb{R}^{n_f}$, and $n_f$ is the number of state constraints. Note that each stage is viewed as one (joint) chance constraint.

The control objective is to minimize a performance function of the form
$$J(u_0, \dots, u_{N-1}) = \sum_{k=0}^{N-1} \ell(x_k, u_k) + \ell_f(x_N), \tag{3}$$
where $\ell : \mathbb{R}^{n_x} \times \mathbb{R}^{n_u} \to \mathbb{R}$ and $\ell_f : \mathbb{R}^{n_x} \to \mathbb{R}$ are strictly convex functions, and $\{x_k\}_{k=0}^{N}$ satisfies the dynamics in (1) under control inputs $u_0, \dots, u_{N-1}$.

B. Affine disturbance feedback policy

In its most general setting, the control problem consists of finding a control policy $\Pi := \{\mu_0, \dots, \mu_{N-1}\}$, with $\mu_k = \mu_k(x_0, \dots, x_k) \in \mathbb{R}^{n_u}$, which minimizes the cost in (3) subject to the constraints in (2) and the dynamics in (1). The intuition is to take measurements of the past and current states into account when computing future control inputs. However, since it is generally intractable to optimize over the function space of state feedback policies, a common approximation is the affine disturbance feedback (ADF) policy [11] of the form
$$u_k := h_k + \sum_{j=0}^{k-1} M_{k,j} w_j, \tag{4}$$
where one optimizes over all $M_{k,j} \in \mathbb{R}^{n_u \times n_w}$ and $h_k \in \mathbb{R}^{n_u}$. It is shown in [11] that (4) is equivalent to affine state feedback, but gives rise to a convex problem.

If $x$ is the initial state and we define $\mathbf{w}_k := [w_0, \dots, w_{k-1}]$ as the restriction of $\mathbf{w}$ to its first $k$ components, $\mathbf{h}_k := [h_0, \dots, h_{k-1}]$, and $\mathbf{v}_k := [v_0, \dots, v_{k-1}]$, then the state $x_k$ can be expressed as
$$x_k = A^k x + M_k \mathbf{w}_k + B_k \mathbf{h}_k + V_k \mathbf{v}_k + E_k \mathbf{w}_k, \tag{5}$$
for suitable matrices $M_k$, $B_k$, $V_k$, and $E_k$. Note that due to causality, the matrix $M_k$ is strictly block lower triangular, while $B_k$, $V_k$, and $E_k$ are block lower triangular.

C. Stochastic MPC formulation

The Stochastic MPC (SMPC) problem is obtained by combining (1)–(4). Therefore, at each sampling time, we solve the following chance-constrained FHOCP:
$$\begin{aligned} \min_{M, h} \quad & \mathbb{E}[J(M, h)] \\ \text{s.t.} \quad & \mathbb{P}[F x_k \le f] \ge 1 - \epsilon_k \quad \forall k \in \{1, \dots, N\}, \end{aligned} \tag{6}$$
where $\mathbb{E}$ is the expectation associated with $\mathbb{P}$ and $x_k$ is as in (5). The matrix $M \in \mathbb{R}^{N n_u \times N n_w}$ is a strictly block lower triangular matrix collecting all $M_{k,j}$, and $h := [h_0, \dots, h_{N-1}]$. Note that the problem in (6) has multiple chance constraints (“multi-stage problem”), where the $k$th constraint must be satisfied with probability at least $1 - \epsilon_k$, for a predefined $\epsilon_k$.

Remark 1 (Input Constraints): To ease the presentation of our results, we consider only state constraints. Nevertheless, our results can handle input constraints as well, provided they are interpreted in a probabilistic sense.

III. RANDOMIZED MPC

In general, the chance constraints turn the FHOCP in (6) into a non-convex and computationally intractable problem, making SMPC impractical to implement. RMPC is one method to obtain a tractable approximation, based on the scenario approach [7]–[9]. In its original formulation, RMPC uses one joint chance constraint for all constraints along the horizon [6]. The authors in [15] propose an RMPC formulation that copes with multiple chance constraints, as discussed next.

For each stage $k \in \{1, \dots, N\}$, let $\{\mathbf{w}_k^{(1)}, \dots, \mathbf{w}_k^{(S_k)}\}$ be a collection of samples, obtained by first drawing $S_k$ independent full-horizon samples according to $\mathbb{P}$ and then restricting them to their first $k$ components. The idea of the scenario approach is to replace the $k$th chance constraint in (6) with $S_k$ hard constraints, each corresponding to a sample $\mathbf{w}_k^{(i)}$, with $i \in \{1, \dots, S_k\}$ [7]–[9]. Hence, we solve the sampled FHOCP
$$\begin{aligned} \min_{M, h} \quad & \mathbb{E}[J(M, h)] \\ \text{s.t.} \quad & F x_k^{(i)} \le f \quad \forall i \in \{1, \dots, S_k\},\ \forall k \in \{1, \dots, N\}, \end{aligned} \tag{7}$$
where $x_k^{(i)}$ is the $i$th predicted state, obtained by substituting the $i$th sample $\mathbf{w}_k^{(i)}$ into (5). In the interest of space, we do not describe all technical details of the scenario approach; instead, the interested reader is referred to [7] for typical assumptions (convexity, uniqueness of the optimizer, i.i.d. sampling), and to [14] for measure-theoretic technicalities concerning the well-definedness of the probability integrals.
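To make the construction of the sampled constraints in (7) concrete, the following minimal sketch propagates (1) under the ADF policy (4) for a batch of i.i.d. full-horizon disturbance samples and checks $F x_k^{(i)} \le f$ on each sampled trajectory. It assumes numpy, simulates the dynamics step by step instead of forming the stacked matrices in (5), and uses hypothetical function names (`simulate_adf`, `sampled_constraints_hold`) and toy numerical data that do not come from the paper. It only evaluates a given candidate policy $(M, h)$; it does not solve (7).

```python
import numpy as np

def simulate_adf(A, B, V, E, x0, h, M, w, v):
    """Propagate x+ = A x + B u + V v + E w under the ADF policy (4).

    h : list of N input offsets h_k, each of shape (nu,)
    M : dict {(k, j): M_kj} for j < k, each of shape (nu, nw)
    w : array (N, nw), one sampled full-horizon disturbance
    v : array (N, nv), the known signal v_0, ..., v_{N-1}
    Returns the predicted states x_0, ..., x_N as an array of shape (N + 1, nx).
    """
    xs = [np.asarray(x0, dtype=float)]
    for k in range(len(h)):
        # Causal input: u_k = h_k + sum_{j<k} M_{k,j} w_j uses past disturbances only.
        u = h[k] + sum((M[(k, j)] @ w[j] for j in range(k)), np.zeros_like(h[k]))
        xs.append(A @ xs[-1] + B @ u + V @ v[k] + E @ w[k])
    return np.array(xs)

def sampled_constraints_hold(F, f, xs):
    """True if F x_k <= f holds for every predicted state x_1, ..., x_N."""
    return all(np.all(F @ x <= f) for x in xs[1:])

# Hypothetical toy data: nx = 2, nu = nw = nv = 1, horizon N = 3.
N = 3
A = np.array([[0.9, 0.1], [0.0, 0.8]])
B = np.array([[1.0], [0.0]])
V = np.array([[0.0], [0.5]])
E = np.array([[0.2], [0.1]])
F, f = np.array([[-1.0, 0.0]]), np.array([-1.0])  # encodes x_{k,1} >= 1
h = [np.array([1.5])] * N
M = {(k, j): np.array([[-0.3]]) for k in range(N) for j in range(k)}
v = np.zeros((N, 1))

rng = np.random.default_rng(0)
samples = rng.standard_normal((100, N, 1))  # i.i.d. full-horizon draws w^(i)
trajectories = [simulate_adf(A, B, V, E, np.zeros(2), h, M, w_i, v) for w_i in samples]
frac_ok = np.mean([sampled_constraints_hold(F, f, xs) for xs in trajectories])
```

In an actual RMPC implementation, the same affine dependence of $x_k^{(i)}$ on $(M, h)$ would typically be written out as linear inequality constraints and passed to an LP/QP solver, rather than checked after the fact as done here.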

The main challenge in RMPC is to establish the required sample sizes $S_k$, so that the solution of (7) is feasible for (6) with high confidence. Based on the results of [8], [9], it was shown in [15] that if $S_k$ satisfies
$$\sum_{j=0}^{\zeta_k - 1} \binom{S_k}{j} \epsilon_k^{j} (1 - \epsilon_k)^{S_k - j} \le \beta_k, \tag{8}$$
where $\beta_k \in (0, 1)$ is the confidence parameter, then the solution of (7) is feasible for the $k$th constraint in (6) with confidence at least $1 - \beta_k$. The parameter $\zeta_k$ is the so-called support dimension (s-dimension) [15, Definition 4.1], and upper bounds the number of support constraints [7, Definition 4] of the $k$th chance constraint. We call a sampled constraint a support constraint if its removal changes the optimizer. An explicit lower bound on the sample size was established in [16] as
$$S_k \ge \frac{1}{\epsilon_k} \, \frac{e}{e - 1} \left( \zeta_k - 1 + \ln \frac{1}{\beta_k} \right). \tag{9}$$
Thus, for fixed $\epsilon_k$ and $\beta_k$, it follows that $S_k \sim O(\zeta_k)$, so that problems with a lower $\zeta_k$ require fewer samples.
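Since (8) has no closed-form solution for $S_k$, it is inverted numerically. The sketch below shows one possible way to do this with the Python standard library only: the left-hand side of (8) is evaluated in log space (to avoid overflowing the binomial coefficients for large $S_k$), the smallest admissible $S_k$ is located by doubling followed by bisection, which relies on the left-hand side of (8) being nonincreasing in $S_k$, and the explicit bound (9) is included for comparison. The function names are our own, not from the paper.

```python
import math

def binomial_tail(S, zeta, eps):
    """Left-hand side of (8), evaluated in log space to avoid overflow."""
    total = 0.0
    for j in range(min(zeta, S + 1)):  # j = 0, ..., min(zeta - 1, S)
        log_term = (math.lgamma(S + 1) - math.lgamma(j + 1) - math.lgamma(S - j + 1)
                    + j * math.log(eps) + (S - j) * math.log1p(-eps))
        total += math.exp(log_term)
    return total

def min_sample_size(zeta, eps, beta):
    """Smallest S satisfying (8): doubling, then bisection.

    Relies on the left-hand side of (8) being nonincreasing in S.
    """
    assert zeta >= 1 and 0 < eps < 1 and 0 < beta < 1
    lo, hi = zeta - 1, zeta  # any S < zeta gives a tail of 1 > beta
    while binomial_tail(hi, zeta, eps) > beta:
        lo, hi = hi, 2 * hi
    while hi - lo > 1:  # invariant: tail(lo) > beta >= tail(hi)
        mid = (lo + hi) // 2
        if binomial_tail(mid, zeta, eps) > beta:
            lo = mid
        else:
            hi = mid
    return hi

def explicit_sample_size(zeta, eps, beta):
    """Sample size from the explicit bound (9), rounded up to an integer."""
    return math.ceil((1 / eps) * (math.e / (math.e - 1)) * (zeta - 1 + math.log(1 / beta)))

for zeta in (1, 8, 144):
    print(zeta, min_sample_size(zeta, 0.2, 0.1), explicit_sample_size(zeta, 0.2, 0.1))
```

For a fixed $\zeta_k$, the explicit bound (9) is convenient but returns a larger sample size than the exact inversion of (8); both grow linearly in $\zeta_k$, which is why tighter bounds on $\zeta_k$ translate directly into fewer samples.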

Unfortunately, explicitly computing $\zeta_k$ is difficult in general, and usually an upper bound has to be computed. As reported in [10, Section III], the standard bound
$$\zeta_k \le k n_u + n_u n_w \frac{k(k-1)}{2} =: d_k \tag{10}$$
always holds, where $d_k$ is the number of decision variables up to stage $k$. Hence, using the standard bound, $S_k$ scales as $O(k^2 n_u n_w)$, which is quadratic along the horizon $k$.¹ This quadratic growth makes the application of RMPC challenging because of the number of sampled constraints that need to be stored in memory and processed when solving the optimization problem. Moreover, if the sample size is chosen larger than necessary, the obtained solution becomes conservative in terms of violation probability and, consequently, in terms of cost.

¹ If the total number of samples is denoted by $S := \sum_{k=1}^{N} S_k$, then $S$ grows as $O(N^3 n_u n_w)$ using the standard bound in (10). To simplify the discussion, we compare the sample sizes $S_k$ of the individual stages. Hence, when we refer to “quadratic growth” along the horizon, we mean $S_k$ rather than $S$. By Faulhaber’s formula, the total number of samples grows one order faster (in $N$) than the individual stage sample sizes (in $k$).
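The quadratic growth of $d_k$ in (10), and the cubic growth of the total $\sum_{k=1}^{N} d_k$ mentioned in the footnote, can be checked with a few lines of Python. This is an illustrative sketch with hypothetical function names; it counts decision variables only, so it tracks how the $\zeta_k$ bounds (and hence, by (9), the sample sizes) scale rather than computing the sample sizes themselves. The closed form uses Faulhaber’s formulas $\sum_{k=1}^{N} k = N(N+1)/2$ and $\sum_{k=1}^{N} k^2 = N(N+1)(2N+1)/6$, which give $\sum_{k=1}^{N} k(k-1)/2 = (N-1)N(N+1)/6$.

```python
def d_k(k, nu, nw):
    """Standard bound (10): inputs h_0..h_{k-1} plus gains M_{j,i}, i < j <= k - 1."""
    return k * nu + nu * nw * k * (k - 1) // 2

def sum_standard_bound(N, nu, nw):
    """Sum of d_k over the horizon; S scales like this sum since S_k ~ O(zeta_k)."""
    return sum(d_k(k, nu, nw) for k in range(1, N + 1))

def sum_standard_bound_closed_form(N, nu, nw):
    """Same sum via Faulhaber's formulas: cubic in N."""
    return nu * N * (N + 1) // 2 + nu * nw * (N - 1) * N * (N + 1) // 6

for N in (8, 16, 32):
    print(N, d_k(N, nu=4, nw=1), sum_standard_bound(N, nu=4, nw=1))
```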

Section IV presents tighter bounds on the s-dimension $\zeta_k$ by exploiting structural properties of the constraint functions.

IV. REDUCING THE SAMPLE SIZE

Here we provide two methods to upper bound the stage-wise s-dimensions $\zeta_k$ for the FHOCP in (7). The first bound is obtained by exploiting structure in the decision space. The second bound exploits structure in the uncertainty space and scales linearly in $k$. In general, the first bound performs well for small $k$, whereas the second is better for larger $k$. Hence, the minimum of the two should be taken to obtain the tightest possible bound.

A. Structure in the decision space

One way of bounding the stage-wise s-dimensions $\zeta_k$ is to exploit structural properties of the constraint function in (2) with respect to the decision space. To this end, we recall the so-called support rank (s-rank) $\rho_k$ from [15, Definition 4.6].

Definition 1 (s-rank): For $k \in \{1, \dots, N\}$, let $L_k$ be the largest linear subspace of the decision space $\mathbb{R}^{d_N}$, with $d_N$ as in (10), that remains unconstrained by $F x_k^{(i)} \le f$ for all sampled instances $\mathbf{w}_k^{(i)}$ almost surely. Then the s-rank of the $k$th chance constraint is defined as
$$\rho_k := d_N - \dim(L_k).$$
Note that $d_N$ is the total number of decision variables of the sampled FHOCP in (7). We know from [15, Theorem 4.7] that the s-rank upper bounds the s-dimension, i.e., $\zeta_k \le \rho_k$. The next statement establishes an explicit bound on the s-rank for the FHOCP in (7).

Proposition 1: For all $k \in \{1, \dots, N\}$, the s-rank $\rho_k$ of the sampled FHOCP in (7) satisfies
$$\rho_k \le n_u n_w \frac{k(k-1)}{2} + \min\{\operatorname{rank}(F), k n_u\}.$$
Proposition 1 constitutes an improvement upon the standard bound in (10), but still scales quadratically along the horizon.
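To see how much Proposition 1 gains over (10), the two bounds can be tabulated side by side. In the hypothetical Python sketch below, the difference between $d_k$ and the bound on $\rho_k$ equals $k n_u - \min\{\operatorname{rank}(F), k n_u\}$, which grows only linearly in $k$; the quadratic term $n_u n_w k(k-1)/2$ is untouched, which is why a different argument is needed to obtain linear scaling.

```python
def d_k(k, nu, nw):
    """Standard bound (10)."""
    return k * nu + nu * nw * k * (k - 1) // 2

def rho_bound(k, nu, nw, rank_F):
    """Upper bound on the s-rank rho_k from Proposition 1."""
    return nu * nw * k * (k - 1) // 2 + min(rank_F, k * nu)

# Hypothetical dimensions: nu = 4 inputs, nw = 2, one scalar state constraint.
for k in range(1, 9):
    print(k, d_k(k, 4, 2), rho_bound(k, 4, 2, rank_F=1))
```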

B. Structure in the uncertainty space

Another way of bounding $\zeta_k$ is to find an upper bound on the number of active constraints, which we define for the $k$th chance constraint as follows.

Definition 2 (Active Constraint): The sample $\mathbf{w}_k^{(i)} \in \{\mathbf{w}_k^{(1)}, \dots, \mathbf{w}_k^{(S_k)}\}$ is called an active sample for the $k$th stage, and generates an active constraint, if at the optimal solution $(M^\star, h^\star)$ of (7) at least one row of $F x_k^{(i)} \le f$ holds with equality, where $x_k^{(i)}$ is the trajectory generated by $(M^\star, h^\star)$ and $\mathbf{w}_k^{(i)}$ according to (5). For any stage $k \in \{1, \dots, N\}$, let the set $A_k \subseteq \{1, \dots, S_k\}$ index the active samples and $|A_k|$ denote its cardinality.

From [8, p. 1219], the s-dimension $\zeta_k$ is bounded by the number of active constraints as follows.

Lemma 1: For all $k \in \{1, \dots, N\}$, $\zeta_k \le |A_k|$.

For constraint functions of general structure, it is not easy to bound $|A_k|$. For the FHOCP in (7), however, such a bound can be found under the following assumption.

Assumption 1 (Probability Measure): The random variable $\mathbf{w}$ is defined on a probability space with an absolutely continuous probability measure $\mathbb{P}$.

Note that a probability measure is absolutely continuous (with respect to the Lebesgue measure) if and only if it admits a probability density function. Under this assumption, the next proposition provides a bound on the number of active constraints.

Proposition 2: For all $k \in \{1, \dots, N\}$, the number of active constraints of the sampled FHOCP in (7) almost surely satisfies
$$|A_k| \le n_f k n_w.$$

Unlike the bounds $d_k$ and $\rho_k$, the bound on $|A_k|$ does not depend on the number of decision variables at all, but rather on the dimension of the uncertainty affecting the $k$th state. Together with Lemma 1, it shows that the s-dimension scales at most linearly in the prediction horizon, as $O(k n_w)$, as opposed to $O(k^2 n_u n_w)$ when using $d_k$ or $\rho_k$. Furthermore, Proposition 2 suggests that, for the same probabilistic guarantees, plants subject to fewer uncertainties (smaller $n_w$) require a smaller sample size than plants affected by higher-dimensional uncertainties.

Remark 2: Note that Assumption 1 does not allow for “concentrated” probability masses. While this might seem restrictive in general, it is not for many practical applications. For example, in building climate control the main disturbances are due to the uncertainty in future temperatures, solar radiation, and occupancy, which can be assumed to arise from continuous distributions. Indeed, even occupancy can take continuous values: the system model is discretized in time, whereas people enter and leave a room at arbitrary times, so the occupancy over a sampling interval is not restricted to integer values.

We refer to [17] for similar sample-size bounds in which Assumption 1 is relaxed.

C. Combining the bounds

In general, the bounds on $\rho_k$ and $|A_k|$ will differ, since they depend on the dimensions of the decision space and of the uncertainty space, respectively. To obtain the tightest possible bound, the minimum of the two should be taken when upper bounding the s-dimension.

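Theorem 1: Let $\epsilon_k, \beta_k \in (0, 1)$, with $k \in \{1, \dots, N\}$. If $S_k$ satisfies (8) with
$$\min\left\{ n_f k n_w,\; n_u n_w \frac{k(k-1)}{2} + \min\{\operatorname{rank}(F), k n_u\} \right\}$$
in place of $\zeta_k$, then, with confidence no smaller than $1 - \beta_k$, the optimal solution of the sampled FHOCP in (7) is feasible for the $k$th chance constraint in (6).

Note that for $k = 1$ the s-rank bound is never larger than the active-constraint bound, since $\min\{\operatorname{rank}(F), n_u\} \le n_f \le n_f n_w$. For sufficiently large $k$, the active-constraint bound becomes the smaller one, because it grows linearly in $k$, whereas the s-rank bound grows quadratically.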
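The bound used in Theorem 1 is straightforward to evaluate. The sketch below (plain Python, hypothetical function names) computes it stage by stage and reports the first stage at which the active-constraint bound of Proposition 2 becomes strictly smaller than the s-rank bound of Proposition 1. With $n_u = 4$, $n_w = 1$ and $n_f = \operatorname{rank}(F) = 1$, as in the case study of Section V, the combined bound reduces to $k$.

```python
def rho_bound(k, nu, nw, rank_F):
    """Proposition 1: bound on the s-rank."""
    return nu * nw * k * (k - 1) // 2 + min(rank_F, k * nu)

def active_bound(k, nw, nf):
    """Proposition 2: almost-sure bound on the number of active constraints."""
    return nf * k * nw

def zeta_bound(k, nu, nw, nf, rank_F):
    """Quantity used in place of zeta_k in Theorem 1."""
    return min(active_bound(k, nw, nf), rho_bound(k, nu, nw, rank_F))

def first_stage_where_active_bound_wins(nu, nw, nf, rank_F, N):
    """Smallest k <= N with active_bound < rho_bound, or None if there is none."""
    for k in range(1, N + 1):
        if active_bound(k, nw, nf) < rho_bound(k, nu, nw, rank_F):
            return k
    return None

print([zeta_bound(k, nu=4, nw=1, nf=1, rank_F=1) for k in range(1, 9)])
print(first_stage_where_active_bound_wins(nu=4, nw=1, nf=1, rank_F=1, N=8))
```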

V. APPLICATION TO BUILDING CLIMATE CONTROL

In this section we consider a case study in building climate control, where we regulate the temperature of an office room. In the spirit of [3], we use historical data or scenarios from ensemble forecasting to construct samples of the weather prediction uncertainty, without having to know the exact distribution. We use the reduced model presented in [18]. The system dynamics are affine, of the form (1). The vector $x_k = [x_{k,1}, x_{k,2}, x_{k,3}]^\top \in \mathbb{R}^3$ is the state vector, where $x_{k,1}$ is the room temperature, $x_{k,2}$ the temperature of the inside wall, and $x_{k,3}$ the temperature of the outside wall. The weather and occupancy prediction is modeled by the vector $v_k = [v_{k,1}, v_{k,2}, v_{k,3}]^\top \in \mathbb{R}^3$, where $v_{k,1}$ is the outside air temperature, $v_{k,2}$ the solar radiation, and $v_{k,3}$ the occupancy. We investigate three cases in which the uncertainty $w$ has dimension $n_w \in \{1, 2, 3\}$: for $n_w = 1$ we only assume uncertainty in $v_{k,1}$, for $n_w = 2$ uncertainty in $v_{k,1}$ and $v_{k,2}$, and for $n_w = 3$ uncertainty in all three predicted components.

The control objective is to keep the room temperature above 21 °C with minimum energy cost. Four constrained inputs $u_k \in \mathbb{R}^4$ represent actuators commonly found in Swiss office buildings: a radiator heater, a cooled ceiling, a floor heating system, and mechanical ventilation for additional heating/cooling purposes. The minimum energy requirement is modeled by a linear cost $\mathbb{E}\big[\sum_{k=0}^{N-1} c^\top u_k\big]$. The probabilistic state constraint can be expressed as $\mathbb{P}[x_{k,1} \ge 21] \ge 1 - \epsilon_k$ for every $k = 1, \dots, N$. For the purpose of illustration and to keep computation short, we select $N = 8$, $\epsilon_k = 0.2$ and $\beta_k = 0.1$ for all $k \in \{1, \dots, N\}$.

In the following, we compare the performance of the sampled FHOCP using the new bound from Theorem 1, with $\operatorname{rank}(F) = 1$ and $n_f = 1$, against the standard bound based on (10). We focus on three aspects: sample size, computational complexity, and conservatism of the solution.

A. Sample size

Table I lists the total number of samples for $n_w = 1, 2, 3$. The sample sizes $S_k$ are obtained by numerical inversion of (8). Table I shows that the new bound dramatically improves upon the standard one in (10): for this example, the total number of samples $S$ based on Theorem 1 is almost an order of magnitude lower than that obtained using the standard bound.

TABLE I
COMPARISON OF THE TOTAL NUMBER OF SAMPLES $S := \sum_{k=1}^{8} S_k$ FOR $n_w \in \{1, 2, 3\}$.

| $S = \sum_k S_k$ | $n_w = 1$ | $n_w = 2$ | $n_w = 3$ |
|---|---|---|---|
| new | 277 | 491 | 694 |
| standard | 2729 | 4500 | 6254 |
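The totals in Table I can in principle be recomputed from (8) and the two $\zeta_k$ bounds. The following Python sketch does so for the case-study settings stated above ($N = 8$, $\epsilon_k = 0.2$, $\beta_k = 0.1$, $n_u = 4$, $n_f = \operatorname{rank}(F) = 1$). It is an illustrative reimplementation with our own function names, not the authors’ code; since details of the numerical inversion may differ, its output should be compared with Table I qualitatively rather than digit for digit.

```python
import math

def binomial_tail(S, zeta, eps):
    """Left-hand side of (8), evaluated in log space."""
    return sum(
        math.exp(math.lgamma(S + 1) - math.lgamma(j + 1) - math.lgamma(S - j + 1)
                 + j * math.log(eps) + (S - j) * math.log1p(-eps))
        for j in range(min(zeta, S + 1)))

def min_sample_size(zeta, eps, beta):
    """Smallest S satisfying (8); doubling, then bisection on a nonincreasing tail."""
    lo, hi = zeta - 1, zeta  # any S < zeta gives a tail of 1 > beta
    while binomial_tail(hi, zeta, eps) > beta:
        lo, hi = hi, 2 * hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if binomial_tail(mid, zeta, eps) > beta:
            lo = mid
        else:
            hi = mid
    return hi

def standard_zeta(k, nu, nw):
    """d_k from (10)."""
    return k * nu + nu * nw * k * (k - 1) // 2

def new_zeta(k, nu, nw, nf, rank_F):
    """Bound from Theorem 1: minimum of Propositions 2 and 1."""
    rho = nu * nw * k * (k - 1) // 2 + min(rank_F, k * nu)
    return min(nf * k * nw, rho)

# Case-study settings from Section V.
N, eps, beta, nu, nf, rank_F = 8, 0.2, 0.1, 4, 1, 1
totals = {}
for nw in (1, 2, 3):
    stages = range(1, N + 1)
    new = sum(min_sample_size(new_zeta(k, nu, nw, nf, rank_F), eps, beta) for k in stages)
    std = sum(min_sample_size(standard_zeta(k, nu, nw), eps, beta) for k in stages)
    totals[nw] = (new, std)
    print(f"n_w = {nw}: total S with new bound = {new}, with standard bound = {std}")
```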

B. Computational complexity

The difference in sample size also influences the computation time and memory usage when solving the sampled FHOCP in (7). Table II reports the average solver times, and Table III the memory allocation required to formulate the sampled problem, for different values of the prediction horizon $N \in \{8, 16, 24, 32, 40\}$. We note that prediction horizons of 40 hours are common in building control; in fact, [3] observed that longer horizons improve the performance of the MPC controller. The timings in Table II were obtained on a server running a 64-bit Linux operating system, equipped with a 16-core hyperthreaded Intel Xeon processor at 2.6 GHz and 128 GB of memory (RAM). We use the solver CPLEX, interfaced via MATLAB R2013a.

TABLE II
AVERAGE CPU TIMES TO SOLVE RMPC FOR $n_w = 1, 2, 3$ FOR DIFFERENT HORIZON LENGTHS $N$.

| Solver times | | $N = 8$ | $N = 16$ | $N = 24$ | $N = 32$ | $N = 40$ |
|---|---|---|---|---|---|---|
| $n_w = 1$ | new | 61 ms | 750 ms | 5.7 s | 26 s | 1.5 min |
| | standard | 560 ms | 16 s | 3.2 min | # | # |
| $n_w = 2$ | new | 450 ms | 4.2 s | 36 s | 5.5 min | 12 min |
| | standard | 2.0 s | 1.3 min | # | # | # |
| $n_w = 3$ | new | 480 ms | 11 s | 2.5 min | 27 min | 75 min |
| | standard | 4.1 s | 3.4 min | # | # | # |

#: Out of memory error from MATLAB.

TABLE III
REQUIRED MEMORY ALLOCATION FOR CONSTRAINT MATRICES IN RMPC FOR $n_w = 1, 2, 3$.

| Memory | $n_w = 1$, new | $n_w = 1$, standard | $n_w = 2$, new | $n_w = 2$, standard | $n_w = 3$, new | $n_w = 3$, standard |
|---|---|---|---|---|---|---|
| $N = 8$ | 6.2 MB | 60 MB | 21 MB | 187 MB | 44 MB | 383 MB |
| $N = 16$ | 81 MB | 1.4 GB | 287 MB | 5.0 GB | 614 MB | 10.6 GB |
| $N = 24$ | 373 MB | 9.8 GB | 1.3 GB | 36 GB | 2.87 GB | 77.5 GB |
| $N = 32$ | 1.1 GB | 39 GB | 4.0 GB | 146 GB | 8.77 GB | 320 GB |
| $N = 40$ | 2.6 GB | 116 GB | 9.6 GB | 437 GB | 21 GB | 963 GB |

Table II shows that our new bound dramatically reduces the computation time required to solve the sampled program. Moreover, due to the linear scaling of the sample size in the prediction horizon, we are now able to solve problems that previously could not be handled due to memory limitations, indicated by “#” in Table II. Table III explains why: for $N = 32$, for example, the sampled FHOCP based on the standard bound cannot be solved, even for $n_w = 1$, as its constraint matrices alone require 39 GB. On the other hand, the sampled FHOCP based on the new bound remains manageable in practice even for $n_w = 3$ (8.77 GB), allowing the problem to be solved on most hardware.

C. Conservatism of the solution

A poor bound on the sample size not only results in an

excessive number of samples, but also introduces conservatism into the solution, both in terms of violation probability

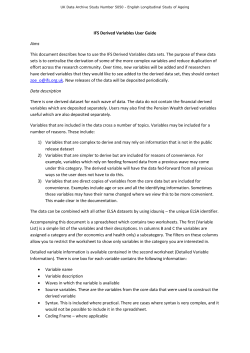

Fig. 1. Predefined βk (“red”), empirical estimate of βk using the new

bounds (“black”), and empirical estimate of βk using the standard bounds

(“blue”) for nw = 1.

TABLE IV

C OMPARISON OF EMPIRICAL COST FOR nw ∈ {1, 2, 3}.

Cost

N =8

nw = 1

new

standard

100.87

102.24

nw = 2

new

standard

103.92

107.70

nw = 3

new

standard

103.94

107.95

and cost. Fig. 1 depicts the empirical estimate of βk over

the prediction horizon for the case nw = 1. We observe

that the empirical confidence level using the new bound is

much closer to the predefined value of βk = 0.1 than the

standard bound. Since the new bound results in more frequent

violations (but remains smaller than a predefined acceptance

level), it also results in a lower cost, as displayed in Table

IV. From there we also see that the solution based on the

new sample size results in lower cost, allowing us to save

more energy in building control in all three cases.

VI. CONCLUSION

In this paper, we have proposed new bounds on the sample sizes for RMPC problems with additive uncertainty and polyhedral constraints. The obtained bound results in a sample size that scales linearly in the prediction horizon, instead of quadratically as for previous bounds. This leads to less conservative solutions and dramatically reduces the computational cost. The building control case study has demonstrated that previously intractable problems can now be solved.

APPENDIX

Proof of Proposition 1

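Let $k \in \{1, \dots, N\}$. The part “$\rho_k \le n_u n_w \frac{k(k-1)}{2} + k n_u =: d_k$” is the standard bound in (10) and follows immediately from causality of the system in (5): the decision variables $h_k, \dots, h_{N-1}$; $\{M_{k,j}\}_{j=0}^{k-1}, \dots, \{M_{N-1,j}\}_{j=0}^{N-2}$ do not constrain the $k$th stage.

For the second part, note that the $k$th constraint can be expressed as
$$g_k(M_k, \mathbf{h}_k) := F\left(A^k x + M_k \mathbf{w}_k + B_k \mathbf{h}_k + V_k \mathbf{v}_k + E_k \mathbf{w}_k\right) - f \le 0,$$
where $(M_k, \mathbf{h}_k)$ are decision variables. Clearly, for every $\tilde{\mathbf{h}}_k \in \operatorname{null}(F B_k) = \{\mathbf{h}_k : F B_k \mathbf{h}_k = 0\}$, we have $g_k(M_k, \mathbf{h}_k + \tilde{\mathbf{h}}_k) = g_k(M_k, \mathbf{h}_k)$ for all $M_k$ and all samples, so these directions remain unconstrained. Together with the $d_N - d_k$ decision variables that do not affect the $k$th stage, this gives $\dim(L_k) \ge (d_N - d_k) + \dim(\operatorname{null}(F B_k))$, where $\dim(\operatorname{null}(F B_k)) = k n_u - \operatorname{rank}(F B_k)$ by the Rank-Nullity Theorem. Thus,
$$\rho_k \le d_k - \left(k n_u - \operatorname{rank}(F B_k)\right) = n_u n_w \frac{k(k-1)}{2} + \operatorname{rank}(F B_k) \le n_u n_w \frac{k(k-1)}{2} + \operatorname{rank}(F),$$
which, combined with the first part, yields the claim.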
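The rank-nullity step can be illustrated numerically. The sketch below assumes numpy and a randomly generated system (hypothetical data, not the building model). For the system (1), it forms $B_k = [A^{k-1}B, \dots, AB, B]$, the coefficient of $\mathbf{h}_k$ in $x_k$, and checks that $\dim(\operatorname{null}(F B_k)) = k n_u - \operatorname{rank}(F B_k)$ and that shifting $\mathbf{h}_k$ along $\operatorname{null}(F B_k)$ leaves $F B_k \mathbf{h}_k$ unchanged. The helper names are our own.

```python
import numpy as np

def stacked_input_matrix(A, B, k):
    """B_k = [A^(k-1) B, ..., A B, B]: coefficient of [h_0; ...; h_{k-1}] in x_k."""
    return np.hstack([np.linalg.matrix_power(A, k - 1 - j) @ B for j in range(k)])

def null_space_basis(mat):
    """Orthonormal basis (as columns) of null(mat), computed from the SVD."""
    _, _, Vt = np.linalg.svd(mat)  # full_matrices=True, so Vt is square
    r = np.linalg.matrix_rank(mat)
    return Vt[r:].T

# Hypothetical data: nx = 3 states, nu = 4 inputs, one constraint row, stage k = 3.
rng = np.random.default_rng(1)
nx, nu, k = 3, 4, 3
A = rng.standard_normal((nx, nx))
B = rng.standard_normal((nx, nu))
F = np.array([[1.0, 0.0, 0.0]])

FBk = F @ stacked_input_matrix(A, B, k)  # shape (1, k * nu)
rank_FBk = np.linalg.matrix_rank(FBk)
Z = null_space_basis(FBk)
print(f"k*nu = {k * nu}, rank(F B_k) = {rank_FBk}, dim null(F B_k) = {Z.shape[1]}")

# Moving h_k along null(F B_k) does not change F B_k h_k, hence not the kth constraint.
h = rng.standard_normal(k * nu)
shift = Z @ rng.standard_normal(Z.shape[1])
unchanged = np.allclose(FBk @ (h + shift), FBk @ h)
```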

Proof of Proposition 2

Let $k \in \{1, \dots, N\}$, and let $\omega_k := \{\mathbf{w}_k^{(1)}, \dots, \mathbf{w}_k^{(S_k)}\} \subset \mathbb{R}^{k n_w}$ be a collection of $S_k$ i.i.d. samples. Furthermore, let the set $A_k[\omega_k] \subseteq \{1, \dots, S_k\}$ index the active samples of the $k$th stage. We first prove the following supporting statement.

Claim 1: For $F \in \mathbb{R}^{1 \times n_x}$ and $f \in \mathbb{R}$, we almost surely have $|A_k[\omega_k]| \le k n_w$.

Proof: We define $p(M_k) := F(M_k + E_k)$ and $q(\mathbf{h}_k) := F(A^k x + B_k \mathbf{h}_k + V_k \mathbf{v}_k) - f$, so that the $i$th sampled constraint at step $k$ reads
$$p(M_k)\, \mathbf{w}_k^{(i)} + q(\mathbf{h}_k) \le 0, \quad \forall i \in \{1, \dots, S_k\}. \tag{11}$$
Let us assume that $p(M_k) \neq 0$ for any $M_k$.² This implies that, for any pair $(M_k, \mathbf{h}_k)$, (11) can be interpreted as a halfspace in $\mathbb{R}^{k n_w}$, bounded by the hyperplane
$$H(M_k, \mathbf{h}_k) := \left\{ \mathbf{w}_k \in \mathbb{R}^{k n_w} : p(M_k)\, \mathbf{w}_k + q(\mathbf{h}_k) = 0 \right\}.$$
From linear algebra we know that $k n_w$ points in “general position” uniquely define a hyperplane in $\mathbb{R}^{k n_w}$. By Assumption 1 it follows that, with probability one, any collection of $k n_w$ drawn samples $\bar\omega := \{\bar{\mathbf{w}}_k^{(1)}, \dots, \bar{\mathbf{w}}_k^{(k n_w)}\}$ uniquely defines a hyperplane. Since hyperplanes are affine sets of dimension $k n_w - 1$, their (Lebesgue) measure in $\mathbb{R}^{k n_w}$ is zero. Therefore, the probability of another sample $\bar{\mathbf{w}}_k^{(k n_w + 1)}$ lying on the hyperplane defined by $\bar\omega$ is zero. Since $\omega_k$ contains only finitely many such collections, with probability one no $k n_w + 1$ samples of $\omega_k$ lie on a common hyperplane. In particular, this holds for the hyperplane $H(M_k^\star, \mathbf{h}_k^\star)$ associated with the solution $(M^\star, h^\star)$ of (7). By inspection of (11) and the definition of an active sample (Definition 2), all active samples lie on the hyperplane $H(M_k^\star, \mathbf{h}_k^\star)$. This concludes the proof of the claim.

Let now $F \in \mathbb{R}^{n_f \times n_x}$ and $f \in \mathbb{R}^{n_f}$. It follows from Claim 1 that, for each row of the constraint, with probability one there are at most $k n_w$ active samples. Therefore, with $n_f$ constraints, with probability one there are at most $n_f k n_w$ active samples. This concludes the proof.

² For any $M_k$, it can be verified that the condition $p(M_k) \neq 0$ is satisfied whenever $F E \neq 0$. For most practical systems, the latter holds, because $F E = 0$ would imply that the constraint is not affected by uncertainty. In this degenerate case, there is no need to consider uncertainty for the constraint. Thus, such a constraint can be ignored for the purpose of this paper.
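The general-position argument in Claim 1 can also be observed empirically. Assuming numpy, the sketch below draws i.i.d. Gaussian samples in $\mathbb{R}^{k n_w}$ (a distribution with a density, hence consistent with Assumption 1), fits the hyperplane through the first $k n_w$ of them via the SVD, and counts how many samples lie on it up to a numerical tolerance. The dimensions, seed, tolerance, and the helper name `hyperplane_through` are hypothetical choices; with probability one, only the $k n_w$ defining samples are found on the hyperplane.

```python
import numpy as np

def hyperplane_through(points):
    """Return (a, b) with a @ p + b = 0 for each row p of `points` (d points in R^d).

    For points in general position, [points, 1] has a one-dimensional null space,
    spanned by the last right-singular vector.
    """
    d = points.shape[1]
    augmented = np.hstack([points, np.ones((points.shape[0], 1))])  # shape (d, d + 1)
    _, _, Vt = np.linalg.svd(augmented)
    coeff = Vt[-1]
    return coeff[:d], coeff[d]

rng = np.random.default_rng(0)
k, nw = 3, 2
d = k * nw                               # dimension of the sampled vector w_k
samples = rng.standard_normal((500, d))  # i.i.d. draws from a density
a, b = hyperplane_through(samples[:d])
residuals = np.abs(samples @ a + b)
on_plane = int(np.sum(residuals < 1e-8))
print(f"{on_plane} of {len(samples)} samples lie on the hyperplane (d = {d})")
```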

Proof of Theorem 1

By means of Lemma 1 and [15, Theorem 4.7], we have $\zeta_k \le \min\{\rho_k, |A_k|\}$. By taking the bounds on $\rho_k$ and $|A_k|$ from Propositions 1 and 2, respectively, the statement follows immediately from [15, Theorem 5.1].

REFERENCES

[1] D. Q. Mayne, J. Rawlings, C. Rao, and P. Scokaert, “Constrained model predictive control: stability and optimality,” Automatica, vol. 36, pp. 789–814, 2000.

[2] F. Oldewurtel, A. Parisio, C. N. Jones, D. Gyalistras, M. Gwerder, V. Stauch, B. Lehmann, and M. Morari, “Use of model predictive control and weather forecasts for energy efficient building climate control,” Energy and Buildings, vol. 45, pp. 15–27, 2012.

[3] X. Zhang, G. Schildbach, D. Sturzenegger, and M. Morari, “Scenario-based MPC for energy-efficient building climate control under weather and occupancy uncertainty,” in Proc. of the IEEE European Control Conference, Zurich, Switzerland, 2013.

[4] M. V. Kothare, V. Balakrishnan, and M. Morari, “Robust constrained model predictive control using linear matrix inequalities,” Automatica, vol. 32, no. 10, pp. 1361–1379, 1996.

[5] M. Cannon, B. Kouvaritakis, and X. Wu, “Probabilistic constrained MPC for multiplicative and additive stochastic uncertainty,” IEEE Trans. on Automatic Control, vol. 54, no. 7, pp. 1626–1632, 2009.

[6] G. C. Calafiore and L. Fagiano, “Robust model predictive control via scenario optimization,” IEEE Trans. on Automatic Control, vol. 58, 2013.

[7] G. Calafiore and M. C. Campi, “The scenario approach to robust control design,” IEEE Trans. on Automatic Control, vol. 51, no. 5, pp. 742–753, 2006.

[8] M. C. Campi and S. Garatti, “The exact feasibility of randomized solutions of robust convex programs,” SIAM Journal on Optimization, vol. 19, no. 3, pp. 1211–1230, 2008.

[9] G. C. Calafiore, “Random convex programs,” SIAM Journal on Optimization, vol. 20, no. 6, pp. 3427–3464, 2010.

[10] X. Zhang, K. Margellos, P. Goulart, and J. Lygeros, “Stochastic model predictive control using a combination of randomized and robust optimization,” in Proc. of the IEEE Conf. on Decision and Control, Florence, Italy, 2013.

[11] P. J. Goulart, E. C. Kerrigan, and J. M. Maciejowski, “Optimization over state feedback policies for robust control with constraints,” Automatica, vol. 42, no. 4, pp. 523–533, 2006.

[12] X. Zhang, S. Grammatico, K. Margellos, P. Goulart, and J. Lygeros, “Randomized nonlinear MPC for uncertain control-affine systems with bounded closed-loop constraint violations,” in Proc. of the IFAC World Congress, Cape Town, South Africa, 2014.

[13] S. Grammatico, X. Zhang, K. Margellos, P. Goulart, and J. Lygeros, “A scenario approach to non-convex control design: preliminary probabilistic guarantees,” in Proc. of the IEEE American Control Conference, Portland, Oregon, USA, 2014.

[14] S. Grammatico, X. Zhang, K. Margellos, P. Goulart, and J. Lygeros, “A scenario approach for non-convex control design,” IEEE Trans. on Automatic Control (submitted), 2014.

[15] G. Schildbach, L. Fagiano, and M. Morari, “Randomized solutions to convex programs with multiple chance constraints,” SIAM Journal on Optimization (in press), 2014.

[16] T. Alamo, R. Tempo, and A. Luque, “On the sample complexity of randomized approaches to the analysis and design under uncertainty,” in Proc. of the IEEE American Control Conference, 2010.

[17] X. Zhang, S. Grammatico, G. Schildbach, P. Goulart, and J. Lygeros, “On the sample size of random convex programs with structured dependence on the uncertainty,” Automatica (submitted), 2014.

[18] F. Oldewurtel, C. N. Jones, and M. Morari, “A tractable approximation of chance constrained stochastic MPC based on affine disturbance feedback,” in Proc. of the IEEE Conference on Decision and Control, 2008, pp. 4731–4736.
