CS 140 Final Exam Sample Solutions Summer 2008 Bob Lantz


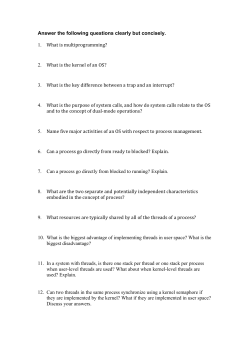

1. Synchronization (20 points)

This is actually a kind of interesting problem, and many implementations are possible; ensuring correctness, deadlock-free operation, and fairness can be hard!

For this question, you just had to ensure that the various requirements were met.

Essentially, write_lock() must lock out writers and readers, and read_lock() must lock out writers, yet guarantee that writers are not starved. However, it's OK for your answer to give priority to writers (and possibly starve readers).

Here's a straightforward implementation using yield():

    typedef struct {
        sema mutex;            /* protects readers and writers */
        int readers, writers;  /* active readers; writers holding or waiting */
    } mlock;

    void mlock_init(mlock *m) {
        sema_init(&m->mutex, 1);
        m->readers = 0;
        m->writers = 0;
    }

    void read_lock(mlock *m) {
        /* Back off until no writer holds or wants the lock */
        do {
            P(&m->mutex);
            if (m->writers == 0) break;
            V(&m->mutex);
            yield();
        } while (1);
        m->readers++;
        V(&m->mutex);
    }

    void read_unlock(mlock *m) {
        P(&m->mutex);
        m->readers--;
        V(&m->mutex);
    }

    void write_lock(mlock *m) {
        P(&m->mutex);
        m->writers++;  /* announce ourselves so new readers back off */
        while (m->readers != 0) {
            V(&m->mutex);
            yield();
            P(&m->mutex);
        }
        /* Return still holding mutex: this excludes readers and other writers */
    }

    void write_unlock(mlock *m) {
        m->writers--;
        V(&m->mutex);
    }

A version without using yield() might look something like this:

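    typedef struct {
        sema read;    /* held by a writer; readers pass through it briefly */
        sema write;   /* held by a writer, or by the group of active readers */
        sema count;   /* protects readers */
        int readers;
    } mlock;

    void mlock_init(mlock *m) {
        sema_init(&m->read, 1);
        sema_init(&m->write, 1);
        sema_init(&m->count, 1);
        m->readers = 0;
    }

    void write_lock(mlock *m) {
        P(&m->read);   /* block new readers, so writers are not starved */
        P(&m->write);  /* wait for active readers to drain */
    }

    void write_unlock(mlock *m) {
        V(&m->read);
        V(&m->write);
    }

    void read_lock(mlock *m) {
        P(&m->read);
        P(&m->count);
        if (m->readers == 0)
            P(&m->write);  /* first reader locks out writers */
        m->readers++;
        V(&m->read);
        V(&m->count);
    }

    void read_unlock(mlock *m) {
        P(&m->count);
        m->readers--;
        if (m->readers == 0)
            V(&m->write);  /* last reader lets writers in */
        V(&m->count);
    }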
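To experiment with the yield()-free version outside Pintos, one option is to map the exam's sema, sema_init(), P() and V() onto POSIX semaphores. This is a minimal sketch under that assumption (the mapping and the EINTR retry loop are ours, not part of the exam); the lock functions themselves follow the version above.

```c
#include <assert.h>
#include <errno.h>
#include <semaphore.h>

/* Assumed mapping of the exam's semaphore API onto POSIX semaphores */
typedef sem_t sema;

static void sema_init(sema *s, unsigned value) {
    int rc = sem_init(s, 0, value);  /* 0: shared between threads, not processes */
    assert(rc == 0);
    (void)rc;
}

static void P(sema *s) {
    while (sem_wait(s) != 0 && errno == EINTR) {
        /* retry if interrupted by a signal */
    }
}

static void V(sema *s) { sem_post(s); }

typedef struct {
    sema read, write, count;
    int readers;
} mlock;

static void mlock_init(mlock *m) {
    sema_init(&m->read, 1);
    sema_init(&m->write, 1);
    sema_init(&m->count, 1);
    m->readers = 0;
}

static void write_lock(mlock *m)   { P(&m->read); P(&m->write); }
static void write_unlock(mlock *m) { V(&m->read); V(&m->write); }

static void read_lock(mlock *m) {
    P(&m->read);
    P(&m->count);
    if (m->readers == 0)
        P(&m->write);  /* first reader excludes writers */
    m->readers++;
    V(&m->read);
    V(&m->count);
}

static void read_unlock(mlock *m) {
    P(&m->count);
    m->readers--;
    if (m->readers == 0)
        V(&m->write);  /* last reader readmits writers */
    V(&m->count);
}
```

Because sem_trywait() fails with EAGAIN when a semaphore's value is zero, a single thread can check the exclusion properties without blocking: while readers hold the lock, write is taken, and while a writer holds it, read is taken. A real stress test would add threads.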

2. Virtual Memory (25 points)

a) Page Directory - this can be one of two things: first, it is what Intel calls the top level of the page table hierarchy (so its function is reducing page table size by using a level of indirection); second, it is what Pintos calls the per-process page table; in this sense, it abstracts the x86 page table and manages virtual-to-physical address mappings.

SPT - per-process structure storing additional information that is not in the page table/directory, such as whether a page is swapped out, zero-filled, a code page, a mapped page, a shared page, etc.; used to determine how to fill a page on a page fault, and possibly also for page sharing and for deallocating pages when a process exits or unmaps a file. Not actually necessary if you store this information in the page directory.
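A supplemental page table entry and the "how do I fill this page?" decision might look roughly like the following sketch. All names (struct spt_entry, enum page_kind, spt_fill_frame, swap_area) are hypothetical; a static array stands in for the swap device and a byte pointer for file contents, whereas a Pintos version would more likely read from the swap block device and use file_read_at().

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define PGSIZE 4096
#define SWAP_SLOTS 4   /* hypothetical, tiny swap area */

/* Where a page's contents come from on a fault */
enum page_kind { PAGE_ZERO, PAGE_FILE, PAGE_SWAP };

struct spt_entry {
    uintptr_t upage;          /* user virtual page address */
    enum page_kind kind;
    bool writable;
    const uint8_t *file_data; /* PAGE_FILE: stand-in for file contents at the page's offset */
    size_t read_bytes;        /* PAGE_FILE: bytes to read; the rest is zeroed */
    size_t swap_slot;         /* PAGE_SWAP: slot holding the evicted page */
};

/* Stand-in for the swap device */
static uint8_t swap_area[SWAP_SLOTS][PGSIZE];

/* Fill a freshly allocated frame according to the SPT entry */
static void spt_fill_frame(const struct spt_entry *e, uint8_t *frame) {
    switch (e->kind) {
    case PAGE_ZERO:
        memset(frame, 0, PGSIZE);
        break;
    case PAGE_FILE:
        assert(e->read_bytes <= PGSIZE);
        if (e->read_bytes > 0)
            memcpy(frame, e->file_data, e->read_bytes);
        memset(frame + e->read_bytes, 0, PGSIZE - e->read_bytes);
        break;
    case PAGE_SWAP:
        assert(e->swap_slot < SWAP_SLOTS);
        memcpy(frame, swap_area[e->swap_slot], PGSIZE);
        break;
    }
}
```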

FT - global structure to keep track of all physical pages (i.e. frames) in the system, who is using them, etc.; used to allocate and evict physical pages.

Swap Table - in Pintos, this may just be a bitmap; keeps track of allocated swap pages.
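As a sketch of the swap table as a bitmap: one bit per page-sized swap slot, with first-fit allocation. The names and the 64-slot size are hypothetical; inside Pintos one would more likely use the kernel's own bitmap library (lib/kernel/bitmap.h) than hand-rolled bit twiddling.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SWAP_SLOTS 64         /* hypothetical swap size, in page-sized slots */
#define SWAP_ERROR SIZE_MAX   /* returned when swap is full */

static uint8_t swap_map[SWAP_SLOTS / 8];  /* bit i set => slot i in use */

/* Find the first free slot, mark it used, and return its index */
static size_t swap_alloc(void) {
    for (size_t i = 0; i < SWAP_SLOTS; i++) {
        if (!(swap_map[i / 8] & (1u << (i % 8)))) {
            swap_map[i / 8] |= (uint8_t)(1u << (i % 8));
            return i;
        }
    }
    return SWAP_ERROR;
}

/* Release a slot, e.g. after its page is read back in or its process exits */
static void swap_free(size_t slot) {
    assert(slot < SWAP_SLOTS && (swap_map[slot / 8] & (1u << (slot % 8))));
    swap_map[slot / 8] &= (uint8_t)~(1u << (slot % 8));
}
```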

b) The VMM needs to keep track of PPN -> MPN mappings for each VM. This doesn't require another level of indirection, because the TLB is actually a cache of the shadow page table, which maps VPNs directly to MPNs.
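The composition in part b) can be sketched with three flat arrays (real page tables are multi-level, and all names here are hypothetical): the guest's page table maps VPN to PPN, the VMM's per-VM map takes PPN to MPN, and the shadow page table caches the composed VPN to MPN mapping, which is all the hardware and TLB ever see.

```c
#include <assert.h>
#include <stdint.h>

#define NPAGES 8                 /* tiny address spaces, for illustration */
#define PTE_INVALID UINT32_MAX   /* "not mapped" marker */

static uint32_t guest_pt[NPAGES];   /* guest OS page table: VPN -> PPN */
static uint32_t pmap[NPAGES];       /* VMM's per-VM map: PPN -> MPN */
static uint32_t shadow_pt[NPAGES];  /* shadow table used by hardware/TLB: VPN -> MPN */

/* Recompute one shadow entry, e.g. after the VMM traps a guest page table write */
static void shadow_update(uint32_t vpn) {
    assert(vpn < NPAGES);
    uint32_t ppn = guest_pt[vpn];
    if (ppn == PTE_INVALID) {
        shadow_pt[vpn] = PTE_INVALID;  /* guest has no mapping: hardware must fault */
    } else {
        assert(ppn < NPAGES);
        shadow_pt[vpn] = pmap[ppn];    /* compose the two mappings once, here */
    }
}
```

Because the composition happens when the shadow entry is filled, a TLB miss walks only the shadow table, so there is no extra level of indirection at translation time.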

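c) Since the rates are per access, we can calculate AMAT as a weighted average as follows:

Cache:

$$0.9 \times 1\ \text{ns} + 0.1 \times 10\ \text{ns} = 1.9\ \text{ns}$$

TLB (note there's no TLB hit penalty):

$$\frac{500\ \text{cycles}}{2 \times 10^{9}\ \text{cycles/s}} = 250\ \text{ns}, \qquad 0.01 \times 250\ \text{ns} = 2.5\ \text{ns}$$

Page faults:

$$0.020\ \text{s} \times 10^{9}\ \text{ns/s} = 2 \times 10^{7}\ \text{ns}, \qquad 0.001 \times 2 \times 10^{7}\ \text{ns} = 2 \times 10^{4}\ \text{ns} = 20{,}000\ \text{ns}$$

$$\text{AMAT} = 1.9 + 2.5 + 20{,}000 = 20{,}004.4\ \text{ns} = 20.0044\ \mu\text{s}$$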

Even if we only make one memory access per instruction, we'll be limited to about 50 KIPS (1 / 20.0044 µs is roughly 50,000 instructions per second), because of the excessive page faults.
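The same arithmetic as a small C function, so the parameters can be varied (for example, to see how far the page fault rate must fall before the cache and TLB terms matter). The parameter names are ours; the values in the usage are the ones from the calculation above.

```c
#include <assert.h>

/* Per-access AMAT in ns, following the weighted-average breakdown above */
static double amat_ns(double cache_hit_rate, double hit_ns, double miss_ns,
                      double tlb_miss_rate, double tlb_miss_cycles, double clock_hz,
                      double pf_rate, double pf_seconds) {
    double cache = cache_hit_rate * hit_ns + (1.0 - cache_hit_rate) * miss_ns;
    double tlb = tlb_miss_rate * (tlb_miss_cycles / clock_hz * 1e9); /* no hit penalty */
    double pf = pf_rate * (pf_seconds * 1e9);
    return cache + tlb + pf;
}

/* Instructions per second if each instruction makes exactly one memory access */
static double ips_one_access(double amat) { return 1e9 / amat; }
```

With amat_ns(0.9, 1.0, 10.0, 0.01, 500.0, 2e9, 0.001, 0.020) the result is about 20,004.4 ns, and ips_one_access() of that is roughly 49,989 instructions per second, i.e. about 50 KIPS.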

The interesting thing to note is that even though our TLB miss and page fault rates are much lower than our cache miss rate, they contribute much more to the memory latency of this (somewhat broken) computer system! Page faults dominate everything.

Clearly the best way to improve the performance of this system is to reduce the time spent servicing page faults, most likely by getting more memory! Alternatively, you could get a faster disk, defragment the swap file, shrink the workload, increase the page cache relative to buffer cache size, or improve the page replacement algorithm.

d) For each of these (mostly lousy) replacement algorithms, we can easily achieve 1 page fault per reference.

You could either list the address references, e.g. 0x1000 0x2000 0x3000 0x4000 …, or the page references, e.g. 1 2 3 4 5.

2. LRU: something like 123456123456… (achieves 1 PF/ref)

3. MRU: something like 123456565656… (achieves 1 PF/ref)

4. MFU: something like 123456161616… (also achieves 1 PF/ref)

5. FIFO: 123456123456…
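These strings can be checked with a small simulator. This sketch assumes 5 physical frames and 6 virtual pages (which is what the 1 to 6 page strings suggest) and covers FIFO, LRU and MRU; MFU is left out because its behavior on ties depends on a tie-breaking rule the solution doesn't specify. The function and enum names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

enum policy { FIFO, LRU, MRU };

#define MAX_FRAMES 16

/* Count page faults for reference string refs[0..n) with nframes frames */
static int count_faults(enum policy p, const int *refs, size_t n, int nframes) {
    int page[MAX_FRAMES];      /* page held by each frame */
    size_t loaded[MAX_FRAMES]; /* time the page was loaded (FIFO) */
    size_t used[MAX_FRAMES];   /* time of last reference (LRU/MRU) */
    int nused = 0, faults = 0;
    assert(nframes > 0 && nframes <= MAX_FRAMES);

    for (size_t t = 0; t < n; t++) {
        int hit = -1;
        for (int f = 0; f < nused; f++)
            if (page[f] == refs[t]) { hit = f; break; }
        if (hit >= 0) { used[hit] = t; continue; }

        faults++;
        int victim;
        if (nused < nframes) {
            victim = nused++;  /* a free frame is available */
        } else {
            victim = 0;
            for (int f = 1; f < nframes; f++) {
                if ((p == FIFO && loaded[f] < loaded[victim]) ||
                    (p == LRU && used[f] < used[victim]) ||
                    (p == MRU && used[f] > used[victim]))
                    victim = f;
            }
        }
        page[victim] = refs[t];
        loaded[victim] = used[victim] = t;
    }
    return faults;
}
```

Running MRU on the LRU/FIFO string instead faults only 7 times in 12 references, which illustrates that each worst-case string is specific to its algorithm.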

e)

1. Simple: just requires an add (map) and a compare (check); fast: the add and compare can be done in parallel.

2. The biggest problem with extent allocation is external fragmentation (though internal fragmentation can also be an issue, since you can only release regions on the edge of an extent!)

3. They might have tried periodic compaction and/or swapping.
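Part 1's point, that base-and-bounds translation is just an add and a compare, looks like this in C. The register structure and function name are hypothetical; in hardware, the compare and the add are independent of each other, which is why they can proceed in parallel.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical per-process relocation registers */
typedef struct {
    uint32_t base;   /* physical start of the process's region */
    uint32_t bound;  /* size of the region in bytes */
} bb_regs;

/* Translate vaddr; returns false (an addressing fault) if it is out of bounds */
static bool bb_translate(const bb_regs *r, uint32_t vaddr, uint32_t *paddr) {
    bool ok = vaddr < r->bound;     /* check */
    uint32_t pa = r->base + vaddr;  /* map (independent of the check) */
    if (ok)
        *paddr = pa;
    return ok;
}
```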

3. File Systems (20 points)

a) FFS splits files larger than 1 MB across cylinder groups, which are spaced across the disk. It does this to leave space for file growth or for additional, smaller files within the same cylinder group (e.g. to preserve locality by co-locating these files' inodes, data blocks, and directory entries, thereby improving prefetching and reducing seeks during file access and directory enumeration).

b) Stanford students (and everyone else) tend to have large media files. These files are both (a) larger than 1 MB (typically at least 3-4 MB for an MP3) and (b) files that require reasonably fast sequential access for smooth playback. While MP3s can easily be cached in memory (cf. iPods), movies have much more data. Typical media players (e.g. WMP or QuickTime Player) don't handle this well, as you can observe whenever your file system is full or fragmented on Windows or OS X. If they buffered more data for smooth playback, this could reduce the memory available to other programs on the system, increasing paging, reducing caching, etc.

c) Sequential or random write performance is great because the log is always written sequentially. Read performance is a bit dicey if the file was written randomly, but sequential read performance of sequentially written files is great. FFS, as we've noted above, splits large files, so even sequential writes and reads of large files require seeks. Basically, LFS's claim to fame is being able to max out the write performance of hard drives. However, for most people read performance is more important than write performance, which is perhaps why LFS itself hasn't caught on, although write-ahead logging has.

d) Deleting and/or modifying files leads to fragmentation, both of the log (i.e. gaps in the log) and of the files themselves; when the log can no longer be written sequentially, the performance benefits of sequential logging (and writes) are lost.

e) What might LFS do to mitigate this problem? LFS has (or had) a defragmenter/compressor program (the segment cleaner) which runs continuously to coalesce fragmented files and guarantee that there is always sequential free space on disk for the log. (Also, the log/filesystem was divided into multiple contiguous segments which could be defragmented/coalesced independently, and one segment could be copied/coalesced into another, empty segment.)
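The segment-cleaning idea can be sketched as: pick the segment with the fewest live blocks, copy its live blocks into an empty segment, and the victim becomes free space for sequential log writes. This is a toy model under stated assumptions (fixed-size segments holding block IDs, a greedy victim policy, no inode or segment-summary updates, hypothetical names); the real LFS cleaner is considerably more involved.

```c
#include <assert.h>
#include <stddef.h>

#define NSEGS 4
#define SEG_BLOCKS 8
#define DEAD (-1)   /* slot holds a deleted or overwritten block */

typedef struct {
    int blocks[SEG_BLOCKS];  /* block IDs, or DEAD */
    int used;                /* slots written so far; 0 means the segment is free */
} segment;

static int live_count(const segment *s) {
    int n = 0;
    for (int i = 0; i < s->used; i++)
        if (s->blocks[i] != DEAD)
            n++;
    return n;
}

/* Greedy policy: the written segment with the fewest live blocks, or -1 */
static int pick_victim(const segment segs[], int nsegs) {
    int best = -1, best_live = SEG_BLOCKS + 1;
    for (int i = 0; i < nsegs; i++) {
        if (segs[i].used == 0)
            continue;
        int live = live_count(&segs[i]);
        if (live < best_live) {
            best = i;
            best_live = live;
        }
    }
    return best;
}

/* Copy the victim's live blocks into empty segment dst, then free the victim */
static void clean_segment(segment *victim, segment *dst) {
    assert(dst->used == 0);
    for (int i = 0; i < victim->used; i++)
        if (victim->blocks[i] != DEAD)
            dst->blocks[dst->used++] = victim->blocks[i];
    victim->used = 0;  /* now contiguous free space for the log */
}
```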

4. Networking (20 points)

b) This is fairly straightforward, and is very similar to what was on the lecture slide!

[Figure: network of hosts A, B, C, D and routers R1, R2, R3, with numbered router ports on each link]

R1: (B,3) (C,3) (D,2) (A,1)

R2: (B,1) (C,3) (A,2) (D,2)

R3: (C,2) (B,1) (A,1) (D,1)
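Reading each pair as (destination, outgoing port), which is our interpretation of the tables, datagram forwarding at a router is just a lookup on the packet's destination. A minimal sketch using R1's table (struct and function names are hypothetical):

```c
#include <assert.h>
#include <stddef.h>

/* One forwarding entry: destination host -> outgoing port */
struct route {
    char dest;
    int port;
};

/* R1's table from part b) */
static const struct route r1_table[] = {
    {'B', 3}, {'C', 3}, {'D', 2}, {'A', 1},
};

/* Return the outgoing port for dest, or -1 if there is no route */
static int forward(const struct route *table, size_t n, char dest) {
    for (size_t i = 0; i < n; i++)
        if (table[i].dest == dest)
            return table[i].port;
    return -1;
}
```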

c) Very similar; note that inbound paths are just the reverse of the outbound paths, so a real network using this design could store just the single routes and look them up backwards as well as forwards! However, for this question I wanted you to explicitly write both routes. (Note this is a bit different from networks like ATM, where the virtual circuit ID is a link-local identifier. This is sort of a hybrid to show that the two kinds of networks aren't necessarily as far apart as you might imagine.)

[Figure: the same network as in part b)]

R1: (1,1,3) (3,1,1) (1,2,3) (3,2,1) (1,3,2) (2,3,1)

R2: (2,1,1) (1,1,2) (2,3,3) (3,3,2)

R3: (1,2,2) (2,2,1)
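By contrast, a circuit-switched router looks up the incoming port and circuit ID rather than the destination. Assuming each triple above is (incoming port, circuit ID, outgoing port), which fits the remark that inbound paths reverse the outbound ones (e.g. R1's (1,1,3) and (3,1,1)), R1's forwarding step might be sketched as follows (names hypothetical):

```c
#include <assert.h>
#include <stddef.h>

/* One circuit entry (assumed meaning): incoming port, circuit ID, outgoing port */
struct vc_entry {
    int in_port, vc, out_port;
};

/* R1's table from part c) */
static const struct vc_entry r1_vc[] = {
    {1, 1, 3}, {3, 1, 1}, {1, 2, 3}, {3, 2, 1}, {1, 3, 2}, {2, 3, 1},
};

/* Return the outgoing port for a packet on (in_port, vc), or -1 if no circuit */
static int vc_forward(const struct vc_entry *t, size_t n, int in_port, int vc) {
    for (size_t i = 0; i < n; i++)
        if (t[i].in_port == in_port && t[i].vc == vc)
            return t[i].out_port;
    return -1;
}
```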

d) No, this doesn't invalidate the end-to-end argument, because a) it's really a workaround for TCP (or its implementations) backing off too much in the face of loss, and b) it actually demonstrates the end-to-end argument, because non-TCP protocols (e.g. UDP) still end up getting retransmitted, which may be exactly the wrong thing for protocols like VoIP or streaming media, which prefer fast, lossy transmission to delayed, retransmitted transmission. (Better to have a brief click or duplicated frame than steadily increasing delay, for example.)

e) I sort of gave this one away, but I wanted you to consider more possibilities! There are many things that can go wrong with Stanford's (and the RIAA's) assumptions, for example:

User ID/auth: it could be someone else logged in as you (or your roommate using your machine); also, what about multiuser systems (e.g. cluster machines)? Stanford just ignores these complaints, because you can't tell which user on a multiuser system made the connection; even on a cluster machine, an old program started by someone else might still be running when you are logged in, etc.

OS: maybe someone hacked into your system! I've definitely seen malware run file-sharing programs.

IP: Maybe someone else was using the same IP address! This happens frequently. With roaming, IP addresses are dynamic. Some OSes cache DHCP leases and don't properly reconfigure; erroneous static configurations are also possible.

Network security: Maybe someone is spoofing your IP address on purpose! Or just using a random one because they want to get on the network. Or because they want to hide their tracks. (I've seen this for file-sharers.)

MAC address: MAC addresses are often reconfigurable/rewritable. This is a useful feature (e.g. upgrade your network card, keep the same MAC), but it can also be used to subvert MAC address-based authentication or user/machine tracking. I've seen multiple bogus MAC addresses, as well as very unlucky people who've had their MAC addresses spoofed and gotten kicked off Stanford's network for file sharing and/or malware.

Naming/Content: There's no guarantee that a file (e.g. crazy.mp3) is what you think it is (e.g. the song by Gnarls Barkley). It might not even be an MP3. In one instance, a professor's recorded lectures resulted in a DMCA complaint. In a similar instance, hackers tried to download the pictures of people named “bill”, expecting that they would contain billing information.

5. Security (20 points)

a) This was a problem because it enabled any local user to run any program as root, or to get a root shell, etc.

This could be cited as an example of several sorts of problems, including:

- Local privilege escalation: while it was not directly remotely exploitable (you had to be logged on to the machine, and possibly logged on to the console as well), it was an easy way of getting root access on a cluster machine, e.g. in Tresidder or a dorm cluster. Local privilege escalations are problematic because they can often be used in remote attacks: for example, a remote exploit can get local non-root access and then make use of a local root exploit; or a local non-root user can be tricked into executing a program which uses the local root exploit; or a buggy program (e.g. a web browser) can be compromised and then take advantage of the local flaw.

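- (Unexpected) Feature interaction: consider that Mac OS X includes features from classic Mac OS (e.g. AppleScript, AppleEvents) and from UNIX (setuid programs, shell scripts); ARDAgent, a setuid-root program that also accepted AppleEvents, sat right at that intersection.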

- Ambient authority: unfortunately, when executing the “do shell script” event, ARDAgent made use of its ambient (i.e. setuid root) authority, rather than the authority/privileges of the requesting user.

- Confused Deputy: exec(“/usr/bin/evil/whatever”) has no idea who is making the request; is it a trusted user, or some random unprivileged user?

Some things that could be done to mitigate the problem include:

1. Disable AppleEvents

2. Require authentication for AppleEvents

3. Do not allow unprivileged programs/users to send events to privileged/setuid programs

4. Disable the “do shell script” AppleEvent: for ARDAgent, for setuid programs, or for the entire system

5. Implement mandatory access control (MAC) and/or capabilities

6. Drop privilege before executing a shell script or Apple Event (make sure this doesn't open up other security holes!)

I believe what Apple actually ended up doing (you can check this online) was disabling the loading of scripting extensions (and certain other extensions?) in setuid programs.

b) (Perhaps you should have started worrying when you noticed SecureWareSoftCorp was not actually a corporation [as the name seems to imply], but was actually a Limited Liability Company!)

It's not entirely clear that 65,536-bit encryption based on a very small password is going to be any more secure than 128-bit encryption. Is everyone going to enter some 65,536-bit secret key from a USB device or something? Or perhaps use multi-factor authentication involving a USB key and a password? That might be a bit more secure, and make passwords harder to crack. But even if it is, making passwords harder to crack does not really solve the major security problems with operating systems. For example, all of the above problems (local root exploits, ambient authority, confused deputy) and various security flaws we discussed in class (TOCTTOU, etc.) have nothing to do with cracking passwords. Most Windows/Linux/OS X flaws are not related to weak password (or other) encryption. If SecurePintos encrypts everything using your secret key, the key must still be kept secret (e.g. if it's on a USB key, in memory, written down, or on disk, you may still be vulnerable).
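The point about small passwords can be made concrete: if the key is derived from a password, an attacker can brute-force the password instead of the key, so the effective strength is set by the number of possible passwords, not by the 65,536-bit key length. A quick sketch (function names and the password policies in the usage are hypothetical examples):

```c
#include <assert.h>
#include <stdint.h>

/* Number of possible passwords of exactly `length` symbols from an alphabet */
static uint64_t keyspace(uint64_t alphabet, unsigned length) {
    uint64_t n = 1;
    assert(alphabet > 0);
    for (unsigned i = 0; i < length; i++) {
        assert(n <= UINT64_MAX / alphabet);  /* this sketch only handles 64-bit results */
        n *= alphabet;
    }
    return n;
}

/* floor(log2(n)) for n > 0: the brute-force "bits of strength" of a keyspace */
static unsigned floor_log2(uint64_t n) {
    unsigned bits = 0;
    assert(n > 0);
    while (n >>= 1)
        bits++;
    return bits;
}
```

An 8-character lowercase password gives 26^8 = 208,827,064,576 candidates, fewer than 2^38, so about 37 bits of strength; even 8 printable ASCII characters (95^8) give only about 52 bits, far below 128.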

c) Changing the name of the root user to “administrator” will not really make the system more secure. However, having finer-grained permissions might, so you can perhaps argue for some marginal security benefit.

Getting rid of setuid() will actually make it harder for administrator programs to drop privileges. This could make things more secure (e.g. admin programs won't leak information to lower privilege levels), but it also might mean that they would run longer with higher privileges and be exploitable for longer periods. Moreover, it's no longer clear how programs like login would work.

Not inheriting permissions across fork() or exec() is also good, but this might also break useful programs… like the shell. Root/administrator shells are security holes, but they're also incredibly useful: they're the way you get things done. This would likely result in a more secure but very difficult-to-use system, since on a Unix system most privileges are assigned dynamically (via setuid() + fork()/exec()!). Privileges might instead have to be assigned statically at startup (e.g. the kernel could start up user shells based on a configuration file), or perhaps login would be part of the kernel. Making it harder to do things at user level might increase kernel complexity and decrease reliability or security.

d) It might be true if the VMM runs on top of SecurePintos and interposes on guest OS activities. Moreover, the VMM might introspect into or monitor the encapsulated OS for compromise or malicious behavior.

It might not be any improvement at all if SecurePintos and the other guest OS run at the same level in the system. In this case, security bugs in the other guest OS (Windows, Linux, etc.) would still have the same severity.

6. Virtual machines (25 points)

a) Here are some straightforward implementations (note there is a spurious word “registers” in the declaration of R which should not be there, so you could call the register array either R or registers):

    /* Add source registers and store result in destination register */
    void add(int dest_reg, int source_reg, int source_reg1) {
        R[dest_reg] = R[source_reg] + R[source_reg1];
    }

    /* Load a word at (source_reg + offset) into dest_reg */
    void load(int dest_reg, int source_reg, short offset) {
        uint32 paddr = translate_virtual(R[source_reg] + offset);
        R[dest_reg] = *((int*)&MEM[paddr]);
    }

    /* Call: push the PC onto the stack, and jump to the destination */
    void call(int routine_addr) {
        SP -= 4;
        *((int*)&MEM[translate_virtual(SP)]) = PC;
        PC = routine_addr;
    }

    /* Return: return to the function that called us;
       note this routine has to be renamed to compile in C (return is a keyword) */
    void Return(void) {
        PC = *((int*)&MEM[translate_virtual(SP)]);
        SP += 4;
    }
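To exercise these routines, they need the machine state the exam declared. The sketch below supplies hypothetical stand-ins (a 16-entry R, a 4 KB MEM, 32-bit SP and PC, and an identity translate_virtual with a bounds check) and uses memcpy() instead of pointer casts to sidestep alignment and aliasing issues; otherwise the logic matches the routines above.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef uint32_t uint32;

/* Hypothetical stand-ins for the exam's machine state */
#define NREGS 16
#define MEM_SIZE 4096
static int R[NREGS];
static uint8_t MEM[MEM_SIZE];
static uint32 SP, PC;

/* Identity mapping with a bounds check; a real one would walk page tables */
static uint32 translate_virtual(uint32 vaddr) {
    assert(vaddr <= MEM_SIZE - sizeof(int));
    return vaddr;
}

static void add(int dest_reg, int source_reg, int source_reg1) {
    R[dest_reg] = R[source_reg] + R[source_reg1];
}

static void load(int dest_reg, int source_reg, short offset) {
    uint32 paddr = translate_virtual((uint32)(R[source_reg] + offset));
    memcpy(&R[dest_reg], &MEM[paddr], sizeof(int));
}

static void call(int routine_addr) {
    SP -= 4;
    uint32 ret = PC;  /* return address pushed on the guest stack */
    memcpy(&MEM[translate_virtual(SP)], &ret, sizeof ret);
    PC = (uint32)routine_addr;
}

static void Return(void) {
    memcpy(&PC, &MEM[translate_virtual(SP)], sizeof PC);
    SP += 4;
}
```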

b) Binary translation/just-in-time compilation could batch instructions together, avoiding the overhead of a call/return for each instruction; also, register values can be cached and reused, and the BT/JIT can possibly optimize the (source and/or translated) code dynamically.

c) A VMM can achieve dramatically improved performance by running these instructions directly on the CPU, with much faster fetch/decode/execute/commit. These are all user-level instructions, so they will run at full speed under a VMM.

d) Traps! Traps require at least two hardware traps (i.e. context switches) and also require software emulation. System calls are a prime example. Privileged instructions also require hardware traps/context switches as well as software emulation.

e) Traps and privileged instructions can actually run faster on an interpreter or binary translator, because no hardware traps/mode switches/context switches are required; they basically call the emulation functions directly. There's a paper from VMware comparing binary translation to hardware virtualization that describes this in detail.

7. The Big Picture (10 points)

a) No, OS is not merely a subset of HCI, because operating systems increase the functionality as well as the usability of computer systems. You can really do what you couldn't (practically) do before. Perhaps your HCI friend would argue that everything's a Turing machine, or that you could program the hardware directly, but in general HCI focuses on regular users rather than programmers. OSes do make computer systems easier to program, however.

There are many ways in which OSes make machines more usable. Consider:

responsiveness: more responsive systems are more usable

reliability: a system is not usable if it crashes all the time, or if it loses your data

security: a system is not usable if you can't trust it not to disclose your data; a system is not usable if hackers can take control of it and slow it down for you while they use it for nefarious purposes.

Adding more features to the OS can make it easier to perform certain tasks with a system, or let you do something which you couldn't do before. The former is usability, while the latter is more functionality.

b) You can argue either way. Certainly there are many ways in which computers have benefited humanity; improving communication is perhaps the main contribution: e-mail, the web, word processing, and desktop publishing are revolutionary technologies which have enabled people to communicate at a distance, to share information, to write books, to create newspapers, etc. Games and computer entertainment seem frivolous but can actually make people happier, and can improve hand-eye coordination for surgeons, for example. Robots can complete dangerous, boring, difficult, or exhausting tasks, freeing humans for other activities. Computers can reduce the potential for human error (e.g. computational/transcription errors, the reason why Babbage invented his Difference Engine!). Computational capabilities augment human intelligence: for example, computational biology can help to cure disease, etc. But what specifically about operating system design can help humanity at large?

I believe physicist Peter Feibelman said that research (and, by extension, engineering) can be interesting if it solves a particular scientific or technical problem or discovers some previously unknown principle, but it becomes compelling if it can be shown to bear on some important human need in the real world. There are many examples of compelling features of well-designed operating systems, for example:

1. Responsiveness/performance! By reducing the time which people spend waiting for their computers, OS designers can save hundreds (thousands?) of human-years of wasted time. Also, unresponsive/slow computers slow people down in general and decrease efficiency (not to mention increasing unhappiness!)

2. Power efficiency! By using components effectively, powering them down when they're not in use, and synchronizing power usage, an OS can reduce the overall power usage of a system. This could help reduce rolling blackouts in California, and could also reduce overall emission of greenhouse gases, possibly reducing global warming.

3. Real-time systems. Computers can make decisions faster than humans, but they have to be correct decisions (the application's job) and reliable in terms of time. A reliable real-time OS can guarantee that a program will be given adequate CPU time (assuming the application requests the correct amount) to complete its task (e.g. putting the brakes on in your car, or activating a critical piece of medical equipment) and meet a critical deadline.

Other possibilities: a well-designed OS can make it easier to write all sorts of programs (e.g. communication programs). A more secure/reliable OS can also make it less likely that your personal data will be stolen, lost, damaged, etc.
