Econ 742: Introductory Econometrics (2)

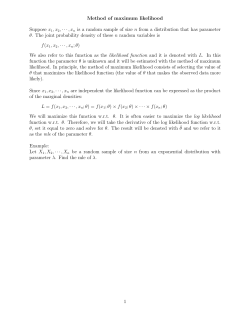

Chapter 3: Large Sample Analysis, the Method of Maximum Likelihood, Nonlinear Regression, and Asymptotic Tests

3.0 Classical Asymptotic Theory

Some basic asymptotic concepts and useful results:

Definition: Convergence in probability, $X_n \xrightarrow{p} X$ or $\operatorname{plim} X_n = X$.

A sequence of random variables $\{X_n\}$ is said to converge to a random variable $X$ in probability if

$$\lim_{n\to\infty} P(|X_n - X| > \epsilon) = 0 \quad \text{for all } \epsilon > 0.$$

Equivalently, $\lim_{n\to\infty} P(|X_n - X| \le \epsilon) = 1$ for all $\epsilon > 0$.

Definition: Convergence in distribution, $X_n \xrightarrow{d} X$.

A sequence $\{X_n\}$ is said to converge to $X$ in distribution if the distribution function $F_n$ of $X_n$ converges to the distribution function $F$ of $X$ at every continuity point of $F$. ($F$ is called the limiting distribution of $\{X_n\}$.)

Chebyshev's inequality: For any random variable $X$ with mean $\mu$ and variance $\sigma^2$,

$$P(|X - \mu| \ge \lambda\sigma) \le \frac{1}{\lambda^2}, \quad \lambda > 0.$$
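As a quick numerical check of Chebyshev's inequality, the sketch below compares the exact tail probability with the bound $1/\lambda^2$. The fair six-sided die and the function names are assumptions chosen for illustration; they do not come from the notes.

```python
from fractions import Fraction
from math import sqrt

# Fair six-sided die: an illustrative distribution, not one used in the notes.
outcomes = [1, 2, 3, 4, 5, 6]
prob = Fraction(1, 6)

mu = sum(prob * x for x in outcomes)                # mean = 7/2
var = sum(prob * (x - mu) ** 2 for x in outcomes)   # variance = 35/12
sigma = sqrt(var)

def tail_probability(lam):
    """Exact P(|X - mu| >= lam * sigma) for the die."""
    return sum(prob for x in outcomes if abs(x - mu) >= lam * sigma)

def chebyshev_bound(lam):
    """Upper bound 1 / lam^2 from Chebyshev's inequality."""
    return 1.0 / lam ** 2

for lam in (1.0, 1.2, 1.5, 2.0):
    print(lam, float(tail_probability(lam)), chebyshev_bound(lam))
```

The bound is often loose, as it is here, because it has to hold for every distribution with a finite variance.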

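Continuous mapping theorems:

Proposition: Given $g: R^k \to R^l$ and any sequence $\{X_n\}$ such that $X_n \xrightarrow{p} \alpha$, where $\alpha$ is a $k \times 1$ constant vector, if $g$ is continuous at $\alpha$, then $g(X_n) \xrightarrow{p} g(\alpha)$.

Proposition: If $g$ is a continuous function and $X_n \xrightarrow{d} X$, then $g(X_n) \xrightarrow{d} g(X)$.

Proposition: (Slutsky) Let $\{X_n, Y_n\}$, $n = 1, 2, \cdots$, be a sequence of pairs of random variables. Then

a). $X_n \xrightarrow{d} X$, $Y_n \xrightarrow{p} 0$ $\Rightarrow$ $X_n Y_n \xrightarrow{p} 0$.

b). $X_n \xrightarrow{d} X$, $Y_n \xrightarrow{p} c$ $\Rightarrow$ $X_n + Y_n \xrightarrow{d} X + c$, $X_n Y_n \xrightarrow{d} cX$, and $X_n / Y_n \xrightarrow{d} X/c$ if $c \neq 0$.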

Law of Large Numbers

Proposition: (Kolmogorov theorem, S.L.L.N.) Let $\{X_i\}$, $i = 1, 2, \ldots$, be a sequence of independent random variables such that $E(X_i) = \mu_i$ and $V(X_i) = \sigma_i^2$. Then

$$\sum_{i=1}^{\infty} \frac{\sigma_i^2}{i^2} < \infty \implies \bar X_n - \bar\mu_n \xrightarrow{a.s.} 0.$$

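Proposition: (Chebyshev's theorem, W.L.L.N.) Let $E(X_i) = \mu_i$, $V(X_i) = \sigma_i^2$, and $\operatorname{cov}(X_i, X_j) = 0$ for $i \neq j$. Then

$$\lim_{n\to\infty} \frac{1}{n^2}\sum_{i=1}^{n} \sigma_i^2 = 0 \implies \bar X_n - \bar\mu_n \xrightarrow{p} 0,$$

where $\bar X_n = \frac{1}{n}\sum_{i=1}^{n} X_i$ and $\bar\mu_n = \frac{1}{n}\sum_{i=1}^{n} \mu_i$.

Central Limit Theorems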

Theorem: (Lindeberg-Levy theorem, C.L.T.) Let $X_1, X_2, \cdots$ be a sequence of i.i.d. random variables such that $E(X_n) = \mu$ and $V(X_n) = \sigma^2 \neq 0$ exist. Then the d.f. of $Y_n$, where

$$Y_n = \sqrt{n}(\bar X_n - \mu)/\sigma,$$

converges to $\Phi(x)$, i.e., $Y_n \xrightarrow{d} N(0, 1)$, where $\Phi$ is the standard normal distribution function.

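Theorem: (Liapounov's theorem) Let $\{X_n\}$ be a sequence of independent random variables. Let $E(X_n) = \mu_n$ and $E(X_n - \mu_n)^2 = \sigma_n^2 \neq 0$. Denote $C_n = \left(\sum_{i=1}^{n} \sigma_i^2\right)^{1/2}$. If

$$\frac{1}{C_n^{2+\delta}} \sum_{k=1}^{n} E|X_k - \mu_k|^{2+\delta} \to 0$$

for a positive $\delta > 0$, then

$$\frac{\sum_{i=1}^{n} (X_i - \mu_i)}{C_n} \xrightarrow{d} N(0, 1).$$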
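The Lindeberg-Levy theorem can be illustrated with a small simulation. The sketch below is a minimal example under stated assumptions: the Uniform(0, 1) population, the sample size, the number of replications, and the seed are all illustrative choices, not part of the notes. It estimates how often the standardized mean $Y_n$ falls within $\pm 1.96$, which should be close to 0.95 if $Y_n$ is approximately $N(0, 1)$.

```python
import random
from math import sqrt

def standardized_mean(rng, n, mu, sigma):
    """Draw n i.i.d. Uniform(0, 1) variables and return sqrt(n) * (mean - mu) / sigma."""
    xbar = sum(rng.random() for _ in range(n)) / n
    return sqrt(n) * (xbar - mu) / sigma

def coverage(n=200, reps=2000, seed=0):
    """Fraction of replications in which |Y_n| <= 1.96."""
    rng = random.Random(seed)
    mu, sigma = 0.5, sqrt(1.0 / 12.0)   # mean and s.d. of Uniform(0, 1)
    hits = sum(abs(standardized_mean(rng, n, mu, sigma)) <= 1.96 for _ in range(reps))
    return hits / reps

cov = coverage()
print(cov)
```

A simulation of this kind only suggests that the normal approximation is reasonable for the chosen design; it does not replace the theorem's conditions.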

3.1 Large Sample Results for The Linear Regression Model

Features: No normality assumption is needed. Instead, assumptions are imposed under which suitable laws of large numbers and central limit theorems apply.

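• Consistency:

1. $\operatorname{plim}_{n\to\infty} \frac{1}{n} X'\epsilon = 0$ under the assumption that $\lim_{n\to\infty} \frac{1}{n} X'X = Q$ exists.

Proof: $\frac{1}{n} X'\epsilon = \frac{1}{n}\sum_{i=1}^{n} x_i'\epsilon_i$, $E(x_i'\epsilon_i) = x_i' E(\epsilon_i) = 0$, and $\operatorname{Var}\left[\frac{1}{n} X'\epsilon\right] = \frac{\sigma^2}{n} \cdot \frac{X'X}{n}$. The result follows because the variance of $\frac{1}{n} X'\epsilon$ goes to zero.

2. Assuming that $Q$ is invertible, $\operatorname{plim}_{n\to\infty} \hat\beta = \beta + Q^{-1} \operatorname{plim}_{n\to\infty} \frac{1}{n} X'\epsilon = \beta$.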

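• Asymptotic distribution of $\hat\beta$:

1. Applying an appropriate CLT (under some regularity conditions on $x$ and $\epsilon$),

$$\frac{1}{\sqrt{n}} X'\epsilon = \frac{1}{\sqrt{n}} \sum_{i=1}^{n} x_i'\epsilon_i \xrightarrow{d} N(0, \sigma^2 Q).$$

2. $\sqrt{n}(\hat\beta - \beta) \xrightarrow{d} N(0, \sigma^2 Q^{-1})$. Hence the asymptotic distribution of $\hat\beta$ is $N(\beta, \frac{\sigma^2}{n} Q^{-1})$.

3. In practice, $\frac{1}{n} X'X$ estimates $Q$ and $\hat\sigma^2$ estimates $\sigma^2$.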

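• Consistency of $\hat\sigma^2$:

$$\operatorname{plim} \hat\sigma^2 = \operatorname{plim}\left[\frac{\epsilon'\epsilon}{n} - \frac{\epsilon'X}{n}\left(\frac{X'X}{n}\right)^{-1}\frac{X'\epsilon}{n}\right] = \sigma^2 - \operatorname{plim} \frac{\epsilon'X}{n}\left(\frac{X'X}{n}\right)^{-1}\frac{X'\epsilon}{n} = \sigma^2 - 0 \cdot Q^{-1} \cdot 0 = \sigma^2.$$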

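• The Delta method: Let $\hat\alpha = f(\hat\beta)$. By a mean-value theorem,

$$\sqrt{n}(\hat\alpha - \alpha) \xrightarrow{d} N\left(0, \frac{\partial f(\beta)}{\partial\beta'} (\sigma^2 Q^{-1}) \frac{\partial f'(\beta)}{\partial\beta}\right).$$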

• Asymptotic distributions of test statistics:

1.) $t_k = \dfrac{\hat\beta_k - \beta_k}{[\hat\sigma^2 (X'X)^{-1}_{kk}]^{1/2}}$ is asymptotically normal.

2.) The $J F_{J,n-K} = (R\hat\beta - q)'[R(X'X)^{-1}R']^{-1}(R\hat\beta - q)/\hat\sigma^2$ is asymptotically $\chi^2(J)$.
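As a worked illustration of the Delta method stated above, consider the ratio of two coefficients. The choice $f(\beta) = \beta_1/\beta_2$ with $K = 2$ and $\beta_2 \neq 0$, and the notation $q^{ij}$ for the elements of $Q^{-1}$, are assumptions made for this example only.

```latex
f(\beta) = \frac{\beta_1}{\beta_2}, \qquad
\frac{\partial f(\beta)}{\partial \beta'} =
\left( \frac{1}{\beta_2},\; -\frac{\beta_1}{\beta_2^{2}} \right),

\sqrt{n}\left( \frac{\hat\beta_1}{\hat\beta_2} - \frac{\beta_1}{\beta_2} \right)
\xrightarrow{d}
N\!\left( 0,\; \sigma^{2}\left[ \frac{q^{11}}{\beta_2^{2}}
 - \frac{2\beta_1 q^{12}}{\beta_2^{3}}
 + \frac{\beta_1^{2} q^{22}}{\beta_2^{4}} \right] \right).
```

In practice the unknown quantities in the variance would be replaced by $\hat\beta$, $\hat\sigma^2$, and the elements of $(\frac{1}{n}X'X)^{-1}$.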

3.2 The Method of Maximum Likelihood

• Log likelihood function: Suppose that $y_1, \cdots, y_n$ are independent sample observations. Then

$$\ln L(\theta|y) = \sum_{i=1}^{n} \ln f(y_i, \theta),$$

where $f(y_i, \theta)$ is the density of $y_i$ if $y_i$ is a continuous random variable, or its probability function if $y_i$ is discrete.

• Likelihood equation:

$$\frac{\partial \ln L(\theta|y)}{\partial\theta} = 0.$$

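• Information inequality: $E(\ln f(y, \theta)) < E(\ln f(y, \theta_0))$ for $\theta \neq \theta_0$, where $\theta_0$ is the true parameter and the expectation is taken under $\theta_0$. This follows from Jensen's inequality, which gives

$$E \ln\left(\frac{f(y, \theta)}{f(y, \theta_0)}\right) < \ln E\left(\frac{f(y, \theta)}{f(y, \theta_0)}\right) = 0 \quad \text{for } \theta \neq \theta_0.$$

Lemma: (Jensen's inequality) Let $g: R \to R$ be a convex function on an interval $B \subseteq R$ and let $X$ be a random variable such that $P(X \in B) = 1$ and $E(X) = \mu$. Then $g(E(X)) \le E(g(X))$. If $g$ is concave on $B$, then $g(E(X)) \ge E(g(X))$.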

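• A likelihood equality:

$$E\left[\frac{\partial \ln L(\theta|y)}{\partial\theta} \frac{\partial \ln L(\theta|y)}{\partial\theta'}\right] + E\left[\frac{\partial^2 \ln L(\theta|y)}{\partial\theta\partial\theta'}\right] = 0.$$

This follows from differentiating the identity $\int f(y_i, \theta)\,dy_i = 1$, which holds for all possible $\theta$, with respect to $\theta$.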

Under the regularity conditions that the differentiation operator and the integration operator are interchangeable,

$$\int L\,dy = 1 \;\Rightarrow\; \frac{\partial}{\partial\theta}\int L\,dy = \int \frac{\partial L}{\partial\theta}\,dy = 0.$$

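This implies

$$E_\theta\left(\frac{\partial \ln L}{\partial\theta'}\right) = \int \left(\frac{1}{L}\frac{\partial L}{\partial\theta'}\right) L\,dy = \int \frac{\partial L}{\partial\theta'}\,dy = 0,$$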

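and

$$E_\theta\left(\frac{\partial^2 \ln L}{\partial\theta\partial\theta'}\right) = E_\theta\left(\frac{\partial}{\partial\theta}\left[\frac{\partial \ln L}{\partial\theta'}\right]\right) = E_\theta\left(\frac{\partial}{\partial\theta}\left[\frac{1}{L}\frac{\partial L}{\partial\theta'}\right]\right)$$

$$= E_\theta\left(\frac{-1}{L^2}\frac{\partial L}{\partial\theta}\frac{\partial L}{\partial\theta'} + \frac{1}{L}\frac{\partial^2 L}{\partial\theta\partial\theta'}\right) = -E_\theta\left(\frac{\partial \ln L}{\partial\theta}\frac{\partial \ln L}{\partial\theta'}\right) + \int \frac{\partial^2 L}{\partial\theta\partial\theta'}\,dy = -E_\theta\left(\frac{\partial \ln L}{\partial\theta}\frac{\partial \ln L}{\partial\theta'}\right),$$

because $\int \frac{\partial^2 L}{\partial\theta\partial\theta'}\,dy = 0$.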

• Information matrix is $I(\theta)$, where

$$I(\theta) = E\left[\frac{\partial \ln L(\theta|y)}{\partial\theta} \frac{\partial \ln L(\theta|y)}{\partial\theta'}\right].$$

• Large sample properties of the MLE

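1.) Consistency: $\operatorname{plim}_{n\to\infty} \hat\theta_{ML} = \theta$.

2.) Asymptotic normality: $\hat\theta_{ML}$ is asymptotically $N(\theta, I^{-1}(\theta))$.

Proof: By a Taylor expansion,

$$0 = \frac{\partial \ln L(\hat\theta_{ML})}{\partial\theta} = \frac{\partial \ln L(\theta)}{\partial\theta} + \frac{\partial^2 \ln L(\theta^*)}{\partial\theta\partial\theta'}(\hat\theta_{ML} - \theta).$$

Therefore,

$$\sqrt{n}(\hat\theta_{ML} - \theta) = -\left(\frac{1}{n}\frac{\partial^2 \ln L(\theta^*)}{\partial\theta\partial\theta'}\right)^{-1} \frac{1}{\sqrt{n}}\frac{\partial \ln L(\theta)}{\partial\theta}.$$

By the law of large numbers,

$$\frac{1}{n}\frac{\partial^2 \ln L(\theta)}{\partial\theta\partial\theta'} = \frac{1}{n}\sum_{i=1}^{n} \frac{\partial^2 \ln f(y_i, \theta)}{\partial\theta\partial\theta'} \xrightarrow{p} E\left(\frac{\partial^2 \ln f(y, \theta)}{\partial\theta\partial\theta'}\right),$$

and by a central limit theorem,

$$\frac{1}{\sqrt{n}}\frac{\partial \ln L(\theta)}{\partial\theta} \xrightarrow{d} N\left(0, E\left(\frac{\partial \ln f(y, \theta)}{\partial\theta}\frac{\partial \ln f(y, \theta)}{\partial\theta'}\right)\right).$$

The final result follows from the likelihood equality.

3.) Asymptotic efficiency: it achieves the Cramer-Rao lower bound for consistent estimators (uniform convergence in distribution over any compact set of the parameter).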

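• Estimates of the variance of the MLE: The asymptotic variance $I^{-1}(\theta)$ can be estimated by

$$\left(-\frac{\partial^2 \ln L(\hat\theta_{ML})}{\partial\theta\partial\theta'}\right)^{-1}.$$

For an independent sample, it can also be estimated by

$$\left(\sum_{i=1}^{n} \frac{\partial \ln f(y_i, \hat\theta_{ML})}{\partial\theta} \frac{\partial \ln f(y_i, \hat\theta_{ML})}{\partial\theta'}\right)^{-1}.$$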
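The two variance estimators can be compared on a model where everything is available in closed form. The sketch below assumes an exponential density $f(y, \lambda) = \lambda e^{-\lambda y}$ and a small made-up sample; both the model and the data are illustrative assumptions, not taken from the notes.

```python
from math import log

# Hypothetical sample of waiting times (illustrative data, not from the notes).
y = [0.8, 1.9, 0.4, 2.7, 1.1, 0.6, 3.2, 1.5]
n = len(y)

def log_likelihood(lam):
    """ln L(lam | y) = sum_i [ln(lam) - lam * y_i] for the exponential density."""
    return sum(log(lam) - lam * yi for yi in y)

# The likelihood equation n / lam - sum(y) = 0 gives the MLE in closed form.
lam_hat = n / sum(y)

# Estimator 1: inverse of the negative Hessian, -d^2 ln L / d lam^2 = n / lam^2.
var_hessian = 1.0 / (n / lam_hat ** 2)

# Estimator 2: inverse of the summed squared scores,
# where the score of observation i is 1 / lam - y_i.
scores = [1.0 / lam_hat - yi for yi in y]
var_opg = 1.0 / sum(s * s for s in scores)

print(lam_hat, var_hessian, var_opg)
```

The two estimates generally differ in finite samples; by the likelihood equality they estimate the same quantity as $n$ grows.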

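• (Cramer-Rao Lower Bound)

Under some regularity conditions, the variance of an unbiased estimator $\tilde\theta$ of $\theta$ will be at least as large as $I^{-1}(\theta)$.

This follows from the nonnegative definiteness of the variance matrix of $(\tilde\theta - \theta, \frac{\partial \ln L}{\partial\theta})$ and the unbiasedness of $\tilde\theta$. The latter implies that the covariance $E\left((\tilde\theta - \theta)\frac{\partial \ln L}{\partial\theta'}\right) = I$.

Proof: Let $P = V(\tilde\theta)$, $R = E\left(\frac{\partial \ln L}{\partial\theta}\frac{\partial \ln L}{\partial\theta'}\right)$, and $Q = E\left[(\tilde\theta - \theta)\frac{\partial \ln L}{\partial\theta'}\right]$. The covariance matrix of $\tilde\theta$ and $\frac{\partial \ln L}{\partial\theta}$ is

$$\begin{pmatrix} P & Q \\ Q' & R \end{pmatrix},$$

which is nonnegative definite. Premultiplying and postmultiplying this covariance matrix by $(I, -QR^{-1})$ and its transpose gives

$$(I, -QR^{-1}) \begin{pmatrix} P & Q \\ Q' & R \end{pmatrix} (I, -QR^{-1})' = (I, -QR^{-1}) \begin{pmatrix} P - QR^{-1}Q' \\ 0 \end{pmatrix} = P - QR^{-1}Q',$$

so it follows that $P - QR^{-1}Q' \ge 0$.

The matrix $Q$ equals an identity matrix. This is so, since $E_\theta(\tilde\theta) = \theta$ implies that

$$I = \frac{\partial}{\partial\theta'}\left(\int \tilde\theta L(y, \theta)\,dy\right) = \int \tilde\theta \frac{\partial L(y, \theta)}{\partial\theta'}\,dy,$$

and, because $E_\theta\left(\frac{\partial \ln L}{\partial\theta'}\right) = 0$,

$$Q = E\left(\tilde\theta \frac{\partial \ln L}{\partial\theta'}\right) = \int \tilde\theta \frac{\partial L}{\partial\theta'}\,dy = I.$$

Hence $P - R^{-1} \ge 0$.

Q.E.D.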

3.3 Maximum Likelihood Estimation of The Linear Regression Model under Normality

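Assumption: $\epsilon$ is $N(0, \sigma^2 I_n)$.

• Log likelihood function of $y$ given $X$ is

$$\ln L = -\frac{n}{2}\ln 2\pi - \frac{n}{2}\ln\sigma^2 - \frac{1}{2\sigma^2}(y - X\beta)'(y - X\beta).$$

• Likelihood equations:

$$\frac{\partial \ln L}{\partial\beta} = \frac{1}{\sigma^2} X'(y - X\beta) = 0,$$

$$\frac{\partial \ln L}{\partial\sigma^2} = \frac{-n}{2\sigma^2} + \frac{1}{2\sigma^4}(y - X\beta)'(y - X\beta) = 0.$$

• MLE: $\hat\beta_{ML} = (X'X)^{-1}X'y$; $\hat\sigma^2_{ML} = e'e/n$, where $e = y - X\hat\beta_{ML}$ is the residual vector.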

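• The information matrix of the linear regression model:

$$I^{-1}(\beta, \sigma^2) = \begin{pmatrix} \sigma^2 (X'X)^{-1} & 0 \\ 0' & 2\sigma^4/n \end{pmatrix}.$$

This follows from

$$\begin{pmatrix} \dfrac{\partial^2 \ln L}{\partial\beta\partial\beta'} & \dfrac{\partial^2 \ln L}{\partial\beta\partial\sigma^2} \\ \dfrac{\partial^2 \ln L}{\partial\sigma^2\partial\beta'} & \dfrac{\partial^2 \ln L}{\partial\sigma^2\partial\sigma^2} \end{pmatrix} = \begin{pmatrix} -\dfrac{1}{\sigma^2} X'X & -\dfrac{1}{\sigma^4} X'\epsilon \\ -\dfrac{1}{\sigma^4} \epsilon'X & \dfrac{n}{2\sigma^4} - \dfrac{\epsilon'\epsilon}{\sigma^6} \end{pmatrix},$$

by taking expectations, changing sign, and inverting.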

• The MLE $\hat\beta_{ML}$ and the least squares estimate $\hat\beta$ are identical. It is the efficient unbiased estimator as it attains the Cramer-Rao variance bound.

• The MLE $\hat\sigma^2_{ML}$ is biased downward:

$$E(\hat\sigma^2_{ML}) = (n - K)\sigma^2/n < \sigma^2$$

for any finite sample size $n$.

3.4 Numerical Methods

Newton-Raphson Method

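This method is applicable to either maximization or minimization problems. Let $Q_n(\theta)$ be the objective function for optimization. Let $\hat\theta_1$ be an initial estimate of $\theta$. By a quadratic approximation,

$$Q_n(\theta) \approx Q_n(\hat\theta_1) + \frac{\partial Q_n(\hat\theta_1)}{\partial\theta'}(\theta - \hat\theta_1) + \frac{1}{2}(\theta - \hat\theta_1)'\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}(\theta - \hat\theta_1).$$

Maximizing (or minimizing) the right-hand side approximation provides a second-round estimator $\hat\theta_2$,

$$\hat\theta_2 = \hat\theta_1 - \left[\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}\right]^{-1} \frac{\partial Q_n(\hat\theta_1)}{\partial\theta}.$$

The iteration is repeated until the sequence $\{\hat\theta_j\}$ converges. One modification of this algorithm is to replace $\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}$ by a negative definite matrix in each iteration (e.g., the Quadratic Hill Climbing algorithm). The step sizes in the iteration can also be modified as

$$\hat\theta_2 = \hat\theta_1 - \lambda\left[\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}\right]^{-1} \frac{\partial Q_n(\hat\theta_1)}{\partial\theta},$$

where $\lambda$ is a scalar.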
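The Newton-Raphson iteration with a scalar step size can be sketched for a one-parameter problem. The example below assumes a Poisson log likelihood written in terms of $\theta = \ln(\text{mean})$, so that $Q_n(\theta) = \sum_i (y_i\theta - e^\theta)$ up to a constant; the model, the data, and the function names are illustrative assumptions rather than anything specified in the notes.

```python
from math import exp, log

# Hypothetical count data (illustrative only).
y = [2, 0, 3, 1, 4, 2, 1, 3]
n = len(y)

def gradient(theta):
    """dQ/dtheta for Q(theta) = sum_i (y_i * theta - exp(theta))."""
    return sum(y) - n * exp(theta)

def hessian(theta):
    """d^2 Q / dtheta^2, negative for every theta, so Q is concave."""
    return -n * exp(theta)

def newton_raphson(theta, step=1.0, tol=1e-12, max_iter=100):
    """Iterate theta <- theta - step * H^{-1} g until the update is below tol."""
    for _ in range(max_iter):
        update = step * gradient(theta) / hessian(theta)
        theta -= update
        if abs(update) < tol:
            break
    return theta

theta_hat = newton_raphson(theta=0.0)
print(theta_hat, exp(theta_hat), sum(y) / n)
```

Because this objective is globally concave, the full step (`step=1.0`) works from the chosen starting value; a smaller step trades speed for stability in less well-behaved problems.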

Asymptotic Properties of the Second-round Estimator

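Suppose that $\hat\theta_1$ is a consistent estimator of $\theta_\circ$ such that $\sqrt{n}(\hat\theta_1 - \theta_\circ)$ has a proper distribution. Then the second-round estimator $\hat\theta_2$ has the same asymptotic distribution as a consistent root $\theta^*$ of $\frac{\partial Q_n(\theta)}{\partial\theta} = 0$. By the mean-value theorem,

$$\frac{\partial Q_n(\hat\theta_1)}{\partial\theta} = \frac{\partial Q_n(\theta_\circ)}{\partial\theta} + \frac{\partial^2 Q_n(\bar\theta)}{\partial\theta\partial\theta'}(\hat\theta_1 - \theta_\circ),$$

where $\bar\theta$ lies between $\hat\theta_1$ and $\theta_\circ$. It follows that

$$\sqrt{n}(\hat\theta_2 - \theta_\circ) = \sqrt{n}\left\{\hat\theta_1 - \theta_\circ - \left[\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}\right]^{-1}\left[\frac{\partial Q_n(\theta_\circ)}{\partial\theta} + \frac{\partial^2 Q_n(\bar\theta)}{\partial\theta\partial\theta'}(\hat\theta_1 - \theta_\circ)\right]\right\}$$

$$= \left\{I - \left[\frac{1}{n}\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}\right]^{-1} \frac{1}{n}\frac{\partial^2 Q_n(\bar\theta)}{\partial\theta\partial\theta'}\right\}\sqrt{n}(\hat\theta_1 - \theta_\circ) - \left[\frac{1}{n}\frac{\partial^2 Q_n(\hat\theta_1)}{\partial\theta\partial\theta'}\right]^{-1} \frac{1}{\sqrt{n}}\frac{\partial Q_n(\theta_\circ)}{\partial\theta}.$$

Since the first term on the right-hand side converges to zero in probability, we conclude that

$$\sqrt{n}(\hat\theta_2 - \theta_\circ) \approx -\left(\operatorname{plim} \frac{1}{n}\frac{\partial^2 Q_n(\theta_\circ)}{\partial\theta\partial\theta'}\right)^{-1} \frac{1}{\sqrt{n}}\frac{\partial Q_n(\theta_\circ)}{\partial\theta} \approx \sqrt{n}(\theta^* - \theta_\circ),$$

i.e., the second-round consistent estimator has the same asymptotic distribution as the extremum estimator.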

3.5: Asymptotic Tests

The null hypothesis is $h(\theta) = 0$, where $h: R^k \to R^q$ with $q < k$. Let $\hat\theta$ be the unconstrained MLE and $\bar\theta$ the constrained MLE.

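• Likelihood ratio test statistic:

$$-2\ln\left[\frac{\max_{h(\theta)=0} L(\theta|y)}{\max_\theta L(\theta|y)}\right] = 2[\ln L(\hat\theta|y) - \ln L(\bar\theta|y)].$$

• Wald test statistic:

$$h'(\hat\theta)\left[\frac{\partial h(\hat\theta)}{\partial\theta'}\left(-\frac{\partial^2 \ln L(\hat\theta)}{\partial\theta\partial\theta'}\right)^{-1}\frac{\partial h'(\hat\theta)}{\partial\theta}\right]^{-1} h(\hat\theta).$$

• Efficient score (Rao) test statistic:

$$\frac{\partial \ln L(\bar\theta)}{\partial\theta'}\left(-\frac{\partial^2 \ln L(\bar\theta)}{\partial\theta\partial\theta'}\right)^{-1}\frac{\partial \ln L(\bar\theta)}{\partial\theta}.$$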

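• All three test statistics are asymptotically chi-square distributed with $q$ degrees of freedom.

The efficient score test statistic has the same limiting distribution as the likelihood ratio test statistic. This follows from the following argument. Since $\frac{\partial \ln L(\hat\theta)}{\partial\theta} = 0$ at the unconstrained MLE, expansions around $\hat\theta$ give

$$\frac{\partial \ln L(\bar\theta)}{\partial\theta} = \frac{\partial \ln L(\hat\theta)}{\partial\theta} + \frac{\partial^2 \ln L(\theta^*)}{\partial\theta\partial\theta'}(\bar\theta - \hat\theta),$$

and

$$\ln L(\bar\theta) - \ln L(\hat\theta) = \frac{\partial \ln L(\hat\theta)}{\partial\theta'}(\bar\theta - \hat\theta) + \frac{1}{2}(\bar\theta - \hat\theta)'\frac{\partial^2 \ln L(\theta^*)}{\partial\theta\partial\theta'}(\bar\theta - \hat\theta) = \frac{1}{2}(\bar\theta - \hat\theta)'\frac{\partial^2 \ln L(\theta^*)}{\partial\theta\partial\theta'}(\bar\theta - \hat\theta),$$

therefore,

$$\frac{\partial \ln L(\bar\theta)}{\partial\theta'}\left(-\frac{\partial^2 \ln L(\bar\theta)}{\partial\theta\partial\theta'}\right)^{-1}\frac{\partial \ln L(\bar\theta)}{\partial\theta} \overset{D}{=} -(\bar\theta - \hat\theta)'\frac{\partial^2 \ln L(\theta_0)}{\partial\theta\partial\theta'}(\bar\theta - \hat\theta) \overset{D}{=} 2(\ln L(\hat\theta) - \ln L(\bar\theta)).$$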
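The three statistics can be computed side by side in a one-parameter model. The sketch below assumes a Poisson model with mean $\mu$ and the point null $\mu = \mu_0$, so that $h(\mu) = \mu - \mu_0$ and the constrained MLE is simply $\mu_0$. This simplification (here $q = k = 1$, unlike the $q < k$ setting above), the data, and the function names are illustrative assumptions.

```python
from math import log

# Hypothetical count data and null value (illustrative only).
y = [2, 0, 3, 1, 4, 2, 1, 3]
n = len(y)
mu0 = 1.5                      # H0: mu = mu0

def loglik(mu):
    """Poisson log likelihood up to a constant: sum_i (y_i ln mu - mu)."""
    return sum(yi * log(mu) - mu for yi in y)

def score(mu):
    """First derivative of the log likelihood with respect to mu."""
    return sum(y) / mu - n

def neg_hessian(mu):
    """Negative second derivative of the log likelihood."""
    return sum(y) / mu ** 2

mu_hat = sum(y) / n            # unconstrained MLE
mu_bar = mu0                   # constrained MLE under the point null

lr = 2.0 * (loglik(mu_hat) - loglik(mu_bar))
wald = (mu_hat - mu0) ** 2 * neg_hessian(mu_hat)   # h(mu) = mu - mu0, dh/dmu = 1
rao = score(mu_bar) ** 2 / neg_hessian(mu_bar)

print(lr, wald, rao)
```

In this particular model the Wald and score statistics based on the observed Hessian coincide algebraically, while the likelihood ratio statistic differs in finite samples; each would be compared with a $\chi^2(1)$ critical value.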

3.6: Nonlinear Regression Models

A nonlinear regression model is

$$y_i = h(x_i, \beta) + \epsilon_i,$$

where $E(\epsilon|x) = 0$. A standard case assumes the $\epsilon_i$ have the same properties as in the standard linear regression model.

• The method of nonlinear least squares:

$$\min_\beta \sum_{i=1}^{n} (y_i - h(x_i, \beta))^2.$$

• Normal equations:

$$\sum_{i=1}^{n} [y_i - h(x_i, \beta)]\frac{\partial h(x_i, \beta)}{\partial\beta} = 0.$$

• Consistent estimator of $\sigma^2$:

$$\hat\sigma^2 = \frac{1}{n}\sum_{i=1}^{n} [y_i - h(x_i, \hat\beta)]^2.$$

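• Asymptotic distribution: $\sqrt{n}(\hat\beta_{NL} - \beta) \xrightarrow{d} N(0, \sigma^2 C^{-1})$, where $C = \operatorname{plim} \frac{1}{n}\sum_{i=1}^{n} \frac{\partial h(x_i, \beta)}{\partial\beta}\frac{\partial h(x_i, \beta)}{\partial\beta'}$.

Proof: Let $S(\beta) = \sum_{i=1}^{n} (y_i - h(x_i, \beta))^2$. By a Taylor series expansion,

$$0 = \frac{1}{\sqrt{n}}\frac{\partial S(\hat\beta_{NL})}{\partial\beta} = \frac{1}{\sqrt{n}}\frac{\partial S(\beta)}{\partial\beta} + \frac{1}{n}\frac{\partial^2 S(\beta^*)}{\partial\beta\partial\beta'} \cdot \sqrt{n}(\hat\beta_{NL} - \beta),$$

which implies that

$$\sqrt{n}(\hat\beta_{NL} - \beta) = -\left[\frac{1}{n}\frac{\partial^2 S(\beta^*)}{\partial\beta\partial\beta'}\right]^{-1} \frac{1}{\sqrt{n}}\frac{\partial S(\beta)}{\partial\beta}.$$

Furthermore,

$$\frac{1}{\sqrt{n}}\frac{\partial S(\beta)}{\partial\beta} = -\frac{2}{\sqrt{n}}\sum_{i=1}^{n} \epsilon_i \frac{\partial h(x_i, \beta)}{\partial\beta} \xrightarrow{d} N(0, 4\sigma^2 C)$$

and

$$\frac{1}{n}\frac{\partial^2 S(\beta)}{\partial\beta\partial\beta'} = 2\,\frac{1}{n}\sum_{i=1}^{n} \frac{\partial h(x_i, \beta)}{\partial\beta}\frac{\partial h(x_i, \beta)}{\partial\beta'} - 2\,\frac{1}{n}\sum_{i=1}^{n} \epsilon_i \frac{\partial^2 h(x_i, \beta)}{\partial\beta\partial\beta'} \xrightarrow{p} 2C.$$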

Gauss-Newton Method

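This method is applicable to the estimation of a nonlinear regression equation: $y_i = f_i(\beta) + u_i$.

Let $\hat\beta_1$ be an initial estimate of $\beta_\circ$. By a Taylor series expansion, $f_i(\beta) \approx f_i(\hat\beta_1) + \frac{\partial f_i(\hat\beta_1)}{\partial\beta'}(\beta - \hat\beta_1)$ and

$$S_n(\beta) = \sum_{i=1}^{n} (y_i - f_i(\beta))^2 \approx \sum_{i=1}^{n} \left(y_i - f_i(\hat\beta_1) - \frac{\partial f_i(\hat\beta_1)}{\partial\beta'}(\beta - \hat\beta_1)\right)^2.$$

By minimizing the right-hand quadratic approximation with respect to $\beta$,

$$\hat\beta_2 = \hat\beta_1 + \left(\sum_{i=1}^{n} \frac{\partial f_i(\hat\beta_1)}{\partial\beta}\frac{\partial f_i(\hat\beta_1)}{\partial\beta'}\right)^{-1} \sum_{i=1}^{n} \frac{\partial f_i(\hat\beta_1)}{\partial\beta}(y_i - f_i(\hat\beta_1))$$

$$= \left(\sum_{i=1}^{n} \frac{\partial f_i(\hat\beta_1)}{\partial\beta}\frac{\partial f_i(\hat\beta_1)}{\partial\beta'}\right)^{-1} \sum_{i=1}^{n} \frac{\partial f_i(\hat\beta_1)}{\partial\beta}\left(y_i - f_i(\hat\beta_1) + \frac{\partial f_i(\hat\beta_1)}{\partial\beta'}\hat\beta_1\right).$$

Alternatively, $y_i \approx f_i(\hat\beta_1) + \frac{\partial f_i(\hat\beta_1)}{\partial\beta'}(\beta - \hat\beta_1) + u_i$, or equivalently,

$$y_i - f_i(\hat\beta_1) + \frac{\partial f_i(\hat\beta_1)}{\partial\beta'}\hat\beta_1 = \frac{\partial f_i(\hat\beta_1)}{\partial\beta'}\beta + u_i.$$

The second-round estimator can be interpreted as the least squares estimator applied to the above equation. The second-round estimator of the Gauss-Newton iteration is asymptotically as efficient as the NLLS estimator if the iteration is started from a $\sqrt{n}$-consistent estimator.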
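The Gauss-Newton update can be sketched for a scalar parameter, where the matrix inverse reduces to a division. The example below assumes the regression function $f_i(\beta) = e^{\beta x_i}$ and noise-free data generated with $\beta = 0.5$; the function, the data, and the starting value are illustrative assumptions, not taken from the notes.

```python
from math import exp

# Noise-free illustrative data generated from f_i(beta) = exp(beta * x_i) with beta = 0.5.
x = [0.0, 0.5, 1.0, 1.5, 2.0]
beta_true = 0.5
y = [exp(beta_true * xi) for xi in x]

def f(beta, xi):
    """Regression function f_i(beta)."""
    return exp(beta * xi)

def df(beta, xi):
    """Derivative of f_i with respect to beta."""
    return xi * exp(beta * xi)

def gauss_newton(beta, tol=1e-12, max_iter=50):
    """Regress the residuals y_i - f_i(beta) on df_i(beta) and update beta."""
    for _ in range(max_iter):
        d = [df(beta, xi) for xi in x]
        r = [yi - f(beta, xi) for xi, yi in zip(x, y)]
        step = sum(di * ri for di, ri in zip(d, r)) / sum(di * di for di in d)
        beta += step
        if abs(step) < tol:
            break
    return beta

beta_hat = gauss_newton(beta=0.3)
print(beta_hat)
```

Each pass is an ordinary least squares regression of the current residuals on the current derivatives, which is the linearized-regression interpretation of the second-round estimator. With noisy data or a poor starting value the iteration may need step-size control.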


© Copyright 2026