Economics 520, Fall 2005 CB 10.1.1-10.1.3

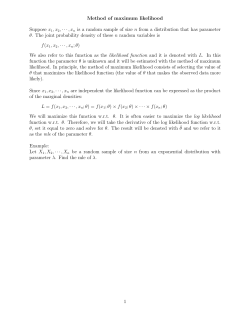

Lecture Note 15: Large Sample Properties of Maximum Likelihood Estimators, CB 10.1.1-10.1.3

Previously, we showed that if there is a minimum variance unbiased estimator (MVUE) whose variance equals the Cramér–Rao bound, then the MVUE is equal to the maximum likelihood estimator (MLE). However, the conditions for that result were fairly restrictive. In models that do not satisfy them, the MLE is in general not unbiased, so it cannot be the MVUE. Nevertheless, it turns out that in a certain approximate sense the MLE is unbiased and has minimum variance. The approximations hold when the sample size is large, and we refer to them as asymptotic or large sample approximations.

Example

Let $X_1, \ldots, X_N$ be a random sample from an exponential distribution with arrival rate $\lambda^*$:
$$f_X(x; \lambda^*) = \lambda^* \exp(-x\lambda^*).$$
The Cramér–Rao bound for the variance is $\lambda^{*2}/N$. The log likelihood function is
$$L(\lambda) = \sum_{i=1}^N \left( \ln \lambda - x_i \lambda \right),$$
and the maximum likelihood estimator is $\hat\lambda = 1/\bar x$. What can we say about the large sample properties of this estimator?

Using the law of large numbers we have $\bar x \xrightarrow{p} E[X] = 1/\lambda^*$, so
$$\hat\lambda = 1/\bar x \xrightarrow{p} 1/E[X] = \lambda^*.$$
Using the central limit theorem we also have
$$\sqrt{N}\,(\bar x - 1/\lambda^*) \xrightarrow{d} N(0, 1/\lambda^{*2}).$$
Then we can use the delta method to establish that
$$\sqrt{N}\,\big(g(\bar x) - g(1/\lambda^*)\big) \xrightarrow{d} N\big(0,\ g'(1/\lambda^*)^2/\lambda^{*2}\big).$$
Applying this with $g(a) = 1/a$, and thus $g'(a) = -1/a^2$ (so that $g'(1/\lambda^*)^2 = \lambda^{*4}$), we get
$$\sqrt{N}\,(1/\bar x - \lambda^*) \xrightarrow{d} N(0, \lambda^{*4}/\lambda^{*2}) = N(0, \lambda^{*2}).$$
Hence, approximately,
$$\hat\lambda \sim N(\lambda^*, \lambda^{*2}/N).$$
So, in large samples, this maximum likelihood estimator is approximately unbiased, and its variance is approximately equal to the Cramér–Rao bound. This is true in general for maximum likelihood estimators.

Result 1

Let $X_1, \ldots, X_N$ be a random sample from $f_X(x; \theta^*)$. Assume that the regularity conditions in CB 10.6.2 hold, and let $\hat\theta$ be the maximum likelihood estimator:
$$\hat\theta = \arg\max_\theta \sum_{i=1}^N \ln f_X(x_i; \theta).$$
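Then $\hat\theta$ is consistent for $\theta^*$:
$$\hat\theta \xrightarrow{p} \theta^*,$$
and $\hat\theta$ is asymptotically normally distributed:
$$\sqrt{N}\,(\hat\theta - \theta^*) \xrightarrow{d} N\big(0, I(\theta^*)^{-1}\big),$$
where $I(\theta^*)$ is the single observation information matrix:
$$I(\theta^*) = E\left[\left(\frac{\partial \ln f_X}{\partial \theta}(X; \theta^*)\right)^2\right] = -E\left[\frac{\partial^2 \ln f_X}{\partial \theta^2}(X; \theta^*)\right].$$

First let us interpret this result using the Cramér–Rao bound. The CR bound implies that no unbiased estimator has a variance smaller than $I(\theta^*)^{-1}/N$. The maximum likelihood estimator has the limiting normal distribution
$$\sqrt{N}\,(\hat\theta - \theta^*) \xrightarrow{d} N\big(0, I(\theta^*)^{-1}\big),$$
implying that for fixed, large $N$,
$$\sqrt{N}\,(\hat\theta - \theta^*) \approx N\big(0, I(\theta^*)^{-1}\big).$$
This in turn implies that
$$\hat\theta_{mle} \approx N\big(\theta^*, I(\theta^*)^{-1}/N\big).$$
If this were the exact distribution of the MLE, the MLE would be unbiased with variance equal to the Cramér–Rao bound, and hence it would be the minimum variance unbiased estimator. Although this is only the approximate distribution in large samples, it seems reasonable to think of the MLE as "approximately optimal."¹

¹ This reasoning can be made more formal. One such result states that any other estimator that is asymptotically unbiased has an asymptotic variance at least as large as that of the MLE, at almost all points in the parameter space.

Example

To illustrate what this means, consider an example we have looked at before, where the maximum likelihood estimator differs from the minimum variance unbiased estimator. Suppose $X_1, \ldots, X_N$ are a random sample from a normal distribution with unknown mean $\mu$ and unknown variance $\sigma^2$. We are interested in the variance $\sigma^2$. The minimum variance unbiased estimator is
$$W_1 = \frac{1}{N-1} \sum_{i=1}^N (X_i - \bar X)^2.$$
The maximum likelihood estimator is
$$W_2 = \frac{1}{N} \sum_{i=1}^N (X_i - \bar X)^2 = \frac{N-1}{N} \cdot W_1.$$
As the sample gets large, the two estimators get close to each other: $\sqrt{N}\,(W_1 - W_2) = W_1/\sqrt{N} \xrightarrow{p} 0$. They are both consistent and have the same large sample distribution:
$$\sqrt{N}\,(W_1 - \sigma^2) \xrightarrow{d} N(0, 2\sigma^4) \quad \text{and} \quad \sqrt{N}\,(W_2 - \sigma^2) \xrightarrow{d} N(0, 2\sigma^4).$$

Sketch of Proof of Result 1:

For each value of $\theta$, we can apply a law of large numbers so that
$$\frac{1}{N} L(\theta) = \frac{1}{N} \sum_{i=1}^N \ln f_X(X_i; \theta) \xrightarrow{p} E[\ln f_X(X; \theta)].$$
In addition, we know from Jensen's inequality that
$$\theta^* = \arg\max_\theta E[\ln f_X(X; \theta)],$$
where the expectation is taken under the true parameter $\theta^*$. To get the result that
$$\hat\theta = \arg\max_\theta \frac{1}{N} L(\theta) \xrightarrow{p} \arg\max_\theta E\left[\frac{1}{N} L(\theta)\right] = \theta^*,$$
we need the convergence to be not just pointwise, but uniform in $\theta$, that is,
$$\sup_\theta \left| \frac{1}{N} L(\theta) - E\left[\frac{1}{N} L(\theta)\right] \right| \xrightarrow{p} 0.$$
Uniform convergence means that the convergence to the limit is not much slower for some values of $\theta$ than for others. It requires stronger regularity conditions than pointwise convergence. (A sufficient but not necessary condition, together with continuity in $\theta$ and a compact parameter space, is that $|\ln f_X(x; \theta)| \le k(x)$ for all $\theta$, with $E[k(X)] < \infty$.)

In large samples, the first-order condition holds at the maximum likelihood estimator, so the derivative of the log likelihood function equals zero:
$$\frac{\partial L}{\partial \theta}(\hat\theta) = 0.$$
Now expand the derivative of the log likelihood function around the true value $\theta^*$:
$$0 = \frac{\partial L}{\partial \theta}(\hat\theta) = \frac{\partial L}{\partial \theta}(\theta^*) + \frac{\partial^2 L}{\partial \theta^2}(\tilde\theta) \cdot (\hat\theta - \theta^*),$$
for some $\tilde\theta$ between $\theta^*$ and $\hat\theta$. In large samples $\hat\theta \to \theta^*$, and therefore $\tilde\theta \to \theta^*$. Rearranging gives
$$\hat\theta - \theta^* = -\left[\frac{\partial^2 L}{\partial \theta^2}(\tilde\theta)\right]^{-1} \cdot \frac{\partial L}{\partial \theta}(\theta^*),$$
or
$$\sqrt{N}\,(\hat\theta - \theta^*) = \left[-\frac{1}{N} \frac{\partial^2 L}{\partial \theta^2}(\tilde\theta)\right]^{-1} \cdot \frac{1}{\sqrt{N}} \frac{\partial L}{\partial \theta}(\theta^*).$$
In large samples
$$-\frac{1}{N} \frac{\partial^2 L}{\partial \theta^2}(\tilde\theta) = -\frac{1}{N} \sum_{i=1}^N \frac{\partial^2 \ln f_X}{\partial \theta^2}(X_i; \tilde\theta) \xrightarrow{p} I(\theta^*),$$
so the first factor converges in probability to the inverse of the information matrix $I(\theta^*)$. The second factor satisfies
$$\frac{1}{\sqrt{N}} \frac{\partial L}{\partial \theta}(\theta^*) = \frac{1}{\sqrt{N}} \sum_{i=1}^N \frac{\partial \ln f_X}{\partial \theta}(X_i; \theta^*) \xrightarrow{d} N\big(0, I(\theta^*)\big),$$
because it is a scaled sum of IID terms with mean zero and variance equal to the information matrix, so a central limit theorem applies. Combining the two factors (Slutsky's theorem), $\sqrt{N}\,(\hat\theta - \theta^*)$ converges in distribution to $I(\theta^*)^{-1} Z$ with $Z \sim N(0, I(\theta^*))$, that is, to $N\big(0, I(\theta^*)^{-1} I(\theta^*) I(\theta^*)^{-1}\big) = N\big(0, I(\theta^*)^{-1}\big)$. This completes the argument.

Random Vectors and Multiple Parameters

In many models, the parameter $\theta$ may be a vector. For example, in the normal model with mean $\mu$ and variance $\sigma^2$, we can think of the parameter as a 2-vector $\theta = (\mu, \sigma)'$. Everything extends easily to the case with a vector parameter, but we need a bit of additional notation to state the results clearly.

First, let us take a step back and consider random vectors. Suppose $X$ is a $k \times 1$ random vector $X = (X_1, \ldots, X_k)'$. Here, $X_1, \ldots, X_k$ are (scalar) random variables, not necessarily independent or identically distributed.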
Define the mean of $X$ as
$$E(X) = \mu = \begin{pmatrix} \mu_1 \\ \vdots \\ \mu_k \end{pmatrix} := \begin{pmatrix} E(X_1) \\ \vdots \\ E(X_k) \end{pmatrix}.$$
The variance matrix (or variance-covariance matrix) is
$$V(X) = E[(X - \mu)(X - \mu)'] = \begin{pmatrix} E[(X_1 - \mu_1)^2] & E[(X_1 - \mu_1)(X_2 - \mu_2)] & \cdots \\ E[(X_2 - \mu_2)(X_1 - \mu_1)] & E[(X_2 - \mu_2)^2] & \cdots \\ \vdots & \vdots & \ddots \end{pmatrix}.$$
So the $(i, j)$ element of $V(X)$ is the covariance between $X_i$ and $X_j$.

The CDF of the random vector $X$ can be defined as before. For $x \in \mathbb{R}^k$,
$$F_X(x) := P(X \le x),$$
where now $X \le x$ means that the inequality holds for every element: $X_i \le x_i$ for each $i$.

Now consider a sequence of random vectors $X_1, X_2, \ldots$. (Be careful of notation: now each $X_n$ is a $k$-dimensional random vector.) Then $X_n$ converges in distribution to a random vector $X$ if our previous definition holds:
$$F_{X_n}(x) \to F_X(x)$$
at each continuity point $x$ of $F_X$.

For convergence in probability, we need to modify our previous definition only slightly. For a vector $x \in \mathbb{R}^k$, its length is defined as
$$\|x\| := \left( \sum_{i=1}^k x_i^2 \right)^{1/2}.$$
This is just the usual Euclidean length of a vector. Now, a sequence of random vectors $X_n$ converges in probability to a constant vector $c \in \mathbb{R}^k$ if, for every $\varepsilon > 0$,
$$P(\|X_n - c\| > \varepsilon) \to 0.$$

The standard law of large numbers and central limit theorem extend to the vector case. For example, if $X_1, X_2, \ldots$ are IID random vectors with mean $\mu$ and variance matrix $\Sigma$ (note: this allows the elements within each vector to be dependent and to have different distributions), then $\bar X \xrightarrow{p} \mu$ and
$$\sqrt{n}\,(\bar X - \mu) \xrightarrow{d} N(0, \Sigma),$$
where $N(0, \Sigma)$ is the multivariate normal distribution with mean $(0, 0, \ldots, 0)'$ and variance matrix $\Sigma$.

Finally, having developed this extra notation, we can consider the large sample properties of the MLE. Suppose that our model $f(x; \theta)$ now depends on a vector parameter $\theta = (\theta_1, \ldots, \theta_k)'$. Result 1 extends to this case as follows:

Result 2

Let $X_1, \ldots, X_N$ be a random sample from $f_X(x; \theta^*)$, where $\theta^*$ is $k \times 1$. Let $\hat\theta$ be the maximum likelihood estimator:
$$\hat\theta = (\hat\theta_1, \ldots, \hat\theta_k)' = \arg\max_\theta \sum_{i=1}^N \ln f_X(x_i; \theta).$$
Then $\hat\theta$ is consistent for $\theta^*$:
$$\hat\theta \xrightarrow{p} \theta^*,$$
and $\hat\theta$ is asymptotically normally distributed:
$$\sqrt{N}\,(\hat\theta - \theta^*) \xrightarrow{d} N\big(0, I(\theta^*)^{-1}\big),$$
where $I(\theta^*)$ is the single observation information matrix:
$$I(\theta^*) = E\left[\frac{\partial \ln f(X; \theta^*)}{\partial \theta} \cdot \frac{\partial \ln f(X; \theta^*)}{\partial \theta'}\right] = -E\left[\frac{\partial^2 \ln f(X; \theta^*)}{\partial \theta\, \partial \theta'}\right].$$
(Note: the term $\partial \ln f(X; \theta^*)/\partial \theta$ is $k \times 1$, and the information matrix $I(\theta^*)$ is $k \times k$.)
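The large sample results can be checked roughly by simulation. The Python sketch below draws many samples, computes the MLE in each, and compares the spread of $\sqrt{N}(\hat\theta - \theta^*)$ with $I(\theta^*)^{-1}$. It covers the exponential example ($I(\lambda^*)^{-1} = \lambda^{*2}$) and the normal model parameterized by $\theta = (\mu, \sigma^2)'$ rather than $(\mu, \sigma)'$, so that the second component of the MLE is $W_2$ and $I(\theta^*)^{-1} = \mathrm{diag}(\sigma^2, 2\sigma^4)$. The function names, parameter values ($\lambda^* = 2$, $\mu = 1$, $\sigma^2 = 4$), sample size, and number of replications are hypothetical choices for illustration only; the sketch uses only the Python standard library.

```python
import math
import random
import statistics


def simulate_exponential_mle(lam_true, n, reps, seed=0):
    """Hypothetical helper: draws of sqrt(n) * (lam_hat - lam_true), lam_hat = 1 / xbar."""
    rng = random.Random(seed)
    draws = []
    for _ in range(reps):
        xs = [rng.expovariate(lam_true) for _ in range(n)]  # exponential with rate lam_true
        lam_hat = 1.0 / statistics.fmean(xs)  # MLE: 1 / sample mean
        draws.append(math.sqrt(n) * (lam_hat - lam_true))
    return draws


def simulate_normal_mle(mu, sigma2, n, reps, seed=0):
    """Hypothetical helper: draws of sqrt(n) * (theta_hat - theta) for theta = (mu, sigma^2)."""
    rng = random.Random(seed)
    sigma = math.sqrt(sigma2)
    draws = []
    for _ in range(reps):
        xs = [rng.gauss(mu, sigma) for _ in range(n)]
        mu_hat = statistics.fmean(xs)
        w2 = sum((x - mu_hat) ** 2 for x in xs) / n  # MLE W2 divides by n, not n - 1
        draws.append((math.sqrt(n) * (mu_hat - mu), math.sqrt(n) * (w2 - sigma2)))
    return draws


def cov_matrix(pairs):
    """Sample 2x2 covariance matrix of a list of (a, b) pairs."""
    m = len(pairs)
    a_bar = sum(p[0] for p in pairs) / m
    b_bar = sum(p[1] for p in pairs) / m
    saa = sum((p[0] - a_bar) ** 2 for p in pairs) / (m - 1)
    sbb = sum((p[1] - b_bar) ** 2 for p in pairs) / (m - 1)
    sab = sum((p[0] - a_bar) * (p[1] - b_bar) for p in pairs) / (m - 1)
    return [[saa, sab], [sab, sbb]]


if __name__ == "__main__":
    lam = 2.0
    z = simulate_exponential_mle(lam, n=200, reps=1000)
    # Theory: mean near 0, variance near I(lam)^{-1} = lam^2
    print("exponential: mean", statistics.fmean(z), "variance", statistics.variance(z), "theory", lam ** 2)

    mu, s2 = 1.0, 4.0
    cov = cov_matrix(simulate_normal_mle(mu, s2, n=200, reps=1000))
    # Theory: I(theta)^{-1} = diag(sigma^2, 2 sigma^4)
    print("normal: simulated", cov, "theory", [[s2, 0.0], [0.0, 2 * s2 ** 2]])
```

Simulated moments differ from the theoretical ones through Monte Carlo error and finite-sample bias (for instance, $\hat\lambda = 1/\bar x$ is biased upward in finite samples), so only rough agreement should be expected; increasing the sample size and the number of replications tightens it.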
