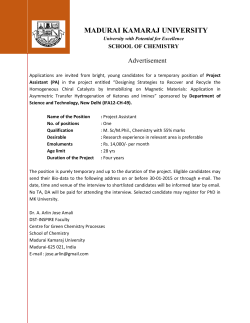

Learning Guide - Abbott Diagnostics