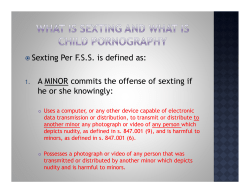

Discussion Meeting

RECENT DEVELOPMENTS IN CHILD INTERNET SAFETY

Chair: Diana Johnson MP