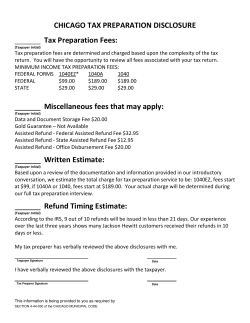

Assisted Review Guide