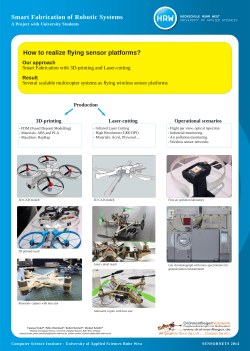

v1 - Advanced Cyber Defence Centre