
International Conference on Emerging Trends in Technology, Science and Upcoming Research in Computer Science

DAVIM, Faridabad, 25th April, 2015

ISBN: 978-81-931039-3-7

PERFORMANCE EVALUATION OF BRAIN TUMOR

DIAGNOSIS TECHNIQUES IN MRI IMAGES

V. Kala1, Dr. K. Kavitha2

1M.Phil Scholar, Computer Science, Mother Teresa Women's University, (India)

2Assistant Professor, Dept. of Computer Science, Mother Teresa Women's University, (India)

ABSTRACT

Magnetic Resonance Imaging (MRI) is widely employed in ischemic stroke diagnosis because it is quick to acquire and compatible with most life-support devices. This paper proposes an efficient approach for automated ischemic stroke detection employing segmentation, feature extraction, median filtering and classification to distinguish the region of ischemic stroke from healthy tissue in MR images. The proposed approach comprises five stages: pre-processing, segmentation, median filtering, feature extraction and classification. Early detection of ischemic stroke is presented to enhance the accuracy and efficiency of clinical practice. The experimental results are numerically evaluated against the assessment of a human expert, using the average overlap metric, average accuracy and average recall between the expert's results and those obtained with our proposed scheme.

Key Words: Classification, Ischemic Stroke, Feature Extraction, Median Filtering, MRI Images, Segmentation

I. INTRODUCTION

Generally, a stroke, also known as a Cerebrovascular Accident (CVA), is the rapid loss of brain function owing to a disturbance in the blood supply to the brain. This can be caused by ischemia induced by a blockage, or by a hemorrhage. As a result, the affected brain area cannot function, which may result in an inability to move one or more limbs on one side of the body, an inability to understand or formulate speech, or an inability to see one side of the visual field.

An ischemic stroke is sometimes treated in a hospital with a thrombolytic agent, also known as a ‘clot buster’, and some hemorrhagic strokes benefit from neurosurgery. As the average human life span has increased, stroke has become the third leading cause of death globally, behind heart disease and cancer. Risk factors for stroke include old age, cigarette smoking, high cholesterol, high blood pressure, previous stroke or Transient Ischemic Attack (TIA), atrial fibrillation, and diabetes. Our proposed scheme is an approach for the detection of stroke that locates small areas of ischemia for a precise diagnosis and classifies whether or not the MR image shows a stroke.

II. RELATED WORK

The accuracy of the brain normalization approach directly affects the accuracy of statistical analysis of functional Magnetic Resonance Imaging (MRI) data. The medial temporal lobe and cortical structures require an exact registration approach owing to high inter-subject variability. A fully automated MRI post-processing pipeline has been developed to minimize registration error in group analyses, and its superiority over two commonly used registration approaches is established by conducting comprehensive surface-to-surface distance measurements across cortical and subcortical areas.

Regions in 3-D Magnetic Resonance Images (MRI) of the brain can be labelled using protocols for manually segmenting and marking structures. For large cohorts, the expertise and time required make such an approach impractical. To achieve automation, a single segmentation can be propagated to another individual using an anatomical correspondence estimate linking the atlas image to the target image. The accuracy of the resulting target labelling has been determined, but it can potentially be improved by combining multiple segmentations using decision fusion.
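Decision fusion can take several forms, and the passage above does not say which rule is meant. As a minimal sketch, assuming per-voxel majority voting (one common fusion rule) over label maps that have already been propagated into the target image space, the combination step could look like this. The function name `majority_vote_fusion` and the toy label maps are hypothetical.

```python
import numpy as np


def majority_vote_fusion(label_maps, num_labels):
    """Fuse several propagated segmentations by per-voxel majority vote.

    Assumes every map has the same shape and uses integer labels 0..num_labels-1.
    """
    stack = np.stack(label_maps)  # shape: (n_atlases, *image_shape)
    # votes[k] counts how many atlases assign label k to each voxel
    votes = np.stack([(stack == k).sum(axis=0) for k in range(num_labels)])
    # argmax picks the most voted label; ties go to the lowest label index
    return votes.argmax(axis=0)


if __name__ == "__main__":
    a = np.array([[0, 1], [1, 1]])
    b = np.array([[0, 1], [0, 1]])
    c = np.array([[1, 1], [0, 0]])
    print(majority_vote_fusion([a, b, c], num_labels=2))
```

Majority voting treats every atlas equally; weighted schemes that trust better-matching atlases more are a common refinement, but they are not described in the text.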

Though research has furnished abundant evidence of improvements in psychological and physiological well-being from Tai Chi practice, little is known about potential links to brain structure. Using high-resolution Magnetic Resonance Images of 22 Tai Chi Chuan (TCC) practitioners and 18 controls matched for age, education and sex, that study set out to analyze the underlying anatomical correlates of long-term Tai Chi practice at two different levels of regional specificity.

The construction of average models of anatomy, as well as regression analysis of anatomical structures, are fundamental issues in medical research, for example in the analysis of brain growth and disease progression. When the underlying anatomical process can be modelled by parameters in a Euclidean space, classical statistical approaches are applicable. Recent work suggests that attempts to describe anatomical differences using flat Euclidean spaces undermine our ability to represent natural biological variability.

All areas of neuroscience that use medical imaging of the brain need to communicate their results with reference to anatomical regions. In particular, comparative morphometry and group analysis of functional and physiological data require the co-registration of brains to establish correspondences across brain structures. It is well established that linear registration of one brain image to another is inadequate for aligning brain structures, so numerous algorithms have emerged to register brain images to each other nonlinearly.

III. PROPOSED SYSTEM

In our proposed approach we overcome the problems and drawbacks of the existing approaches. Five stages are applied in our process. For feature extraction, GLCM and HOG (Histogram of Oriented Gradients) are employed, and for classification, SVM and the neuro-fuzzy ANFIS are presented. From the above process, we determine the region of ischemic stroke in MR images. MR images are more accessible, less costly and faster to obtain, particularly in critically ill patients. By using our proposed approach we can obtain high accuracy, and the overall efficiency of the system is enhanced.

3.1. Data Flow

Our proposed approach contains the following four stages (median filtering is performed within pre-processing):

· Pre-processing
· Segmentation
· Feature extraction
· Classification


3.2. Pre-Processing

In the pre-processing stage, median filters are employed to remove noise from the input MR images. It is often desirable to be able to perform some kind of noise reduction on images or signals. The median filter is a nonlinear digital filter, often used to remove noise. Such noise removal is a typical pre-processing step to improve the results of later processing. Median filtering is widely used in digital image processing.

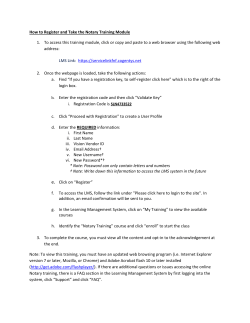

3.3. Architecture Diagram

3.4. Median Filtering

As we have seen, smoothing filters reduce noise. However, the underlying assumption is that the neighbouring pixels represent additional samples of the same value as the reference pixel, that is, they represent the same feature. At edges in the image this is clearly not true, and the features become blurred. We have employed convolution to apply weighting kernels as a neighbourhood function, which is a linear procedure. There are also nonlinear neighbourhood functions that can be applied for noise removal and that do a better job of preserving edges than simple smoothing filters. The median filter is one of them: each pixel is replaced by the median of the grey levels in its neighbourhood, so an isolated noisy pixel is discarded rather than averaged into its neighbours.
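A minimal NumPy sketch of a square median filter follows. The 3 × 3 window, the edge-replication border handling and the function name `median_filter` are illustrative assumptions, not details taken from the paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(image: np.ndarray, size: int = 3) -> np.ndarray:
    """Replace each pixel with the median of its size x size neighbourhood."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the window has a centre pixel")
    pad = size // 2
    # Replicate border pixels so the output keeps the input's shape.
    padded = np.pad(image, pad, mode="edge")
    # windows[r, c] is the size x size neighbourhood centred on pixel (r, c).
    windows = sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1)).astype(image.dtype)


if __name__ == "__main__":
    img = np.full((5, 5), 10, dtype=np.uint8)
    img[2, 2] = 255  # isolated "salt" noise pixel
    print(median_filter(img)[2, 2])
```

Because the output is a median rather than a weighted mean, the isolated bright pixel is removed entirely, and a clean step edge passes through a 3 × 3 median filter unchanged, which is the edge-preserving behaviour contrasted with linear smoothing above.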

3.5. Segmentation

Here, we extract the affected region from the input image, that is, the part of the image that contains the region of interest. The aim of segmentation is to change and simplify the representation of an input image into something that is more meaningful and easier to analyze.
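The paper does not name its segmentation algorithm. Purely as an illustration, the sketch below swaps in Otsu's global threshold, a standard thresholding method, to split an 8-bit slice into a bright candidate region and background. The names `otsu_threshold` and `segment` are hypothetical, and a real lesion segmentation would usually need further steps (for example skull stripping and morphological clean-up) that are not shown.

```python
import numpy as np


def otsu_threshold(image: np.ndarray) -> int:
    """Return the grey level t maximising between-class variance (8-bit image).

    Class 0 is pixels <= t, class 1 is pixels > t.
    """
    hist = np.bincount(image.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                # probability of class 0 for each t
    mu = np.cumsum(prob * np.arange(256))  # cumulative mean up to t
    mu_total = mu[-1]                      # global mean grey level
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / denom
    sigma_b[denom == 0] = 0.0              # thresholds leaving one class empty
    return int(np.argmax(sigma_b))


def segment(image: np.ndarray) -> np.ndarray:
    """Boolean mask of pixels brighter than the Otsu threshold."""
    return image > otsu_threshold(image)


if __name__ == "__main__":
    slice_ = np.full((4, 4), 50, dtype=np.uint8)
    slice_[:, 2:] = 200  # bright region standing in for a lesion
    print(otsu_threshold(slice_), segment(slice_).sum())
```

Otsu's method assumes a roughly bimodal histogram; on real MR slices with several tissue classes it would typically be one step among several rather than the whole segmentation.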

3.6. Feature Extraction (GLCM)

Here, we extract features using the gray-level co-occurrence matrix (GLCM), which contains information about the positions of pixels having similar gray-level values. We then compute various motion features at every point, with local temporal units separated in order to account for the direction of motion, and compute the variation between consecutive frames. These values are employed as the feature values of the video.

The GLCM is defined by

$$P_d(i,j) = n_{ij},$$

where $n_{ij}$ is the number of occurrences of the pixel-value pair $(i,j)$ lying at distance $d$ in the input image. The co-occurrence matrix $P_d$ has dimension $n \times n$, where $n$ is the number of gray levels in the input image.
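The co-occurrence matrix described above, whose entries are the pair counts n_ij for pixels at distance d, can be computed directly by counting neighbouring pixel pairs. The sketch below assumes a single offset `(dy, dx)` (for example d = 1 horizontally), integer grey levels starting at 0, and no symmetrisation. The texture measures (contrast, angular second moment, homogeneity) are common Haralick-style choices and are an assumption here, since the paper does not list which GLCM features it uses; all function names are hypothetical.

```python
import numpy as np


def glcm(image, dx=1, dy=0, levels=None):
    """Return P with P[i, j] = number of pixel pairs where a pixel of level i
    has a neighbour of level j at offset (dy, dx)."""
    image = np.asarray(image, dtype=np.intp)
    if levels is None:
        levels = int(image.max()) + 1
    rows, cols = image.shape
    # Keep only reference pixels whose offset neighbour lies inside the image.
    r0, r1 = max(0, -dy), rows - max(0, dy)
    c0, c1 = max(0, -dx), cols - max(0, dx)
    ref = image[r0:r1, c0:c1]
    nbr = image[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
    P = np.zeros((levels, levels), dtype=np.int64)
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)  # accumulate repeated pairs
    return P


def glcm_features(P):
    """Texture features computed from the normalised matrix p = P / sum(P)."""
    p = P / P.sum()
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "asm": float(np.sum(p ** 2)),  # angular second moment
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
    }


if __name__ == "__main__":
    img = np.array([[0, 0, 1, 1],
                    [0, 0, 1, 1],
                    [0, 2, 2, 2],
                    [2, 2, 3, 3]])
    P = glcm(img, dx=1, dy=0, levels=4)
    print(P)
    print(glcm_features(P))
```

Counting is done with `np.add.at` because a plain fancy-indexed `P[...] += 1` would count each repeated (i, j) pair only once.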

3.7. Classification

In this section, we classify whether the input image is a frontal or non-frontal image by employing a Support Vector Machine (SVM) classifier. SVMs, also known as support vector networks, are supervised learning models with associated learning algorithms that analyze data and recognize patterns; they are used for classification and regression analysis.

3.8. SVM Classifier

· Data setup: our dataset comprises three classes, each with N samples. The data are two-dimensional so that the source data can be plotted for visual inspection.
· Train an SVM with a linear kernel (-t 0) and find the best value of the parameter C using 2-fold cross-validation.
· After finding the best value of C, train on the full data again using that value.
· Plot the support vectors.
· Plot the decision region.

SVM maps input vectors into a higher-dimensional vector space where an optimal hyperplane is constructed. Among the many available hyperplanes, there is only one that maximizes the distance between itself and the nearest data vectors of each class. This hyperplane, which maximizes the margin, is known as the optimal separating hyperplane. The margin is defined as the sum of the distances from the hyperplane to the nearest training vectors of each class.
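The training procedure listed in 3.8 (linear kernel, C chosen by 2-fold cross-validation, then retraining on all data) can be sketched in plain NumPy. This is not LIBSVM: the `-t 0` flag belongs to LIBSVM, whereas this sketch minimises the soft-margin primal objective 0.5·||w||² + C·Σ max(0, 1 − yᵢ(w·xᵢ + b)) by full-batch subgradient descent with an assumed fixed step size and epoch count. It handles two classes labelled −1 and +1; the three-class set-up in the text would need an extension such as one-vs-rest. All function names and the synthetic data are hypothetical.

```python
import numpy as np


def train_linear_svm(X, y, C=1.0, epochs=500, lr=0.01):
    """Soft-margin linear SVM by full-batch subgradient descent (y in {-1, +1})."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # points inside the margin or misclassified
        ya = y[active][:, None]
        grad_w = w - C * (ya * X[active]).sum(axis=0)
        grad_b = -C * y[active].sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(w, b, X):
    return np.where(X @ w + b >= 0, 1, -1)


def accuracy(w, b, X, y):
    return float(np.mean(predict(w, b, X) == y))


def select_C_two_fold(X, y, Cs, seed=0):
    """Return the C with the best mean accuracy over 2-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), 2)
    best_C, best_acc = Cs[0], -1.0
    for C in Cs:
        accs = []
        for k in range(2):
            test_idx, train_idx = folds[k], folds[1 - k]
            w, b = train_linear_svm(X[train_idx], y[train_idx], C=C)
            accs.append(accuracy(w, b, X[test_idx], y[test_idx]))
        if np.mean(accs) > best_acc:
            best_C, best_acc = C, float(np.mean(accs))
    return best_C, best_acc


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    X = np.vstack([rng.normal(-2.0, 0.5, (20, 2)), rng.normal(2.0, 0.5, (20, 2))])
    y = np.array([-1] * 20 + [1] * 20)
    C, cv_acc = select_C_two_fold(X, y, [0.01, 0.1, 1.0])
    w, b = train_linear_svm(X, y, C=C)  # retrain on the full data with the chosen C
    margin = 2.0 / np.linalg.norm(w)    # margin width for the learned hyperplane
    print(C, cv_acc, accuracy(w, b, X, y), margin)
```

When the closest training vectors satisfy yᵢ(w·xᵢ + b) = 1, the margin defined above (the sum of the distances to the nearest vector of each class) equals 2/||w||, which is why the example reports that quantity. Plotting the support vectors would amount to selecting the points with yᵢ(w·xᵢ + b) ≤ 1.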


IV. EXPERIMENTAL RESULTS

Histogram of Oriented Gradients (HOG) captures edge or gradient structures that are characteristic of local shape. HOG is an image descriptor based on the image's gradient orientations. Here we extract the numerical values from HOG only; the HOG descriptor is based on dominant image edge orientations, and the image is divided into cells.
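A simplified sketch of the cell-histogram stage of HOG follows: gradients are taken with central differences, orientations are folded into 0 to 180 degrees, and each cell accumulates a magnitude-weighted orientation histogram. The 8 × 8 cell size, the 9 bins, the absence of vote interpolation and block normalisation, the single global L2 normalisation, and the function names are all assumptions for illustration; full HOG implementations normalise overlapping blocks of cells.

```python
import numpy as np


def hog_cell_histograms(image, cell=8, bins=9):
    """Magnitude-weighted histograms of unsigned gradient orientation,
    one per non-overlapping cell x cell block of pixels."""
    img = np.asarray(image, dtype=float)
    gy, gx = np.gradient(img)  # derivatives along rows (y) and columns (x)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation
    bin_idx = np.minimum((ang / (180.0 / bins)).astype(int), bins - 1)
    n_r, n_c = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_r, n_c, bins))
    for r in range(n_r):
        for c in range(n_c):
            rs = slice(r * cell, (r + 1) * cell)
            cs = slice(c * cell, (c + 1) * cell)
            hist[r, c] = np.bincount(bin_idx[rs, cs].ravel(),
                                     weights=mag[rs, cs].ravel(),
                                     minlength=bins)
    return hist


def hog_descriptor(image, cell=8, bins=9):
    """Flatten the cell histograms into one L2-normalised feature vector."""
    h = hog_cell_histograms(image, cell, bins).ravel()
    return h / (np.linalg.norm(h) + 1e-12)


if __name__ == "__main__":
    step = np.zeros((16, 16))
    step[:, 8:] = 100.0  # vertical edge: gradient points along x
    print(hog_cell_histograms(step)[..., 0])
    print(hog_descriptor(step).shape)
```

A vertical intensity edge produces gradients along x only, so all of its energy lands in the 0-degree bin; this is the sense in which HOG records the dominant edge orientation of each cell.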


V. CONCLUSION

A Computer Aided Detection (CAD) system capable of detecting ischemic stroke has been developed. We have formulated an automated approach for ischemic stroke detection in brain MR images employing segmentation, feature extraction and classification. Here we improve precision by applying classification, using SVM to detect the stroke level in the brain image; however, SVM is deficient in detection precision, so other advanced classifiers such as GMM, HMM or a feed-forward neural network may be employed instead, and thus the accuracy on the input image can be enhanced.



A REVIEW OF BENCHMARKING IN SERVICE

INDUSTRIES

Sunil Mehra1, Bhupender Singh2, Vikram Singh3

1M.Tech. Student, 2Asst. Professor, 3Associate Professor, Mechanical Engineering,

YMCA University of Science and Technology, (India)

ABSTRACT

Benchmarking is recognized as a fundamental tool for the continuous improvement of quality. The objective of this paper is to examine the service factors that contribute to the effectiveness of benchmarking in service industries. This study found that complexity and flexibility have a significant correlation with the effectiveness of benchmarking in service industries. The purpose of this paper is to understand the success factors aimed at increasing service revenue in manufacturing companies or service industries. We identify different types of success factors that improve the performance of any type of service industry.

Keywords: Benchmarking, Finding Factors, Implementing Benchmarking, Organisation, Service

I. INTRODUCTION

Benchmarking is one of the most useful tools for transferring knowledge and improvement into organisations as well as industries (Spendolini, 1992; Czuchry et al., 1995). Benchmarking is used to compare performance with other organisations and other sectors. This is possible because many business processes are basically the same from sector to sector. Benchmarking focuses on the improvement of any given business process by exploiting best practices rather than simply measuring the best performance, because best practices are the cause of best performance. Companies studying best practices have the greatest opportunity for gaining strategic, operational, and financial advantages. Benchmarking of business processes is usually done with top-performing companies in other industry sectors.

The systematic discipline of benchmarking is focused on identifying, studying, analysing, and adapting best practices and implementing the results. The benchmarking process involves comparing one's firm's performance on a set of measurable parameters of strategic importance against those firms known to have achieved the best performance on those indicators. Development of benchmarking is an iterative and ongoing process that is likely to involve sharing information with other organisations and working with them towards a satisfactory metrology. Benchmarking should be looked upon as a tool for improvement within a wider scope of customer-focused improvement activities and should be driven by customer and internal organisational needs. Benchmarking is the practice of being humble enough to admit that someone else is better at something and wise enough to learn how to match or even surpass them at it.


II. IMPLEMENTATION OF BENCHMARKING

There are five phases in the implementation of benchmarking:

2.1 Planning

Planning is the first step in implementing benchmarking in any organisation. During this phase the organisation determines which process to benchmark and against what type of organisation.

2.2 Analysis

During this phase, the performance gap between the source organisation and the receiver organisation is analysed.

2.3 Integration

This phase involves preparing the receiver organisation for the implementation of actions.

2.4 Action

This is the phase where the actions are implemented within the receiver organization.

2.5 Maturity

This phase involves continuous monitoring of the process, enables continuous learning and provides input for continuous improvement within the receiver organization.

III. SERVICE

The concept of service can be described as the transfer of value, an intangible product, from the service supplier (also termed the provider) to the customer (also termed the consumer). The process can be set in motion by a customer whose needs can be met by the supplier, or by a service supplier who offers a particular service to the customer.

Definitions of service industries usually include:


· Public transportation.
· Public utilities (telephone communication, energy service, sanitation services).
· Restaurants, hotels and motels.
· Marketing (retail food, apparel, automotive, wholesale trade, department stores).
· Finance (commercial banks, insurance, sales finance, investment).
· Personal service (amusements, laundry and cleaning, barber and beauty shops).
· Professional services (physicians, lawyers).
· Government (defense, health, education, welfare, municipal services).
· News media.

IV. LITERATURE REVIEW

4.1 Roger Moser et al., (2011),

In order to develop a benchmarking framework for supply network configuration, we draw upon insights from various theories addressing different levels: the dyadic relationship, the supply chain, and the network levels. All three levels are considered for the benchmarking of supply network configuration. The development of our benchmarking framework for supply network configuration is primarily based on the theories of relationships and networks.

4.2 Heiko Gebauer et al., (2011),

The different requirements of the service strategies described as “after-sales service providers” and “customer support service providers” influence the logistics of spare parts. Service organisations would face increasing pressure to improve their financial performance, which is compared with the corresponding values for the manufacture and distribution of the finished product within the company.

4.3 Hokey Min et al., (2011)

The benchmarking process begins with the establishment of service standards through the identification of the service attributes that comprise them, since serving customers better is the ultimate goal of benchmarking.

4.4 Panchapakesan Padma et al., (2010)

Several researchers have established that service quality influences not only the satisfaction of buyers but also their purchase intentions. Even though there are other antecedents to customer satisfaction, namely price, situation, and the personality of the buyer, service quality receives special attention from service marketers because it is within the control of the service provider. By improving service quality, its consequence, customer satisfaction, could be improved, which may in turn influence the buyer's intention to purchase the service.

4.5 Luiz Felipe Scavarda et al., (2009)

Product variety proliferation is a trend in many industry sectors worldwide. Benchmarking should be a reference or measurement standard for comparison: a performance measurement that is the standard of excellence for a specific business, and a measurable, best-in-class achievement. Benchmarking is the continuous process of measuring products, services, and practices against the toughest competitors or those organisations known as industry leaders.

4.6 Monika Koller, Thomas Salzberger, (2009)

Benchmarking is a tool that is widely used in both the manufacturing and the service industry. Compared with the manufacturing sector, benchmarking in the service sector is more difficult because of the idiosyncrasies of particular fields of application. Bhutta and Huq (1999) state that benchmarking is not only a comparative analysis; it is a process to establish the ground for creative breakthroughs and a way to move away from tradition.

4.7 Okke Braadbaart, (2007),

Benchmarking has significant potential for application in the public sector. According to Magd and Curry

(2003), benchmarking is a powerful vehicle for quality improvement and a paradigm for effectively managing

the transformation of public-sector organizations into public-sector organizations of quality. The collaborative

benchmarking literature focuses on information sharing and the positive effect this has on the quality and

quantity of information about what public sector organisations do and how well they do it.

4.8 Sameer Prasad, Jasmine Tata, (2006)

Employing an appropriate theory-building process can help us improve our precision and understanding. Both precision and understanding are important elements of benchmarking. A greater degree of precision allows a finer ability to make comparisons. Greater power provides an understanding of how to improve upon the service.

4.9 Nancy M. Levenburg, (2006)

Benchmarking is the process of comparing one's practices and procedures against those believed to be the best in the industry. While benchmarking efforts initially focused on manufacturing and logistics, the process has grown to encompass a wider array of activities, including exporting, quality goals in service systems, supply chain interfaces, employee practices and brand management. This study aims to gain insight into practices that can enable organizations to use the internet more effectively for customer service purposes.

4.10 Ashok Kumar et al., (2006)

Benchmarking has been variously defined as the process of identifying, understanding, and adapting outstanding practices from organizations anywhere in the world to help your organization improve its performance. It is an activity that looks outward to find best practice and high performance and then measures actual business operations against those goals.

V. FINDING FACTORS

With the help of this literature review, we identify different types of success factors that improve the performance of any type of service industry. Many service factors are found in service industries, and some of these factors are interrelated. The service factors are:

| Sr. No. | Factor | Author | Definition |
|---|---|---|---|
| 1 | Unique or superior service | Melton and Hartline, 2010 | Offering superior core attributes and supporting services. |
| 2 | Synergy and capabilities | Ottenbacher and Harrington, 2010 | |
| 3 | Customer involvement | Carbonell et al., 2009 | Operational outcomes and innovation volume, but no impact on competitive superiority and sales performance. |
| 4 | Technology | Lin et al., 2010 | By applying more advanced marketing information systems based on the data acquired from their customers, companies are able to create more service innovations to explore potential markets. |
| 5 | Knowledge management | Leiponen, 2006 | Collective ownership of knowledge should be promoted. |
| 6 | Culture | Liu, 2009 | Supportive culture as a construct of complementary dimensions consisting of innovative |
| 7 | Market orientation | Atuahene-Gima, 1996 | The organization-wide collection and dissemination of market information, as well as the organizational responsiveness to that information. |
| 8 | Process quality | Avlonitis et al., 2001 | This factor is important in all phases from idea generation and analysis to concept development, testing, and launch. |
| 9 | Cross-functional involvement | Storey et al., 2010 | It seems to be important in companies which rely heavily on tacit knowledge, where the codification of information is difficult. |
| 10 | Employee involvement | Ordanini and Parasuraman, 2011 | Extensive internal marketing is conducted to raise support and enthusiasm for the product. |

VI. CONCLUSION

From this review, it is seen that benchmarking is an important tool for improving the quality of products and services in manufacturing as well as service industries. The main objective of the review is to highlight the main factors in service industries, which are interrelated. These different types of success factors improve the performance of any type of service industry as well as manufacturing, and they are also useful for the implementation of benchmarking. A service entails a unique experience between the service provider and the service customer. The constellation of features and characteristics inherent in a service offering takes shape during its development. Hence, it is important to be aware of certain elements that contribute to the success of a service while designing it. Benchmarking establishes a company's true position relative to the rest, thus making it easier for the company to raise organizational energy for change and develop plans for action.

REFERENCES

[1] Heiko Gebauer, Gunther Kucza, Chunzhi Wang (2011), "Spare parts logistics for the Chinese market", Benchmarking: An International Journal, Vol. 18 No. 6, pp. 748-768.

[2] Roger Moser, Daniel Kern, Sina Wohlfarth, Evi Hartmann (2011), "Supply network configuration benchmarking: Framework development and application in the Indian automotive industry", Benchmarking: An International Journal, Vol. 18 No. 6, pp. 783-801.

[3] Sameer Prasad, Jasmine Tata (2006), "A framework for information services: benchmarking for countries and companies", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 311-323.

[4] Nancy M. Levenburg (2006), "Benchmarking customer service on the internet: best practices from family businesses", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 355-373.

[5] Monika Koller, Thomas Salzberger (2009), "Benchmarking in service marketing - a longitudinal analysis of the customer", Benchmarking: An International Journal, Vol. 16 No. 3, pp. 401-414.

[6] Okke Braadbaart (2007), "Collaborative benchmarking, transparency and performance: Evidence from The Netherlands water supply industry", Benchmarking: An International Journal, Vol. 14 No. 6, pp. 677-692.

[7] Ashok Kumar, Jiju Antony, Tej S. Dhakar (2006), "Integrating quality function deployment and benchmarking to achieve greater profitability", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 290-310.

[8] Luiz Felipe Scavarda, Jens Schaffer, Annibal José Scavarda, Augusto da Cunha Reis, Heinrich Schleich (2009), "Product variety: an auto industry analysis and a benchmarking study", Benchmarking: An International Journal, Vol. 16 No. 3, pp. 387-400.

[9] Panchapakesan Padma, Chandrasekharan Rajendran, Prakash Sai Lokachari (2010), "Service quality and its impact on customer satisfaction in Indian hospitals: Perspectives of patients and their attendants", Benchmarking: An International Journal, Vol. 17 No. 6, pp. 807-841.

[10] Hokey Min, Hyesung Min (2011), "Benchmarking the service quality of fast-food restaurant franchises in the USA: A longitudinal study", Benchmarking: An International Journal, Vol. 18 No. 2, pp. 282-300.

[11] G.M. Rynja, D.C. Moy (2006), "Laboratory service evaluation: laboratory product model and the supply chain", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 324-336.

[12] Heiko Gebauer, Thomas Friedli, Elgar Fleisch (2006), "Success factors for achieving high service revenues in manufacturing companies", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 374-386.

[13] Jo Ann M. Duffy, James A. Fitzsimmons, Nikhil Jain (2006), "Identifying and studying "best-performing" services: An application of DEA to long-term care", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 232-251.

[14] Li-Jen Jessica Hwang, Andrew Lockwood (2006), "Understanding the challenges of implementing best practices in hospitality and tourism SMEs", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 337-354.

[15] Lin Peter Wei-Shong, Mei Albert Kuo-Chung (2006), "The internal performance measures of bank lending: a value-added approach", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 272-289.

[16] Mahmoud M. Nourayi (2006), "Profitability in professional sports and benchmarking: the case of NBA franchises", Benchmarking: An International Journal, Vol. 13 No. 3, pp. 252-271.

[17] Sarath Delpachitra (2008), "Activity-based costing and process benchmarking: An application to general insurance", Benchmarking: An International Journal, Vol. 15 No. 2, pp. 137-147.

[18] A.A. Hervani, M.M. Helms, J. Sarkis (2005), "Performance measurement for green supply chain management", Benchmarking: An International Journal, Vol. 12 No. 4, pp. 330-353.

[19] P. Kyrö (2003), "Revising the concept of benchmarking", Benchmarking: An International Journal, Vol. 10 No. 3, pp. 210-225.

[20] T.M. Simatupang, R. Sridharan (2004), "Benchmarking supply chain collaboration, an empirical study", Benchmarking: An International Journal, Vol. 11 No. 5, pp. 484-503.


IDENTIFICATION OF BARRIERS AFFECTING

IMPLEMENTATION OF 5S

Sunil Mehra1, Rajesh Attri2, Bhupender Singh3

1

M.Tech. Student, 2,3Asst. Professor, Mechanical Engineering,

YMCA University of Science and Technology, (India)

ABSTRACT

The 5S methodology is one of the best tools for generating a change in attitude among employees and serves as a way to engage them in important workplace activities. However, implementing this universally accepted and challenging system is by no means easy, as it requires establishing new cultures, changing attitudes and creating good work environments. Certain barriers affect the implementation of 5S in manufacturing organisations. The aim of the present work is to identify the different types of barriers that affect the implementation of 5S in manufacturing organisations.

Keywords: 5S, Barriers, Implementation, Manufacturing Organisation

I. INTRODUCTION

The 5S methodology is a very suitable way to start the process of continuous improvement (Carmen Jaca et al., 2013). It is one of the best tools for generating a change in attitude among workers and serves as a way to engage them in improvement activities in the workplace (Gapp et al., 2008). This methodology was developed in Japan by Hirano (1996). The name 5S comes from the first letters of five Japanese words, Seiri, Seiton, Seiso, Seiketsu and Shitsuke (Ramos Alonso 2002), whose rough English equivalents are Sort, Set in order (Straighten), Shine, Standardize and Sustain. Hirano establishes that a Lean culture requires a change in people’s mentality, and that applying 5S is a prerequisite for implementing other improvement actions and a basic step towards eliminating waste. In Japanese culture, each word that makes up 5S means the following (Ramos Alonso 2002):

1. Seiri (Sort) – Identify what is needed to do daily work, what is not needed, and what can be improved.

2. Seiton (Straighten/Set in Order) – Organise the work area with the best locations for the needed items.

3. Seiso (Shine) – Clean, and remove the causes of unorganized, unproductive and unsafe work. Create measures and preventive maintenance to ensure the Shine step is maintained.

4. Seiketsu (Standardize) – Provide procedures to ensure understanding of the process. This S supports the first three S’s. Keep using best practices.

5. Shitsuke (Sustain) – Set up the system to ensure the integrity of the process and build it so that improvement is continuous.


5S can ultimately be applied to any work area, in and outside manufacturing. The same techniques apply to any

process including those in the office (Liker et al., 2004).

The main objective of the current research is to identify the barriers in the implementation of 5S in Indian

manufacturing organizations.

II. BENEFITS OF 5S

• 5S improves work efficiency, safety and productivity.

• A clean and organised workplace is safer: it decreases the possibility of injuries occurring.

• 5S increases product quality and process quality.

• 5S motivates and involves your employees.

• 5S organizes your whole organisation as well as the workplace.

• 5S removes clutter from the workspace.

III. IMPLEMENTATION OF 5S

The 5S implementation requires commitment from both top management and everyone in the organisation. The 5S practice requires an investment of time and, if properly implemented, has a huge impact on organisational performance (Ho 1999a; Liker 2004; Liker and Hoseus 2008).

The effective implementation of the 5S method is the responsibility of the management and the entire team of employees. The implementation should be carried out after prior training, once staff have been made aware of the validity and effectiveness of the method.

Literature review and the experiences of managers and academicians reveal that implementing 5S is not an easy task by any means, as it requires establishing new cultures, changing attitudes, creating good work environments and shifting responsibility to every employee of the organisation. The main purpose of implementing 5S is to achieve better quality and safety.

IV. IDENTIFICATION OF BARRIERS

4.1 Lack of Top Management Commitment

Top management should control and support continuous improvement activities and relate them to business targets and goals. Top management support and commitment is necessary for the success of any strategic program (Hamel & Prahalad, 1989; Zhu & Sarkis, 2007). Lack of commitment from top management is a chief barrier to the successful adoption of green business practices (Mudgal et al., 2010). Without top management commitment, no quality initiative can succeed. Top management must be convinced that registration and certification will enable the organization to demonstrate to its customers a visible commitment to quality. Top management should provide evidence of its commitment to the development and implementation of the quality management system and continually improve its effectiveness. The lack of management support is attributed to management not completely understanding the true goal of implementing 5S (Chan et al., 2005; Rodrigues and Hatakeyama, 2006; Attri et al., 2012).


4.2 Financial Constraints

Financial constraints are a key barrier to the implementation of 5S. Information and technology systems require substantial funds, and without them implementing 5S is not possible in the present environment. Funds are needed to institute training programs, provide quality resources, and pay external consultants, auditors and certification fees. A lack of financial support also affects certification programs. 5S improves operational efficiency and customer service, provides an ability to focus on core business objectives and provides greater flexibility (Barve et al., 2007). If an organization has insufficient financial resources, implementing 5S will not fit within its budget.

4.3 Lack of Awareness of 5S

A major barrier to 5S seen in Indian organisations is a lack of awareness of the benefits of 5S. A lack of awareness of the economic and agility benefits of 5S could be a major factor in resistance to the change to 5S. In manufacturing organisations, a lack of awareness of 5S prevents the organisation from improving performance and work efficiency. If employees do not properly understand 5S, they will not achieve their objectives and goals; better understanding will help in the implementation of 5S. Thus, lack of awareness of 5S is a major barrier to its implementation.

4.4 Lack of Strategic Planning of 5S

Strategic planning is the identification of 5S goals and the specification of a long-term plan for managing them. Strategic planning is of paramount importance for any new concept to be institutionalized and incorporated into routine business.

4.5 Lack of Employee Commitment

Committed employees check the deadlines and results of continuous improvement activities and spend time helping with root-cause problem solving and standardization. They have good communication skills and sufficient knowledge of 5S implementation, and they have confidence in 5S implementation and its results. Without employee commitment, no quality initiative can succeed.

4.6 Resistance to Change and Adoption

A chief barrier seen in the implementation of 5S is resistance to change, human nature being a fundamental barrier. To attenuate this resistance, employees at all levels of the organization must be educated about the goals of 5S implementation well in time (Khurana, 2007). Employees’ commitment to change programmes is essential, given that they actually execute the implementation activities (Hansson et al., 2003).

4.7 Lack of Cooperation/Teamwork

The success of any type of business relies on effective teamwork and collaboration among employees at all levels of the organisation. When employees fail to work together as a team, business initiatives and goals become more difficult to attain, and the workplace environment can become negative and disruptive.

4.8 Lack of Education and Training

Employees of an organization must be properly and sufficiently educated and trained. A lack of training also affects the implementation of 5S: without proper knowledge, employees will not be aware of the work culture of this quality program, and they may hold many misconceptions about it. According to Mital et al. (1999), there is a dire need to train workers in manufacturing organizations and thereby improve the overall effectiveness and efficiency of such organizations. A long-term educational and training programme should be designed and implemented to train employees, so that human resources can be reallocated to jobs requiring advanced technical expertise (Hung and Chu, 2006).

4.9 Lack of Motivation

The word motivation is derived from the Latin word ‘movere’, which means to move (Kretiner, 1998). It is an inner drive and intention that causes a person to do something or act in a certain way (Fuhrmann, 2006). Employee motivation is a major factor in the success or failure of any organization (Barrs, 2005). Employees are the bridge to competitiveness, so organizations must invest in effective strategies to motivate them (Latt, 2008). Motivation changes an employee’s behavior towards work from negative to positive. Moreover, without motivated employees, profit, products, services, morale and productivity suffer.

4.10 Inability to Change Organisational Culture

The organisational culture provides the rules of behavior and attitude. Organisational culture also motivates employees and helps leaders accelerate strategy implementation in their organisation. Top management must be able to change the organisational culture to improve performance and work efficiency.

4.11 Non-Clarity of Organisation Policy and 5S Programme

Managers and employees may lack clarity about organisation policy and the objectives of 5S. Managers need sufficient technical knowledge and skills to manage their employees and to clarify organisation policy for achieving their goals and objectives.

4.12 Lack of Communication

Employees often do not communicate the results of continuous improvement, the activities being undertaken, the people who took part in the activities, the objectives and the next steps. Communication is essential in any organization. Departments must communicate with one another to obtain information, so relations between departments should be good; otherwise the effectiveness of the organization will suffer. Lack of communication also results in the non-participation of employees. An effective communication channel is required in the organization.

4.13 No Proper Vision and Mission

For good implementation of 5S, an organization must have a proper vision and mission. An organization without an aim will not be able to take advantage of the quality program. An organization should be clear about why it is implementing 5S and what its objectives or goals are.

4.14 Lack of Leadership

Leadership relating to quality is the ability to inspire people to make a total, willing and voluntary commitment to accomplishing or exceeding organizational goals. Leadership establishes unity of purpose for the internal environment of the organization. 5S may fail due to a lack of leadership.


4.15 Conflict with Other Quality Management Systems

A quality management system is a technique used to communicate with employees. Each employee has a different opinion regarding the quality management system, so conflicts arise among employees. The rules and regulations also vary from one quality system to another.

V. CONCLUSION

The present study concludes that 5S is an important tool for organizing the whole organization in a systematic manner. 5S satisfies both the organization and its customers. Implementation of the 5S method is the responsibility of the management and the entire team of employees. In this study we identify the different barriers that hinder the implementation of 5S in an organization. Addressing these barriers will help improve performance and efficiency in any type of organization. These barriers are independent of each other, so overcoming each of them can help improve quality and performance.

REFERENCES

[1]. Ahuja, I., & Khamba, J. (2008). Strategies and success factors for overcoming challenges in TPM implementation in Indian manufacturing industry. Journal of Quality in Maintenance Engineering, 14(2), 123-147.

[2]. Bain, N. (2010). The consultant guide to successfully implementing 5S. Retrieved August 3, 2010, from http://www.leanjourney.ca/Preview/Preview-TheConsultantsGuideTo5S.pdf

[3]. Attri, R., N. Dev, and V. Sharma. 2013. “Interpretive Structural Modelling (ISM) Approach: An Overview.” Research Journal of Management Sciences 2 (2): 3–8.

[4]. Achanga, P., E. Shehab, R. Roy, and G. Nelder. 2006. “Critical Success Factors for Lean Implementation within SMEs.” Journal of Manufacturing Technology Management 17 (4): 460–471.

[5]. Ablanedo-Rosas, J., B. Alidaee, J. C. Moreno, and J. Urbina. 2010. “Quality Improvement Supported by the 5S, an Empirical Case Study of Mexican Organisations.” International Journal of Production Research 48 (23): 7063–7087.

[6]. Anand, G., & Kodali, R. (2010). Development of a framework for implementation of lean manufacturing systems. International Journal of Management Practice, 4(1), 95–116.

[7]. Dahlgaard, J.J., & Dahlgaard-Park, S.M. (2006). Lean production, six sigma quality, TQM and company culture. The TQM Magazine, 18(3), 263–281.

[8]. Hospital. (2001). Report on the 5S training in provincial hospitals of three convergence sites. Unpublished report: Negros Oriental Provincial Hospital.

[9]. Kumar, S., & Harms, R. (2004). Improving business processes for increased operational efficiency: a case study. Journal of Manufacturing Technology Management, 15(7), 662–674.

[10]. Ramlall, S. (2004). A Review of Employee Motivation Theories and their Implications for Employee Retention within Organizations. The Journal of American Academy of Business, 52-63.

[11]. Raj, T., Shankar, R. and Suhaib, M. (2009) ‘An ISM approach to analyse interaction between barriers of transition to Flexible Manufacturing System’, Int. J. Manufacturing Technology and Management, Vol.

[12]. Raj T. and Attri R., Identification and modelling of barriers in the implementation of TQM, International Journal of Productivity and Quality Management, 28(2), 153-179 (2011).


[13]. Attri R., Grover S., Dev N. and Kumar D., An ISM approach for modelling the enablers in the implementation of Total Productive Maintenance (TPM), International Journal System Assurance Engineering and Management, DOI: 10.1007/s13198-012-0088-7 (2012).

[14]. Ab Rahman, M.N., et al., Implementation of 5S Practices in the Manufacturing Companies: A Case Study. American Journal of Applied Sciences, 2010. 7(8): p. 1182-1189.

[15]. Hough, R. (2008). 5S implementation methodology. Management Services, 35(5), 44-45. Retrieved from Academic Search Complete database.

[16]. Gapp, R., R. Fisher, and K. Kobayashi. 2008. “Implementing 5S within a Japanese Context: An Integrated Management System.” Management Decision 46 (4): 565–579.

[17]. Hirano, H. 1996. 5S for Operators. 5 Pillars of the Visual Workplace. Tokyo: Productivity Press.

[18]. Ramos Alonso, L. O. 2002. “Cultural Impact on Japanese Management. An Approach to the Human Resources Management.” [La incidencia cultural en el management japonés. Una aproximación a la gestión de los recursos humanos]. PhD diss., Universidad de Valladolid, Valladolid, Spain.

[19]. Ho, S. K. M. 1998. “5-S Practice: A New Tool for Industrial Management.” Industrial Management & Data Systems 98 (2): 55–62.

[20]. Liker, J.K., 2004. The Toyota way: fourteen management principles from the world’s greatest manufacturer. New York: McGraw-Hill.

[21]. Liker, J.K. and Hoseus, M., 2008. Toyota culture: the heart and soul of the Toyota way. New York: McGraw-Hill.


VARIOUS WAVELET ALGORITHMS FOR IRIS

RECOGNITION

R.Subha1, Dr. M.Pushparani2

1

Research Scholar, Mother Teresa Women’s University, Kodaikanal

2

Professor and Head, Department of Computer Science,

Mother Teresa Women’s University, Kodaikanal (India)

ABSTRACT

Individual identification has become a need of modern life. Recognition must be fast, automatic and infallible. Biometrics has emerged as a strong alternative to traditional ways of identifying a person, and biometric identification can be made fast and automatic and is already foolproof. Among biometrics, iris recognition has emerged as a strong way of identifying any person. Iris recognition is one of the newer biometric technologies used for personal identification, and it is one of the most reliable and widely used biometric techniques available. In general, a typical iris recognition method includes localization, normalization and matching using traditional and statistical methods. Each method has its own strengths and limitations. In this paper, we compare the performance of various wavelets for iris recognition, namely the complex wavelet transform, the Gabor wavelet and the discrete wavelet transform.

Keywords: Iris Recognition, Complex Wavelets, Gabor Wavelets, Discrete Wavelet Transform

I. INTRODUCTION

Biometrics is the science of recognizing a person based on physical or behavioral characteristics. Commonly used biometric features include speech, fingerprint, face, voice, hand geometry, signature, DNA, palm, iris and retina; future biometric identification methods include vein pattern, body odor, ear shape and body salinity (salt) identification. Of all biometric systems, iris biometrics is the most suitable, as the iris cannot be stolen or easily altered by any person. The human iris, the annular region between the pupil and the white sclera, has an extraordinary structure.

Fig 1: The Human Eye Structure

The iris begins to form in the third month of gestation, and the structures creating its pattern are largely complete by the eighth month, although pigment accretion can continue in the first postnatal years. Its complex pattern can contain many distinctive features such as arching ligaments, furrows, ridges, crypts, rings, corona and freckles. These visible characteristics, generally called the texture of the iris, are unique to each subject.


II. OVERVIEW OF THE IRIS RECOGNITION SYSTEM

Biometrics is an automated method of identifying a person or verifying the identity of a person based on a physiological or behavioral characteristic. Examples of physiological characteristics include hand or finger images, facial characteristics and the iris. Image processing techniques can be employed to extract the unique iris pattern from a digitized image of the eye and encode it into a biometric template, which can be stored in a database.

The iris, being a protected internal organ whose random texture is highly reliable and stable throughout life, can serve as a kind of living password that one need not remember but always carries along. Every iris is distinct: even the two irises of the same individual differ, and the irises of twins are different. Iris patterns are formed before birth and do not change over the course of a lifetime. The biometric template contains an objective mathematical representation of the unique information stored in the iris and allows comparisons to be made between templates. When a person wishes to be identified by an iris recognition system, their eye is first photographed and a template is created for their iris region. This template is then compared with the templates stored in a database until either a matching template is found and the subject is identified, or no match is found and the subject remains unidentified.
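The identification procedure described above, comparing a new template against stored templates until a match is found or the database is exhausted, can be sketched as follows. This is a minimal sketch under stated assumptions, not the paper's implementation: templates are assumed to be equal-length bit sequences, the dissimilarity measure is assumed to be the fractional Hamming distance, and the function names `fractional_hamming` and `identify` and the acceptance threshold of 0.32 are hypothetical choices.

```python
from typing import Dict, Optional, Sequence


def fractional_hamming(a: Sequence[int], b: Sequence[int]) -> float:
    """Fraction of bit positions at which two equal-length templates differ."""
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def identify(probe: Sequence[int], database: Dict[str, Sequence[int]],
             threshold: float = 0.32) -> Optional[str]:
    """Scan stored templates in order and return the first subject whose
    template lies within `threshold` of the probe (assumed value), or None
    if no stored template matches and the subject remains unidentified."""
    for subject_id, stored in database.items():
        if fractional_hamming(probe, stored) <= threshold:
            return subject_id  # matching template found: subject identified
    return None  # database exhausted: no match


# Toy 8-bit templates (real iris codes are far longer).
db = {"alice": [1, 0, 1, 1, 0, 0, 1, 0], "bob": [0, 1, 0, 0, 1, 1, 0, 1]}
match = identify([1, 0, 1, 1, 0, 1, 1, 0], db)
```

Here the probe differs from the stored "alice" template in one of eight bits (distance 0.125), so the search stops at that entry; an all-zero probe is at distance 0.5 from both entries and is left unidentified.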

III. VARIOUS WAVELET TRANSFORMS

3.1 Complex Wavelets

Complex wavelet transforms use complex-valued filtering that decomposes real or complex signals into real and imaginary parts in the transform domain. The real and imaginary coefficients are used to compute amplitude and phase information, which is exactly the type of information needed to accurately describe the energy localization of oscillating functions. Here, a complex frequency B-spline wavelet is used for iris feature extraction.
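As a hedged illustration of how complex-valued filtering yields amplitude and phase, the sketch below filters one row of a normalised (unwrapped) iris image with a complex frequency B-spline wavelet. It uses one common parameterisation of that wavelet, ψ(t) = √fb · sinc(fb·t/m)^m · e^(2πi·fc·t); the spline order m = 1, bandwidth fb = 1.5, centre frequency fc = 1.0, the scale, the support width and the synthetic input row are all assumptions, not the settings used in the paper.

```python
import numpy as np


def fbsp_wavelet(t, m=1, fb=1.5, fc=1.0):
    """Complex frequency B-spline wavelet (assumed parameters m, fb, fc):
    psi(t) = sqrt(fb) * sinc(fb * t / m)**m * exp(2j*pi*fc*t),
    where np.sinc(x) = sin(pi*x) / (pi*x)."""
    return np.sqrt(fb) * np.sinc(fb * t / m) ** m * np.exp(2j * np.pi * fc * t)


def complex_wavelet_features(signal, scale=4.0, half_width=8.0, **wavelet_params):
    """Correlate a real 1-D signal with the wavelet at a single scale and
    return the amplitude and phase of the complex coefficients."""
    x = np.asarray(signal, dtype=float)
    # Sample psi(t / scale) at integer sample offsets spanning [-half_width, half_width].
    n = int(half_width * scale)
    t = np.arange(-n, n + 1) / scale
    psi = fbsp_wavelet(t, **wavelet_params) / np.sqrt(scale)
    # Correlating with psi equals convolving with the conjugated, time-reversed wavelet.
    coeffs = np.convolve(x, np.conj(psi[::-1]), mode="same")
    return np.abs(coeffs), np.angle(coeffs)


# Hypothetical input: one 256-sample row of a normalised iris image.
rng = np.random.default_rng(0)
row = np.sin(np.linspace(0, 12 * np.pi, 256)) + 0.1 * rng.standard_normal(256)
amplitude, phase = complex_wavelet_features(row)
```

Each sample of the row gets one complex coefficient, from which an amplitude (non-negative) and a phase (in [−π, π]) are derived; repeating this over several scales and rows would give the coefficient vectors that the matching stage compares.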

3.1.1 Result

The iris templates are matched using different angles (210, 240, 280, 320 and 350 degrees), and it is observed that the matching percentage increases as the angle increases; the best match, 93.05%, is observed at 350 degrees. Further, when eyelids and eyelashes are detected, the iris image is cropped and an iris template is generated for matching; the results obtained are better than the previous results, with a matching score of 95.30%.

3.2 Gabor Wavelet

The main idea of this method is as follows: first, we construct a two-dimensional Gabor filter and use it to filter the images; we then take the phase information and code it into 2048 bits, i.e. 256 bytes. In image processing, a Gabor filter, named after Dennis Gabor, is a linear filter used for edge detection. The frequency and orientation representations of Gabor filters are similar to those of the human visual system, and they have been found to be particularly appropriate for texture representation and discrimination. In the spatial domain, a 2D Gabor filter is a Gaussian kernel function modulated by a sinusoidal plane wave. Gabor filters are self-similar: all filters can be generated from one mother wavelet by dilation and rotation.

Its impulse response is defined by a harmonic function multiplied by a Gaussian function. Because of the multiplication-convolution property (convolution theorem), the Fourier transform of a Gabor filter's impulse response is the convolution of the Fourier transform of the harmonic function and the Fourier transform of the Gaussian function. The filter has a real and an imaginary component representing orthogonal directions. The two components may be formed into a complex number or used individually.

Gabor filters are directly related to Gabor wavelets, since they can be designed for a number of dilations and rotations. However, in general, expansion is not applied for Gabor wavelets, since this requires computation of bi-orthogonal wavelets, which may be very time-consuming. Therefore, a filter bank consisting of Gabor filters with various scales and rotations is usually created. The filters are convolved with the signal, resulting in a so-called Gabor space. This process is closely related to processes in the primary visual cortex. Jones and Palmer showed that the real part of the complex Gabor function is a good fit to the receptive field weight functions found in simple cells in a cat's striate cortex.
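The Gabor filtering and phase coding described above can be sketched as follows. This is an illustrative sketch, not the authors' implementation. The kernel is the standard complex 2D Gabor form (a Gaussian envelope multiplied by a complex sinusoid along an axis rotated by θ), and each complex response is quantised to two bits, the signs of its real and imaginary parts, as in Daugman-style iris codes. The 64 × 512 normalised iris size, the kernel size, wavelength, orientation and σ, and the 8 × 128 sampling grid are assumptions, chosen so that 1024 responses × 2 bits give the 2048-bit (256-byte) code mentioned in the text.

```python
import numpy as np


def gabor_kernel(size, wavelength, theta, sigma, gamma=1.0):
    """Complex 2-D Gabor kernel: Gaussian envelope times a complex sinusoid
    travelling along the axis rotated by `theta` (radians)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2 * sigma ** 2))
    carrier = np.exp(2j * np.pi * x_r / wavelength)
    return envelope * carrier


def phase_code(norm_iris, kernel, rows, cols):
    """Filter the image at each (row, col) sample point and quantise the phase of
    the complex response into 2 bits: sign of real part, sign of imaginary part."""
    k = kernel.shape[0] // 2
    # Wrap padding suits the circular angular axis (columns); it is also applied
    # to the radial axis (rows) here purely for simplicity.
    padded = np.pad(norm_iris.astype(float), k, mode="wrap")
    bits = []
    for r in rows:
        for c in cols:
            patch = padded[r:r + 2 * k + 1, c:c + 2 * k + 1]  # centred on (r, c)
            z = np.sum((patch - patch.mean()) * np.conj(kernel))  # remove DC, then filter
            bits.extend([int(z.real >= 0), int(z.imag >= 0)])
    return np.array(bits, dtype=np.uint8)


# Hypothetical 64 x 512 normalised iris image sampled on an 8 x 128 grid.
rng = np.random.default_rng(1)
iris = rng.integers(0, 256, size=(64, 512))
kernel = gabor_kernel(size=15, wavelength=8.0, theta=0.0, sigma=3.0)
code = phase_code(iris, kernel, rows=range(4, 64, 8), cols=range(0, 512, 4))
packed = np.packbits(code)  # 2048 bits -> 256 bytes
```

A filter bank, as the text describes, would repeat `phase_code` with kernels at several wavelengths and orientations; a single kernel is used here only to keep the example short.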

3.2.1 Result

We use Daugman's method for iris region segmentation and the Gabor wavelet for feature extraction. Finally, in the identification stage, we calculate the Hamming distance between a test image and each training image; the test image is assigned to the class of the training image with the smallest distance. The recognition rate is 96.5%.
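The identification stage above assigns the test image to the class of the training template with the smallest Hamming distance. A minimal sketch, assuming templates are equal-length bit arrays and the training set is a list of (class label, template) pairs; the function names and the toy 8-bit templates are hypothetical.

```python
import numpy as np


def hamming_distance(a, b):
    """Fractional Hamming distance between two equal-length bit arrays."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("templates must have the same shape")
    return np.count_nonzero(a ^ b) / a.size


def classify(test_code, training_set):
    """Return (label, distance) of the training template nearest to test_code."""
    distances = [(label, hamming_distance(test_code, code)) for label, code in training_set]
    return min(distances, key=lambda item: item[1])


training = [("class_A", [0, 0, 1, 1, 0, 1, 0, 1]),
            ("class_B", [1, 1, 0, 0, 1, 0, 1, 0])]
label, dist = classify([0, 0, 1, 1, 0, 1, 1, 1], training)
```

The test code differs from the class_A template in one bit (distance 0.125) and from class_B in seven (0.875), so it is assigned to class_A.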

3.3 The Discrete Wavelet Transform

Computing wavelet coefficients at every possible scale requires a considerable amount of work and generates a great deal of data. That is why we choose only a subset of scales and positions at which to make our calculations. It turns out, rather remarkably, that if we choose scales and positions based on powers of two (so-called dyadic scales and positions), our analysis is much more efficient and just as accurate.
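The dyadic idea above can be illustrated with a multi-level 2D discrete wavelet transform. The paper does not name the wavelet used, so this sketch assumes the orthonormal Haar wavelet, the simplest dyadic case, applied to a hypothetical 64 × 512 normalised iris image. Each level halves both dimensions and only the approximation is decomposed further, so the total number of coefficients equals the number of pixels, and because the transform is orthonormal the signal energy is preserved; this is one concrete sense in which dyadic sampling is efficient without losing information.

```python
import numpy as np


def haar_dwt2(image, levels):
    """Multi-level 2-D Haar DWT on dyadic scales.

    Returns the final approximation and a list of (LH, HL, HH) detail
    sub-bands, finest level first. Sub-band naming conventions vary between texts.
    """
    approx = np.asarray(image, dtype=float)
    details = []
    s = np.sqrt(2.0)
    for _ in range(levels):
        if approx.shape[0] % 2 or approx.shape[1] % 2:
            raise ValueError("image dimensions must be divisible by 2**levels")
        # Low-pass and high-pass along axis 0 (pairs of adjacent rows), downsampled by 2.
        lo = (approx[0::2, :] + approx[1::2, :]) / s
        hi = (approx[0::2, :] - approx[1::2, :]) / s
        # The same along axis 1 (pairs of adjacent columns).
        ll = (lo[:, 0::2] + lo[:, 1::2]) / s
        lh = (lo[:, 0::2] - lo[:, 1::2]) / s
        hl = (hi[:, 0::2] + hi[:, 1::2]) / s
        hh = (hi[:, 0::2] - hi[:, 1::2]) / s
        details.append((lh, hl, hh))
        approx = ll  # only the approximation is decomposed at the next scale
    return approx, details


rng = np.random.default_rng(2)
iris = rng.random((64, 512))  # hypothetical normalised iris image
approx, details = haar_dwt2(iris, levels=3)
n_coeffs = approx.size + sum(band.size for level in details for band in level)
```

The detail sub-bands at every level correspond to the different frequency resolution planes; how the authors turn those planes into an iris signature is not specified here.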

3.3.1 Result

The technique developed here uses all the frequency resolution planes of the Discrete Wavelet Transform (DWT). These frequency planes provide abundant texture information present in an iris at different resolutions. The accuracy improves to 98.98%, and with the proposed method the false acceptance rate (FAR) and false rejection rate (FRR) are reduced to 0.0071% and 1.0439%, respectively.
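FAR and FRR, as quoted above, both depend on the decision threshold. A minimal sketch of how they are computed from matching scores, assuming the scores are distances (smaller means a closer match) and a comparison is accepted when its score is at or below the threshold; the function name, the score lists and the threshold are made-up illustrations, not data from the paper.

```python
import numpy as np


def far_frr(genuine_scores, impostor_scores, threshold):
    """FAR and FRR in percent for distance scores, accepting when score <= threshold."""
    genuine = np.asarray(genuine_scores, dtype=float)
    impostor = np.asarray(impostor_scores, dtype=float)
    far = 100.0 * np.mean(impostor <= threshold)  # impostor comparisons wrongly accepted
    frr = 100.0 * np.mean(genuine > threshold)    # genuine comparisons wrongly rejected
    return far, frr


far, frr = far_frr([0.10, 0.20, 0.35, 0.25], [0.45, 0.30, 0.50, 0.48, 0.47], threshold=0.32)
```

With these toy scores one of five impostor comparisons is accepted (FAR = 20%) and one of four genuine comparisons is rejected (FRR = 25%). Lowering the threshold reduces FAR at the cost of a higher FRR, which is why the two rates are reported together.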

IV. CONCLUSION

In this paper, we compare the performance of various wavelets for iris recognition, namely the complex wavelet transform, the Gabor wavelet and the discrete wavelet transform. Using the complex wavelet, different coefficient vectors are calculated, and a minimum distance classifier is used for final matching: the smaller the distance, the closer the match. The results obtained with complex wavelets are better than those obtained with simple wavelets, because a complex wavelet provides amplitude and phase as well as real and imaginary coefficients, and all of these parameters can be compared for iris matching. 2D Gabor wavelets have the highest recognition rate, because the iris pattern may be rotated and 2D Gabor wavelets have rotation invariance. However, 2D Gabor wavelets have high computational complexity and need more time. The discrete wavelet transform, used for iris signature formation, gives better and more reliable results.

REFERENCES

[1]. Biometrics: Personal Identification in a Networked Society, A. Jain, R. Bolle and S. Pankanti, eds. Kluwer, 1999.


[2]. D. Zhang, Automated Biometrics: Technologies and Systems. Kluwer, 2000. Anil Jain, Introduction to Biometrics. Michigan State University, East Lansing, MI.

[3]. L. Ma, T. Tan, Yunhong Wang, and D. Zhang. Personal identification based on iris texture analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 25, no. 12, 2003.

[4]. John Daugman. Recognizing persons by their iris patterns. Cambridge University, Cambridge, UK.

[5]. J. Daugman, "Demodulation by Complex-Valued Wavelets for Stochastic Pattern Recognition," Int'l J. Wavelets, Multiresolution and Information Processing, vol. 1, no. 1, pp. 1-17, 2003.

[6]. W. Boles and B. Boashash, "A Human Identification Technique Using Images of the Iris and Wavelet Transform," IEEE Trans. Signal Processing, vol. 46, no. 4, pp. 1185-1188, 1998.

[7]. R. Wildes, J. Asmuth, G. Green, S. Hsu, R. Kolczynski, J. Matey, and S. McBride, "A Machine-Vision System for Iris Recognition," Machine Vision and Applications, vol. 9, pp. 1-8, 1996.

[8]. Makram Nabti and Bouridane, "An effective iris recognition system based on wavelet maxima and Gabor filter bank", IEEE trans. on iris recognition, 2007.

[9]. Narote et al., "An iris recognition based on dual tree complex wavelet transform", IEEE trans. on iris recognition, 2007.

[10]. Institute of Automation, Chinese Academy of Sciences. Database of CASIA iris image [EB/OL].

[11]. L. Masek, "Recognition of Human Iris Patterns for Biometric Identification", The University of Western Australia, 2003.

[12]. N. G. Kingsbury, "Image processing with complex wavelets," Philos. Trans. R. Soc. London A, Math. Phys. Sci., vol. 357, no. 3, pp. 2543-2560, 1999.

[13]. Vijay M. Mane, Gaurav V. Chalkikar and Milind E. Rane, "Multiscale Iris Recognition System", International Journal of Electronics and Communication Engineering & Technology (IJECET), Volume 3, Issue 1, 2012, pp. 317-324, ISSN Print: 0976-6464, ISSN Online: 0976-6472.

[14]. Darshana Mistry and Asim Banerjee, "Discrete Wavelet Transform using Matlab", International Journal of Computer Engineering & Technology (IJCET), Volume 4, Issue 2, 2012, pp. 252-259, ISSN Print: 0976-6367, ISSN Online: 0976-6375.

[15]. Sayeesh and Dr. Nagaratna P. Hegde, "A comparison of multiple wavelet algorithms for iris Recognition", International Journal of Computer Engineering and Technology (IJCET), ISSN 0976-6367 (Print), ISSN 0976-6375 (Online), Volume 4, Issue 2, March - April (2013), © IAE


HIDING THE DATA USING STEGANOGRAPHY

WITH DIGITAL WATERMARKING

1

G.Thirumani Aatthi, 2A.Komathi

1

Research Scholar, Department of Computer Science & Information Technology,

Nadar Saraswathi College of Arts & Science, Theni,Tamil Nadu,(India)

2

Department of Computer Science & Information Technology;

Nadar Saraswathi College of Arts & Science, Theni, Tamil Nadu,(India)

ABSTRACT

Data security is the practice of protecting information: it preserves the availability, privacy and integrity of that information. Access to data stored in computer databases has improved greatly, and more companies store business and personal information on computers than ever before. Much of the stored data is highly confidential and not for public viewing. Cryptography and steganography are well-known and widely used techniques that manipulate information in order to encrypt it or hide its existence. The two techniques share the common goal of protecting the confidentiality, integrity and availability of information from unauthorized access. In existing research, a data hiding system based on image steganography and cryptography was proposed to secure data transfer between source and destination. Its main drawback was that hackers could also send information to the destination, which may confuse the receiver.

In my research I propose the LSB (Least Significant Bit) technique, used to find the image pixel positions, and a pseudorandom permutation method, used to store the data in random order. In addition, I propose a digital watermarking technique to prevent the receiver from accepting unauthorized information from hackers. In the proposed system, these three techniques are combined for secure data transfer. Experimental results will demonstrate the efficiency and security of the proposed work.

Key Words: Cryptography, Steganography, Data Security, Key Generation

I. INTRODUCTION

On the Internet, one of the most important aspects of information technology and communication is the security of information. Cryptography was created as a technique for securing the secrecy of communication, and many different methods have been developed to encrypt and decrypt data in order to keep a message secret. Unfortunately, it is sometimes not enough to keep the contents of a message secret; it may also be necessary to keep the existence of the message secret. The technique used to achieve this is called steganography.

Steganography is the art and science of invisible communication. This is accomplished by hiding information in other information, thus hiding the existence of the communicated information. Almost all digital file formats can be used for steganography; the four main categories of file formats that can be used are shown in Figure 1.


Figure 1: Categories of Steganography

Over the past few years, numerous steganography techniques that embed hidden messages in multimedia objects have been proposed. Many of them hide information or messages in images in such a manner that the alterations made to the image are perceptually indiscernible. Common approaches include:

(i) Least significant bit insertion (LSB)

(ii) Masking and filtering

(iii) Transform techniques

Least significant bit (LSB) insertion is a simple approach to embedding information in an image file. The simplest steganographic techniques embed the bits of the message directly into the least significant bit plane of the cover image in a deterministic sequence. Modulating the least significant bit does not result in a human-perceptible difference because the amplitude of the change is small.
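The sequential LSB insertion just described can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes a grayscale cover image already loaded as a flat list of 8-bit pixel values, assumes the receiver knows the message length, and the function names (`embed_lsb`, `extract_lsb`, `bytes_to_bits`, `bits_to_bytes`) are hypothetical.

```python
def bytes_to_bits(data: bytes) -> list[int]:
    """Expand bytes into bits, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def bits_to_bytes(bits: list[int]) -> bytes:
    """Pack bits (most significant bit first) back into bytes."""
    out = bytearray()
    for start in range(0, len(bits), 8):
        value = 0
        for bit in bits[start:start + 8]:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)


def embed_lsb(pixels: list[int], message: bytes) -> list[int]:
    """Write the message bits, in order, into the LSB of successive pixels."""
    bits = bytes_to_bits(message)
    if len(bits) > len(pixels):
        raise ValueError("cover image too small for message")
    stego = list(pixels)
    for index, bit in enumerate(bits):
        stego[index] = (stego[index] & ~1) | bit  # clear the LSB, then set it to the message bit
    return stego


def extract_lsb(pixels: list[int], length: int) -> bytes:
    """Read `length` bytes back from the LSBs of the first 8 * length pixels."""
    return bits_to_bytes([p & 1 for p in pixels[:8 * length]])


cover = [(37 * i + 11) % 256 for i in range(64)]  # synthetic 8x8 grayscale cover, flattened
stego = embed_lsb(cover, b"Hi")
print(extract_lsb(stego, 2))  # b'Hi'
```

Because only bit 0 of each pixel is rewritten, every pixel value changes by at most 1, which is why the change is not perceptible.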

Masking and filtering techniques analyze the image and embed the information in significant areas, so that the hidden message is more integral to the cover image than if it were simply hidden in the noise level.

Transform techniques embed the message by modulating coefficients in a transform domain, such as the Discrete Cosine Transform (DCT) used in JPEG compression, the Discrete Fourier Transform, or the Wavelet Transform. These methods hide messages in significant areas of the cover image, which makes them more robust to attack. Transformations can be applied over the entire image, to blocks throughout the image, or in other variants.
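To make the idea of modulating transform coefficients concrete, the following sketch hides one bit in an 8×8 block by forcing the parity of a quantized mid-frequency DCT coefficient. This quantization-parity rule is a simple illustrative choice, not a scheme from this paper or from JPEG; the coefficient position `POS`, the step `Q` and all function names are hypothetical, and a pure-Python DCT is used so the example needs no external libraries.

```python
import math

N = 8          # JPEG-style 8x8 blocks
POS = (2, 1)   # hypothetical mid-frequency coefficient that carries the bit
Q = 24         # hypothetical quantization step: larger = more robust, more visible


def _alpha(k):
    # Orthonormal scaling factor of the k-th DCT basis function.
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def _basis(k, n):
    return math.cos((2 * n + 1) * k * math.pi / (2 * N))


def dct2(block):
    """Orthonormal 2-D DCT-II of an N x N block, indexed coeffs[u][v]."""
    return [[_alpha(u) * _alpha(v) * sum(block[x][y] * _basis(u, x) * _basis(v, y)
                                         for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2 (orthonormal 2-D DCT-III)."""
    return [[sum(_alpha(u) * _alpha(v) * coeffs[u][v] * _basis(u, x) * _basis(v, y)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def embed_bit(block, bit):
    """Force the parity of the quantized coefficient at POS to equal `bit`."""
    coeffs = dct2(block)
    u, v = POS
    level = round(coeffs[u][v] / Q)
    if level % 2 != bit:
        level += 1  # step to the adjacent quantization level, which has the right parity
    coeffs[u][v] = level * Q
    # Back to pixels, rounded and clipped to valid 8-bit values.
    return [[min(255, max(0, round(p))) for p in row] for row in idct2(coeffs)]


def extract_bit(block):
    u, v = POS
    return round(dct2(block)[u][v] / Q) % 2


cover = [[100 + 8 * x + 4 * y for y in range(N)] for x in range(N)]  # smooth synthetic block
print([extract_bit(embed_bit(cover, b)) for b in (0, 1)])  # [0, 1]
```

A larger `Q` makes the bit survive pixel rounding (and mild processing) more reliably, at the cost of a larger visible change in the block.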

II. LITERATURE REVIEW

Dipti and Neha developed a technique titled “Proposed System for Data Hiding Using Cryptography and Steganography” [1]. Steganography and cryptography are two popular ways of sending vital information secretly: one hides the existence of the message and the other distorts the message itself. Many cryptography techniques are available, and AES is among the strongest of them. In steganography, there are techniques in different domains, such as the spatial domain and the frequency domain, for hiding a message. A hidden message is very difficult to detect in the frequency domain, which uses transformations such as the DCT, FFT and wavelets. The authors develop a new technique in which cryptography and steganography are used as integrated parts, along with a newly developed enhanced security module. The message is encrypted with the AES algorithm, a part of the message is hidden in the DCT of an image, and the remaining part of the message is used to generate two secret keys, which, according to the authors, makes the system highly secure.

Another method, “Two New Approaches for Secured Image Steganography Using Cryptographic Techniques and Type Conversions” [2], is discussed next. Sujay and Gaurav introduce two new methods in which cryptography and steganography are combined to encrypt the data and to hide the encrypted data in another medium, so that the fact that a message is being sent is concealed. The first method secures an image by converting it into cipher text with the S-DES algorithm using a secret key, and conceals this text in another image by a steganographic method. The second method hides an image in another image by encrypting the image directly with the S-DES algorithm using a key image and concealing the resulting data in another image.
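For readers unfamiliar with S-DES, the sketch below implements the Simplified DES teaching cipher (8-bit blocks, 10-bit key, two Feistel rounds) using the permutation and S-box tables of its standard textbook description. It only illustrates the cipher named in [2]; how [2] derives keys from a key image and conceals the result is not modeled, and the helper names are hypothetical. S-DES is far too small to be secure in practice.

```python
# Simplified DES tables (1-based bit positions, as in the textbook description).
P10 = (3, 5, 2, 7, 4, 10, 1, 9, 8, 6)
P8 = (6, 3, 7, 4, 8, 5, 10, 9)
IP = (2, 6, 3, 1, 4, 8, 5, 7)
IP_INV = (4, 1, 3, 5, 7, 2, 8, 6)
EP = (4, 1, 2, 3, 2, 3, 4, 1)
P4 = (2, 4, 3, 1)
S0 = ((1, 0, 3, 2), (3, 2, 1, 0), (0, 2, 1, 3), (3, 1, 3, 2))
S1 = ((0, 1, 2, 3), (2, 0, 1, 3), (3, 0, 1, 0), (2, 1, 0, 3))


def permute(bits, table):
    return [bits[i - 1] for i in table]


def rotl(half, n):
    return half[n:] + half[:n]  # circular left shift


def subkeys(key10):
    """Derive the 8-bit subkeys K1 and K2 from a 10-bit key."""
    k = permute(key10, P10)
    left, right = rotl(k[:5], 1), rotl(k[5:], 1)
    k1 = permute(left + right, P8)
    left, right = rotl(left, 2), rotl(right, 2)
    k2 = permute(left + right, P8)
    return k1, k2


def sbox(bits4, box):
    row = 2 * bits4[0] + bits4[3]  # outer bits select the row
    col = 2 * bits4[1] + bits4[2]  # inner bits select the column
    value = box[row][col]
    return [value >> 1, value & 1]


def f_k(bits8, subkey):
    """Feistel round: left ^= F(right, subkey); right passes through unchanged."""
    left, right = bits8[:4], bits8[4:]
    mixed = [a ^ b for a, b in zip(permute(right, EP), subkey)]
    f = permute(sbox(mixed[:4], S0) + sbox(mixed[4:], S1), P4)
    return [a ^ b for a, b in zip(left, f)] + right


def sdes(block8, key10, decrypt=False):
    k1, k2 = subkeys(key10)
    if decrypt:
        k1, k2 = k2, k1  # decryption applies the subkeys in reverse order
    x = f_k(permute(block8, IP), k1)
    x = x[4:] + x[:4]    # SW: swap the halves
    return permute(f_k(x, k2), IP_INV)


def to_bits(value, width):
    return [(value >> (width - 1 - i)) & 1 for i in range(width)]


def from_bits(bits):
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


key = to_bits(0b1010000010, 10)
k1, k2 = subkeys(key)
print(format(from_bits(k1), "08b"), format(from_bits(k2), "08b"))  # 10100100 01000011
cipher = [sdes(to_bits(byte, 8), key) for byte in b"Hi"]
print(bytes(from_bits(sdes(c, key, decrypt=True)) for c in cipher))  # b'Hi'
```

Decryption reuses the same rounds with the subkeys swapped, which is why `sdes` needs only a `decrypt` flag.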

Another method, “Image Based Steganography and Cryptography” [6], is described by Domenico and Luca, who integrate cryptography and steganography through image processing. In particular, they present a system able to perform steganography and cryptography at the same time, using images as cover objects for steganography and as keys for cryptography. They show that such a system is an effective steganographic one (by comparison with the well-known F5 algorithm) and also a theoretically unbreakable cryptographic one (by demonstrating its equivalence to the Vernam cipher).
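The Vernam cipher referred to above can be sketched as a byte-wise XOR of the message with a random pad. Its theoretical unbreakability holds only if the pad is truly random, at least as long as the message, kept secret and never reused. This sketch does not reproduce the image-derived keys of [6]; the function name `vernam` is hypothetical.

```python
import secrets


def vernam(data: bytes, pad: bytes) -> bytes:
    """XOR every byte with the pad; the same call encrypts and decrypts."""
    if len(pad) < len(data):
        raise ValueError("pad must be at least as long as the data")
    return bytes(d ^ p for d, p in zip(data, pad))


message = b"meet at noon"
pad = secrets.token_bytes(len(message))  # random, secret, used only once
cipher = vernam(message, pad)
print(vernam(cipher, pad))  # b'meet at noon'
```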

III. PROPOSED WORK

Data hiding in media, including images, video and audio, as well as in data files, is currently of great interest, both commercially, mainly for the protection of copyrighted digital media, and to government and law enforcement in the context of information systems security and covert communications. In this paper, I therefore present a technique for inserting and recovering “hidden” data in image files as well as GIF files. Each color pixel is a combination of RGB values, where each of the R, G and B components consists of 8 bits. If ASCII characters are to be represented within the color pixels, the rightmost bit of each component, called the least significant bit (LSB), can be altered to hide them.
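The RGB LSB embedding described above, combined with the pseudorandom permutation mentioned in the abstract, can be sketched as follows. The assumptions are: pixels are given as a list of `(r, g, b)` tuples, the embedding order is a permutation produced by Python's `random.Random` from a seed shared by sender and receiver, the message length is sent separately, and all function names are hypothetical. `random.Random` is not cryptographically secure.

```python
import random


def _positions(n_channels, seed):
    """Pseudorandom permutation of component positions, reproducible from the seed."""
    order = list(range(n_channels))
    random.Random(seed).shuffle(order)
    return order


def embed_text(pixels, text, seed):
    """Hide ASCII `text` in the LSBs of the R, G, B components of `pixels`."""
    channels = [c for pixel in pixels for c in pixel]   # flatten to R, G, B, R, G, B, ...
    data = text.encode("ascii")                          # raises for non-ASCII text
    bits = [(byte >> s) & 1 for byte in data for s in range(7, -1, -1)]
    if len(bits) > len(channels):
        raise ValueError("image too small for message")
    for bit, pos in zip(bits, _positions(len(channels), seed)):
        channels[pos] = (channels[pos] & ~1) | bit       # replace only the LSB
    return [tuple(channels[i:i + 3]) for i in range(0, len(channels), 3)]


def extract_text(pixels, length, seed):
    """Recover `length` ASCII characters using the same seed."""
    channels = [c for pixel in pixels for c in pixel]
    order = _positions(len(channels), seed)[:8 * length]
    bits = [channels[pos] & 1 for pos in order]
    data = bytes(int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits), 8))
    return data.decode("ascii")


cover = [((7 * i) % 256, (13 * i) % 256, (29 * i) % 256) for i in range(32)]  # 32 RGB pixels
stego = embed_text(cover, "key", seed=2015)
print(extract_text(stego, 3, seed=2015))  # key
```

Scattering the bits over pseudorandom positions means an observer without the seed does not know which components carry the message.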

3.1 Key Stream Generation

To encrypt the message, we use a randomly generated key stream, and encryption is performed byte by byte to obtain the cipher text.

The key stream is generated at both the encryption and the decryption site.

For encryption, a secret seed is used to generate the key stream, which is then applied to the content. To generate the same key stream at the decryption site, the seed must be delivered there through a secret channel. Once the seed is received, it is used to regenerate the key stream, which is then applied to the cipher text for decryption:

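$$C_i = E(M_i, K_i) = (M_i + K_i) \bmod 255, \qquad i = 0, 1, \ldots, L-1$$

where $M$ is the message, $K$ is the randomly generated key stream, $M_i$ and $K_i$ are their $i$-th bytes, and $L$ is the length of the message in bytes.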
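A minimal sketch of this key-stream cipher, assuming the shared seed drives Python's `random.Random` (an illustrative, non-cryptographic stand-in for whatever generator the system uses; function names are hypothetical). Decryption computes $M_i = (C_i - K_i) \bmod 255$. With modulus 255, byte values 0 to 254 are recovered exactly, which covers ASCII text, but a byte equal to 255 would decrypt to 0; the sketch therefore rejects it, and a modulus of 256 would be needed for arbitrary binary data.

```python
import random

MOD = 255  # modulus used in the encryption equation


def keystream(seed, length):
    """Key stream K derived from the shared secret seed.

    random.Random is an illustrative stand-in, NOT a cryptographically secure generator.
    """
    rng = random.Random(seed)
    return [rng.randrange(MOD) for _ in range(length)]


def encrypt(message: bytes, seed) -> bytes:
    if 255 in message:
        raise ValueError("byte value 255 cannot be recovered with modulus 255")
    k = keystream(seed, len(message))
    return bytes((m + ki) % MOD for m, ki in zip(message, k))  # C_i = (M_i + K_i) mod 255


def decrypt(cipher: bytes, seed) -> bytes:
    k = keystream(seed, len(cipher))
    return bytes((c - ki) % MOD for c, ki in zip(cipher, k))   # M_i = (C_i - K_i) mod 255


c = encrypt(b"HELLO", seed=42)
print(decrypt(c, seed=42))  # b'HELLO'
```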

3.2 Watermarking Algorithm

3.2.1 SS (Spread Spectrum)

We propose a spread spectrum (SS) watermarking scheme. The embedding process first generates the watermark signal from the watermark information bits, the chip rate and a pseudo-noise (PN) sequence. The watermark information bits $b = \{b_i\}$, where $b_i \in \{1, -1\}$, are spread by the chip rate $cr$, which gives

$$a_j = b_i, \qquad i \cdot cr \le j < (i+1) \cdot cr$$

The watermark signal $W = \{w_j\}$ is then

$$w_j = a_j \, p_j, \qquad p_j \in \{1, -1\}$$

where $p_j$ is the PN sequence. The generated watermark signal is added to the encrypted signal $C$ to give the watermarked signal

$$CW = C + W, \qquad cw_j = c_j + w_j$$
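The embedding equations above, together with the non-blind detection mentioned in the conclusion, can be sketched in Python. The PN sequence is drawn from `random.Random` with a seed shared by embedder and detector (an assumption), the encrypted signal is a plain list of integers, and function names are hypothetical. Because non-blind detection subtracts the original $C$, each block of chips correlates to exactly $cr \cdot b_i$, so the sign recovers $b_i$.

```python
import random


def spread(bits, cr):
    """Chip-rate spreading: a_j = b_i for i*cr <= j < (i+1)*cr."""
    return [b for b in bits for _ in range(cr)]


def pn_sequence(seed, length):
    """Hypothetical PN generator: +/-1 values from a seeded random.Random."""
    rng = random.Random(seed)
    return [rng.choice((1, -1)) for _ in range(length)]


def embed_ss(c, bits, cr, seed):
    """Return CW with cw_j = c_j + w_j, where w_j = a_j * p_j."""
    a = spread(bits, cr)
    if len(a) > len(c):
        raise ValueError("signal too short for the watermark")
    p = pn_sequence(seed, len(a))
    w = [aj * pj for aj, pj in zip(a, p)]
    return [cj + wj for cj, wj in zip(c, w)] + list(c[len(w):])


def detect_non_blind(cw, c, n_bits, cr, seed):
    """Correlate (cw - c) with the PN sequence over each block of cr chips."""
    p = pn_sequence(seed, n_bits * cr)
    bits = []
    for i in range(n_bits):
        corr = sum((cw[j] - c[j]) * p[j] for j in range(i * cr, (i + 1) * cr))
        bits.append(1 if corr > 0 else -1)
    return bits


c = [17, 200, 3, 54, 99, 120, 7, 250, 31, 64, 128, 5]  # toy encrypted samples
cw = embed_ss(c, [1, -1, 1], cr=4, seed=7)
print(detect_non_blind(cw, c, n_bits=3, cr=4, seed=7))  # [1, -1, 1]
```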


IV. SAMPLE SCREEN SHOTS

Send the Message

Receive the Message

Encrypt & Validation Code

Verification of Encryption Key & Validation Code

Decrypt (Watermarking with Text)

V. CONCLUSION

I propose a novel technique to embed a robust watermark in JPEG2000-compressed, encrypted images using three different existing watermarking schemes. The algorithm is simple to implement because it operates directly in the compressed-encrypted domain, i.e., it requires neither decryption nor partial decompression of the content.


The scheme also preserves the confidentiality of the content, because the embedding is done on encrypted data. The homomorphic property of the cryptosystem is exploited, which allows the watermark to be detected after decryption and the image quality to be controlled as well. Detection is carried out in either the compressed or the decompressed domain; in the decompressed domain, non-blind detection is used. I analyze the relation between payload capacity and image quality (in terms of PSNR and SSIM) for different resolutions. Experimental results show that the higher resolutions carry a higher payload capacity without much effect on quality, whereas the middle resolutions carry less capacity and suffer more quality degradation than is caused by watermarking the higher resolutions.
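The homomorphic property and the PSNR measure mentioned above can be illustrated with the additive cipher of Section 3.1: adding a watermark to the cipher text and then decrypting yields $(M + W) \bmod 255$, so the watermark survives decryption, and $\mathrm{PSNR} = 10 \log_{10}(255^2 / \mathrm{MSE})$ quantifies the distortion. This sketch works on a synthetic list of pixel values with a ±1 watermark; it does not model JPEG2000 compression or SSIM, and the generator, seeds and names are assumptions.

```python
import math
import random

MOD = 255  # modulus of the additive cipher in Section 3.1


def keystream(seed, length):
    rng = random.Random(seed)  # illustrative, not cryptographically secure
    return [rng.randrange(MOD) for _ in range(length)]


def psnr(original, distorted, peak=255):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    mse = sum((a - b) ** 2 for a, b in zip(original, distorted)) / len(original)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


pixels = [(37 * i + 11) % 200 + 20 for i in range(64)]   # synthetic image, values 20..219
k = keystream(seed=1, length=len(pixels))
cipher = [(m + ki) % MOD for m, ki in zip(pixels, k)]     # C = (M + K) mod 255

rng_w = random.Random(2)
w = [rng_w.choice((1, -1)) for _ in pixels]               # +/-1 watermark
marked = [(c + wj) % MOD for c, wj in zip(cipher, w)]     # embed in the encrypted domain

decrypted = [(c - ki) % MOD for c, ki in zip(marked, k)]  # decrypt with the same key stream
print(decrypted == [(m + wj) % MOD for m, wj in zip(pixels, w)])  # True: decryption gives M + W
print(round(psnr(pixels, decrypted), 2))                  # 48.13
```

With every pixel shifted by exactly ±1, the MSE is 1 and the PSNR is $10 \log_{10} 65025 \approx 48.13$ dB.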

REFERENCES

[1]. Dipti, K. S. and Neha, B. 2010. Proposed System for Data Hiding Using Cryptography and Steganography.

International Journal of Computer Applications. 8(9), pp. 7-10. Retrieved 14th August, 2012

[2]. Sujay, N. and Gaurav, P. 2010. Two New Approaches for Secured Image Steganography Using

Cryptographic Techniques and Type Conversions. Signal & Image Processing: An International Journal

(SIPIJ), 1(2), pp 60-73.

[3]. Domenico, B. and Luca, L. year. Image Based Steganography and Cryptography.

[4]. Jonathan Cummins, Patrick Diskin, Samuel and Robert Parlett, “Steganography and Digital Watermarking”, 2004.

[5]. Clara Cruz Ramos, Rogelio Reyes Reyes, Mariko Nakano Miyatake and Héctor Manuel Pérez Meana, “Watermarking-Based Image Authentication System in the Discrete Wavelet Transform Domain”, IntechOpen.

[6]. Domenico, B. and Luca, L. year. Image Based Steganography and Cryptography.

[7]. Niels, P. and Peter, H. 2003. Hide and Seek: An Introduction to Steganography. IEEE Computer Society, IEEE Security and Privacy, pp. 32-44.
