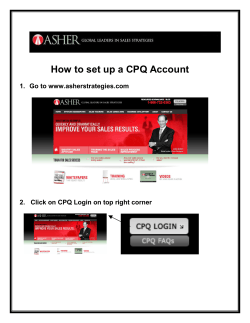

An Accurate Comparison of One-Step and Two