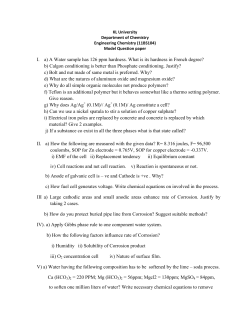

Improved Cost Control with Digital Oil Field Chemistry

John-Arne Stokkan, Schlumberger; Bengt Ellingsen, Schlumberger; Tor Sigve Taksdal, Schlumberger; Hector Manuel Escalona Gonzalez, Schlumberger

Tekna Oil Field Chemistry Symposium 2015, 22-25 March 2015, Geilo, Norway

Abstract

Traditional data management and reporting within oil field chemistry and flow assurance have been driven by somewhat conservative thinking. Although digital solutions have been a topic for years, the oil field chemistry domain remains conservative in this regard. It is highly focused on reporting chemical usage and dosage, analysis trends and financial parameters, and much less on exploring the large potential in understanding data and data dependencies. An analogy is a medical check-up that includes more than a blood pressure reading and a stethoscope: there are multiple indicators that in isolation may not tell the whole story, but when combined give a better view of the health status and of whether intervention is required before a problem arises.

From this perspective the oil field can be viewed as a living organism with veins, pulse and organs, each dependent on the others, where one defect can impact the flow further down the line. Overlooking indicators because of cost saving or a lack of management strategy can prove costly, as issues are often not captured in time to make a proper decision. It is all about data quality, amount and frequency. A single data point is rarely information; trends and the ability to capture changes are essential. The cost of investing in proper data acquisition, management and visualization will in most cases be small compared with the value of reduced intervention and extended facility lifetime. Knowledge is the key, and because experts are always scarce, capturing best practices is equally essential for continuous improvement.

We present a novel approach using software suites well known for production data management, simulation, real-time visualization and dashboard information. A production data management system (PDMS) is extended into a production chemical data management system (PCDMS) and used as a hub for data management, simulation and surveillance. We have analyzed the requirements, available features and possibilities for a future digital oil field solution with SCADA, real-time visualization and performance intelligence (PI) dashboards that functions as a decision support system (DSS), focusing on the information required to manage operational changes. This approach, with automation, reduces the need for costly interventions and keeps the cost of operation under control, allowing better decisions based on a combination of key performance indicators at the operational, tactical and strategic levels.


Introduction to digital data management and automated processes

Digital oil field, asset management and decision support

In the last decade there has been an increasing focus on digital oilfield solutions. High-speed communications now link production facilities with centralized operations support centers. Major oil companies are investing strategically in their digital oilfield initiatives: “integrated operations”, “field of the future”, “remote operations”, “smart fields”, “I-fields” and “field monitoring”.

Digital Oil Field is in this sense regarded as the cross-domain combination of People, Processes, Technology and Information through the entire production system, in a way that optimizes the use of resources and increases the efficiency of the overall system. The aim is to minimize the repetitive tasks performed by humans, in particular those that do not generate value. The value is materialized by enabling this process in an integrated and automated digital oilfield solution or decision support system (14).

The ability to provide performance metrics at the right time through the entire production system is

fundamental to be able to optimize cost and maximize the value of a producing field. The technology

available today gives the engineer the data required to monitor production and flow assurance

parameters, assuming that deviation from a performance parameter will be detected by monitoring the

received data, and that a good decision for improvement and optimization will be based on this data.

The base foundation for the Decision Support System architecture (1) is built with two fundamental

components:

1) A Data Integration Platform (Figure 1) that performs various data management tasks:

- Automated data capture, validation and sharing
- Data quality improvements using built-in processing tools
- Data integrity and traceability
- A scalable data model and functionality
- A broad range of reporting and graphing options

Figure 1. Data integration platform

2) A Production Surveillance and Engineering Desktop (Figure 2) that:

- Provides the required set of tools for data analysis and modeling
- Allows the analysis process to be done in an efficient way
- Feeds continuously from the data integration platform
- Enables faster and more reliable decisions

Figure 2. Production Surveillance and Engineering Desktop

Production chemistry and flow assurance parameters and measurements

Production chemistry and flow assurance are complex operations that depend on many parameters and on a thorough understanding of operations and production facilities. We know that changes in operational and physical parameters have an impact on flow assurance. In many cases the effect is not observed directly, but as a related effect occurring further upstream or downstream from the point of observation. Understanding operational and flow parameters is the key to understanding and predicting causes and effects. This understanding is very much data driven and depends on the ability to transform data into information, which provides the foundation for decisions and actions.

Production chemistry and flow assurance go a step further than production and operational management, as it is necessary to understand fluid properties and how they change as conditions vary over time. This knowledge starts with understanding reservoir fluid properties and how they are affected not only by changes in PVT properties, but also by mixing of fluids, geochemical reactions and the use of chemicals. A change in properties that happens in the reservoir or when the fluid enters the well may not become a problem until further changes make the conditions ideal for causing flow restrictions or integrity issues.

Sampling, measurements and cost

The knowledge about fluid behavior and the ability to monitor changes are restricted by the amount and quality of data. Brown fields may lack baselines for fluid composition, and in green fields too the number and type of samples (e.g. downhole pressurized samples) is a cost issue that is often neglected in order to keep within budget. Sampling involves labor cost, equipment cost (especially for pressurized sampling), access to wells and analysis cost. The result can be data of questionable quality that is difficult to process into information for decision support. The question is then: what is the impact on data-driven decisions when good quality data is not present? Does saving cost at one end lead to increased cost at the other when we cannot base decisions on reliable information? Although it does not always lead to a severe impact such as a scaled-up well or a hydrate blockage, the risk certainly increases with unreliable or incomplete information. Experience has shown that operators hold different views on the need to perform a risk analysis of the potential cost of minimizing data collection as a cost reduction measure.

Operational parameters

Fluids may have a composition that puts them at risk of flow assurance issues such as scale, corrosion, asphaltenes, wax, hydrates, naphthenates, etc. However, it is when operational parameters change, for example through changes in PVT conditions or facility design, that the fluid may cause a problem. This could be in the near-well area, well completion, production tubing, subsea line, riser, separation, water treatment, oil export etc. We can verify dependencies between operational parameters and fluid composition, e.g. between temperature and wax crystals (wax appearance temperature and wax dissolution temperature), and thus indirectly monitor the conditions for wax precipitation. Operational parameters therefore impact fluid behavior and properties during flow, and when combined with fluid parameters they become key performance indicators (KPIs) that can be monitored individually or in scorecards.

Key performance indicators (KPI) and scorecards

In short, a key performance indicator is a measure of how well we are doing compared to a benchmark. KPIs are found at multiple levels and can express a trend or a status. KPIs are defined from the question ‘What do we want to know?’ This implies that we need to know the question, and thus need to understand our system and the chemistry and physics behind the flow assurance problems that can arise in a production facility. Moreover, the parameter and its value need to be recognized and acknowledged. As already discussed, there is a cost associated with sampling, analysis and monitoring. Do we need all parameters? There is a saying:

“A value only has a value if its value is valued”

(Bryan Dyson, former CEO of Coca Cola)

So, what does it mean? It could mean that something has no impact and can therefore be disregarded, or that even a high-risk KPI may be overlooked if its impact or meaning is not understood. Returning to the question ‘What do we want to know?’, we can coarsely divide our KPIs into categories:


Figure 3. A two-level KPI hierarchy, typical for a chemical supply contract. The performance KPI (parent) is measured by each of the child KPIs. Each child KPI has a value and a score reflecting its importance or desired contribution to the performance. The parent is then a result KPI of its children in a scorecard.

Figure 3 shows a simple two-level scorecard where the parent KPI is a result of its children and their individual scores. The term Performance Intelligence (PI) is related to Business Intelligence (BI), which gives a measure of how well we are doing. Business intelligence is used in financial data analysis, although the real objective is to determine the performance, hence Performance Intelligence. KPIs stretch through a hierarchy of parent and child KPIs rolled up into a few but descriptive KPIs. An example of performance indicator contributors is shown in Figure 4.

Figure 4. Several contributors to a performance KPI, each carrying a value and a score. Each child KPI can in turn have multiple children.
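The roll-up of child KPIs into a parent KPI can be illustrated with a small sketch. The paper does not state the aggregation rule, so the weighted average below, the 0-100 score scale, the status thresholds and the example indicator names are all assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ChildKPI:
    name: str
    score: float   # assumed scale: 0 (worst) to 100 (best)
    weight: float  # assumed relative importance of this child


def parent_score(children):
    """Roll child scores up into a parent score using a weighted average (assumed rule)."""
    total_weight = sum(c.weight for c in children)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(c.score * c.weight for c in children) / total_weight


def status(score, warn=70.0, alert=50.0):
    """Map a score to a dashboard status; the thresholds are hypothetical."""
    if score < alert:
        return "alert"
    if score < warn:
        return "warning"
    return "ok"


# Hypothetical children of a chemical supply performance KPI
children = [
    ChildKPI("chemical delivery on time", 90.0, 2.0),
    ChildKPI("dosage within target", 60.0, 3.0),
    ChildKPI("reporting on schedule", 80.0, 1.0),
]
performance = parent_score(children)
print(f"{performance:.1f} -> {status(performance)}")
```

Because each child can itself be the parent of further children, the same function can be applied level by level to build a deeper hierarchy.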

The intelligence in Performance Intelligence means that “hidden” indicators carry a set of rules that enable smart dashboard visualization. The dashboard lets us focus on the status presented instantly, and action and further data analysis are required only when alerts are showing. Figure 5 shows an example of a surveillance information dashboard.

As we already know, data is not information. A transformation process is required to reach that label (12):

- Large amounts of data need to be treated carefully from the beginning.
- Automated and manual QC has to detect meter or gauge failures and typing errors.
- Merging and aggregation rules have to be defined appropriately and be transparent to the engineer.
- The origin and traceability of the data (how it has been generated) has to be clear.
- Data that derives from measured data has to be documented.
- The data repository has to be organized and accessible.
- The engineer has to identify the target, preprocess and transform the data, and evaluate the results.

Information can then be obtained and transformed into knowledge.
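As an illustration of the automated QC step in the list above, the following sketch flags two common symptoms: values outside a plausible range (for example a typing error) and a reading that stays exactly constant (for example a stuck gauge). The function name, the limits and the run length are hypothetical choices, not rules taken from the paper.

```python
def qc_flags(values, low, high, flatline_run=5):
    """Return one flag per reading: 'out_of_range', 'flatline' or 'ok'.

    low/high are assumed plausibility limits; flatline_run is the assumed
    number of identical consecutive readings that suggests a stuck gauge.
    """
    flags = []
    run = 1
    for i, value in enumerate(values):
        # Count how many consecutive readings have been exactly equal
        if i > 0 and value == values[i - 1]:
            run += 1
        else:
            run = 1
        if value < low or value > high:
            flags.append("out_of_range")
        elif run >= flatline_run:
            flags.append("flatline")
        else:
            flags.append("ok")
    return flags


# Hypothetical pressure readings in bar; 1030.0 looks like a typing error
readings = [10.1, 10.3, 1030.0, 10.2, 10.2, 10.2]
flags = qc_flags(readings, low=0.0, high=100.0, flatline_run=3)
print(flags)
```

Flagged readings would still need manual review; the sketch only narrows down where the engineer should look.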

To illustrate this: At the start of the day, the production engineer has two concerns: whether the wells

or production facilities are performing as expected and the reason in case they are not. But the actual

value of production is not enough by itself, it needs to be compared against a benchmark such as

planned or target value, to know how much the actual value deviates from it. The answer needs then to

be classified and ranked based on certain constraints.
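The comparison against a benchmark, followed by classification and ranking, can be sketched as below. The well names, rates and threshold values are hypothetical, and the percentage deviation is only one possible way to express the gap between actual and target values.

```python
def deviation_pct(actual, target):
    """Deviation of the actual value from the target, in percent of target."""
    if target == 0:
        raise ValueError("target must be non-zero")
    return 100.0 * (actual - target) / target


def classify(dev_pct, warn=-5.0, alert=-15.0):
    """Classify a deviation; the threshold values are assumptions."""
    if dev_pct <= alert:
        return "alert"
    if dev_pct <= warn:
        return "warning"
    return "ok"


# Hypothetical (actual, target) production per well, in the same rate unit
wells = {
    "W-1": (950.0, 1000.0),
    "W-2": (800.0, 1000.0),
    "W-3": (1020.0, 1000.0),
}

# Rank the wells so that the largest shortfall comes first
ranked = sorted(
    ((name, deviation_pct(actual, target)) for name, (actual, target) in wells.items()),
    key=lambda item: item[1],
)
for name, dev in ranked:
    print(name, f"{dev:+.1f}%", classify(dev))
```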

The generation of proper Key Performance Indicators (KPIs) is also very important to accurately

represent the system in all its levels (reservoir, wells, facilities) and to provide insight into the possible

causes of deviation from specified targets.

The objectives (3) (9) behind surveillance are:

- Detection of opportunities. Integration of operational data and events, QC and QA.
- Corrective alternatives. Analysis of problems and selection of possible corrective actions.
- Selection of candidates. Candidate ranking and economic analysis support.

As opportunities are executed, performance needs to be measured to assess the decision and provide

feedback into the surveillance process for continuous improvement and knowledge management.


Figure 5. Sample Dashboard for Operational Surveillance for Meter Data Quality

Data management and automation

The digital oil field also includes automation of data collection where it’s possible. This is getting more

focus where investments can be justified by cost reduction, safety requirements or added value through

increased performance or sales. Using SCADA systems data can be captured and transported to

databases where data is organized, quality checked and stored. Linking other data analysis or simulation

software enables data analysis and scenarios to run on fresh data and even visualized with real-time

data.

In certain situations, in particular when there are many wells to look after in the production system, it is not uncommon for a production engineer to spend three quarters of the working day on routine tasks of data cleansing, aggregation, calculation and visualization, leaving only a few hours for real analysis and decision making. To add to the challenge, the process is not standardized and depends on individual preference or work practice.

To overcome these challenges, the decision support solution should be built in accordance with the following targets:

- Maximize the time available for value-adding activities in the analysis life cycle by minimizing the time spent on data preparation and processing.
- Provide the required range of functional capabilities to perform studies and analysis, from knowledge-driven to data-driven methodologies, and streamline the process.
- Enable big-picture awareness, which is often lost by working in silos with little collaboration or by constraining the inputs and outputs from other domains.
- Enable key performance indicators that allow comparison of planned vs actual performance and give information to improve current models as well as the interpretation of results.

To achieve better control over our asset and operation we need integration between databases and applications. It has become more common to talk about integrated software platforms that communicate across applications; Figure 6 shows an example of software platform integration and the relationship between applications for data analysis, simulation and visualization. In a rapidly changing industry, applications need to be highly configurable to meet new requirements.

Figure 6. With Digital Oil Fields and Asset Management data capture & historians, simulation models, data analysis, decision

workflows and visualization are all linked. Automating data collection is only a part of optimizing field surveillance, linking data

to simulation models allows real-time and performance data to be visualized with predictions and scenario models.


Asset Data Management and Production Data Management Systems (PDMS)

When implementing the Digital Oilfield Decision Support System, Data Management tasks, Analysis and Reporting have been noted to improve to a greater extent than other areas of process execution, such as Standardization, Integration and Automation. Typical improvements range from 30% to 90% in the overall workflow (9).

The life of equipment such as compressors and pumps can also be extended by monitoring their operational parameters and changing operational settings to keep them running optimally, with all required information readily available in the data repository (11).

By making use of the readily available information, the analysis of Events and their consequences, for

example production shortfalls, becomes straightforward and can be visualized easily through Business

Intelligence tools (2):

Figure 7. Shortfall Analysis with Volume lost vs main causes (Pareto Chart)

One of the critical benefits of implementing field data management is a large increase in reporting efficiency (~70%). Creating both pre-defined and user-driven reports is a task that, if done manually, takes a considerable amount of the engineer's time (1) (6).


Figure 8. Pre-defined reports can be generated automatically and sent to specific recipients.

The provision of official hydrocarbon volumes to the financial department, partners and government, enabled through the Production Allocation process (Figure 9), allows for a better distribution of costs and understanding of the asset, and shows the impact of operational activities more effectively. When value has been tracked and shown financially, activities such as workovers or remediation programs can be justified more easily (3).


Figure 9. Allocation Network where fiscal measured production adjusts reference production at the facility and well level.

Other benefits include improved Data Ownership and responsibility, cross-discipline data sharing and

security.

As shown in Figure 10, the added value is identified as the added production resulting from shortening the time from when a particular event is detected (T1), to the time when analysis takes place (T2), and finally to the time the action is taken in the field (T3). T1 can be reduced to a minimum with automation and data quality improvements, while T2 can be employed more effectively by the focused engineer. T3 is more dependent on the organization's processes and response.


Figure 10. Added Value - From Event detection to Operational Action

Tying it all together into dashboards (Figure 11) demonstrates how information is visualized and used at different levels of the organization (operational, tactical and strategic) to support the decision-making process (3)(9).


Figure 11. A visual portal with easy access from a web browser, directed at three different organizational levels.


Value through Production chemical data management systems (PCDMS)

Adding value and optimizing cost

The objectives of setting up systems for data management and reporting are:

- Getting control over your asset with information for making decisions
- Reducing the time from data collection to transformation into information, enabling quick response
- Cost control by optimizing chemical treatments and supply
- Added value from cost control and optimized treatments

When evaluating cost related to chemical usage and treatments, it has often been measured as money spent on chemicals. A common remedy has been to reduce the use of chemicals. This is short-sighted and most likely only postpones problems. However, it is usually a result of missing information, which leads us to the question of whether we have enough data. We have established that data is not information, and we can also say that incomplete, missing or low-quality data gives incomplete information, making decisions at best educated guesses. It is not only incomplete data that may lead to misleading information, however. The same is true when the value of the data or the data dependencies are not understood by those who process data into information. Operational and technical knowledge is essential.

Let us look at some examples. We know the factors contributing to corrosion (13). These are child indicators that each have a value, a target and a score and that contribute to the parent corrosion KPI. The contribution (score) of each depends on availability, quality, frequency, reliability, impact and sensitivity. Figure 12 shows an example of a corrosion KPI and its contributing indicators.

Figure 12. Indicators / parameters that form the basis for a corrosion KPI. Each child indicator has a value, target and score / weight that says something about how it impacts corrosion.

We can do the same for calcium carbonate scale as illustrated in Figure 13 with the factors contributing

to scale as indicators.


Figure 13. Carbonate scale KPI illustration with child indicators that affect the final scale KPI. Each child indicator has a value, target and score/weight.

Comparing the child indicators for the corrosion and carbonate scale KPIs, we observe common indicators. The difference is how their behavior impacts the result, or parent KPI. In each case they will have different targets.

Thus, when setting the score for each parameter/indicator we need to evaluate its impact on the result (parent KPI). For example, production downtime or equipment failure is an indicator that something is wrong and can be used in evaluating treatment performance, e.g. by measuring the reduction in the number of downtimes and failures over a time period. In a surveillance dashboard we would like to see early warnings that enable us to act and change operational parameters or treatment strategy before a more severe indicator such as downtime or failure occurs.

We will here provide some example scenarios for surveillance monitoring and performance intelligence

based on projects we are working on to demonstrate the information found in data and how to use

them to add value and control cost.

Production Chemical eManagement and automated data collection

When we talk about improved data collection we do need to talk about investments in hardware. The benefit and profitability will however differ from field to field. A cost-benefit analysis is required case-by-case and should be a part of any field revision aimed at improvements. We would look at requirements such as how critical the availability of data within a short time frame is. This depends on how sensitive the production is to changes. Some production facilities have few issues, and performance monitoring using historical data is sufficient. Other fields have more critical issues requiring constant surveillance to avoid costly intervention from flow assurance or integrity problems.


Let’s look at some examples. In case of corrosion surveillance we start by investigating the parameters /

indicators (13) that yield the corrosion KPI.

| Indicator | Measured | Data collection method | Possible collection improvements |
|---|---|---|---|
| Iron, Fe | Water analysis | Sampling | Iron sensitive ion electrode |
| Chloride, Cl | Water analysis | Sampling | Chloride sensitive ion electrode |
| Bicarbonate, HCO3 | Water analysis | Sampling | |
| H2S | PVT analysis | Sampling; field measure | Increase sampling frequency. Online H2S measurement |
| CO2 | PVT analysis | Sampling; field measure | Increase sampling frequency. Online CO2 measurement |
| pH | Water analysis | Sampling or modelled | Online pH measurement in well stream |
| Corrosion rate, modelling | Simulation models | | |
| SRB | Microbiology survey | Field sample kits and incubation; side stream rigs with biostuds | Online biostuds, rapid in-field analysis. Future automatic field measurements |
| Pressure | Operational data | Pressure gauges; simulation | Data availability through database connections |
| Temperature | Operational data | Temperature gauges; simulation | Data availability through database connections |
| Oil | Operational data | Flow meters; allocation | Data availability through database connections |
| Water | Operational data | Flow meters; allocation | Data availability through database connections |
| Water cut | Operational data | Calculation | Data availability through database connections |
| Well downtime from corrosion | Operational data | Operational | Data availability through database connections |
| Equipment failures from corrosion | Operational data | Operational | Data availability through database connections |
| Corrosion rate | Corrosion coupon | Collecting corrosion coupons for laboratory measurements | Corrosion probes with Wifi or mobile network for real-time data capture |
| Treatment volumes | Treatment | Manual tank measurements | Automatic tank measurements. Flow meters with mobile data collection |

The table above shows the most common indicators that, when combined, yield a measure of corrosion. Each of the parameters has a target model and a score/weight. The target models are somewhat universal, as we know which indicators should be low and which should be on target or as high as possible. The score/weight is very much field dependent. Data quality, in terms of reliability and frequency, is a factor when setting the score. Some parameters are not even possible to measure.


The following table also gives an example of common data collection methods. Let us review some

typical field equipment that improves data collection from a digital oil field perspective (15).

| Measurement | Investments | Cost | Benefit |
|---|---|---|---|
| Ion electrodes with data capturing | Probes, data collection network, SCADA. Software license | Capex. Reduced Opex | Quick access to data. Rapidly capture changes to fluids. Benefits systems where time is essential when setting KPI alerts. |
| Flow meters on chemical injection with data capturing* | Flow meters, data collection network, SCADA. Software license | Capex. Reduced Opex. Reduced cost of intervention | Accurate measurements. Combined with dosage calculation and alert KPIs, captures critical under/overdosing. |
| Two-way communication dashboards* | Remote operated valves. Software license | Capex. Reduced cost of intervention and / or hardware failure | Enables quick reaction to surveillance dashboard alerts on critical treatment regimes. Automated response based on dosage requirements reduces the risk of a wrong rate. |
| Corrosion probes with data capturing | Probes, data collection network, SCADA. Software license | Capex. Reduced Opex (manpower). Reduced cost of intervention and / or hardware failure | Corrosion rates are regarded as a critical parameter. Automating data collection with a real-time surveillance dashboard enables quick response to deviation, avoiding costly intervention. |
| Database direct connection | Communication network / open firewall | - | With existing historians the access to data should not be underestimated. Trends, prediction and real-time calculation depend on access to data stored in other resources. In other words; go away Excel…** |
| Mobile devices data capture | Mobile device. Software license | Capex. Reduced Opex | In fields where automated data collection is not a solution due to cost or other reasons, equipping field engineers with mobile devices may be a solution. With Wifi/GSM/3G/4G etc. it is possible to capture data in the field, set validations and upload to the database. Combining this with e.g. barcodes improves data validation. |

*By capturing flow data and reliable production data it is possible to set up automatic adjustment of injection rates based on the dosage requirement and a calculated rate. Using dashboards with two-way communication, the rate can be operated from the dashboard or automatically based on pre-set criteria, avoiding both under- and overdosing of chemicals.

**Microsoft Excel is a great tool for personal data analysis and calculations. However, it should not be used as a database or as a source for other systems because it lacks security, validation and version control.
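The dosage-based rate calculation mentioned in the first footnote can be sketched as follows. The sketch assumes a volume-based dosage in ppm of the treated fluid stream and a ±10% tolerance band; the function names, units and numbers are illustrative and are not taken from the paper.

```python
def required_injection_rate_l_per_h(dosage_ppm_v, treated_flow_m3_per_h):
    """Chemical rate in L/h for a volume-based dosage in ppm (assumed basis).

    1 m3 = 1000 L and 1 ppm = 1e-6, so rate = ppm * m3/h * 1000 / 1e6.
    """
    return dosage_ppm_v * treated_flow_m3_per_h * 1000.0 / 1_000_000.0


def dosing_status(measured_l_per_h, required_l_per_h, tolerance=0.10):
    """Compare the metered injection rate with the required rate.

    The tolerance band is a hypothetical pre-set criterion.
    """
    if required_l_per_h <= 0:
        raise ValueError("required rate must be positive")
    ratio = measured_l_per_h / required_l_per_h
    if ratio < 1.0 - tolerance:
        return "underdosing"
    if ratio > 1.0 + tolerance:
        return "overdosing"
    return "on target"


# Hypothetical case: 50 ppm target dosage on a 20 m3/h treated stream
required = required_injection_rate_l_per_h(50.0, 20.0)
print(required, dosing_status(0.8, required))
```

In a two-way dashboard, a status other than "on target" could trigger either an alert or an automatic set-point change, depending on the pre-set criteria.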

Experience has shown that when data management is accompanied by improved workflows and automated collection, the time spent on collecting and managing data, as well as on reporting, is reduced. Automated measurements also mean quicker access to information for making decisions that impact cost and earnings.


Scenario 1 – Corrosion surveillance

In this scenario we analyze workflows for monitoring corrosion in wells in a land field that is regularly treated by shot trucks. The current situation:

- The treatments are managed in a database that provides the drivers with a daily schedule of wells to treat.
- The truck drivers record chemical treatments on paper and report back to the supervisor.
- During treatment the truck drivers take water samples that are sent to the laboratory for analysis.
- To save cost the operator only requests iron analysis.
- Corrosion is only measured by iron loss, calculated as mass production from the available water production. No iron baseline is available (old field).
- Production data is only available from well tests and is measured on the headers only 3 days a week.
- An Access database was used for reporting, with intricate queries that meant it took up to 3 days to print reports for about 700 wells.
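The iron loss used as the corrosion measure in this scenario can be illustrated with a short calculation. The paper does not give the formula, so the sketch assumes the usual mass balance: iron concentration in the produced water multiplied by the water production rate. The function name and the sample values are hypothetical.

```python
def iron_loss_kg_per_day(iron_mg_per_l, water_m3_per_day):
    """Iron mass produced with the water, in kg/day (assumed mass balance).

    1 mg/L equals 1 g/m3, so mg/L * m3/day gives g/day; divide by 1000 for kg/day.
    """
    if iron_mg_per_l < 0 or water_m3_per_day < 0:
        raise ValueError("inputs must be non-negative")
    return iron_mg_per_l * water_m3_per_day / 1000.0


# Hypothetical well: 25 mg/L dissolved iron and 40 m3/day water production
loss = iron_loss_kg_per_day(25.0, 40.0)
print(f"{loss:.2f} kg/day")
```

Because water production is only available from well tests a few days a week in this field, the calculated mass inherits the uncertainty of the allocated water rate.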

An analysis of the workflows and reporting showed that savings could be made by:

- Improving data collection
- Changing data collection and reporting software
- Adding more corrosion indicators to improve the accuracy of corrosion surveillance
- Obtaining better production data

By replacing the Access database with a platform with structured data in SQL and with dedicated standard data capture screens and data loading capabilities, the reporting time was reduced from 3 days to about 15 minutes for 5 print reports on 700 wells with complex calculations. An estimated 4-5% of the time spent on reporting per month was saved and made available for other tasks.

The next step was to enable web-based interactive dashboards for corrosion surveillance. The current data frequency and quality did not require a real-time dashboard, so the dashboard is set to refresh once a day to keep processing resources at a minimum. Figure 14 shows one of the dashboards for corrosion surveillance, where the corrosion KPI equals the iron loss. The dashboard enables both the account team and the operational team to check status at any time, and reduces the time spent identifying wells at risk by as much as 80% by using KPI status to visualize any wells at risk instead of flipping through 700 paper copies.


Figure 14. Example of a corrosion dashboard combining status with trends. The KPI table on the right shows status for the wells in a selected field. Any alert or warning is a result of the combined child indicators. Selected indicators for trending are placed in the middle for the selected time period to capture any deviation linked to other events. The trends are filtered by the well list and date filter. The presented dashboard shows average iron analysis (count) and iron loss (mass) with sum production and sum chemical usage for a header (collection of wells). Individual wells can be investigated using the filter.

The next phases in improving corrosion surveillance for this field include:

Action

Improvement

Time period

Estimated cost-benefit

Equip truck drivers with

mobile devises

Remove paper copy

schedule.

Electronically log treatments

with upload to database with

data validation

Short term

Extend analysis

Add pH measurements

More frequent H2S and CO2

measurements

Using frequent corrosion

modelling

Short term

Set automatic pull of

production and operational

data for use in calculations

and reporting

Short time

Remove printing cost

20% less time spent on

schedule

60% less time spent on QA

treatment data and transfer

from paper to electronic format

More accurate corrosion KPI

and information about the well

status

More accurate corrosion KPI

and information about the well

status.

Better understanding of hotspots in the wells.

With allocated and more

complete data gives more

accurate calculations and

information. Saving time used

in Excel up to 50% saved time.

More frequent modelling

Direct access to

operational database


Short term

Barcode on wells

Access for laboratory

Flow meters on trucks

Truck and stock tank

automatic levels

Apply same principles for

topside treatments


Ensuring truck drivers use

the correct treatment

program. Ensuring data entry

on correct well

Enable laboratory personnel

to enter results directly

Short-mid term

10-20% reduction in QC and

data validation for supervisors.

Short term

Enabling recording accurate

volume directly to mobile

device without manual data

entry

Enabling alarms and alerts

on tank levels. Initiate

product order based on level

measurement.

Accurate and real-time

volume usage per tank and

well.

Mid-long term

(Capex

investment)

Improved control and

management of all chemical

treatment in performance

and surveillance dashboards

Short-mid term

Reduce time for analysis

reporting to status update by

about 40%

Further reduce data

management time with up to

20%. Automate data QC. More

accurate volume reporting.

10% time reduction in level

monitoring and chemical

ordering. Eliminating shutdown from no available

chemicals. Accurate volume

usage for continuous injection

and dosage control to eliminate

under- or overdosing.

Reduction in time for data

management, reporting and

interpreting by 20-50%

Long term

(Capex

investment)

Scenario 2 – categorizing wells by scale risk

Scale is a critical problem for many wells, and removing deposits once they have been allowed to form can require high-cost intervention. In the worst case, if scale has been allowed to deposit slowly, or if we exceed the number of scale dissolver treatments a well can tolerate, the completion may need to be pulled at high cost. Scale surveillance is therefore a low-cost option to avoid high-cost intervention.

In the following scenario the objective is to capture the scale indicators and categorize wells based on

their scale risk. We capture:

Ion analysis – Ba, Sr, Ca, Mg, bicarbonate, pH, suspended solids

Operational data – oil, water, water cut, CO2, H2S, temperature and pressure

Operational failures and downtime

Simulation data – pH, scale risk (scaling index, delta scaling index)

Treatment data – chemical dosage in ppm (calculated as injection rate / water production × 10^6, with both rates in the same units)
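The dosage calculation in the last item can be sketched as below. This is a minimal illustration, assuming the injection rate is logged in litres per day and the water production in cubic metres per day; the function name and units are hypothetical and not taken from Avocet PROCHEM.

```python
def dosage_ppm(injection_rate_l_per_day: float, water_rate_m3_per_day: float) -> float:
    """Chemical dosage in ppm (volume/volume) relative to produced water.

    Assumed units: injection rate in litres/day, water rate in m3/day.
    """
    if water_rate_m3_per_day <= 0:
        # No water production: a dosage cannot be computed for this period.
        raise ValueError("water rate must be positive to compute a dosage")
    water_l_per_day = water_rate_m3_per_day * 1000.0  # m3 -> litres
    return injection_rate_l_per_day / water_l_per_day * 1e6


# Hypothetical example: 20 l/day of inhibitor into 500 m3/day of water.
example = dosage_ppm(injection_rate_l_per_day=20.0, water_rate_m3_per_day=500.0)
print(round(example, 1))
```

Raising an error for zero water production, rather than returning zero, keeps periods without production from being silently mixed into dosage averages.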

We set our criteria for the risk categories as well as a target value and score for each indicator. Our scale category KPI then becomes:

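$$
\mathrm{KPI\ category\ (Scale)} = \int_{\mathrm{Target}}^{\mathrm{Target}} \left\{ [\mathrm{Ca^{2+}}],\ [\mathrm{Ba^{2+}}],\ [\mathrm{Sr^{2+}}],\ [\mathrm{Mg^{2+}}],\ \mathrm{pH}_{model},\ [\mathrm{HCO_3^-}]_{tuned},\ \Delta Q_{water},\ \Delta Q_{oil},\ \Delta WC,\ SI,\ \Delta SI,\ \mathit{downtime},\ D_{chemical} \right\}
$$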

Where [X^n] is the concentration of species X, Qwater is water production (loss in water), Qoil is oil production (loss in oil production), WC is water cut (change), SI is the scaling index, delta SI is the change in scaling index and Dchemical is the average chemical dosage.

Each of the child indicators has a score, thus the KPI category (Scale) = ∑ I × S (where I is a child indicator and S is its score).

The final scale KPI is the category defined by certain criteria for this field that classifies; red = high risk,

amber = moderate risk, green = low risk.
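The roll-up ∑ I × S and the traffic-light classification can be sketched as follows. This is only an illustration: it assumes each child indicator I is reduced to 1 when its target is missed and 0 otherwise, and the targets, scores and category thresholds are hypothetical values rather than the field-specific criteria used by the authors.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    name: str
    value: float
    target: float              # hypothetical target for this field
    score: int                 # hypothetical score from impact and likelihood
    higher_is_worse: bool = True

    def breached(self) -> int:
        """Return 1 when the indicator misses its target, else 0."""
        if self.higher_is_worse:
            return int(self.value > self.target)
        return int(self.value < self.target)


def scale_kpi(indicators) -> int:
    """Sum of I x S over the child indicators."""
    return sum(ind.breached() * ind.score for ind in indicators)


def category(total: int, amber_at: int = 3, red_at: int = 6) -> str:
    """Map the summed score to a risk category (thresholds are assumptions)."""
    if total >= red_at:
        return "red"
    if total >= amber_at:
        return "amber"
    return "green"


well = [
    Indicator("Ba2+ (mg/l)", 120.0, 100.0, 3),
    Indicator("scaling index", 1.4, 1.0, 4),
    Indicator("chemical dosage (ppm)", 25.0, 20.0, 2, higher_is_worse=False),
    Indicator("downtime (h/month)", 2.0, 8.0, 3),
]
total = scale_kpi(well)
print(total, category(total))
```

In this example the barium and scaling index indicators miss their targets, so the well scores 3 + 4 = 7 and falls in the red category.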

As this is not a surveillance dashboard, we do not use sensitivity as a score criterion but look at impact and likelihood as criteria for the score. Using historical data such as analysis, production and operational downtime, combined with scale simulation models, we can categorize wells into areas where we need to focus. The model is updated at certain time intervals, e.g. every 3, 6 or 12 months, to verify whether the category landscape has changed. Using GPS coordinates for the wells, we can visualize the categories on a map as seen in Figure 15.

Categorizing wells like this enables focus on only those that require attention and may reduce treatment requirements by anything between 10% and 40%, or even more. The major investment is data collection and setting up criteria and dashboard. As the dashboard is connected to live data in Avocet PROCHEM, we only need to refresh the dashboard to obtain new data, assuming frequent data collection.


Figure 15. Example of scale tendency dashboards. The map shows wells based on GPS coordinates, color labeled by scale risk category. The underlying scale indicators check indicator values against targets and roll up to a result scale KPI that identifies wells by risk category. The map also functions as a filter for the other dashboard elements, allowing data drill down. The top right shows the first drill down: a well completion diagram where the markings are KPI indicators for each location where there is a measurement or calculation. These KPI markings are both child and parent KPIs, i.e. they contain a hierarchy underneath with more data. The bottom right highlights scale risk from the simulation model in a PT diagram where the marker size is the scaling index value.

The same visualization can be used for surveillance by changing categories to result KPI, using real-time data capture or a shorter time period, and including indicator sensitivity in the score. Data frequency and quality then become more important, and a cost-benefit analysis for improved data collection should be initiated. A surveillance dashboard will show wells requiring immediate attention, thus reducing further risk of deferred production or downtime.


Scenario 3 – Process diagram alert with details

In many cases we would like to monitor a specific system. With the visualization tools available we are

able to combine process diagrams with KPI status and alert. In this scenario we have picked a water

treatment facility process where corrosion is being closely watched.

The available parameters for monitoring are listed in Figure 16 and the corrosion KPI is a combination of

these with set target and score. An image of the process is added and hot spots created for each

monitoring point showing the KPI results. Each hot spot filters a spark line chart and a last result grid

table enabling data drill down.

Figure 16. Example of dashboards with monitoring points. Each monitoring point is a KPI consisting of child KPIs that are weighted based on impact, likelihood and sensitivity to yield a result KPI. Hovering over a KPI shows more information in a text box and filters the spark lines and last measured numerical values to the right. The spark lines give more detail about the parameters, providing additional information to determine the cause of the alert.

Such visualizations greatly reduce the time spent analyzing data, as they pull data directly from the database and give a status at any time. When the hot spots are green no action is required. When one or more change color, the child indicators can be expanded to determine which one is missing its target and to set appropriate action. The risk lies in data quality and data frequency, which need to be ensured by sufficient data collection workflows.


Scenario 4 – identify top chemical spend wells

Within production chemistry there is a high focus on chemical spend and chemical usage. This focus is motivated by cost, product (oil) quality and environmental concerns related to water discharge.

The challenge lies in how to measure chemical usage and how to break it down into areas of interest. We can measure chemical volumes by:

| Measurement | Description and challenges |
|---|---|
| Chemical delivery | Gives total volume with chemical spend over a larger time period. Useful for chemical usage and spend KPI field totals for quarterly and yearly review. Also useful for comparison against actual usage. |
| Tank volumes | Tank volumes may be measured either manually (tank strapping) or with a flotation device that measures levels. Manual measurement may not be accurate and the frequency of data may be low. A chemical day tank often feeds multiple injection points (wells, topside) and this feed may not be completely known, thus only totals by product are measured. |
| Injection rate | Some installations have flow meters measuring the flow for each recipient. When chemical distribution cabinets are used for wells it creates additional uncertainty, as the distribution mostly depends on well pressure. Using allocation models, e.g. with Avocet, would enable tracking of chemical flow to recipients, through the process and to discharge (16). Parameters such as pressure and oil/water distribution can be used in such calculations. |

Let us also look at some chemical KPIs. Volumes or chemical spend alone may tell only part of the story. Cost-effectiveness is performance vs. cost. But how do we measure performance? We can track dosage, but it does not tell us how effective the chemical is. We need more to evaluate:

| KPI | Description |
|---|---|
| Chemical volume | Consumed volume as total product or per treated system |
| Chemical volume per production | Tracking how much volume is required per system and per production provides information about the cost-effectiveness of the treatment |
| Chemical spend | Reporting on chemical spend provides a total cost for chemical use that can be broken down by treatment and treated system |
| Chemical spend per production | Tracking spend per barrel of oil or water provides the cost-effectiveness of chemicals in terms of production and lifetime |
| Chemical spend per oil price | Shows how large the chemical spend is relative to the product (oil) sale |
| Chemical spend per production and operation | The real cost-effectiveness is not only measured in production uptime, as this depends on other factors. Including more factors such as increased equipment lifetime, reduced intervention etc. will provide the real cost-effectiveness of a chemical |
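Several of the ratio KPIs listed above reduce to simple arithmetic once volume, spend and production are available for the same period. The sketch below is illustrative only; the function name, dictionary keys and input figures are hypothetical, and the last ratio is one possible reading of "chemical spend per oil price" (spend as a share of oil sales value).

```python
def chemical_kpis(volume_l, spend_usd, oil_bbl, water_bbl, oil_price_usd_per_bbl):
    """Return a few ratio KPIs for one well or system over one period."""
    produced_bbl = oil_bbl + water_bbl
    return {
        # litres of chemical per barrel of total produced fluid
        "volume_per_bbl_produced": volume_l / produced_bbl,
        # chemical spend per barrel of oil and per barrel of water
        "spend_per_bbl_oil": spend_usd / oil_bbl,
        "spend_per_bbl_water": spend_usd / water_bbl,
        # chemical spend as a fraction of the oil sales value
        "spend_share_of_oil_sales": spend_usd / (oil_bbl * oil_price_usd_per_bbl),
    }


# Hypothetical monthly figures for one well.
kpis = chemical_kpis(
    volume_l=1200.0,
    spend_usd=6000.0,
    oil_bbl=30000.0,
    water_bbl=90000.0,
    oil_price_usd_per_bbl=50.0,
)
for name, value in kpis.items():
    print(f"{name}: {value:.4f}")
```

The combined "production and operation" KPI is left out here because it needs uptime, intervention and equipment lifetime data that a simple ratio cannot capture.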


Tracking chemical spend depends on the availability of data. As shown above, the data quality depends on how and where it is measured. In some cases we can compensate for not having flow meters available by using allocation, which is well known from production allocation. Avocet includes an allocation module that, when used for chemicals, enables tracking of chemicals not only to recipients but also throughout the process stream, adding calculations with known parameters for chemical distribution.
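To illustrate the idea of allocation, the sketch below splits a measured tank total between wells in proportion to a weight per well. This is a simple pro-rata scheme chosen for illustration, not the algorithm of the Avocet allocation module; the weights (here assumed to be water rates) and well names are hypothetical, and in practice the weights could be derived from pressure or oil/water distribution.

```python
def allocate(total_volume: float, weights: dict) -> dict:
    """Split a measured total between recipients in proportion to their weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one recipient needs a positive weight")
    return {name: total_volume * w / total_weight for name, w in weights.items()}


# Hypothetical case: 300 litres left the day tank, three wells share the feed.
water_rates = {"W-01": 100.0, "W-02": 250.0, "W-03": 150.0}
allocated = allocate(300.0, water_rates)
print(allocated)
```

The allocated volumes always sum to the measured total, so field totals stay consistent with tank measurements even when the per-well split is an estimate.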

Figure 17 is a dashboard example for simple volume usage by well, which answers the question “what is my highest consumer of chemicals?” We can use bar diagrams or, more effectively, the decomposition map, where a bigger square means a higher consumer, filtered to the top 20 wells by usage.

Figure 17. Chemical usage and spend dashboard example. The left side shows volume and spend for each well with color codes for product. The middle shows a decomposition map for the top 20 wells by chemical volume; larger size means higher volume. The production trend is shown under the decomposition map. The dashboard is filtered by the date filter and shows data within the selected time period. To the right some sums are displayed for the selected period.

More advanced dashboards can expand the decomposition map to show e.g.

$$
\mathrm{Usage\ KPI} = \frac{\text{chemical spend (usd)}}{\text{produced oil (bbl)}} \times \text{Improvement factor}
$$

Where the improvement factor contains uptime as a direct result of chemical usage, with downtime not related to chemicals subtracted, and increased equipment lifetime.
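The usage KPI and the ranking behind a "top wells" view can be sketched as follows. The improvement factor is taken as a given input, since how it is derived from uptime, downtime and lifetime is not specified in detail; the well names and figures are hypothetical.

```python
def usage_kpi(spend_usd: float, oil_bbl: float, improvement_factor: float = 1.0) -> float:
    """Chemical spend per barrel of produced oil, scaled by an improvement factor."""
    return spend_usd / oil_bbl * improvement_factor


# Hypothetical wells: (chemical spend in usd, produced oil in bbl, improvement factor)
wells = {
    "W-01": (12000.0, 40000.0, 0.9),
    "W-02": (5000.0, 10000.0, 1.0),
    "W-03": (8000.0, 80000.0, 1.1),
}

# Rank wells from highest to lowest usage KPI and keep the top N.
top_n = 2
ranked = sorted(wells, key=lambda w: usage_kpi(*wells[w]), reverse=True)
top_wells = ranked[:top_n]
print(top_wells)
```

Ranking by the KPI rather than by raw spend puts a small producer with high spend per barrel (W-02 here) ahead of a larger well with higher absolute spend.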


Scenario 5 – improving logistics

When measuring production chemicals, the focus is often on chemical spend or performance in the field. However, chemical supply also carries additional cost, for example related to chemical delivery and tank management. Ensuring delivery on time is one factor; optimizing tank management is another.

The next scenario looks at tank deliveries and circulation of transport tanks on permanent hire. The first objective is to identify how much time each tank spends at a location and increase turnaround to reduce the cost of hire. The second is to identify inactive tanks that could be taken off permanent hire and instead be hired ad hoc.

Figure 18 shows a timeline for each tank based on status and location. By setting a target value (max time) for the time spent at each location we identify, by amber or red color, where we need to initiate actions. The result is a reduction in unnecessary cost related to idle or stuck transport tanks.
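The max-time check per location can be sketched as below. The location names, day limits and the 80% amber threshold are assumptions made for the example, not values from the paper.

```python
from datetime import date

# Hypothetical maximum number of days a tank may stay at each location.
MAX_DAYS = {"supply base": 5, "offshore": 14, "vessel": 3}


def tank_status(location: str, arrived: date, today: date, warn_fraction: float = 0.8) -> str:
    """Return red when the limit is exceeded, amber when it is close, else green."""
    days = (today - arrived).days
    limit = MAX_DAYS[location]
    if days > limit:
        return "red"
    if days >= warn_fraction * limit:
        return "amber"
    return "green"


today = date(2015, 3, 22)
tanks = [
    ("T-101", "offshore", date(2015, 3, 1)),      # 21 days on location
    ("T-102", "supply base", date(2015, 3, 17)),  # 5 days on location
    ("T-103", "vessel", date(2015, 3, 21)),       # 1 day on location
]
statuses = {tank_id: tank_status(loc, arrived, today) for tank_id, loc, arrived in tanks}
print(statuses)
```

A tank that stays red at the same location over several refreshes is a candidate for the second objective, being taken off permanent hire.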

Figure 18. Tank tracking using location and KPI status. The objective is to reduce time at certain locations, improving turnaround and thus cost. In this scenario tanks are on permanent hire, meaning that taking idle tanks off hire reduces hiring expenses.

The next question is about the data collection process. How do we know where the transport tanks are? How often do we get the information? Usually we are at the mercy of staff at different locations to record and send information, and such data collection often has weaknesses. Delayed data results in delayed information, and action may no longer be possible because the tank may already have left the location, although days overdue. There are different measures to improve data collection. Barcode scanners are one solution; however, routines must be established to ensure data is immediately loaded to the database so it becomes available in the dashboard.

RFID and GPS are other systems for tracking. With these linked to satellites or a network with SCADA, data appear in the database at the frequency set for updating. The investment is larger than for e.g. barcodes; however, when the reduction in manpower is combined with quicker turnaround and lower hire cost, the investment may be worth the money.

Figure 19 illustrates a scenario with GPS tracking where the location is seen on a map as KPI spots

changing colors from counting days at each location combined with a hire cost overview.

Figure 19. Tank location and cost tracking example. By equipping the transport tanks with GPS their location can be visualized on a map. The location points are also set up as KPIs checking against time and cost criteria. When a tank should have arrived and is overdue, the location marking changes color. This example also shows live cost tracking based on time out.


Data Quality

Challenges in data collection processes

One of the biggest challenges in the data collection process in today’s environment can be explained

with the recent creation of the Big Data concept.

Big Data is characterized by the three V’s: Volume, Velocity and Variety. With the technology available today, the measured data from one well can easily reach more than 100,000 data points per day. High-frequency data needs to be processed at a speed similar to that at which it is generated, and the sources and formats of the data are multiple and dispersed.

The amount of automatically generated data can overwhelm analysis. It requires aggregation “to report one number” from the hundreds of thousands of values that are collected in the field.
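As a minimal sketch of such aggregation, the example below reduces timestamped readings to one mean value per day. The daily mean is only one possible choice of aggregate (a sum, last value or median may suit other parameters better), and the readings are made-up values.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def daily_mean(readings):
    """Aggregate (timestamp, value) pairs into one mean value per calendar day."""
    buckets = defaultdict(list)
    for timestamp, value in readings:
        buckets[timestamp.date()].append(value)
    return {day: mean(values) for day, values in sorted(buckets.items())}


# Hypothetical high-frequency readings, heavily thinned for the example.
readings = [
    (datetime(2015, 3, 1, 0, 0), 10.0),
    (datetime(2015, 3, 1, 12, 0), 14.0),
    (datetime(2015, 3, 2, 6, 0), 9.0),
]
daily = daily_mean(readings)
for day, value in daily.items():
    print(day, value)
```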

On the other hand, some information still needs to be recorded manually. This is where data collection and transmission can result in errors, especially when transcribing to a spreadsheet or custom solution. Excel is widely used, often as a quick mechanism for collection, storage and computations. Companies get swamped by an “Excel storm”: thousands of spreadsheets that make collating and rolling up information difficult. It is also very difficult to track changes.

Figure 20. Swamped by an "Excel storm"...


The impact of incomplete data

Often we come across incomplete data. We have seen data without dates, or analyses recorded with the analysis date rather than the sample date. Any time we capture data, either through reading or sampling, we are taking a snapshot of the system that is valid for the specific time of the snapshot. The production system changes character over time due to changes in operational parameters or even slugging. Certain indicators are therefore only valid within a time slot, and the larger the time slot, the larger the uncertainty.

As Figure 21 illustrates, gaps in our data make calculations challenging, for instance mass production from ion analysis and water production, or chemical dosage from injection rate and water or oil production. We can fill gaps by interpolating, but do we know if this is correct? What if there was no production? This affects calculations and totals. What is the total production over the time period? What is the total chemical usage?

[Timeline chart: days 1 to 30 on the horizontal axis, with rows for Oil, Water, Injection rate and Analysis]

Figure 21. Illustration of a timeline with gaps. If we set the time interval to be days and we have gaps we may run into trouble

with calculations.

Data collection vs. cost

The investment in infrastructure and measuring devices needed to make the data available must be taken into account. In some cases manual measurements are cost-effective, or the only possible approach when automatic data measurement and infrastructure are not available. But the value of information is obtained only after data is processed. Although more available data is desirable, the aim is to balance the number of measurements against the value of the information the data generates, to minimize cost.

It is important to understand the data and its value by performing cost-benefit analysis by application

and system when looking at possible improvements. Data management programs should include

analysis of each parameter including value for monitoring and the added value to understanding

processes and changes in processes.

Automatic data measurement vs. human measurement vs. cost

The “link each piece with each other” build mechanism often leads to what is called “organized chaos”,

where companies get comfortable for a period of time. Sooner or later they realize that it becomes

difficult to sustain these operations for a longer time, especially in changing or growing operational

environments or when subject to regulatory compliance and audits.

The proposed solution aims to reduce the complex “data gymnastics” and build simple, yet effective mechanisms for E&P companies to collect, compute and distribute production information. The core is the cost-benefit analysis, which should be updated regularly as technology changes, both with new equipment and with changes in cost. Not all parameters provide enough value to justify Capex investment, while some critical parameters do. This differs from system to system.


Ensuring data quality and data organizing

Some of the methods to increase data quality can be embedded in the Data Management platform, in

order to trigger warnings or errors when a specific quality rule has been breached. Missing data can be

carried forward (repeating last available value), interpolated (if available as sporadic data) or calculated

based on additional parameters (e.g. through an equation or data-driven model).
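The first two gap-filling options, carry forward and interpolation, can be sketched as below for a daily series where missing days are marked with None. The series is hypothetical, and the linear interpolation shown is the simplest variant; it only fills gaps that have a known value on both sides.

```python
def carry_forward(series):
    """Fill each gap by repeating the last available value."""
    filled, last = [], None
    for value in series:
        if value is not None:
            last = value
        filled.append(last)
    return filled


def interpolate(series):
    """Fill gaps linearly between known neighbours; edge gaps are left as None."""
    filled = list(series)
    known = [i for i, value in enumerate(series) if value is not None]
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left)
    return filled


# Hypothetical daily water production with missing days.
water = [100.0, None, None, 70.0, None]
cf = carry_forward(water)
li = interpolate(water)
print(cf, sum(cf))
print(li, sum(v for v in li if v is not None))
```

The two methods give different period totals for the same raw data, which is why the chosen rule should be recorded together with the filled values.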

Software applications with custom-made screens for data entry usually ease data management and reduce the risk of wrong data in the wrong place. Figure 22 shows an example of such a screen. In combination with rule-based data validation, as shown in Figure 24, the user is notified when adding an unexpected value.

Figure 22. Using dedicated screens with business logic for capturing data reduces the risk of errors in manual data management. Using a software platform with easily configurable screens means data management can be adapted to most workflows and business scenarios.

Often we will need to connect to other data sources such as databases, SCADA and even Excel. Automating data collection or simplifying data import with rules and easy data mapping also reduces time spent on data transformation and manual data management. Again, coupled with data validation, we have tools to review and validate data before it becomes available to data analysts and in dashboards and reports.


Figure 23. The ability to write and use data loaders eases the data management process and combined with data validation

ensures correct data in the correct container.

Specific business rules are required to handle invalid data (e.g. through a numerical limit validation) as

shown in the following example:

Figure 24. Enhanced Data Quality through validation rules
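A numerical limit validation of the kind referred to above could be sketched as follows. The field names and limits are hypothetical examples, not the rule set of any particular platform.

```python
# Hypothetical validation rules: field name -> (lower limit, upper limit).
RULES = {
    "pH": (0.0, 14.0),
    "water_cut_pct": (0.0, 100.0),
    "iron_mg_per_l": (0.0, 500.0),
}


def validate(record: dict) -> list:
    """Return a list of messages for missing or out-of-range values."""
    messages = []
    for field, (low, high) in RULES.items():
        value = record.get(field)
        if value is None:
            messages.append(f"{field}: missing value")
        elif not low <= value <= high:
            messages.append(f"{field}: {value} outside {low}-{high}")
    return messages


issues = validate({"pH": 15.2, "water_cut_pct": 45.0})
for message in issues:
    print(message)
```

Returning messages instead of rejecting the record outright lets the data entry screen decide whether a breach is a warning or an error.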


Discussion

The oil field industry transformation

The oil and gas industry is progressing in implementing cost-effective solutions that set the stage for more advanced workflows. With higher water production, increased cost of operation, unconventional oil fields and a falling oil price, the oil field industry will be even more cost-driven. More effective solutions, smarter investments and optimized operations will be the drivers going forward. Cost-benefit analysis will drive the investment in new technology, pushing towards smarter solutions.

There is a huge untapped potential in data analysis, mainly due to “tradition”, where data was pieces of information we collected so that we at least knew something. Data management has entered a new phase in which Big Data, Analytics and Cloud Computing play an interesting role in the general discussion. For a company this translates into the need to ‘jump on the train’ before it leaves. With more complex operations, a higher focus on operational cost and the need to make the right decisions, we need tools for decision support to minimize “educated guesses”. The information we need is found in data, which requires tools that improve data acquisition, data transformation and presentation to support decisions.

When we take a look at oil field production chemistry there has been a progressive development the

last 30 years or so, with many different contract models:

CALL OFF: Simple contract for product supply with limited service following the serious work of

winning the business. Limited management input e.g. monthly off take report. Having been

selected to supply a product we then wait for the orders to roll in! Of course it is never that

simple!

MASTER SERVICE: As Call-Off but will generally cover several products and may include certain

specified services; offshore manpower rates, Tank rental, Pump hire etc. Generally involves a

higher level of input especially supporting field application.

PREFERRED SUPPLIER: Evolved from Master Service Contracts with restricted number of

suppliers covering a single or group of assets.

SOLE SUPPLIER: As Preferred Supplier but one company manages the total supply chain. Includes contractual definition around field support. Contract personnel may be paid for. Will likely include supply of competitive products. May cover supply of atypical items such as utility products, maintenance chemicals and sub-sea control fluids.

CHEMICAL MANAGEMENT: A step further from sole supplier, with a highly developed sole supplier arrangement, a detailed business relationship and a contract team embedded within the client's facilities keeping a high focus on Total Cost of Operation (TCO). This is achieved with a high degree of measured competence and performance targets, and is often based on an open book contract with incentive schemes, with audit and peer reviews as key to long-term, measured success.


DIGITAL OIL FIELD AND ASSET MANAGEMENT: As we have moved into a new century there is more focus on digitalization of data management and reporting. Automation with data management for decision support will drive the industry forward to a more knowledge-based workday. As new data collection equipment becomes smaller and less expensive, it will become a motivator for automation of old fields, including production chemistry and flow assurance. The driver is cost: a smaller investment in equipment and software reduces the need for manpower and, with proper surveillance, reduces the risk of failures or downtime.

Production and asset management has taken steps into the future with the digital oil field, and production chemistry and flow assurance are not far behind, moving from traditional data management into smarter solutions. As production chemistry and flow assurance is one of the most complex areas, only proper data management supported by chemical knowledge can simplify the complexity and translate it into information we can use.

Capex investments in automation. Does it pay off?

In general, investment in automation has been shown to pay off quickly. In one of the references, a single instance of visualizing the right information at the right time paid back the cost of the Decision Support System 10 times over.

The effort to systemize can be summarized with the following benefits:

Efficiency improvement – The organized chaos has gone so far that it is becoming difficult to

operate.

Cost reduction – maintaining either Excel or custom solutions is draining IT resources and a

better solution is required.

Outgrowth – growing operations are exacerbating the organized chaos problem. This is a

derivative of the efficiency improvement problem.

Technology turnover – changes in operating platforms, databases and operating systems require that companies refresh current solutions.

By standardizing systems and data management we achieve:

Global asset management – The effort to get all producing Assets into one platform,

allows companies to leverage the economies of scale.

Regulatory compliance – Subject to growing country laws, listed and unlisted companies

need solutions that can be audited.

The foundation for optimization is to enable the move into other levels of integration, like Model Based Surveillance or Integrated Operations, which require the data management aspect to be covered. Without this foundation, companies are not able to take advantage of the newer technologies that would help to increase production in an optimal way.

Impact on decisions from data management

Proper data management improves decision making by reducing time spent on non-value-added tasks, increasing data quality and integrating across domains in the organization. Decisions can be taken with better support and justification, and the increase in available time helps avoid premature decisions based on poor data.

Using visualizations and dashboards for monitoring and surveillance of assets and for KPI comparisons makes the information more available and increases understanding of what is happening within the asset. This includes the capability to monitor assets and compare their main indicators, for example utilization ratios, loss efficiencies, fuel efficiencies and treatment performance across assets, and to see how these translate into bottom-line benefits. Comparisons allow assets to be ranked, and a focus on targeted actions can result from such analysis. Using KPIs and actual production trends, companies have better visibility of how their production may develop.

References

1. Mariam Abdul Aziz et al.: “Integrated Production Surveillance and Reservoir Management

(IPSRM) – How Petroleum Management Unit (PMU) Combines Data Management and

Petroleum Engineering Desktop Solution to Achieve Production Operations and Surveillance

(POS) Business Objectives”, paper SPE 111343 presented at the SPE Intelligent Energy

Conference and Exhibition held in Amsterdam, The Netherlands, 25-27 February 2008

2. Michael Stundner et al.: “From Data Monitoring to Performance Monitoring”, paper SPE 112221

presented at the SPE Intelligent Energy Conference and Exhibition held in Amsterdam, The

Netherlands, 25-27 February 2008

3. Sandoval, G. et al.: “Production Processes Integration for Large Gas Basin – Burgos Asset”, paper

SPE 128731 presented at the SPE Intelligent Energy Conference and Exhibition held in Utrecht,

The Netherlands, 23-25 March 2010

4. Shiripad Biniwale et al.: “Streamlined Production Workflows and Integrated Data Management: A Leap Forward in Managing CSG Assets”, paper SPE 133831 presented at the SPE Asia Pacific Oil & Gas Conference and Exhibition held in Brisbane, Queensland, Australia, 18-20 October 2010

5. G. Olivares et al.: “Production Monitoring Using Artificial Intelligence, APLT Asset”, paper SPE

149594 presented at the SPE Intelligent Energy International held in Utrecht, The Netherlands,

27-29 March 2012

6. Mark Allen et al.: “Enhancing Production, Reservoir Monitoring, and Joint Venture Development

Decisions with a Production Data Center Solution”, paper SPE 149641 presented at the SPE

Intelligent Energy International held in Utrecht, The Netherlands, 27-29 March 2012

7. Cesar Bravo et al.: “State of the Art of Artificial Intelligence and Predictive Analytics in the E&P

Industry: A Technology Survey”, paper SPE 150314 presented at the SPE Intelligent Energy

International held in Utrecht, The Netherlands, 27-29 March 2012

8. Wokoma, E. et al: “Total Asset Surveillance and Management”, paper SPE 150813 presented at

the Nigeria Annual International Conference and Exhibition held in Abuja, Nigeria, 30 July – 3

August 2011

9. Diaz, G. et al.: “Integration Production Operation Solution applied in Brown Oil Fields – AIATG Asset PEMEX”, paper SPE 157180 presented at the SPE International Production and Operations Conference and Exhibition held in Doha, Qatar, 14-16 May 2012

10. J. Goyes et al.: “A Real Case Study: Well Monitoring System and Integration Data for Loss Production Management, Consorcio Shushufindi”, paper SPE 167494 presented at the SPE Middle East Intelligent Energy Conference and Exhibition held in Dubai, UAE, 28-30 October 2013

11. N.E. Bougherara et al. “Improving ESP Lifetime Performance Evaluation by Deploying an ESP

Tracking and Inventory Management System”, paper SPE 144562 presented at the SPE Asia

Pacific Oil and Gas Conference and Exhibition held in Jakarta, Indonesia, 20-22 September 2011

12. Georg Zangl et al. “Data Mining: Applications in the Petroleum Industry”, Round Oak Publishing

13. Brit Graver et al. “Corrosion Threats – Key Performance Indicators (KPIs) Based on a Combined

Integrity Management and Barrier Concept”, NACE 2716, NACE corrosion 2013

14. Omar O. Al Meshabi et al. “Attitude of Collaboration, Real-Time Decision Making in Operated Asset Management”, SPE 128730, SPE Intelligent Energy Conference and Exhibition held in Utrecht, The Netherlands, 23–25 March 2010

15. Farooq A Khan and Hamad Muhawes, “Real-Time Database Inventory Model, KPI and

Applications for Intelligent Fields”, SPE 160874, SPE Saudi Arabia Section Technical Symposium

and Exhibition held in Al-Khobar, Saudi Arabia, 8–11 April 2012

16. N. Aas, B. Knudsen. “Mass Balance of Production Chemicals”, SPE 74083, SPE International

Conference on Health, Safety and Environment in Oil and Gas Exploration and Production held in

Kuala Lumpur, Malaysia, 20–22 March 2002
