Poster

Sources of Evidence for Automatic Indexing of Political Texts

Mostafa Dehghani, Hosein Azarbonyad, Maarten Marx, and Jaap Kamps

University of Amsterdam

Motivation

Political texts on the Web, documenting laws and policies and the process leading to them, are of key importance to government, industry, and every individual citizen.

Experiments

Data Collection

- Annotated Data: JRC-Acquis
  - from 2002 to 2006
  - 16,824 documents
- Taxonomy: EuroVoc
  - 6,796 hierarchically structured concepts

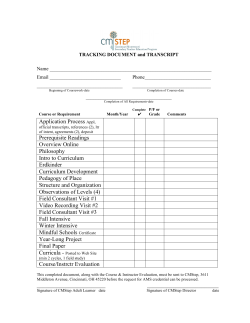

Experiment 1: Performance of LTR

Laws

Questions

Debates

What laws are being made?

What are committees working on?

What are parliaments talking about?

Performance of JEX, best single feature, and LTR methods.

Research Questions:

- What are the different possible sources of evidence for automatic indexing of political text?
- How effective is a learning to rank (LTR) approach integrating a variety of sources of information as features?

| Method | P@5 (%Diff.) | Recall@5 (%Diff.) |
|---|---|---|
| JEX | 0.4353 | 0.4863 |
| BM25-Titles | 0.4798 (10%) | 0.5064 (4%) |
| LTR-all | 0.5206 (20%) | 0.5467 (12%) |

- Exploiting LTR enables us to learn an effective way to combine features from different sources of evidence.
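The two measures reported for Experiment 1 can be illustrated with a minimal sketch. It assumes that each document has a ranked list of candidate descriptor terms and a gold set of assigned descriptors; the descriptor IDs below are made up for illustration and are not taken from JRC-Acquis or EuroVoc.

```python
def precision_at_k(ranked, relevant, k=5):
    """Fraction of the top-k ranked descriptors that are in the gold set."""
    top = ranked[:k]
    return sum(1 for d in top if d in relevant) / k


def recall_at_k(ranked, relevant, k=5):
    """Fraction of the gold descriptors that appear among the top k."""
    if not relevant:
        return 0.0
    top = ranked[:k]
    return sum(1 for d in top if d in relevant) / len(relevant)


# Hypothetical ranking for a single document (IDs are illustrative only).
ranked = ["d12", "d7", "d3", "d44", "d9", "d1"]
gold = {"d7", "d9", "d1", "d50"}

p5 = precision_at_k(ranked, gold)
r5 = recall_at_k(ranked, gold)
```

Collection-level scores such as those in the table would then be averages of these per-document values, assuming the usual macro-averaging over documents.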

- What is the relative importance of each of these sources of information for indexing political text?

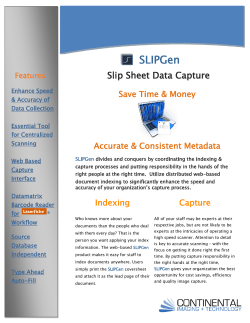

Experiment 2: Feature Analysis

- Can we build a lean-and-mean system that approximates the effectiveness of the large LTR system?

Learning to Rank for Sources of Evidence Combination

- Different sources of evidence for the selection of appropriate indexing terms for political documents:
  - Labeled documents
  - Textual glosses of descriptor terms
  - Thesaurus structure

[Figure: Feature importance: 1) P@5 of individual features, 2) weights in the SVM-Rank model. Series: P@5 and SVMRank Weight; y-axis ticks from 0 to 0.7. The x-axis labels are only partly recoverable and include Popularity, Generality, Ambiguity (with #Children and #Parents annotations), and BM25 similarities between document submodels (title_D, text_D) and descriptor-term submodels (title_C, text_C, desc_C, anc_desc_C).]
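The SVM-Rank weights mentioned in the feature-importance caption can be read as coefficients of a scoring function. As a rough sketch, assuming a linear model, candidate descriptor terms would be ranked by a weighted sum of their feature values. The feature names, weights, and values below are hypothetical and are not the ones learned on the poster.

```python
def rank_descriptors(candidates, weights, k=5):
    """Score each candidate with a linear model and return the top-k names."""
    def score(features):
        # Weighted sum of feature values; unknown features contribute nothing.
        return sum(weights.get(name, 0.0) * value
                   for name, value in features.items())

    ordered = sorted(candidates, key=lambda c: score(candidates[c]), reverse=True)
    return ordered[:k]


# Hypothetical weights and feature values, for illustration only.
weights = {"title_sim": 0.6, "text_sim": 0.3, "popularity": 0.1}
candidates = {
    "fisheries policy": {"title_sim": 0.9, "text_sim": 0.4, "popularity": 0.2},
    "agricultural aid": {"title_sim": 0.1, "text_sim": 0.5, "popularity": 0.9},
    "customs tariff": {"title_sim": 0.0, "text_sim": 0.1, "popularity": 0.3},
}

top = rank_descriptors(candidates, weights, k=2)
```

Under this reading, a feature with a small weight can still change the final order when the other features are close, which is one way a weak single feature could help in combination.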

- Findings:
  - Titles are the most succinct predictor of classes (titles of political documents tend to be directly descriptive of the content).
  - Popularity is not an effective feature by itself, but it improves performance in combination with other features.

- Mapping the indexing problem to a retrieval problem:

- Features reflecting the similarity of descriptor terms and documents:
  - Formal models of documents and descriptor terms:
    $\mathrm{Model}_D = \langle M(\mathrm{title}_D),\ M(\mathrm{text}_D) \rangle$
    $\mathrm{Model}_{DT} = \langle M(\mathrm{title}_{DT}),\ M(\mathrm{text}_{DT}),\ M(\mathrm{gloss}_{DT}),\ M(\mathrm{anc\_gloss}_{DT}) \rangle$
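The two formal models suggest how the similarity features could be enumerated. The sketch below assumes that every document submodel is paired with every descriptor-term submodel and that each pair is scored with the three retrieval measures the poster names (KL-divergence with Dirichlet smoothing, the same with Jelinek-Mercer smoothing, and Okapi BM25). The short measure labels and the feature-name pattern are illustrative choices, not the authors' naming.

```python
from itertools import product

# Submodels taken from the two formal models above.
DOC_SUBMODELS = ["title_D", "text_D"]
DT_SUBMODELS = ["title_DT", "text_DT", "gloss_DT", "anc_gloss_DT"]

# Short labels for the three retrieval measures (labels are assumptions).
MEASURES = ["LM-Dirichlet", "LM-JM", "BM25"]

# One feature per (document submodel, descriptor submodel, measure) triple.
feature_names = [
    f"M({d})-M({t})_{m}"
    for d, t, m in product(DOC_SUBMODELS, DT_SUBMODELS, MEASURES)
]

n_similarity_features = len(feature_names)
```

Under these assumptions the enumeration yields 2 x 4 x 3 similarity features; the actual feature set used in the experiments may differ.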

Experiment 3: Lean and Mean System

- Selected features:
  - Similarity of text submodels
  - Similarity of title submodels
  - Similarity of text and textual glosses of descriptor terms
  - Popularity of descriptor terms

Performance of LTR on all features, and on four selected features

| Method | P@5 (%Diff.) | Recall@5 (%Diff.) |
|---|---|---|
| LTR-all | 0.5206 (-) | 0.5467 (-) |
| LTR-ttgp | 0.5058 (-3%) | 0.5301 (-3%) |


- The selective LTR approach is a computationally attractive alternative to the full LTR-all approach.

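[Method diagram; legible labels: Documents, Query, Title, Text, Description, Similarity, Features, Thesaurus structure.]

- For each combination of document and descriptor-term submodels, three IR measures are employed: a) language modeling similarity based on KL-divergence using Dirichlet smoothing, b) the same run using Jelinek-Mercer smoothing, and c) Okapi BM25.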

- Features reflecting the characteristics of descriptor terms independent of documents:
  - Popularity
  - Generality
  - Ambiguity
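The three similarity measures named on the poster (KL-divergence with Dirichlet smoothing, the same with Jelinek-Mercer smoothing, and Okapi BM25) can be sketched in a few lines. This is a toy illustration under stated assumptions: one submodel is treated as the query and the other as the document, the KL-based score is computed as the rank-equivalent negative cross-entropy, and the parameter values (`mu`, `lam`, `k1`, `b`) and the example texts are arbitrary choices rather than the settings used in the experiments.

```python
import math
from collections import Counter

EPS = 1e-9  # floor for terms unseen in the collection (an assumption)


def dirichlet_prob(w, doc_tf, doc_len, coll_prob, mu=1000.0):
    """P(w | doc) with Dirichlet smoothing."""
    return (doc_tf.get(w, 0) + mu * coll_prob.get(w, EPS)) / (doc_len + mu)


def jm_prob(w, doc_tf, doc_len, coll_prob, lam=0.5):
    """P(w | doc) with Jelinek-Mercer smoothing."""
    ml = doc_tf.get(w, 0) / doc_len if doc_len else 0.0
    return (1 - lam) * ml + lam * coll_prob.get(w, EPS)


def neg_kl_score(query, doc, coll_prob, smooth):
    """Negative cross-entropy of the query model under the smoothed document
    model; it ranks documents in the same order as negative KL-divergence."""
    q_tf, d_tf = Counter(query), Counter(doc)
    return sum((c / len(query)) * math.log(smooth(w, d_tf, len(doc), coll_prob))
               for w, c in q_tf.items())


def bm25_score(query, doc, df, n_docs, avg_len, k1=1.2, b=0.75):
    """Okapi BM25 with a non-negative idf variant."""
    d_tf, score = Counter(doc), 0.0
    for w in set(query):
        tf = d_tf.get(w, 0)
        if tf == 0:
            continue
        idf = math.log(1 + (n_docs - df[w] + 0.5) / (df[w] + 0.5))
        score += idf * tf * (k1 + 1) / (tf + k1 * (1 - b + b * len(doc) / avg_len))
    return score


# Toy "documents" (e.g. descriptor-term texts) and a toy query (e.g. a title).
docs = {
    "fisheries": "common fisheries policy fishing quotas".split(),
    "tariff": "customs tariff import duties".split(),
}
query = "fishing quotas policy".split()

all_tokens = [w for d in docs.values() for w in d]
coll_prob = {w: c / len(all_tokens) for w, c in Counter(all_tokens).items()}
df = Counter(w for d in docs.values() for w in set(d))
avg_len = len(all_tokens) / len(docs)

scores = {
    name: (
        neg_kl_score(query, d, coll_prob, dirichlet_prob),
        neg_kl_score(query, d, coll_prob, jm_prob),
        bm25_score(query, d, df, len(docs), avg_len),
    )
    for name, d in docs.items()
}
```

Each of the three values per pair would become one feature for the ranker; in this toy collection all three measures prefer the text that shares terms with the query.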

ExPoSe: Exploratory Political Search

Conclusion

- Using a learning to rank approach integrating all features has significantly better performance than previous systems.
- The analysis of feature weights reveals the relative importance of various sources of evidence, also giving insight into the underlying classification problem.
- A lean and mean system using only four features (text, title, descriptor glosses, descriptor term popularity) is able to perform at 97% of the large LTR model.
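The 97% figure can be checked against the reported scores of the full and the four-feature models. The short calculation below uses only the numbers from the Experiment 3 results (reading the truncated "all@5" column as Recall@5, which matches the LTR-all value in Experiment 1); the variable names are illustrative.

```python
# Scores as reported for Experiment 3.
results = {
    "P@5": {"LTR-all": 0.5206, "LTR-ttgp": 0.5058},
    "Recall@5": {"LTR-all": 0.5467, "LTR-ttgp": 0.5301},
}

# Percentage of the full model's score retained by the four-feature model.
retained = {
    measure: round(100 * s["LTR-ttgp"] / s["LTR-all"], 1)
    for measure, s in results.items()
}
```

Both ratios come out at roughly 97%, consistent with the reported 3% drop on each measure.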

Funded by NWO (project# 314-99-108)

37th European Conference on Information Retrieval

{dehghani,h.azarbonyad}@uva.nl
