Random walks EPFL - Spring Semester 2014

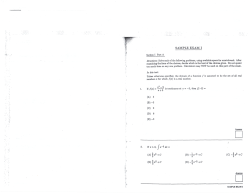

Random walks EPFL - Spring Semester 2014-2015

Solutions 7

1. a) This is a direct computation:

$$(P\phi^{(k)})_j = \sum_{l=0}^{N-1} p_{jl} \exp(2\pi i l k/N) = \ldots = \left( \sum_{m=0}^{N-1} c_m \exp(2\pi i m k/N) \right) \exp(2\pi i j k/N) = \lambda_k \, \phi^{(k)}_j$$

b1) The eigenvalues are

$$\lambda_k = \frac{1}{2} \left( \exp(2\pi i k/N) + \exp(-2\pi i k/N) \right) = \cos(2\pi k/N)$$

b2) Recalling that

$$\sum_{j=0}^{N-1} \exp(2\pi i j k/N) = \begin{cases} N & \text{if } k = 0 \\ 0 & \text{otherwise} \end{cases}$$

we obtain that the eigenvalues are

$$\lambda_k = \frac{1}{N-1} \sum_{j=1}^{N-1} \exp(2\pi i k j/N) = \frac{1}{N-1} \left( \sum_{j=0}^{N-1} \exp(2\pi i k j/N) - 1 \right) = \begin{cases} 1 & \text{if } k = 0 \\ -\frac{1}{N-1} & \text{otherwise} \end{cases}$$

c) Observe that if the spectral gap is determined by the second largest eigenvalue (i.e., the one closest to $+1$), then adding self-loops of weight $\alpha$ to every state can only reduce the spectral gap, as this addition increases all eigenvalues. Adding self-loops can only increase the spectral gap (and therefore speed up the convergence to equilibrium) when the gap is determined by the least eigenvalue (i.e., the one closest to $-1$).

c1) In this case, the second largest eigenvalue is given by $\cos(2\pi/N)$, while the least eigenvalue is given by $\cos(2\pi(N-1)/2N) = \cos(\pi - \pi/N) = -\cos(\pi/N)$. So the spectral gap is $\gamma = 1 - \cos(\pi/N)$. Adding self-loops of weight $\alpha$ changes these eigenvalues to $\alpha + (1-\alpha)\cos(2\pi/N)$ and $\alpha - (1-\alpha)\cos(\pi/N)$ respectively. The weight $\alpha$ realizing the largest spectral gap is therefore the solution of the equation

$$1 - \alpha - (1-\alpha)\cos(2\pi/N) = 1 - |\alpha - (1-\alpha)\cos(\pi/N)| = 1 + \alpha - (1-\alpha)\cos(\pi/N)$$

so

$$\alpha \left( 2 + \cos(\pi/N) - \cos(2\pi/N) \right) = \cos(\pi/N) - \cos(2\pi/N).$$

For $N$ large, the value of $\alpha$ is therefore roughly given by

$$\alpha \approx \frac{1}{2} \left( 1 - \frac{\pi^2}{2N^2} - 1 + \frac{2\pi^2}{N^2} \right) = \frac{3\pi^2}{4N^2}$$

Recall that the spectral gap of the original chain is $\approx \frac{\pi^2}{2N^2}$. With this choice of $\alpha$ it becomes $(1-\alpha)(1 - \cos(2\pi/N)) \approx \frac{2\pi^2}{N^2}$, an increase of approximately $2\alpha$.

c2) For the complete graph, the computation is (much) simpler. The only eigenvalue not equal to $1$ is $-\frac{1}{N-1}$.
If one therefore sets $\alpha = \frac{1}{N}$ (which one can check leads to the transition matrix $p_{ij} = 1/N$ for all $i, j$), this increases the value of the spectral gap from $1 - \frac{1}{N-1}$ to $1$. In this case, the convergence to the uniform distribution is immediate: after one step only, the distribution of the chain is indeed uniform.

2. a) The transition matrix is doubly stochastic, so the stationary distribution is uniform (i.e., $\pi_{(ij)} = \frac{1}{KM} = \frac{1}{N}$ for every $(ij) \in S$). The chain is clearly irreducible (and finite), so also positive-recurrent. It is aperiodic (and therefore ergodic) if and only if either $K$ or $M$ is odd, in which case $\pi$ is also a limiting distribution.

b) First observe that when both $K$ and $M$ are even, then one eigenvalue is equal to $-1$ (take $k = K/2$ and $m = M/2$), so the spectral gap is $0$. In the case where $K$ is odd and $M$ is even, the eigenvalue which is the closest to either $+1$ or $-1$ is

$$\lambda_{\left(\frac{K-1}{2}, \frac{M}{2}\right)} = \frac{1}{2} \left( \cos\left(\frac{\pi(K-1)}{K}\right) - 1 \right) = -\frac{1}{2} \left( \cos\left(\frac{\pi}{K}\right) + 1 \right) \approx -1 + \frac{\pi^2}{4K^2}$$

for $K$ large. We have a symmetric situation for $K$ even and $M$ odd. Finally, when both $K$ and $M$ are odd, the eigenvalues which are candidates for being the closest to either $+1$ or $-1$ are

$$\lambda_{\left(\frac{K-1}{2}, \frac{M-1}{2}\right)} = \frac{1}{2} \left( \cos\left(\frac{\pi(K-1)}{K}\right) + \cos\left(\frac{\pi(M-1)}{M}\right) \right) = -\frac{1}{2} \left( \cos\left(\frac{\pi}{K}\right) + \cos\left(\frac{\pi}{M}\right) \right) \approx -1 + \frac{\pi^2}{4K^2} + \frac{\pi^2}{4M^2}$$

$$\lambda_{(1,0)} = \frac{1}{2} \left( \cos\left(\frac{2\pi}{K}\right) + 1 \right) \approx 1 - \frac{\pi^2}{K^2}$$

$$\lambda_{(0,1)} = \frac{1}{2} \left( 1 + \cos\left(\frac{2\pi}{M}\right) \right) \approx 1 - \frac{\pi^2}{M^2}$$

for $K, M$ large. When $K$ is close to $M$, the first of these three eigenvalues "wins" and determines the spectral gap.

c) In the case where $K = M$ is an odd (and large) number, $N = K^2 = M^2$ and the spectral gap is given approximately by

$$\gamma \approx \frac{\pi^2}{2N}$$

so by the theorem seen in class,

$$\max_{(ij) \in S} \| P^n_{(ij)} - \pi \|_{\mathrm{TV}} \le \frac{\sqrt{N}}{2} \exp(-\gamma n) \approx \frac{1}{2} \exp\left( \frac{\log N}{2} - \frac{n \pi^2}{2N} \right)$$

which falls below $\varepsilon$ for

$$n = \frac{2N}{\pi^2} \left( \frac{\log N}{2} + c \right) \quad \text{and} \quad c = \log\left(\frac{1}{2\varepsilon}\right).$$
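The computations of Exercise 1 c) can be checked numerically. The following is a minimal sketch, assuming the eigenvalues from b1) and b2) and assuming the spectral gap is defined as one minus the largest absolute value of a non-trivial eigenvalue. All function names (`cycle_eigenvalues`, `complete_graph_eigenvalues`, `spectral_gap`, `optimal_alpha_cycle`) are hypothetical helpers introduced for illustration only.

```python
import math


def cycle_eigenvalues(n):
    """Eigenvalues cos(2*pi*k/n), k = 0..n-1, from b1)."""
    return [math.cos(2 * math.pi * k / n) for k in range(n)]


def complete_graph_eigenvalues(n):
    """Eigenvalues from b2): 1 once, -1/(n-1) with multiplicity n-1."""
    return [1.0] + [-1.0 / (n - 1)] * (n - 1)


def spectral_gap(eigs, alpha=0.0):
    """1 - max|lambda| over the non-trivial eigenvalues of alpha*I + (1-alpha)*P."""
    lazy = sorted(alpha + (1 - alpha) * lam for lam in eigs)
    nontrivial = lazy[:-1]  # drop the largest entry, the trivial eigenvalue 1
    return 1 - max(abs(lam) for lam in nontrivial)


def optimal_alpha_cycle(n):
    """Solution of the balance equation in c1); assumes n is odd."""
    c1 = math.cos(math.pi / n)
    c2 = math.cos(2 * math.pi / n)
    return (c1 - c2) / (2 + c1 - c2)


if __name__ == "__main__":
    n = 101  # illustrative odd cycle length
    eigs = cycle_eigenvalues(n)
    alpha = optimal_alpha_cycle(n)
    print("alpha:", alpha, "asymptotic value:", 3 * math.pi**2 / (4 * n**2))
    print("gap before:", spectral_gap(eigs), "gap after:", spectral_gap(eigs, alpha))
    print("complete graph, alpha = 1/n:",
          spectral_gap(complete_graph_eigenvalues(n), 1 / n))
```

For a large odd `n`, the printed value of `alpha` should be close to $3\pi^2/(4N^2)$, and the complete-graph gap with $\alpha = 1/N$ should be $1$ up to floating-point error.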
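The case analysis of Exercise 2 b) and the estimate of 2 c) can also be checked by brute force. This is a sketch only, assuming the eigenvalues are $\lambda_{(k,m)} = \frac{1}{2}(\cos(2\pi k/K) + \cos(2\pi m/M))$ as used above. The names `torus_eigenvalues`, `torus_gap`, `tv_bound` and `steps_for_accuracy` are hypothetical and not part of the original solution.

```python
import math


def torus_eigenvalues(K, M):
    """All K*M eigenvalues (cos(2*pi*k/K) + cos(2*pi*m/M)) / 2."""
    return [
        0.5 * (math.cos(2 * math.pi * k / K) + math.cos(2 * math.pi * m / M))
        for k in range(K)
        for m in range(M)
    ]


def torus_gap(K, M):
    """Spectral gap: 1 - max|lambda| over the non-trivial eigenvalues."""
    eigs = sorted(torus_eigenvalues(K, M))
    return 1 - max(abs(lam) for lam in eigs[:-1])  # last entry is the eigenvalue 1


def tv_bound(K, M, n):
    """Upper bound sqrt(N)/2 * exp(-gamma*n) from 2. c), with N = K*M."""
    return 0.5 * math.sqrt(K * M) * math.exp(-torus_gap(K, M) * n)


def steps_for_accuracy(K, eps):
    """n = (2N/pi^2) * (log(N)/2 + log(1/(2*eps))) for K = M odd, N = K^2."""
    N = K * K
    return 2 * N / math.pi**2 * (0.5 * math.log(N) + math.log(1 / (2 * eps)))


if __name__ == "__main__":
    print("K, M even:", torus_gap(4, 6))
    print("K odd, M even:", torus_gap(5, 4))
    K, eps = 51, 0.01  # illustrative values
    n = steps_for_accuracy(K, eps)
    print("gap:", torus_gap(K, K), "approximation:", math.pi**2 / (2 * K * K))
    print("n:", n, "bound at n:", tv_bound(K, K, n))
```

Because $\gamma \approx \pi^2/(2N)$ is only an asymptotic approximation, the bound evaluated at the computed `n` should be close to `eps` rather than exactly equal to it.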
