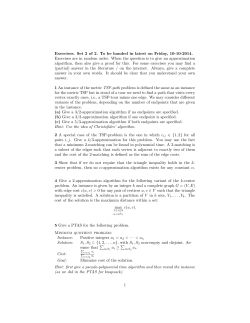

What Works Best When? A Framework for