LIMSI @WMT13

Alexandre Allauzen, Nicolas Pécheux, Quoc Khanh Do, Marco Dinarelli, Thomas Lavergne, Aurélien Max, Hai-Son Le, François Yvon

Univ. Paris Sud and LIMSI-CNRS, Orsay, France

n-code

Highlights

n-code models

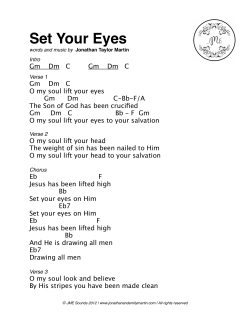

Tuples are bilingual units

!

{French,German,Spanish}↔English shared translation tasks

• n-code (http://ncode.limsi.fr):

org : ....

– Source reordering and n-gram translation models (TMs)

– n-best reranking with SOUL LM and TM

• First participation to the Spanish-English task

S : ....

T : ....

• Preliminary experiments with source pre-ordering

• Tighter integration of continuous space language models

à

recevoir

le

s̅8: à

s̅9: recevoir

s̅10: le

prix nobel de la paix

s̅11: nobel de la paix

s̅12: prix

̅t8: to

̅t9: receive

̅t10: the

̅t11: nobel peace

̅t12: prize

u8

u9

u10

u11

u12

The translation model is an n-gram model of tuples

• 3-gram tuple LM and 4-gram target word LM

• Four lexicon models (similar to the phrase table)

• Two lexicalized reordering models (orientation of next/previous translation unit)

• Weak distance-based distortion model

• A word bonus and a tuple bonus

• Different tuning sets according to the original language
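To make "an n-gram model of tuples" concrete, here is a minimal sketch that scores the tuples u8 to u12 from the n-code example with a 3-gram model whose vocabulary items are (source, target) pairs. The single-sentence training corpus, the add-one smoothing, and all function names are assumptions made for illustration; they do not describe how n-code estimates its models.

```python
import math
from collections import Counter

# Each tuple pairs a source unit with a target unit (u8..u12 from the example).
tuples = [
    ("à", "to"),
    ("recevoir", "receive"),
    ("le", "the"),
    ("nobel de la paix", "nobel peace"),
    ("prix", "prize"),
]

BOS = ("<s>", "<s>")  # sentence-start padding symbol


def ngrams(seq, n):
    """Return one n-gram per position, padded with BOS on the left."""
    padded = [BOS] * (n - 1) + list(seq)
    return [tuple(padded[i:i + n]) for i in range(len(seq))]


def train(corpus, n=3):
    """Count tuple n-grams and their (n-1)-tuple histories."""
    grams, hists, vocab = Counter(), Counter(), set()
    for seq in corpus:
        vocab.update(seq)
        for g in ngrams(seq, n):
            grams[g] += 1
            hists[g[:-1]] += 1
    return grams, hists, vocab


def log_prob(seq, model, n=3):
    """Add-one smoothed log-probability of a tuple sequence (illustrative only)."""
    grams, hists, vocab = model
    v = len(vocab) + 1  # one extra slot for unseen tuples
    return sum(
        math.log((grams[g] + 1) / (hists[g[:-1]] + v)) for g in ngrams(seq, n)
    )


model = train([tuples])
print(log_prob(tuples, model))
print(log_prob(list(reversed(tuples)), model))
```

Because the units of the model are whole tuples, the same n-gram machinery captures both translation choices and the local order of translation units.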

Data Processing

• Better normalization tools provide better BLEU scores

• Specific pre-processing for German as source language

• Using lemmas and POS for the Spanish-English task (freeling¹)

• Cleaning noisy data sets (CommonCrawl)

– Filter out sentences in other languages

– Sentence alignment

– Sentence pair selection using a perplexity criterion

• Language model tuning

¹ http://nlp.lsi.upc.edu/freeling/

Artificial Text generation with SOUL

Issue:

• Conventional models are used to build the pruned search space and the potentially sub-optimal k-best lists

• Continuous space models can only be used to rerank k-best lists

A solution for the language model:

• Sample text from a 10-gram NNLM

• Estimate a conventional 4-gram model used by the decoder.
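The solution proposed for the language model can be sketched as follows: draw sentences from a long-context model by ancestral sampling, then collect 4-gram counts from the sampled text so that a conventional model can be estimated from them. In this sketch `toy_next_word_distribution` is a hypothetical stand-in for the 10-gram SOUL NNLM, and the code stops at raw counts; smoothing and back-off estimation would be left to a standard LM toolkit.

```python
import random
from collections import Counter

BOS, EOS = "<s>", "</s>"


def toy_next_word_distribution(history):
    """Hypothetical stand-in for the NNLM: P(word | previous words)."""
    last = history[-1]
    if last == BOS:
        return {"the": 0.6, "a": 0.4}
    if last in ("the", "a"):
        return {"prize": 0.5, "peace": 0.5}
    return {EOS: 0.7, "prize": 0.3}


def sample_sentence(next_dist, rng, order=10, max_len=50):
    """Ancestral sampling: draw each word given the previous order-1 words."""
    words = [BOS]
    while len(words) <= max_len:
        dist = next_dist(words[-(order - 1):])
        choices, weights = zip(*dist.items())
        word = rng.choices(choices, weights=weights)[0]
        if word == EOS:
            break
        words.append(word)
    return words[1:]  # drop the BOS marker


def count_ngrams(sentences, n=4):
    """Collect the n-gram counts a conventional LM would be estimated from."""
    counts = Counter()
    for sent in sentences:
        padded = [BOS] * (n - 1) + sent + [EOS]
        for i in range(len(padded) - n + 1):
            counts[tuple(padded[i:i + n])] += 1
    return counts


rng = random.Random(0)
artificial_text = [
    sample_sentence(toy_next_word_distribution, rng) for _ in range(1000)
]
counts = count_ngrams(artificial_text)
print(len(counts), "distinct 4-grams from", len(artificial_text), "sampled sentences")
```

The point of the detour through sampled text is that the resulting 4-gram model can be queried inside the decoder, whereas the neural model itself is only practical for reranking k-best lists.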

Figure (Artificial Text generation with SOUL): perplexity (ppx) as a function of the amount of sampled text (× 400M sampled sentences), for artificial texts, artificial+original texts, and original texts.

Pre-ordering (English to German)

Experiments:

• ITG-like parser¹ to generate reordered source sentences

• Build an SMT system using pre-ordered parallel data

• On the English to German task

• Only 16% of the source training sentences are modified

• No clear impact

Figure: histogram of token movement size.

¹ http://www.phontron.com/lader/

Tuning vs original language

Assumption:

• The original language of a text has a significant impact on translation performance

Solution:

• Different tunings for different original languages:

| fr2en, by original language | cz | en | fr | de | es | all |
| --- | --- | --- | --- | --- | --- | --- |
| Baseline | 22.3 | 36.4 | 31.6 | 18.5 | 30.2 | 29.4 |
| Tuning per source | 23.8 | 39.2 | 32.4 | 18.5 | 29.3 | 30.1 |

Experimental Results

| Direction | System | BLEU dev (nt09) | BLEU test (nt10) |
| --- | --- | --- | --- |
| en2de | base | 15.3 | 16.5 |
| en2de | +artificial text | 15.5 | 16.8 |
| en2de | +SOUL | 16.4 | 17.6 |
| en2de | +artificial text+SOUL | 16.5 | 17.8 |
| de2en | base+SOUL | 22.8 | 24.7 |
| en2fr | base+SOUL | 29.3 | 32.6 |
| fr2en | base | 29.1 | 29.4 |
| fr2en | tuning per source | | 30.1 |
| fr2en | +SOUL | | 30.6 |

Note: one further fr2en dev (nt09) score, 30.1, belongs to either the tuning per source row or the +SOUL row.

| Direction | System | BLEU dev (nt11) | BLEU test (nt12) |
| --- | --- | --- | --- |
| en2es | base+pos | 32.3 | 33.8 |
| es2en | base+pos+lem | 30.7 | 33.9 |
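The "tuning per source" systems can be pictured as keeping one set of log-linear weights per original language and picking the matching set at decoding time. The sketch below assumes that each input text carries an original-language tag; the feature names, the weight values, and the fallback to a pooled "all" set are invented for illustration and are not taken from the LIMSI systems.

```python
# Hypothetical tuned weights: one vector per original language of the text.
WEIGHTS = {
    "fr": {"tm": 1.0, "lm": 0.6, "word_bonus": -0.2},
    "en": {"tm": 0.8, "lm": 0.9, "word_bonus": -0.1},
    "all": {"tm": 0.9, "lm": 0.7, "word_bonus": -0.15},  # pooled tuning set
}


def weights_for(original_language):
    """Pick the weights tuned for this original language, else the pooled ones."""
    return WEIGHTS.get(original_language, WEIGHTS["all"])


def score(features, weights):
    """Log-linear model score: weighted sum of feature values."""
    return sum(weights[name] * value for name, value in features.items())


def best_hypothesis(hypotheses, original_language):
    """Return the highest-scoring hypothesis under the selected weights."""
    w = weights_for(original_language)
    return max(hypotheses, key=lambda h: score(h["features"], w))


hypotheses = [
    {"text": "A", "features": {"tm": -2.0, "lm": -5.0, "word_bonus": 4.0}},
    {"text": "B", "features": {"tm": -4.0, "lm": -3.0, "word_bonus": 4.0}},
]
for lang in ("fr", "en", "cz"):
    print(lang, best_hypothesis(hypotheses, lang)["text"])
```

With these made-up numbers the preferred hypothesis changes with the original language, which is the effect that separate tuning sets are meant to exploit.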
