
A robust combined trust region–line search exact penalty structured approach for constrained nonlinear least squares problems

N. Mahdavi-Amiri, Sharif University of Technology, Tehran, Iran
M. R. Ansari, IKI University, Qazvin, Iran

The 8th International Conference of Iranian Operations Research Society

Outline
• Problem description and structure
• Exact penalty method
• Trust region and line search methods
• Step directions: trust region projected quadratic subproblems
• Penalty parameter updating strategy
• Projected structured Hessian approximation
• Special nonsmooth line search
• Computational results
• Conclusions
• References

Problem description and structure
Constrained NonLinear Least Squares Problem (CNLLSP):
• Objective function
• Equality constraints
• Inequality constraints
The equality and inequality constraints are the functional constraints; all functions involved are smooth.

Notations:
• Transpose of the Jacobian
• Gradient of the objective function
• Hessian of the objective function, made up of two parts, labeled 1 and 2
Note: By knowing the Jacobian, we can conveniently compute the gradient and part 1 of the Hessian.

Exact penalty method
Aim: CNLP → UCNLP
Continuously differentiable:
• Fletcher (1973): equality constrained.
• Glad and Polak (1979): equality and inequality constrained.
Nondifferentiable:
• Zangwill (1967).
• Pietrzykowski (1969).

Some attractive features:
• They overcome difficulties posed by degeneracy.
• They overcome difficulties posed by inconsistent constraint linearization.
• They are successful in solving certain classes of problems in which standard constraint qualifications do not hold.
• They are used to ensure feasibility of subproblems and improve robustness.
Coleman and Conn (1982): CNLP implementation
Mahdavi-Amiri and Bartels (1989): CNLLS implementation

Pertinent to our scheme:
• Dennis, Gay and Welsch (1981): structured DFP update for UNLP and for UNLLS, in particular.
• Nocedal and Overton (1985): quasi-Newton update of projected Hessian for CNLP.
• Mahdavi-Amiri and Bartels (1989): projected structured update for CNLLS.
• Dennis and Walker (1981): local Q-superlinear convergence for the structured PSB and DFP methods, in general, and for UNLLS, in particular.
• Dennis, Martinez and Tapia (1989): structured principle, and local Q-superlinear convergence of structured BFGS update for UNLP.
• Mahdavi-Amiri and Ansari (2013): Globally convergent exact penalty projected structured Hessian updating schemes.
• Mahdavi-Amiri and Ansari (2012): Locally superlinearly convergent exact penalty projected structured Hessian updating schemes.

ℓ1-exact penalty function (violation adds penalty)
The penalty term is the 1-norm of the constraint violations.
Note: There exists a fixed threshold for the penalty parameter such that, for any parameter value past that threshold, a local minimizer of the penalty function corresponds to a local minimizer of (CNLP).
Note: The penalty function is not differentiable everywhere.

“ε-active” merit function
The merit function is defined in terms of:
• the ε-violated equalities
• the ε-violated inequalities
Note: The merit function is differentiable in a neighborhood of the current point.

More notations:
• active equality constraint indices
• active inequality constraint indices
• active constraint indices
• active constraints gradient matrix

First-order necessary conditions:
If a point is a local minimizer of the penalty function, then there exist “multipliers”, one for each active constraint, such that
(a) the point is a stationary point;
(b) the multipliers are in-kilter.

Second-order sufficiency conditions:
If the first-order necessary conditions are satisfied and the accompanying second-order condition holds, then the point is an isolated local minimizer of the penalty function.

Using the QR decomposition of the active constraints gradient matrix, which supplies a basis for each of the two associated subspaces together with the corresponding identities, stationarity is expressed through the projected (or reduced) gradient.
Note: Computationally, stationarity is accepted to within a small tolerance.

Regions of interest:
Region 1 and Region 2, distinguished by a stationarity tolerance parameter.
Multiplier estimates in Region 2: computed using the QR decomposition of the active constraints gradient matrix.

Trust Region and Line Search Methods
Trust region methods for smooth functions:
• are reliable and robust.
• have strong global convergence properties.
• can be applied to ill-conditioned problems.
• can be used for both convex and non-convex approximate models.
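As a small illustration of the ℓ1-exact penalty function introduced earlier, the sketch below evaluates one common form of it for a least squares objective. The formulas on the slides did not survive extraction, so several choices here are assumptions rather than the authors' definitions: the penalty parameter `mu` multiplies the objective (in the style of Coleman and Conn, and consistent with the later remark that decreasing the parameter improves feasibility), inequalities are written as c(x) ≥ 0, and the function and argument names are hypothetical.

```python
def l1_exact_penalty(residuals, c_eq, c_in, mu):
    """Evaluate mu * phi + (1-norm of constraint violations).

    Assumptions (not taken from the slides):
      phi = 0.5 * sum of squared residuals,
      equalities are c(x) = 0, inequalities are c(x) >= 0,
      mu > 0 scales the objective, not the violation term.
    """
    # Least squares objective built from the residual values at x.
    phi = 0.5 * sum(r * r for r in residuals)
    # Equalities are penalized by |c|; inequalities only when negative.
    violation = sum(abs(c) for c in c_eq) - sum(min(0.0, c) for c in c_in)
    return mu * phi + violation


value = l1_exact_penalty([1.0, 2.0], [0.5, -0.25], [1.0, -2.0], mu=0.1)
```

The absolute value and the `min` terms are what make the function nondifferentiable wherever a constraint value crosses zero.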
Line search for nonsmooth exact penalty function
Near a nondifferentiable point, the quadratic subproblem cannot approximate the penalty function properly in a large interval (this may happen in any iterate, whenever the iterate moves to a point where an inactive constraint becomes active). Therefore, the algorithm may have some inadequate iterations.
To overcome this difficulty, we propose a special line search strategy that makes use of the structure of least squares and examines all nondifferentiable points in the trust region along a given descent direction h for a proper minimizer of the penalty function.

Step Directions:
1) global step direction (Region 1):
   a) global horizontal step direction
   b) global vertical step direction
2) dropping step direction (Region 2)
3) Newton step direction (Region 2):
   a) asymptotic horizontal step direction
   b) vertical step direction

Use of step direction:
Region 1:
   compute the global step direction;
   perform a line search to get the step length;
   update the iterate.
Region 2:
   compute the multiplier estimates;
   if a multiplier is out-of-kilter for any active constraint, then
      compute the dropping step direction;
      perform a line search to get the step length;
      update the iterate;
   else (the multipliers are in-kilter)
      compute the Newton step direction;
      update the iterate (no line search).

Trust Region Projected Quadratic Subproblems
Computing horizontal steps (global and local):
Define an approximation of the projected Hessian, which includes an exact part, and the quadratic subproblem, in which the trust region radius and a prescribed constant appear.
Region 1 (global) Compute and set : use , ,
Region 2 (local) Compute : use estimated , and set . .
The solution of this subproblem is given its own notation.

Computing vertical steps: enforce activity
A Newton iterate is used.
Notes:
• stands for or .
• If necessary, the vertical step is cut back.

Computing dropping step:
1) Find an index corresponding to the most out-of-kilter multiplier.
2) Solve a system involving the unit vector for that index.
Note: The dropping step gives a local, first-order decrease in the penalty function, and moves the dropped constraint away from zero up to first order.
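The step selection logic described above can be sketched as a small dispatcher. This is only an illustration under stated assumptions: the in-kilter intervals used here ([-1, 1] for equality multipliers and [0, 1] for inequality multipliers) follow the usual convention for an ℓ1 penalty function and are not recoverable from the slides, and the function name and return format are hypothetical.

```python
def choose_step(region, lam_eq, lam_in):
    """Pick the step type for the current iterate.

    region : 1 (global phase) or 2 (local phase)
    lam_eq : multiplier estimates for active equality constraints
    lam_in : multiplier estimates for active inequality constraints
    Returns (step_type, index), where index identifies the most
    out-of-kilter multiplier when a dropping step is chosen.
    Assumed in-kilter intervals: [-1, 1] (equalities), [0, 1] (inequalities).
    """
    if region == 1:
        return ("global", None)

    worst, worst_index = 0.0, None
    for i, lam in enumerate(lam_eq):
        excess = abs(lam) - 1.0          # distance outside [-1, 1]
        if excess > worst:
            worst, worst_index = excess, ("eq", i)
    for i, lam in enumerate(lam_in):
        excess = max(-lam, lam - 1.0)    # distance outside [0, 1]
        if excess > worst:
            worst, worst_index = excess, ("in", i)

    if worst_index is None:
        return ("newton", None)          # all multipliers in-kilter
    return ("dropping", worst_index)     # drop the worst offender
```

For example, `choose_step(2, [0.5, -1.4], [1.1])` selects a dropping step for the second equality constraint, since its multiplier lies furthest outside the assumed interval.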
Quadratic subproblems:
1) When the iterate is feasible (local quadratic subproblem): a penalty parameter free quadratic subproblem (thus, no computational difficulties in the local phase).
2) When the iterate is not feasible (auxiliary quadratic subproblem).
Note: The auxiliary quadratic subproblem determines the optimal improvement in quadratic feasibility achievable inside the trust region. It helps to balance the progress towards both optimality and feasibility in the exact penalty approach.
Byrd, Nocedal and Waltz (2008): linear subproblem. Here: quadratic subproblem.

Penalty parameter updating strategy
Essential for practical success (robustness) in the context of penalty methods:
• too small a penalty parameter: inefficient behavior and slow convergence
• too large a penalty parameter: possible convergence to an infeasible stationary point
Note: We allow the possibility of both increasing (at times with a safeguard) and decreasing the value of the penalty parameter.

• Weak progress towards feasibility by the step, in comparison with the optimal achievable reduction predicted by the auxiliary subproblem: decrease the penalty parameter (improve feasibility).
• Very good progress towards feasibility by the step, but a poor improvement in optimality: increase the penalty parameter (improve optimality).
Note: The penalty parameter is updated only in Region 1 (global phase), and only when the iterate is not ε-feasible.
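The reduction side of this strategy can be pictured as a simple loop that shrinks the penalty parameter until the two reduction conditions of the strategy hold. The sketch below is a minimal illustration, not the authors' implementation: the constants `eps1`, `eps2`, `shrink` and `mu_min` are placeholders (the example values on the slides were lost), the predicted reductions are supplied as hypothetical callables of the penalty parameter, and comparing both conditions against the best achievable infeasibility reduction is an assumption.

```python
def reduce_penalty(mu, infeas_reduction, penalty_reduction, best_reduction,
                   eps1=0.1, eps2=0.1, shrink=0.5, mu_min=1e-10):
    """Shrink mu until both reduction conditions hold (or mu_min is hit).

    infeas_reduction(mu)  : predicted infeasibility improvement of the
                            step computed with parameter mu (assumed callable)
    penalty_reduction(mu) : predicted decrease in the penalty function
    best_reduction        : best predicted infeasibility improvement
                            achievable inside the trust region
    """
    while mu > mu_min:
        # Condition (1): a fraction of the best possible reduction.
        cond1 = infeas_reduction(mu) >= eps1 * best_reduction
        # Condition (2): sufficient improvement through the penalty function.
        cond2 = penalty_reduction(mu) >= eps2 * best_reduction
        if cond1 and cond2:
            break
        mu *= shrink
    return mu


# Toy model: smaller mu gives more predicted progress towards feasibility.
mu_new = reduce_penalty(8.0, lambda m: 1.0 / (1.0 + m), lambda m: 1.0,
                        best_reduction=1.0, eps1=0.5)
```

In a real solver each call to `infeas_reduction` would involve re-solving the quadratic subproblem with the trial parameter, which is why the number of reductions per iteration matters in practice.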
Notations:
• the predicted decrease in the objective function
• the predicted improvement in the measure of infeasibility
• the predicted decrease in the penalty function
• the best predicted improvement in the measure of infeasibility achievable inside the trust region

Reduce the penalty parameter:
The penalty parameter is reduced (if necessary) to satisfy the following:
1) the predicted reduction in infeasibility of constraints is a fraction of the best possible predicted reduction;
2) the penalty function provides a sufficient improvement of feasibility.

Increase the penalty parameter:
In practice (on a few problems), to ensure satisfaction of (1) and (2) above, the penalty parameter may become too small too quickly.
Conditions for such an occurrence:
• cases (1) and (2) above are satisfied (the penalty parameter does not decrease),
• there is a very good improvement in feasibility,
• the objective function is not optimal and could be improved,
• there is a poor improvement in optimality.
Therefore, while these conditions hold, the penalty parameter is increased.
Note: If the new penalty parameter does not satisfy (1) and (2), then it is set back to its previous value.
Safeguard (to avoid attaining too large a penalty parameter too soon): increase a limited number of times, at most 2 times in one iteration and at most 6 times in all the iterations.

Step acceptance
For a trial point, define the actual reduction.
Also, for a step direction (global, dropping, or Newton), define the predicted reduction:
• global step
• dropping step
• Newton step
A trial point , , , is accepted if and

Updating the trust region radius:
For current step :
Note: Locally (in Region 2):
• • . there exists a fixed : .
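Since the acceptance test and the radius update formulas did not survive extraction, the following sketch shows only the generic ratio-based pattern that trust region methods commonly use, built from the actual and predicted reductions defined above. All thresholds and scaling factors (`eta`, `poor`, `good`, `shrink`, `grow`, `delta_max`) are hypothetical placeholders, not the values used by the authors.

```python
def accept_and_update_radius(actual, predicted, delta,
                             eta=1e-4, poor=0.25, good=0.75,
                             shrink=0.5, grow=2.0, delta_max=100.0):
    """Return (accepted, new_delta) from actual and predicted reductions.

    Generic ratio test, with placeholder constants:
      rho = actual / predicted
      accept the trial point when predicted > 0 and rho >= eta,
      shrink the radius when agreement is poor, enlarge it when good.
    """
    if predicted <= 0.0:
        # The model predicts no decrease: reject and contract.
        return False, shrink * delta

    rho = actual / predicted
    accepted = rho >= eta

    if rho < poor:
        new_delta = shrink * delta
    elif rho > good:
        new_delta = min(grow * delta, delta_max)
    else:
        new_delta = delta
    return accepted, new_delta
```

A call such as `accept_and_update_radius(0.9, 1.0, 1.0)` accepts the trial point and doubles the radius, while a negative actual reduction rejects the point and halves it.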
Projected structured Hessian approximation
Notations:
• violated equality constraints gradient matrix
• violated inequality constraints gradient matrix
• violated equality vector of signs
Assumption: Region 2 (locally)
Secant condition: Mahdavi-Amiri and Bartels (1989)
Structured Broyden’s family update: Engels and Martinez (1991)
• BFGS
• DFP

Special nonsmooth Line Search Strategy (for global or dropping direction)
• h: a descent search direction.
• Step length along h.
• Breakpoint: a point where the derivative of the penalty function along h does not exist (when a new constraint becomes active).
Note: A minimizer exists and a minimum occurs either at a breakpoint, or at a point where the derivative is zero.
Motivation: Consider the residuals and the constraints to be linear. Approximate the penalty function using linear approximations of the residuals and the constraints.

Notation is introduced for the derivative, and for the right and left derivatives, of the penalty function along h. Similar notations are used for its approximation.
Note:
• Breakpoints occur at zeros of the constraints.
• If then (by linearity of gives a breakpoint.
• Breakpoints are computed approximately using linear approximations.

Strategy: Examine breakpoints sequentially, and find a minimum in one of the following situations:
(i) at a breakpoint (Figure 1), or
(ii) at a point where the derivative is zero (Figure 2).
Note: By the used linearizations, all derivatives are computed without any new objective or constraint function evaluation.
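The breakpoint strategy can be illustrated on the linearized model. The sketch below is a minimal version under explicit assumptions, since the slide formulas were lost: the model along h is mu * 0.5 * ||F + alpha * Jh||^2 plus the ℓ1 violation of the linearized constraints, inequalities are taken as c ≥ 0, and all names (`F`, `Jh`, `d_eq`, `d_in`, `alpha_max`) are hypothetical. Here `Jh` is the Jacobian applied to h, and `d_eq`, `d_in` are the directional derivatives of the constraints along h.

```python
def breakpoint_line_search(F, Jh, c_eq, d_eq, c_in, d_in, mu, alpha_max):
    """Minimize the linearized penalty model over [0, alpha_max].

    Between consecutive breakpoints the model is a smooth quadratic, so the
    minimum is either at a breakpoint or where the slope vanishes.
    No new function evaluations are needed: only F, Jh, c and d are used.
    """
    g0 = sum(f * j for f, j in zip(F, Jh))   # slope of 0.5||F||^2 at alpha = 0
    q = sum(j * j for j in Jh)               # curvature along h
    pairs = list(zip(c_eq, d_eq)) + list(zip(c_in, d_in))
    # Breakpoints are zeros of the linearized constraints inside the interval.
    breakpoints = sorted({-c / d for c, d in pairs
                          if d != 0 and 0 < -c / d < alpha_max})
    breakpoints.append(alpha_max)

    def nonsmooth_slope(alpha_mid):
        # Slope contribution of the violation terms, constant on an interval.
        s = 0.0
        for c, d in zip(c_eq, d_eq):
            r = c + alpha_mid * d
            s += d if r > 0 else -d if r < 0 else 0.0
        for c, d in zip(c_in, d_in):
            if c + alpha_mid * d < 0:
                s -= d
        return s

    left = 0.0
    for right in breakpoints:
        k = nonsmooth_slope(0.5 * (left + right))
        s_left = mu * (g0 + left * q) + k     # right derivative at `left`
        s_right = mu * (g0 + right * q) + k   # left derivative at `right`
        if s_left >= 0:
            return left                       # minimum at a breakpoint
        if s_right >= 0:
            return left - s_left / (mu * q)   # zero slope inside the interval
        left = right
    return alpha_max
```

With one residual `F = [1.0]`, `Jh = [-1.0]` and a single equality with `c_eq = [0.5]`, `d_eq = [-1.0]`, the search stops at the breakpoint 0.5; without the constraint it returns the smooth minimizer 1.0.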
Computational considerations
If something goes wrong computationally, then
In region 1 (global ( ) ( to change large) or too small Failure
In region 2 (asymptotic too small otherwise ) ) Failure (to have , and transfer to global phase)

Computational results
• 32 CNLLS problems from Hock and Schittkowski (1981); number of variables: number of constraints:
• 7 CNLLS randomly generated test problems from Bartels and Mahdavi-Amiri (1986); number of variables: number of constraints: objective function and constraints: all nonlinear; Lagrangian Hessian at solution: positive definite for problems 1, 4 and 6, and indefinite for problems 2, 3, 5 and 7.
• 6 CNLLS problems from Biegler, Nocedal, Schmid and Ternet (2000); number of variables: number of constraints: objective function and constraints: all nonlinear.
• 12 CNLLS problems from Luksan and Vlcek (1999); number of variables: number of constraints: objective function and constraints: all nonlinear.

Comparisons:
• 6 methods tested by Hock and Schittkowski (1981).
• 3 algorithms of KNITRO (Byrd, Nocedal and Waltz 2006): Interior/Direct, Interior/CG, Active-set.
• Method of Mahdavi-Amiri and Bartels (1989).
• Method of Bidabadi and Mahdavi-Amiri (2013).
• Two algorithms provided by ‘fmincon’ in MATLAB.

Features of our code:
• Language: C++
• Compiler: Microsoft Visual C++ 6.0
• Linear algebra: Simple C++ Numerical Toolkit
• Computer: x86-based PC, 1667 MHz processor

Performance profile:
Means of comparison: number of objective function evaluations.
Define:
• the number of objective function evaluations in each algorithm for each problem;
• the number of algorithms.
A performance function is defined for each algorithm in terms of these counts and the total number of problems.

Legends:
Tr-LS: our combined trust region-line search method.
B: method of Bidabadi and Mahdavi-Amiri.
H: best methods tested by Hock and Schittkowski.
MA: method of Mahdavi-Amiri and Bartels.
D: Interior/Direct algorithm of KNITRO.
CG: Interior/CG algorithm of KNITRO.
AS: Active-set algorithm of KNITRO.
FM1: Active-set algorithm provided by ‘fmincon’ in MATLAB.
FM2: Interior point algorithm provided by ‘fmincon’ in MATLAB.

Conclusions
• two-step superlinear rate of convergence
• effective new scheme for approximating and updating the projected structured Hessian
• practical adaptive scheme for the penalty parameter updates
• efficient combined trust region-line search strategy that helps in speeding up the global iterations
• employment of an effective trust region radius adjustment
• competitive as compared to other methods

References
[1] M. R. ANSARI and N. MAHDAVI-AMIRI, A robust combined trust region-line search exact penalty projected structured scheme for constrained nonlinear least squares, Optimization Methods & Software 30(1) (2015), 162–190.
[2] R. H. BARTELS and N. MAHDAVI-AMIRI, On generating test problems for nonlinear least squares, SIAM J. Sci. Stat. Computing 7(3) (1986), 769–798.
[3] N. BIDABADI and N. MAHDAVI-AMIRI, A two-step superlinearly convergent projected structured BFGS method for constrained nonlinear least squares, Optimization: A Journal of Mathematical Programming and Operations Research 62(6) (2013), 797–815.
[4] L. T. BIEGLER, J. NOCEDAL, C. SCHMID and D. TERNET, Numerical experience with a reduced Hessian method for large scale constrained optimization, Comput. Optim. Appl. 15(1) (2000), 45–67.
[5] R. H. BYRD, J. NOCEDAL and R. A. WALTZ, Steering exact penalty methods for nonlinear programming, Optimization Methods & Software 23 (2008), 197–213.
[6] T. F. COLEMAN and A. R. CONN, Nonlinear programming via an exact penalty function: Global analysis, Math. Program. 24 (1982), 137–161.
[7] A. R. CONN and T. PIETRZYKOWSKI, A penalty function method converging directly to a constrained minimum, SIAM J. Numer. Anal. 14 (1977), 348–375.
[8] J. E. DENNIS, Jr., D. M. GAY and R. E. WELSCH, An adaptive nonlinear least-squares algorithm, ACM Trans. Math. Soft. 7(3) (1981), 348–368.
[9] J. E. DENNIS, Jr., H. J. MARTINEZ and R. A. TAPIA, A convergence theory for the structured BFGS secant method with an application to nonlinear least squares, Journal of Optimization Theory and Applications 61 (1989), 161–178.
[10] J. R. ENGELS and H. J. MARTINEZ, Local and superlinear convergence for partially known quasi-Newton methods, SIAM Journal on Optimization 1 (1991), 42–56.
[11] R. FLETCHER, An exact penalty function for nonlinear programming with inequalities, Math. Program. 5 (1973), 129–150.
[12] T. GLAD and E. POLAK, A multiplier method with automatic limitation of penalty growth, Math. Program. 17 (1979), 140–155.
[13] W. HOCK and K. SCHITTKOWSKI, Test Examples for Nonlinear Programming Codes, Lecture Notes in Economics and Mathematical Systems 187, Springer-Verlag, New York, 1981.
[14] L. LUKŠAN and J. VLČEK, Sparse and partially separable test problems for unconstrained and equality constrained optimization, Tech. Rep. 767, Institute of Computer Science, Academy of Sciences of the Czech Republic, 1999.
[15] N. MAHDAVI-AMIRI and M. R. ANSARI, Superlinearly convergent exact penalty projected structured Hessian updating schemes for constrained nonlinear least squares: Asymptotic analysis, Bulletin of the Iranian Mathematical Society 38(3) (2012), 767–786.
[16] N. MAHDAVI-AMIRI and M. R. ANSARI, A superlinearly convergent penalty method with nonsmooth line search for constrained nonlinear least squares, SQU Journal for Science 17(1) (2012), 103–124.
[17] N. MAHDAVI-AMIRI and M. R. ANSARI, Superlinearly convergent exact penalty projected structured Hessian updating schemes for constrained nonlinear least squares: Global analysis, Optimization: A Journal of Mathematical Programming and Operations Research 62(6) (2013), 675–691.
[18] N. MAHDAVI-AMIRI and R. H. BARTELS, Constrained nonlinear least squares: An exact penalty approach with projected structured quasi-Newton updates, ACM Transactions on Mathematical Software 15(3) (1989), 220–242.
[19] J. NOCEDAL and M. L. OVERTON, Projected Hessian updating algorithms for nonlinearly constrained optimization, SIAM J. Numer. Anal. 22(5) (1985), 821–850.
[20] T. PIETRZYKOWSKI, An exact potential method for constrained maxima, SIAM J. Numer. Anal. 6 (1969), 299–304.
[21] W. I. ZANGWILL, Nonlinear programming via penalty functions, Management Science 13 (1967), 344–358.

Thank you for your attention!

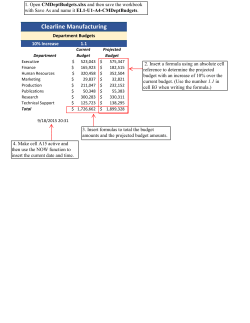
