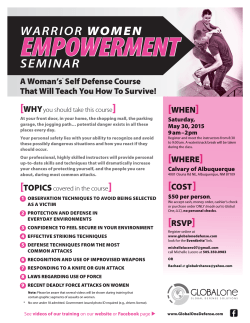

Architecting a Predictive and Proactive Cyber Defense System

Little Book of Security: Cyber Defense

Mission Precognition: Architecting a Predictive and Proactive Cyber Defense System

A must-read for IT decision-makers and information security professionals

Get There Faster Little Book | Cyber Defense

Contents

Is Proactive Cyber Security Really Achievable?
Architecting a Predictive Cyber Defense System
Architecture
Design for Scale
Monitoring
Correlation
Prediction
Response
Analyses
Realizing the Possible

Is Proactive Cyber Security Really Achievable?

The steady rise in cyber warfare attacks on our country’s defense, corporate, and financial systems has created the need for a better approach to cyber defense. The old reactive paradigm will no longer suffice to protect our strategic cyber assets. Attack vectors are consistently becoming more complex and harder to detect.
Attack patterns are moving up the stack from the network layer to the application layer. Our current approach of monitoring, detecting, and then analyzing after the fact is putting us at risk. In effect, we are shutting the barn doors after the horses are already out. By the time we have finished analyzing the attack, the damage is done and usually can’t be reversed.

We need a new approach to defend against this new threat pattern. In order to prevent catastrophic damage to our infrastructure, we need the ability to detect cyber attacks in real time and stop them before they complete. Traditional defenses such as firewalls and intrusion detection systems are not enough; we need a new generation of tools that can react as fast as, or even faster than, the automated tools used to attack our systems.

Many question whether achieving a proactive cyber posture is even possible. The answer is yes! The products and tools necessary to properly protect our mission-critical systems already exist today. We will look at how this new generation of products is making proactive cyber defense a reality.

Architecting a Predictive Cyber Defense System

This guide proposes an architecture that leverages a new generation of tools to create a real-time proactive cyber defense system: a system that not only detects attacks as they are happening but also uses predictive analytics to anticipate them and take proactive action to prevent them, and that learns from previous attack patterns in order to detect and predict similar attacks in the future.

We will prescribe an event-driven architecture built on a complex event processing (CEP) engine. The CEP engine provides a high-performance, intelligent monitoring and response system that fuses security events from a number of point solutions, detects attack patterns across the security product spectrum, and automatically takes proactive measures to thwart or reduce the impact of attacks.
We will also discuss how predictive analytics can minimize infrastructure risk by merging real-time events with historical Big Data into actionable intelligence. The key steps in this process are:

1. Monitor real-time security feeds from all available security and relevant social data sources
2. Combine and correlate data feeds and build predictive models using features across data feeds
3. Analyze patterns to predict system, network, and social vulnerabilities that are most attractive to attackers
4. Automate response workflows that proactively prevent threats
5. Store relevant data in Hadoop for both historical analysis (finding previously undiscovered attacks) and real-time investigations using in-memory computing and visual analytics

Figure 1 - Threat Prevention Steps

It’s difficult to build systems that can ingest the amount of data needed to recognize cyber attacks and then process that data within the time windows needed to prevent or mitigate the impact of the attack. The data needed to recognize an attack is unknown at design time, so the system requires a model that can dynamically recognize new threat clusters, alert analysts to label these new clusters, and feed them to an online learning model for future predictions.

As shown in Figure 1, the process for proactive threat prevention consists of five main phases:

1. Monitoring: Ingesting large amounts of streaming, real-time security events from multiple systems
2. Correlation: Aggregating, filtering, and transforming data streams into correlated events
3. Prediction: Detecting patterns and anomalies across correlated events to predict attacks
4. Response: Initiating automated and manual workflows to respond to and prevent attacks
5. Analysis: Analyzing historical and real-time data to detect and label new or undetected attacks

Each of these phases is critical to the success of a proactive cyber defense system. Unfortunately, most of today’s cyber security products and applications focus heavily on monitoring and detection at a granular level. They lack the ability to correlate events across other vendors’ products, predict complex attacks in flight, and, most importantly, respond to those attacks using dynamic workflows that ensure immediate and thorough coverage of the response protocols.

Architecture

As the amount of security data associated with applications continues to grow, the ability to store and process that data in a useful timeframe continues to shrink. Big Data technologies let us store and process extremely large amounts of data, but don’t necessarily provide an adaptive way to process and analyze the data in real time, which limits our ability to perform predictive analyses on it. Big Data technologies such as Hadoop are batch-oriented: they focus more on running different analyses over the same data set than on ingesting new data and repeating the same types of analyses, as you would want to do in a cyber-crime or fraud detection scenario.

What is needed is a technology that allows events from multiple sources to be correlated in real time, and actions to be taken on events matching patterns of interest, in order to see ahead and avert the pattern’s end state. For example, cyber attacks and fraud often follow known patterns. If the pattern can be detected early, actions can be taken to avoid a successful intrusion or a fraudulent transaction.

This new era of real-time data collection and predictive analysis calls for a new paradigm.
One such paradigm is an event-driven architecture, which lets you treat data feeds as streams and search them for items of interest, rather than continuously storing all the data and repeatedly processing it.

Design for Scale

The basis for any real-world threat detection system must be a scalable architecture. Cyber attacks are growing more complex and the data that must be collected continues to multiply. In order to address the ever-growing number of events and the shrinking response window, an event-driven architecture (EDA) must be used to ensure real-time performance.

Event-driven architectures are an approach to handling a large number of asynchronous events, such as in a stock trading system. They differ from traditional service-oriented architectures in the way they handle requests. Instead of batching data together into requests that are submitted and responded to synchronously, EDA exposes fine-grained data that represent events in an asynchronous manner. As a result, data streams can be filtered for particular events. EDA systems are usually designed for performance, concentrating on handling thousands to millions of events a second.

This type of architecture is well suited for large sensor networks in which millions of events must be sifted through and correlated. Traditionally, rules engines were used for this type of processing, but they are quickly overwhelmed by such high-throughput systems.

Monitoring

The first step in any threat prevention architecture is a pervasive monitoring strategy. Most organizations have robust network monitoring tools, but neglect the rest of the stack in favor of the entry points. Monitoring needs to be put in place across all of the platform tiers and at every layer.
It is not sufficient to protect only the perimeter when threats are increasingly coming from the inside, as well as from higher up the application stack. In addition to network sensors, database logs, application server logs, application logs, iDM logs, directory server logs, OS logs, host-based intrusion detection systems, and a slew of other security sensors must be monitored continuously. Within the government, CDM (continuous diagnostics and mitigation) has become a religion because security experts all agree that most of the attacks taking place today can be stopped just through better monitoring.

Correlation

When all of the proper security sensors are in place and are being continuously monitored, all of that data must be correlated. Today’s threats are sophisticated and designed to evade single monitoring sensors, such as a network-based intrusion detection system. Instead, they exploit vulnerabilities at many different layers and in several disparate products. In order to detect these sophisticated attacks, events from all of the different types of security sensors must be aggregated and correlated.

A large organization or government agency can generate millions of events per second, and aggregating and correlating them in real time requires a different way of handling events. To process this magnitude of events from so many different data streams, a complex event processing (CEP) engine is required.

A complex event processor correlates events by creating extremely lightweight event listeners that can be tied together and given lifetimes in order to detect higher-order events. For instance, a simple correlation rule might look like: “if event A from stream X happens within 5 seconds of event B from stream Y, take action C”.
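The correlation rule quoted above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Apama or any other CEP engine implements it: the `Event` and `WithinWindowRule` names are hypothetical, and the sketch assumes events arrive in timestamp order and that the rule should fire regardless of which of the two events comes first.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    stream: str       # source stream, e.g. "X"
    name: str         # event type, e.g. "A"
    timestamp: float  # seconds since an arbitrary epoch


class WithinWindowRule:
    """Run `action` when the two configured event types occur within
    `window` seconds of each other, in either order.

    Assumes events are delivered in non-decreasing timestamp order.
    """

    def __init__(self, first, second, window, action):
        self.first = first      # (stream, name) pair, e.g. ("X", "A")
        self.second = second    # (stream, name) pair, e.g. ("Y", "B")
        self.window = window    # lifetime of a buffered event, in seconds
        self.action = action    # callable(earlier_event, later_event)
        self._recent = {first: deque(), second: deque()}

    def on_event(self, event):
        key = (event.stream, event.name)
        if key not in self._recent:
            return  # not an event this rule listens for
        # Expire buffered events that have fallen out of the time window.
        for buffered in self._recent.values():
            while buffered and event.timestamp - buffered[0].timestamp > self.window:
                buffered.popleft()
        # Pair the new event with every still-live event of the other type.
        other = self.second if key == self.first else self.first
        for partner in self._recent[other]:
            self.action(partner, event)
        self._recent[key].append(event)
```

A rule would be created as `WithinWindowRule(("X", "A"), ("Y", "B"), 5.0, action)` and fed every incoming event through `on_event`. The bounded buffers play the role of the listener "lifetimes" mentioned above: an event that finds no partner within the window is simply discarded.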
Simple rules like this can then be strung together to look for very sophisticated patterns, such as those used by credit card companies for fraud detection or those used to spot high-frequency trading violations.

A good CEP engine, such as Apama, provides an integrated development environment with graphical tools to construct and dynamically deploy event monitors. It also provides a rich set of analysis tools to gain deeper insights into the data streams, as well as a playback capability that allows you to test different scenarios and create monitors that can be tested against real data in an accelerated or decelerated time frame.

Using a CEP engine, events from all of the different security sensors that are being monitored can be aggregated and correlated to provide a more holistic view of the ongoing threat landscape. Threats that were once hard to detect because they spanned multiple sensors can now be easily recognized once the corresponding events are correlated.

Prediction

When all the events from the various security sensors and logs have been aggregated and correlated to detect patterns, these patterns can be recognized and future events can be predicted. For instance, consider a multi-phase attack that starts with a phishing attack, then downloads a file onto a target’s host, scans the file system for certain document types, and then uploads those documents to another compromised machine, where the attacker can collect them. Once the system correlates the events from the email server and the host-based intrusion detection system, it can recognize the pattern and predict that documents will be stolen by being uploaded to one of the attacker’s machines. It is this ability to recognize patterns and predict future events that is core to an adaptive security strategy.
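The multi-phase example above can be illustrated with a small per-host stage tracker. This is a deliberately simplified sketch: it matches a single hand-written stage sequence rather than a trained model, and the stage names, the `StageTracker` class, and the `alert_after` threshold are all assumptions made for illustration.

```python
# Hypothetical event types for the four phases described in the text.
ATTACK_STAGES = [
    "phishing_email",    # reported by the email server
    "file_download",     # reported by host-based intrusion detection
    "filesystem_scan",   # reported by host-based intrusion detection
    "document_upload",   # the stage we want to prevent
]


class StageTracker:
    """Track, per host, how far along a known attack sequence we are."""

    def __init__(self, stages, alert_after=2):
        self.stages = stages
        self.alert_after = alert_after  # stages matched before predicting
        self._progress = {}             # host -> number of stages matched

    def observe(self, host, event_type):
        """Record a correlated event.

        Returns the list of stages predicted to follow once at least
        `alert_after` stages have matched in order; otherwise None.
        Events that are not the next expected stage are ignored.
        """
        matched = self._progress.get(host, 0)
        if matched < len(self.stages) and event_type == self.stages[matched]:
            matched += 1
            self._progress[host] = matched
            if self.alert_after <= matched < len(self.stages):
                return self.stages[matched:]
        return None
```

With `alert_after=2`, a phishing email followed by a file download on the same host yields the prediction `["filesystem_scan", "document_upload"]`, which is the point at which a response could be triggered before any documents leave the host.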
Continuously detecting patterns and making predictions relies on trained data sets, and those data sets must be continuously updated. In order to process events in real time, an in-memory computing solution, such as Software AG’s Terracotta platform, must be used. In-memory computing allows the millions of streaming events to be maintained and processed in memory, so that predictions can happen in real time. It also speeds up the process of building new classification training sets to recognize new features and to re-train and/or learn online with new data sets.

Training sets for the prediction algorithms must be built by analysts who can identify and label attack patterns in the correlated event sets. They can also be built from a visual analytics platform like Presto, using current data available in memory along with historical data stored in a Big Data store such as Hadoop.

Response

Now that the capability exists to predict future stages of an attack, steps must be taken to proactively prevent theft or corruption of the data by responding with preventative measures. Responding in real time to both known and newly learned attacks requires the ability to dynamically create and deploy response workflows. To accomplish this, a business processing engine (BPE) is needed.

A BPE, like webMethods, provides dynamic workflow creation that is easy to use and powerful enough to integrate all of the governance requirements associated with a formal threat response capability. Security analysts can interact with BPE processes using task management and collaboration capabilities. The various business and IT stakeholders can define and change the rules that drive the threat response processes “on the fly” without any development work. Processes are orchestrated, resulting in transparent, efficient, and adaptive processes for active threat prevention and end-to-end visibility.
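The idea of rule-driven response processes that can be changed without redeploying code can be sketched as a table of playbooks. This is not how webMethods models workflows; the `ResponseEngine` class, the step names, and the status labels below are hypothetical and only illustrate the combination of automated steps, manual analyst tasks, and an audit trail.

```python
from dataclasses import dataclass, field


@dataclass
class ResponseEngine:
    playbooks: dict = field(default_factory=dict)  # threat type -> ordered step names
    handlers: dict = field(default_factory=dict)   # step name -> callable(host)
    audit_log: list = field(default_factory=list)  # (threat, host, step, status)

    def set_playbook(self, threat, steps):
        """Define or replace the response steps for a threat type at runtime."""
        self.playbooks[threat] = list(steps)

    def respond(self, threat, host):
        """Run the playbook for `threat` against `host`.

        Steps with a registered handler run automatically; steps without
        one are recorded as manual tasks for an analyst. Every step is
        written to the audit log. Returns the (step, status) pairs.
        """
        results = []
        for step in self.playbooks.get(threat, []):
            handler = self.handlers.get(step)
            if handler is not None:
                handler(host)
                status = "automated"
            else:
                status = "manual_task"
            self.audit_log.append((threat, host, step, status))
            results.append((step, status))
        return results
```

For example, registering a handler under a hypothetical step name such as `"block_outbound_traffic"` and calling `set_playbook("document_exfiltration", ["block_outbound_traffic", "analyst_review"])` would run the first step automatically and queue the second for an analyst. Calling `set_playbook` again changes the behavior of later responses without touching the engine itself.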
In addition to manual portions of the workflow, many automated responses can be configured as well, such as the ability to lock down ports and user accounts. In our earlier example, once the threat has been recognized and the future stages predicted, the BPE could respond by shutting off outbound traffic from the compromised host and then alerting a security response team so that they can go in and quickly remove the attacker’s tools from the machine before any data is stolen. All of the steps in the process can be managed, monitored, and tracked to ensure proper auditing of the attack and the response.

Analyses

Any security incident starts and ends with analyses. Security analysts need tools to weed through piles of data, dissect an attack, and understand its impact. Large organizations employ groups of security forensic experts to analyze gigabytes of data in log files and reports in order to comprehend the extent of the damage from a successful attack.

Therefore, the platform must provide visual analytics support that enables analysts to merge, filter, sort, and run complex queries on multiple static and streaming data sources. Through visual analytics, analysts can easily combine different data sources, from log files to vendor-provided web services, and not only analyze the data but also apply a variety of visualizations to it and then share those visualizations with other analysts and stakeholders.

Presto is a visual analytics platform built for analysts who need to aggregate and visualize data from multiple data sources in real time. It leverages its Real-Time Analytics Query Language (RAQL), a SQL-like query language that allows access to static and streaming data sources, to provide drag-and-drop analytics to analysts without the need for programming.

Realizing the Possible

Architecting a predictive cyber defense system entails several steps and requires a variety of different products and technologies. Such a system should provide a single solution to monitor and manage threat investigations, as well as proactive threat defense in the form of automated response workflows that stop attacks in progress.

The first step in the process is to ensure there is proper monitoring in place at all critical points throughout the IT landscape. All of the cross-vendor and cross-product monitoring data must be aggregated and correlated to provide a common operating picture from a security perspective. The cyber defense system must have the ability to predict events, and our straw man architecture does so using industry standards such as R and JavaML. It can detect new threat scenarios by allowing security analysts to visually analyze and label new threats and then create response workflows that prevent those scenarios from completing.

This is an open architecture that can be tailored to an agency’s specific requirements. It can be rapidly implemented through the use of GUI-driven tools and intuitive graphical interfaces and visualizations. It also prescribes a case management approach to threat response, leveraging a fully audited workflow for governance compliance and better risk awareness.

Our proactive threat response architecture relies on technologies and tools that are readily available today. Leveraging these tools, it is possible to implement a predictive cyber security defense system that doesn’t just enhance existing cyber defense capabilities, but extends them to provide proactive defense by predicting an attack in progress and taking action to stop it before any real damage is done.

Find out how to Prove IT First and Prove IT Fast at www.SoftwareAGgov.com

ABOUT SOFTWARE AG GOVERNMENT SOLUTIONS

Software AG Government Solutions is dedicated to serving the U.S. Federal Government and Aerospace and Defense communities with massive-scale and real-time solutions for integration, business process management and in-memory computing. Our flagship products are webMethods, Terracotta/Enterprise Ehcache, ARIS and Apama. The company’s highly effective “special forces” approach to solving complex IT challenges quickly and efficiently is embraced across our customer base as a prove it first and prove it fast means for minimizing risk associated with IT investments. Headquartered in Reston, VA, Software AG Government Solutions is a wholly owned subsidiary of Software AG USA with more than 30 years of experience with the federal government. Learn more at www.SoftwareAGgov.com

© 2015 Software AG Government Solutions Inc. All rights reserved. Software AG and all Software AG products are either trademarks or registered trademarks of Software AG.
