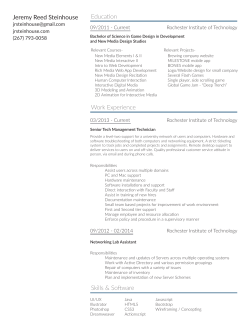

.NET 4.6