
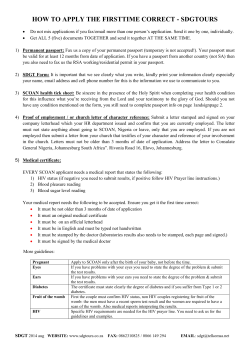

How to spot invalid expert opinions in auto injury litigation: what every lawyer needs to know about pre-test probabilities

Michael D Freeman PhD MPH DC1,2

Douglas Mossman MD3

1 You can contact the author at [email protected], 205 Liberty Street NE Suite B, Salem OR 97301, 503-586-0127.

2 Department of Public Health and Preventive Medicine, Oregon Health and Science University School of Medicine and the Institute of Forensic Medicine, Faculty of Health Sciences, Aarhus University.

3 Glenn M. Weaver Institute of Psychiatry and Law, University of Cincinnati College of Law; Department of Psychiatry, University of Cincinnati College of Medicine.

Picture the following scenario. You have just gotten a routine blood test to renew your life insurance policy. It’s no big deal: this happens every year, you’re fit as a fiddle, and you’re not worried that anything untoward will pop up. Two weeks after the blood test, your insurance agent calls and tells you that he is very sorry: the company that you were renewing your insurance with doesn’t insure people with HIV.

What should you do? You’ve just been told that you tested positive for a disease that is almost certain to kill you. Do you hit the internet and investigate the latest treatments for HIV? Do you drain your retirement account, buy a Harley and book a world cruise? Or do you astonish your insurance agent by laughing off the positive result while you inform him that the odds against your really having HIV are 10,000 to 1?

The correct answer is that it depends on who you are. If you have shared needles during intravenous drug use or have had unprotected male/male sex with multiple partners, then you should start picking a color for your new motorcycle. If you are like many people who renew their life insurance (you’re not an IV drug user, you’ve been faithfully married for 10-plus years, and you had a negative HIV test when you first got your life insurance policy), then you can start laughing.

Why? People with different behavior patterns have very different probabilities of acquiring an HIV infection. Unlike many diseases, which are opportunistic (meaning that they lie in wait for some weakness, like the bacteria that typically live harmlessly in your gut until your appendix ruptures), virtually all people who have been infected with HIV in the past 10 years could have prevented the infection by modifying their behavior. (The US Centers for Disease Control estimates that more than 99% of new HIV infections resulted from IV drug use or high-risk sex, neither of which is likely to occur “accidentally.”)

The probability that you have the condition you are being tested for is what epidemiologists call the prevalence of the condition. For some conditions, prevalence varies a lot from group to group. The prevalence of HIV among long-term IV drug users, for example, may be as high as 0.25 (25 out of 100 are positive). By contrast, the prevalence of HIV among healthy, faithfully married, heterosexual men who have no history of HIV-risk behaviors and previously had a negative HIV test is so tiny that it’s hard to estimate, but a reasonable guess might be less than 0.0000001 (fewer than 1 in 10,000,000).

The HIV test that the insurance agent had you get could have yielded two results, “positive” or “negative.” HIV tests are highly accurate, but they are not perfect: a very small fraction of both positive and negative results are incorrect. This means that there are correct, or “true,” positives and negatives, and incorrect, or “false,” positives and negatives. As is the case with most medical tests, we are most interested in a positive HIV result; no one needs to call to tell you that the test was negative when you had no reason to expect anything else. So all you really care about is whether your result is a true positive or a false positive.

True positives

If you are like many people, you may think that if a test has a “true positive rate” of 90%, it means that when the test is positive, the condition is present 90% of the time. This seemingly intuitive conclusion is actually an error of reasoning called the “Prosecutor’s Fallacy.”4 What a true positive rate of 90% actually means is that the test will be positive in 90 out of every 100 people who have the condition. This doesn’t mean that every positive is a true positive, however. The true positive rate is really the true positive detection rate.

4 The Prosecutor’s Fallacy is classically presented as follows: a murder suspect has a rare hair color that is identical to that of a hair found at the crime scene. The hair color is present in only 1% of the population. The prosecution presents this evidence as proof that there is a 99% probability the suspect is guilty, disregarding the fact that in a city of 1,000,000, roughly 10,000 people would have the same hair color. The diametrically opposite fallacy is the Defense Lawyer’s Fallacy, in which the same evidence is used to claim that the odds against the suspect having committed the murder are 9,999 to 1 because there are 9,999 other possible suspects in the same city. Both fallacies were employed by the prosecution and defense in the murder trial of OJ Simpson, apparently more effectively by the defense.

False positives

A false positive is simply an incorrectly positive test result; that is, the test is “positive” for a condition when the condition is not really present. False positive rates may not be the same for all populations. The mistake that most people make when they hear that a test is positive is to assume that it is a true positive without knowing the prevalence, or pre-test probability, of the condition that the test is designed to detect. Confused? Let’s illustrate these concepts with an example.

Returning to your hypothetical positive result on the HIV test: suppose the test administered by the life insurance company has a fairly typical true positive rate of 99.9% and a fairly typical false positive rate of 0.1%. You can then figure out for yourself whether or not you should be worried, depending on whether you are in the high-risk or the low-risk group. We already know that if you are in the high-risk IV drug user group, there is a 25% chance that you are HIV-positive. We can show a hypothetical population of 10 million high-risk persons graphically (the reason for 10 million will be apparent shortly), first with everyone mixed together, and then divided into HIV-infected and HIV-uninfected subgroups:

[Figure: High-risk population of 10,000,000, divided into 2,500,000 infected and 7,500,000 uninfected people]

We can depict the low-risk population in the same fashion:

[Figure: Low-risk population of 10,000,000, divided into 9,999,999 uninfected people and 1 infected person]

As you can see, we need 10,000,000 people in the low-risk group just to have one HIV-infected person; that’s why we put 10,000,000 people in the high-risk group for comparison. If we assume that both groups take the insurance company’s HIV test, we can figure out what fraction of the time the test results will be true positives and false positives. Remember that the test has a 99.9% true positive rate and a 0.1% false positive rate, that the true positive rate applies only to the people who really are infected (the infected subgroup), and that the false positive rate applies only to the uninfected subgroup.
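
The bookkeeping just described can be sketched in a few lines of Python. The procedure is to apply the true positive rate to the infected subgroup, apply the false positive rate to the uninfected subgroup, and compare the two counts. This is a minimal illustration, not a tool from the authors. The names `expected_positives` and `ratio_text` are hypothetical, and the subgroup sizes and 99.9%/0.1% rates are the hypothetical values from the example.

```python
from dataclasses import dataclass


@dataclass
class ExpectedPositives:
    """Expected positive test results for one tested group."""
    true_positives: float
    false_positives: float


def expected_positives(infected: int, uninfected: int,
                       true_positive_rate: float,
                       false_positive_rate: float) -> ExpectedPositives:
    """The true positive rate applies only to the infected subgroup,
    the false positive rate only to the uninfected subgroup."""
    return ExpectedPositives(
        true_positives=infected * true_positive_rate,
        false_positives=uninfected * false_positive_rate,
    )


def ratio_text(result: ExpectedPositives) -> str:
    """Express true:false positives as 'N to 1' or '1 to N', rounded."""
    tp, fp = result.true_positives, result.false_positives
    if tp >= fp:
        return f"{tp / fp:,.0f} to 1"
    return f"1 to {fp / tp:,.0f}"


TPR, FPR = 0.999, 0.001  # hypothetical test characteristics from the example

groups = {
    "high risk": expected_positives(2_500_000, 7_500_000, TPR, FPR),
    "low risk": expected_positives(1, 9_999_999, TPR, FPR),
}

for name, result in groups.items():
    print(f"{name}: {result.true_positives:,.0f} true vs "
          f"{result.false_positives:,.0f} false positives "
          f"({ratio_text(result)})")
```

Run as-is, this reports about 333 true positives per false positive for the high-risk group and about 1 to 10,000 for the low-risk group. The low-risk figure prints as 1 to 10,010 because the single infected person contributes 0.999 of an expected true positive rather than a full 1.
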

When the high-risk group takes the HIV test, we expect the following result:

[Figure: High-risk population of 10,000,000 after testing. Uninfected: 7,500,000 people at a 0.1% false positive rate = 7,500 false positive tests. Infected: 2,500,000 people at a 99.9% true positive rate = 2,497,500 true positive tests.]

In the high-risk group, we expect to get 2,497,500 true positive tests and 7,500 false positive tests, or 333 correct positive results for each incorrect positive result. So, if a member of this high-risk group has a positive HIV test, the post-test ratio is 333 to 1 in favor of actually having HIV. Now let’s examine what we can expect for members of the low-risk group.

[Figure: Low-risk population of 10,000,000 after testing. Uninfected: 9,999,999 people at a 0.1% false positive rate ≈ 10,000 false positive tests. Infected: 1 person at a 99.9% true positive rate ≈ 1 true positive test.]

What a “positive” test means for someone in the low-risk group is astoundingly different from what the result meant for the high-risk group: 10,000 false positive results for every 1 correct result. To put this another way, even if a member of this low-risk group has a positive HIV test, the post-test ratio is only 1 to 10,000 in favor of actually having HIV. Remember, the low-risk group took the exact same HIV test as the high-risk group! What was different about the two groups was their pre-test probability of having HIV. If you hadn’t seen the mathematical results with your own eyes, you might ask, “How can the exact same test yield results that are so enormously different?” The answer, which should now be obvious, is that the pre-test probability of the condition of interest is critical to what a test result means and whether the results can be considered valid,5 and this is where the story gets interesting for injury litigation.

Pre-test Probabilities and Injury Litigation

A middle-aged client comes to your office and gives the following history: he was in a rear-impact collision with minimal damage to his car.
He went to his primary care doctor the day of the crash complaining of dizziness, nausea, a headache, and confusion. After 2 months of complaints, the same doctor sent your client for an MRI scan of his brain, which showed microcavitation in the frontotemporal lobe, and subsequent neuropsychological testing related the MRI finding to a brain injury that now affects the client’s ability to do his job. Both his primary care doctor and the neuropsychologist relate the injury to the collision. You take the case and file suit.

You later learn that the law firm representing the insurer plans to use the testimony of a neuropsychologist, who will testify that he administered a malingering test called the Fake Bad Scale to your client and that it came back positive. His conclusion is that your client is malingering and does not have a real head injury. If the opinion sounds familiar, it’s because the allegation of malingering is the most commonly used method for defending injury litigation. Most attorneys deal with such opinions through cross-examination, often with mixed results. There is a better way, however, and it comes through an understanding of how pre-test probabilities affect the validity of tests.

All forensic methods are really tests, and all forensic opinions are really test results

Regardless of the type of expert opinion, the bases for all testimony can be described in terms of test results. This is obvious when experts testify about tests they have administered, as in our example, but the same concept applies to orthopedic, radiologic, and even biomechanical expert testimony. Given this fact, all reported positive test results can be broken down into true positives and false positives, and this allows for an evaluation of the test’s validity.

5 Validity can be defined in a variety of ways, but we will use it to describe the ratio of true positives to false positives that a test produces in a population with a specified pre-test probability. Determining the cutoff value for a test to be considered “valid” is somewhat debatable. The medical literature generally doesn’t consider a test valid for use in diagnosis unless it’s right 10 times for every one time it’s wrong, and this is a good lower threshold value for a validity ratio that has also been used in the forensic literature.

What forensic tests mean and why plaintiff tests are inherently more valid than defense tests

An evaluation of the validity ratio of the positive findings from the plaintiff and defense experts requires an understanding of the same three factors described earlier in the hypothetical HIV test scenario: the true positive rate of the test, the false positive rate of the test, and the pre-test probability of real injury versus malingering given the facts presented earlier. What we have learned from the HIV test example is that a medical test’s meaning is largely a function of pre-test probability, that is, what our prior knowledge tells us about the likelihood of the condition being present. If the condition we are interested in is reasonably common in the group being tested, then a positive result implies a high probability of having the condition (that is, the validity is high). If the condition is rare, however, the results may fall below the 10 to 1 threshold for the test to be considered valid.

To figure out what any test means,6 we have to assign an estimated value to each of the three factors needed to calculate the validity ratio. Often, estimating the true and false positive rates of a test is fairly straightforward, because a published study may report these values. Estimating the most important value, the pre-test probability, can be a bit more involved.
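
Written as a formula, the validity ratio is the expected number of true positives divided by the expected number of false positives. In the sketch below, $p$ is the pre-test probability, $\mathrm{TPR}$ is the true positive rate, and $\mathrm{FPR}$ is the false positive rate. These symbols are introduced here for convenience and are not the authors' notation.

$$
\text{validity ratio} = \frac{\text{expected true positives}}{\text{expected false positives}} = \frac{\mathrm{TPR}\cdot p}{\mathrm{FPR}\cdot(1-p)} = \frac{\mathrm{TPR}}{\mathrm{FPR}} \times \frac{p}{1-p}
$$

This is Bayes' theorem in odds form: the post-test odds equal the positive likelihood ratio, $\mathrm{TPR}/\mathrm{FPR}$, times the pre-test odds, $p/(1-p)$. The first factor depends only on the test, and the second depends only on who is being tested. For the HIV example, the high-risk group gives $\frac{0.999}{0.001}\times\frac{0.25}{0.75} = 333$. The low-risk group gives $\frac{0.999}{0.001}\times\frac{10^{-7}}{1-10^{-7}} \approx 10^{-4}$, which is about 1 to 10,000.
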
One intuitive way to estimate the pre-test probability is to ask a question about the persons in the population who will be tested. For tests applied by the plaintiff expert, this question would be, “How often would I find a real brain injury in a group of 100 people with the same history, physical exam findings, and test results as the plaintiff?” For the test applied by the defense expert, the question is, “How often would I find malingering in 100 people who have the same history, physical exam findings, test results, prior physician determination of real injury, and absence of any prior finding of malingering as this plaintiff?”7 We might estimate the factors for the validity ratios of the plaintiff and defense experts’ tests as follows.

6 One might reasonably argue that if an expert can’t give some estimate of the validity of his test, i.e., how often he expects it to be correct vs. incorrect, then the Court should not let the expert present the results of the test, as they have no real meaning.

7 Notice that in our example (and most of the time in real-world litigation), a defense determination of malingering contradicts a prior physician’s evaluation and diagnosis of a real injury. So for the defense opinion to be correct, the original determination that the plaintiff had a real injury must be in error.

Plaintiff expert’s neuropsychological test for brain injury (a positive test means the person really has a brain injury).

True positive rate = 95%. Certain neuropsychological tests are very good at detecting problems in persons with the type of brain injury that showed up on the plaintiff’s brain MRI scan.

False positive rate = 20%. This reasonable ballpark estimate recognizes that problems other than brain injury may contribute to poor performance on very sensitive neuropsychological tests.

Pre-test probability of brain injury = 80%.
By the time a patient has been referred to a neuropsychologist for testing, the doctor has a high index of suspicion that the patient has a brain injury. However, the possibility remains that the patient is faking injury or has some other condition that may mimic brain injury, such as pain or anxiety. Here, we’re estimating this possibility at 100% − 80% = 20%.

Defense expert’s Fake Bad Scale test for malingering (a positive test means that the plaintiff is malingering and not really brain-injured).

True positive rate = 90%. This is an approximation from the literature, based on studies of volunteers who pretended to malinger and were administered the Fake Bad Scale (FBS). There are no actual data on the true positive rate for malingering tests; real malingerers conceal what they are doing and typically don’t assemble in groups that can be studied.

False positive rate = 20%. The false positive rate for the FBS has been studied a number of times, but it is not clear how well the test can correctly identify truly brain-injured people (true negatives). The FBS items scored as indicating malingering include wearing glasses, female gender, and other items that are not particularly indicative of malingering (or of any diagnosis beyond myopia and possible pregnancy). Given this, a 20% false positive rate is in all likelihood overly conservative.

Pre-test probability of malingering = 10%. Malingering and real injury are essentially complements; that is, if you add all of the malingerers together with all of the really injured people, you should come up with 100% of the population being tested. Thus, the simplest way to estimate the rate of malingering is to subtract the estimated pre-test probability of real injury from 100%. For the plaintiff test we estimated real injury at 80%, but the remaining 20% is divided between malingerers and people with other conditions that mimic brain injury, so the pre-test probability of malingering must be less than 20%.
Although some estimates of malingering in the literature are as high as 30%, most are much lower. The population being tested has, by definition, already been determined to be truly injured by the original evaluating neuropsychologist. That initial negative screening result for malingering affects the pre-test probability much like the prior negative HIV test did in our life insurance example, and the 10% value may very well be too high.

Validity Ratio Calculations

The following table illustrates the validity ratio of the plaintiff’s test for injury and the defense’s test for malingering. It applies the above estimates of the true and false positive rates and pre-test probabilities to a hypothetical group of 100 plaintiffs for each test.

Validity Assessment of the Plaintiff and Defense Expert Tests

| | Plaintiff test for real injury (100 plaintiffs) | Defense test for malingering (100 plaintiffs) |
|---|---|---|
| Pre-test probability | 0.80 (brain injury etc.) | 0.10 (malingering) |
| Condition present | 80 with real injury | 10 malingering |
| Condition absent | 20 with no brain injury | 90 not malingering |
| True positive rate | 95% | 90% |
| False positive rate | 20% | 20% |
| True positives | 76 | 9 |
| False positives | 4 | 18 |

As demonstrated above, the validity assessment results for the plaintiff’s injury test were 76 correct tests to 4 incorrect tests, a validity ratio of 19 to 1. The results for the defense’s Fake Bad Scale test were 9 correct tests to 18 incorrect tests, a validity ratio of 1 to 2. Thus, the plaintiff’s test exceeded the validity ratio threshold of 10 to 1, and the defense’s test fell below it. As a result, the defense’s test for malingering is not valid, nor can it be considered reliable8 for any forensic application.
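
The same arithmetic can be checked in code. The sketch below is a minimal illustration that assumes the estimated rates above. `validity_ratio` and `minimum_pre_test_probability` are hypothetical helper names, not anything from the authors or a published tool. The second helper inverts the formula to ask how high the pre-test probability would have to be before each test reaches the 10 to 1 threshold.

```python
def validity_ratio(pre_test_probability: float,
                   true_positive_rate: float,
                   false_positive_rate: float) -> float:
    """Expected true positives per false positive for a tested group."""
    p = pre_test_probability
    return (true_positive_rate * p) / (false_positive_rate * (1 - p))


def minimum_pre_test_probability(true_positive_rate: float,
                                 false_positive_rate: float,
                                 threshold: float = 10.0) -> float:
    """Smallest pre-test probability at which the ratio reaches `threshold`.

    Solves TPR * p / (FPR * (1 - p)) = threshold for p.
    """
    required_odds = threshold * false_positive_rate / true_positive_rate
    return required_odds / (1 + required_odds)


# Estimated inputs from the text: (pre-test probability, TPR, FPR)
tests = {
    "plaintiff neuropsychological test": (0.80, 0.95, 0.20),
    "defense Fake Bad Scale": (0.10, 0.90, 0.20),
}

for name, (p, tpr, fpr) in tests.items():
    ratio = validity_ratio(p, tpr, fpr)
    needed = minimum_pre_test_probability(tpr, fpr)
    verdict = "meets" if ratio >= 10 else "falls below"
    print(f"{name}: {ratio:.2f} to 1, {verdict} the 10 to 1 threshold; "
          f"10 to 1 needs a pre-test probability of at least {needed:.2f}")
```

With these estimates, the plaintiff’s test comes out at 19 to 1 and the FBS at 0.5 to 1 (that is, 1 to 2). The quotient of the two, 19 ÷ 0.5, is 38. On these assumed rates, both tests would need a pre-test probability of roughly 0.68 to 0.69 to reach 10 to 1. The outcome therefore turns on the pre-test probability rather than on the tests themselves.
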

Discussion

Two things deserve attention. First, the true and false positive rates for the plaintiff and defense neuropsychology tests were nearly identical. Second, it was the pre-test probabilities that were responsible for the widely divergent results.9 This is because the plaintiff and defense experts take different approaches to explaining the plaintiff’s symptoms in the context of the history of a traffic crash. The plaintiff expert acts like a typical clinician, searching for the most probable explanation for the symptoms and history. This approach results in high pre-test probabilities. The defense expert searches for a reason to point away from the most probable explanation for the symptoms and toward a less probable but still possible explanation. This approach leads to low pre-test probabilities.

It is the nature of the beast, so to speak, that dictates low pre-test probabilities for defense tests for malingering, fraud, faking, no injury, pre-existing injury, and all other minimally probable explanations for complaints of injury following traffic crashes. As long as the most probable explanation for such complaints remains the most obvious explanation, tests performed by plaintiff experts to demonstrate real injury will always be far more accurate than those performed by defense experts to demonstrate malingered injury.

8 Most would agree that a test that is wrong more often than it is right cannot be considered a reliable source of truth in any jurisdiction.

9 For those of you interested in the mathematical relationship between the pre-test probability and the validity ratio, notice the following. At a 0.8 pre-test probability, the plaintiff’s neuropsychology testing was assessed at 19 to 1 true to false positives. At a 0.1 pre-test probability, the defense’s FBS test was assessed at 1 to 2. If we cross multiply and divide (think grade school math: 19/1 ÷ 1/2 = 38), this comes out to an accuracy ratio of 38 to 1 for plaintiff vs.
defense testing, nearly 5 times the pre-test probability ratio of 8 to 1 (0.8 vs. 0.1).

Practical Application

As we have seen, the meaning of a “positive” test is far from straightforward. A seemingly infallible test can be wrong far more often than it’s right (like the life insurance HIV test) because of a third factor that has nothing to do with the test itself: pre-test probability. That idea is far from intuitive. If attorneys wish to do the best for their clients, they need to start asking experts about the accuracy of their tests and how often they expect to find the positive results that they report. In the future, attorneys may want to consider some of the following questions during discovery of defense expert opinions:

1. Are the concepts of accuracy and reliability important in expert testimony?
2. Can the expert define the terms?
3. Are there ways to quantify accuracy? What are they?
4. How often is the expert’s test right, and how often is it wrong? How did the expert arrive at the estimate?
5. Does the expert agree that a test for malingering, etc. needs to be able to identify those who are not malingering as well as those who are?
6. Does the expert know what a false positive rate is?10
7. Does the expert agree that a test that has too many false positives would not be a particularly reliable test?
8. Does the expert agree that an allegation of malingering that is supported by a falsely positive test would cause harm to a person who is legitimately injured?
9. Does the expert know the false positive rate for his test when it is given to a group of 100 people who are truly injured and who, just like the plaintiff, have a history of trauma, immediate onset of symptoms, a diagnosis of traumatic injury, and no prior finding of malingering?11
10. How often would the expert expect to find malingering etc. in a group of 100 people who have a history of trauma, immediate onset of symptoms, a diagnosis of traumatic injury, and no prior finding of malingering? What is the basis for the estimate?12
11. Should an expert who is incapable of answering any of the prior questions be allowed to testify to his conclusions to “a reasonable probability” or (worse yet) “a reasonable certainty”?

10 Another way to ask this question is to ask what the true negative rate is, and then subtract that value from 1 (or 100%) to find the false positive rate.

11 Remember that a false positive rate applies only to people who are really injured.

12 Hopefully by now you recognize this as the pre-test probability.

© Copyright 2026