What is...Linear Logic?

Jonathan Skowera

Introduction

Let us assume for the moment an old definition of logic as the art “directive

of the acts of reason themselves so that humans may proceed orderly, easily

and without error in the very act of reason itself.”¹ This definition reflects

everyday usage: people usually say reasoning is logical or illogical according

to the apparent quality of the reasoning. So assuming there is a human art

of logic, and asking what domain of human activity it governs, a reasonable

guess would be acts of reasoning.

With this in mind, it is not clear at first glance that linear logic falls in the

domain of logic. The logic is called linear logic, and its rules of inference treat

formulas as much like finite resources as propositions. That is a radical difference. With intuitionistic logic, you can rephrase calculus as non-standard

analysis and then define enigmatic (yet consistent!) infinitesimals. But an

analogous “linear analysis” based on linear logic seems unlikely. The logic

lacks inferences any proposition should satisfy.

On the other hand, logicians certainly recognize linear logic immediately

as just another symbolic logic with a formal language, rules of inference and

theory of its models. It is nontrivial with interesting categorical models.

Even better, linear logic has plenty of uses for computer scientists. That’s a

strong selling point in the tradition of the Curry-Howard isomorphism.

It is this counter-intuitive, “what is it?” nature of linear logic which will hopefully fuel your interest, even if it lies outside your everyday working experience.

One caveat: the definitions of logic ground the definitions of mathematics,

but the logician remains free to use any (sound) deductive reasoning whatever

to infer properties of the logic. Logicians are not mathematicians attempting

to turn their eyeballs inwards; that is not an effective way to know oneself.

¹ St. Thomas Aquinas, Expositio libri Posteriorum, quoted in “Did St. Thomas Aquinas justify the transition from ‘is’ to ‘ought’?” by Piotr Lichacz.

So it is perfectly consistent to use mathematical results to prove theorems

about the foundations of mathematics (e.g., the compactness theorem in

logic follows from Tychonoff’s theorem in topology) or analyse their content

(e.g., categories as a semantics for logic), provided that those properties are

unnecessary for grounding mathematics.

More to the point (or to the comma)

Linear logic arises by suppressing the rules of weakening and contraction.

These are rules of the sequent calculus. A sequent looks like

A, B ⊢ C, D

where A, B, C and D are variables standing in for unspecified formulas. If you are seeing this for the first time, I suggest that for this talk you secretly read it as “A and B imply C or D”. That is just a stopgap solution, since it leads to interpreting two different symbols as implication: a formula can itself contain an implication symbol, e.g., A₁, A₁ → A₂ ⊢ A₂ is a sequent in some logic. A better reading of the turnstile ⊢ might be “proves”. For example,

((∀g)ge = g), ((∀g)gg = e) ⊢ ((∀g)(∀h)gh = hg)

where e is a constant symbol. This could easily be viewed as a lemma in the theory of groups which says that g² = e for all g ∈ G implies G is commutative (not directly, but there exists a proof).

Combining valid statements into more complex statements requires rules of inference. A (sound) rule of inference infers one sequent (the conclusion) from another (the premise). This is written by placing the premise over the conclusion in fraction style,

$$\frac{((\forall g)\,ge = g),\ ((\forall g)\,gg = e) \vdash ((\forall g)(\forall h)\,gh = hg)}{((\forall g)\,ge = g) \wedge ((\forall g)\,gg = e) \vdash ((\forall g)(\forall h)\,gh = hg)}$$

where A ∧ B symbolizes “A and B”, resembling the set intersection symbol A ∩ B, which selects the points lying in both A and B. This could be viewed as combining the two “theorems” on the left hand side into a single theorem which

continues to imply the theorem on the right hand side. The small size of

the above logical step admittedly makes the above inference mathematically

uninteresting. The steps of a proof usually make much bigger leaps. But

for the study of the proof system itself, restricting to a few simple rules of

inference greatly simplifies the analysis of the proof system and, as long as

the simple rules of inference of the logician compose to form the complex

inferences of the mathematician, there is no loss of generality.


The three forms of inference could be viewed as sorting mathematics into

three levels:

A → B represents the sort of implication within the statement of a proposition, e.g., if A is true, then B is true.

A ⊢ B is the sort of implication between propositions, e.g., assuming A one can prove B.

$\frac{A \vdash B}{C \vdash D}$ is the sort of implication performed in the process of proving, e.g., given a proof of B from A one can prove D from C. In this sense, the rules of inference are rules for rewriting.

Newcomers should be warned that this innocuous looking interpretation actually relates to a contentious philosophy called intuitionism that views a

proposition A especially as the claim that one has a proof of A (that one

has reduced A to steps which intuition can verify). This leads to contention,

because the age-old law of the excluded middle² does not satisfy this interpretation, i.e., it is not true that one either has a proof of A or a proof of

¬A. For example, if A is independent of the axioms, then neither A nor ¬A

is provable, and one may freely decide whether to assume A or ¬A.

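Let Γ, Γ′, Δ, Δ′, etc. be finite sequences of formulas³, and A, B, C, … be formulas. A certain number of inferences should be valid under the above natural language interpretation of sequents,

$$\frac{}{A \vdash A}\;\mathrm{ax}$$

i.e., a formula proves itself, and also,

$$\frac{\Gamma, A \vdash \Delta}{\Gamma, A \wedge B \vdash \Delta}\;L\wedge \qquad \frac{\Gamma \vdash \Delta, A \qquad \Gamma \vdash \Delta, B}{\Gamma \vdash \Delta, A \wedge B}\;R\wedge$$

$$\frac{\Gamma, A, B \vdash \Delta}{\Gamma, A \wedge B \vdash \Delta}\;L\wedge' \qquad \frac{\Gamma \vdash \Delta, A \qquad \Gamma' \vdash \Delta', B}{\Gamma, \Gamma' \vdash \Delta, \Delta', A \wedge B}\;R\wedge'$$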

There should also be a few banal rules about the commas,

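$$\frac{\Gamma, A, B, \Gamma' \vdash \Delta}{\Gamma, B, A, \Gamma' \vdash \Delta}\;\mathrm{exch} \qquad \frac{\Gamma \vdash \Delta}{\Gamma, A \vdash \Delta}\;\mathrm{weak} \qquad \frac{\Gamma, A, A \vdash \Delta}{\Gamma, A \vdash \Delta}\;\mathrm{contr}$$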

and their right-side analogues. The first is called the exchange rule. The last

two are called weakening and contraction, respectively.
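To see what these three rules do to the hypotheses, here is a minimal Python sketch. It is only an illustration, not part of the formal system: the left side of a sequent is modelled as a tuple of formula names, and the function names `exchange`, `weaken` and `contract` are made up for this example. Each function maps the hypotheses of the premise to those of the conclusion.

```python
def exchange(gamma, i):
    """Exchange rule: swap the hypotheses at positions i and i + 1."""
    g = list(gamma)
    g[i], g[i + 1] = g[i + 1], g[i]
    return tuple(g)


def weaken(gamma, a):
    """Weakening rule: the conclusion carries an extra, unused hypothesis a."""
    return tuple(gamma) + (a,)


def contract(gamma, a):
    """Contraction rule: two copies of a in the premise become one."""
    g = list(gamma)
    if g.count(a) < 2:
        raise ValueError("contraction needs two copies of the formula")
    g.remove(a)  # removes the first occurrence only
    return tuple(g)


hyps = ("A", "B")
print(exchange(hyps, 0))               # ('B', 'A')
print(weaken(hyps, "C"))               # ('A', 'B', 'C')
print(contract(("A", "A", "B"), "A"))  # ('A', 'B')
```

Weakening makes a hypothesis appear from nowhere and contraction makes a copy disappear, which is exactly the behaviour a resource-minded logic wants to control.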

² The law of the excluded middle says that either a proposition or its negation holds: ⊢ A ∨ ¬A.

³ This follows the very well-written notes, Lectures on Linear Logic, by A.S. Troelstra.

Keeping track of formulas In order to treat formulas more like resources,

their spontaneous appearance and disappearance should be restricted. In

other words, the weakening and contraction rules should be omitted. This

naturally affects the other rules.

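Assuming weakening and contraction, the rules L∧ and R∧ are equivalent respectively to L∧′ and R∧′. For example, R∧′ and R∧ are equivalent in the presence of the weakening and contraction rules (Δ is omitted for simplicity):

$$\frac{\dfrac{\Gamma \vdash A}{\Gamma, \Gamma' \vdash A}\;w \qquad \dfrac{\Gamma' \vdash B}{\Gamma, \Gamma' \vdash B}\;w}{\Gamma, \Gamma' \vdash A \wedge B}\;R\wedge \qquad\qquad \frac{\dfrac{\Gamma \vdash A \qquad \Gamma \vdash B}{\Gamma, \Gamma \vdash A \wedge B}\;R\wedge'}{\Gamma \vdash A \wedge B}\;c$$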

Without weakening and contraction, these are no longer equivalent, so we could legitimately distinguish between the ∧ of the first two rules, and the connective of the second two rules, call it ∧′. That is just what we’ll do, but the first connective will instead be called & (with), and the second connective will be called ⊗ (tensor). The ⊗ rules apply in any context (they are context-free), while the & rule R∧ requires the contexts of the two premises to agree (it is context-sensitive).

Another way to divide the rules? We might attempt to divvy up the rules among the connectives differently. For example, we might define a connective with rules R∧ and L∧′. In that case, as soon as we add the cut rule (see below), we can derive the rule of contraction. Weakening follows from the complementary choice. So if ∧ is either replaced by two connectives in the wrong way, or kept as it is, the rules of weakening and contraction can be derived. Hence linear logic is forced upon us by the single choice to suppress weakening and contraction.

To sum up, we have arrived at rules

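$$\frac{\Gamma, A \vdash \Delta}{\Gamma, A \mathbin{\&} B \vdash \Delta}\;L\& \qquad \frac{\Gamma \vdash \Delta, A \qquad \Gamma \vdash \Delta, B}{\Gamma \vdash \Delta, A \mathbin{\&} B}\;R\&$$

$$\frac{\Gamma, A, B \vdash \Delta}{\Gamma, A \otimes B \vdash \Delta}\;L\otimes \qquad \frac{\Gamma \vdash \Delta, A \qquad \Gamma' \vdash \Delta', B}{\Gamma, \Gamma' \vdash \Delta, \Delta', A \otimes B}\;R\otimes$$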
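The difference between the two right rules is easiest to see by counting hypotheses. The following Python sketch is an illustration under simplifying assumptions: Δ is dropped, a sequent Γ ⊢ A is a pair of a `Counter` (the context) and a string (the formula), and the names `right_with` and `right_tensor` are hypothetical.

```python
from collections import Counter


def right_with(premise_a, premise_b):
    """R&: both premises must have the same context, which is kept once."""
    (gamma_a, a), (gamma_b, b) = premise_a, premise_b
    if gamma_a != gamma_b:
        raise ValueError("R& needs identical contexts in both premises")
    return (gamma_a, f"({a} & {b})")


def right_tensor(premise_a, premise_b):
    """R-tensor: the contexts of the two premises are put side by side."""
    (gamma_a, a), (gamma_b, b) = premise_a, premise_b
    return (gamma_a + gamma_b, f"({a} ⊗ {b})")


coin = Counter({"coin": 1})
proves_a = (coin, "A")  # coin ⊢ A
proves_b = (coin, "B")  # coin ⊢ B

print(right_with(proves_a, proves_b))    # the context still holds one coin
print(right_tensor(proves_a, proves_b))  # the context now holds two coins
```

With one coin you can be offered A & B, but A ⊗ B costs two coins.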

Linear logic was proposed by the French logician Jean-Yves Girard in

1986. It seems to have been a rare case of a logician single-handedly proposing

an entire theory. Though published, the principal paper [Gir87] was not

subjected to the normal review process because of its length (102pp) and

novelty.


Does it have any negatives?

The modifications to classical logic multiplied connectives, but they do not

multiply negatives. There will still be a single negation operator called dual

and written using the orthogonal vector sub-space notation A⊥ .

At this point, a few assumptions will simplify the presentation without

affecting the expressiveness of the logic.

1. Applications of the exchange rule are ignored by letting Γ, Δ, etc. denote multisets instead of sequences. (A multiset is a function f : S → ℤ_{>0} assigning a positive integer, its multiplicity, to each element of a set.)

2. The right-hand side of a sequent suffices to express all possible sequents, since formulas can be moved across the turnstile by the rules

$$\frac{\Gamma \vdash A, \Delta}{\Gamma, A^\perp \vdash \Delta} \qquad \frac{\Gamma, A \vdash \Delta}{\Gamma \vdash A^\perp, \Delta}$$

So all sequents will be assumed to be of the form ⊢ Δ.

3. The negation operator does not appear in the signature of the logic.

Instead of being a connective, the dual is an involution on atomic formulas which induces an involution on all formulas by a few simple rules

(see below) [?, p.68].

This removes the unnecessary bookkeeping of moving between equivalent expressions of a single formula.

New connectives The introduction of negation doubles the number of

connectives in analogy with the relation ¬(A ∧ B) = ¬A ∨ ¬B. Negating

and’s should give or’s. Negating ⊗ gives a connective which we will write ⅋ (par, as in “parallel or”) and negating & gives ⊕ (plus). In other words, negation satisfies

A⊥⊥ = A

(A ⊗ B)⊥ = A⊥ ⅋ B⊥

(A & B)⊥ = A⊥ ⊕ B⊥

(A ⅋ B)⊥ = A⊥ ⊗ B⊥

(A ⊕ B)⊥ = A⊥ & B⊥

These properties determine the rules of inference for ⅋ and ⊕ (just switch the rules for ⊗ and & to the left hand side of the turnstile). They will be summarized below.
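Since the dual is defined on atoms and pushed through the connectives by the equations above, it can be computed by a short recursion. Here is a Python sketch; the encoding of formulas as nested tuples and the names `atom`, `dual` and `DUAL_OF` are assumptions made for this illustration.

```python
# Each connective is exchanged with its De Morgan partner.
DUAL_OF = {"tensor": "par", "par": "tensor", "with": "plus", "plus": "with"}


def atom(name, positive=True):
    """An atomic formula; positive=False stands for the dual atom."""
    return ("atom", name, positive)


def dual(formula):
    """Compute the dual by pushing negation down to the atoms."""
    if formula[0] == "atom":
        _, name, positive = formula
        return ("atom", name, not positive)
    connective, left, right = formula
    return (DUAL_OF[connective], dual(left), dual(right))


a, b = atom("A"), atom("B")
formula = ("tensor", a, ("with", a, b))  # A ⊗ (A & B)

print(dual(formula))                     # encodes A⊥ ⅋ (A⊥ ⊕ B⊥)
print(dual(dual(formula)) == formula)    # True: the dual is an involution
```

Note that no negation connective appears in the data structure: negation lives only on the atoms, as in assumption 3 above.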

The connectives differ chiefly by how they handle the context of a formula.

The context of the conclusion of the & and ⊕ rules agrees with the context of

each premise. These are called additive connectives. On the other hand, the

rules for ⊗ and ⅋ do not restrict the context of the affected formulas, and

the conclusion concatenates the contexts of the premises. These are called

multiplicative connectives.

Multiplicatives ⊗ (multiplicative ∧) and ⅋ (multiplicative ∨) give an embedding of classical logic.

Additives & (additive ∧) and ⊕ (additive ∨) give an embedding of intuitionistic logic, cf. Section .

As for the choice of symbols, it might at first glance seem better to label

the negative of ⊗ as ⊕ instead of ⅋. The symbols are assigned so that the relation between ⊗ and ⊕ is U ⊗ (V ⊕ W) ≅ (U ⊗ V) ⊕ (U ⊗ W). The choice of

symbols reflects this relation, but proving it requires a complete list of rules

of inference, and we aren’t quite there yet.

Implication Using the negative, implication can be defined in analogy with

the classical case where A → B = ¬A ∨ B. In other words, implication is

true when either the premise A is false or the conclusion B is true.

If that seems odd to you, you are not the first to think so. This implies,

for example, that if A is true, then B → A is true for all B. This could make

irrelevant events seem to have cosmic ramifications: “If you will give me some Güetzli, then 2 and 2 will make 4,” a child might say, and be correct! The

truth of the statement A → (B → A) lies at the root of the strangeness.

In any case, mimicking the definition ¬A ∨ B in linear logic gives the definition,

A ⊸ B := A⊥ ⅋ B

So the implication ⊸ is not a connective of the logic but a shorthand. Since the ∨ split into ⊕ as well as ⅋, one might also try defining implication as $A \multimap B \overset{!}{=} A^\perp \oplus B$. This does not work for the philosophical reason that the additives & and ⊕ have intuitionistic properties whereas the negation ⊥ used in the definition of the implication has classical properties (namely A⊥⊥ = A). This mismatch would produce undesirable results.

Interpretation As promised, these symbols result in a resource-aware logic, and with all in hand a menu can be written:

(10CHF) ⊗ (10CHF) ⊸ (Salad) ⊗ ((Pasta) & (Rice)) ⊗ ((Apricots) ⊕ (CarrotCake))

The interpretation:

⊗ : receive both

⊸ : give the left side and receive the right side (convert resources)

& : receive one or the other (you choose)

⊕ : receive one or the other (you don’t choose), e.g., apricots in spring and summer, otherwise cake

When a formula appears negated, its interpretation should be reversed (receive ↔ give). Since moving formulas across ⊸ negates them, the ⊗ on the left side should be read as giving instead of receiving. The interpretations come from

reading the rules of inference for each connective backwards (from bottom to

top). The multiplicative A⊗B saves the context of both variables, so reading

upwards, we have enough resources (the contexts) to follow both branches.

But the additives & and ⊕ save only one copy of the context, so there are

only enough resources to follow one or the other branch upward.

In that case, why not view the & as a type of or ? The menu interpretation

concerns the contexts of a & connective, whereas the interpretation of & as

a type of and concerns the interpretation of A & B in terms of true/false

values. Since the formula A & B ⊸ A is provable, & cannot be a type of or.

It might look like the ⅋ connective was omitted, but it hides in A ⊸ B, which is A⊥ ⅋ B by definition.

Exponentials What if we would like to have our cake and eat it too? This

is the raison d’être of the so-called exponentials, ! and ?:

$$\frac{\vdash \text{cake}}{\vdash \text{cake},\ ?(\text{cake} \multimap \bot)}$$

The exponentials are unary operators resembling modalities. They allow controlled application of the weakening and contraction rules. In other words, they allow the inferences

$$\frac{\vdash A}{\vdash A, ?B} \qquad \frac{\vdash ?A, ?A}{\vdash ?A}$$

Though it is simple, the idea works. The negation operator ensures there

will be two different exponentials which are related by (!A)⊥ = ?(A⊥). Their eccentric names are “of course” for ! and “why not” for ?. But two questions still

need to be addressed.

1. How should the rules handle the context of A, i.e., other formulas in

the same sequent?

2. In what ways should one be permitted to introduce exponentials?

These are called exponentials because they convert additive connectives

into multiplicative ones:

!(A & B) ≡ (!A ⊗ !B)

?(A ⊕ B) ≡ (?A ⅋ ?B)

where the equivalence A ≡ B means that the formula (A ⊸ B) & (B ⊸ A) is derivable.

Units Not just the connectives of classical logic but also the constants T and F multiply. The equation T ∧ T = T becomes

1 ⊗ 1 = 1

⊤ & ⊤ = ⊤

The notation is again confusing to the newcomer:

1⊥ = ⊥

⊤⊥ = 0

Cutting

The cut rule puts a finishing touch on the rules of inference. Just as the axiom rule allows the introduction of formulas into a proof, so the cut rule allows the removal of formulas.

$$\frac{\vdash \Delta, A \qquad \vdash \Delta', A^\perp}{\vdash \Delta, \Delta'}\;\mathrm{cut}$$

Given the above rules, cut implies (but is not equivalent to) the familiar rule

of modus ponens. Even if the name sounds unfamiliar, you use this rule every

day. It says, if A implies B and A is true, then B is true,

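$$\frac{\vdash A \multimap B \qquad \vdash A}{\vdash B}$$

The proof of modus ponens using the cut rule:

$$\frac{\vdash A \multimap B \qquad \dfrac{\vdash A \qquad \dfrac{}{\vdash B, B^\perp}\;\mathrm{ax}}{\vdash A \otimes B^\perp, B}\;\otimes}{\vdash B}\;\mathrm{cut}$$

where the identification A ⊗ B⊥ = (A⊥ ⅋ B)⊥ = (A ⊸ B)⊥ has been used.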
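The last step of this proof can be replayed mechanically. The Python sketch below is an illustration only: one-sided sequents are `Counter` multisets of formulas encoded as nested tuples, restricted to the multiplicative connectives, and the names `dual`, `lolli` and `cut` are hypothetical.

```python
from collections import Counter

DUAL_OF = {"tensor": "par", "par": "tensor"}


def dual(formula):
    """Dual of a multiplicative formula; atoms carry a polarity flag."""
    if formula[0] == "atom":
        return ("atom", formula[1], not formula[2])
    return (DUAL_OF[formula[0]], dual(formula[1]), dual(formula[2]))


def lolli(a, b):
    """A ⊸ B is shorthand for A⊥ ⅋ B."""
    return ("par", dual(a), b)


def cut(seq1, seq2, a):
    """From ⊢ Δ, A and ⊢ Δ′, A⊥ infer ⊢ Δ, Δ′."""
    if seq1[a] < 1 or seq2[dual(a)] < 1:
        raise ValueError("the cut formula is missing from a premise")
    return (seq1 - Counter([a])) + (seq2 - Counter([dual(a)]))


A = ("atom", "A", True)
B = ("atom", "B", True)
implication = lolli(A, B)

left = Counter([implication])                # ⊢ A ⊸ B
right = Counter([("tensor", A, dual(B)), B]) # ⊢ A ⊗ B⊥, B

conclusion = cut(left, right, implication)
print(conclusion == Counter([B]))            # True: only ⊢ B remains
```

The check inside `cut` succeeds precisely because `dual(lolli(A, B))` evaluates to the encoding of A ⊗ B⊥, which is the identification used in the proof.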

Now the rules of inference for linear logic can be listed in Table 1.

The cut rule is the only way of removing formulas when proving, but it

adds no inferential power to the proof system. In fact, all the cuts can be

removed by a simple process called cut elimination. Figure 1 shows the two

cut elimination operations for proofs in MLL⁻. It has two rules: one for

cuts of atomic formulas and one for cuts of composite formulas.

Proposition 1. [?, Section 3.14] Linear logic permits cut elimination.

As an algorithm applicable to all linear logic proofs, cut elimination serves

as a model of computation. In this regard, it resembles modus ponens, which corresponds to function application in λ-calculus.

The contexts play no active role in cut elimination; it is entirely local.

Cut elimination terminates in linear time (linear in the number of premises).

8

LL

⊥

ax

` A, A

` Γ, A, B

`

` Γ, A ` B

` Γ, A

` Γ, B

&

` Γ, A & B

`?∆, A

!

`?∆, !A

` ∆, ?A, ?A

c

` ∆, ?A

M

A

E

` Γ, A

` ∆, A⊥

cut

` Γ, ∆

` Γ, A

` ∆, B

`Γ

1

0

⊗

`1

` Γ, 0

` Γ, ∆, A ⊗ B

` Γ, A

` Γ, B

>

⊕1

⊕2

` Γ, >

` Γ, A ⊕ B

` Γ, A ⊕ B

` A, ∆

?

`?A, ∆

`∆ w

` ∆, ?A

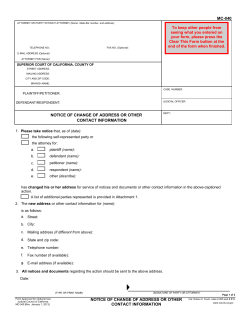

Table 1: Rules of inference for linear logic. Γ and ∆ are multisets of formulas.

The labels on the left specify fragments of the logic. For example, the LL, M

and E rows comprise the MELL fragment of linear logic. A superscript minus

sign indicates that units should be excluded from the logic. For example,

MLL− should be the LL and M rows without the rules about 1 and 0.

[Figure 1: Cut elimination for MLL⁻, where m = ⊗, ⅋ is a multiplicative connective. A cut between A m B and A⊥ m⊥ B⊥ is rewritten as a cut between A and A⊥ together with a cut between B and B⊥; a cut between A and an axiom link A, A⊥ is rewritten as A.]

Proof nets

Proof nets surpass sequent calculus proofs by eliminating the bureaucracy

of proofs, to use Girard’s dramatic phrase. For example, the following two

proofs differ by a necessary but arbitrary choice (applications of the exchange

rule, as above, are left implicit),

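$$\frac{\dfrac{\vdash A, B, C \qquad \vdash D}{\vdash A, B, C \otimes D}}{\vdash A \mathbin{⅋} B, C \otimes D} \qquad\qquad \frac{\dfrac{\vdash A, B, C}{\vdash A \mathbin{⅋} B, C} \qquad \vdash D}{\vdash A \mathbin{⅋} B, C \otimes D}$$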

These correspond to the same proof net pictured in Figure 2.

A proof net is a particular type of proof structure, and a proof structure is a sort of graph. Their precise graphical representation varies slightly from place to place (sometimes they are even defined as a set of “links”, e.g., a ⊗-link taking A, B to A ⊗ B, with the graphical expression left implicit), so the below is an improvised definition to fit the mathematical definition of a graph (every edge connects exactly two vertices) and remain simple (there is exactly one label for each edge).

[Figure 2: A single proof structure which corresponds to multiple proofs.]

Definition 2 (Proof structure). A proof structure is a graph with vertices

labelled by i (input), o (output), ⅋, ⊗, a (axiom) or c (cut) and edges labelled by formulas. The edge labels must be compatible with the vertex labels, e.g., the edges of a ⅋ should be labelled A, B and A ⅋ B. Exactly three edges must be attached to ⅋ and ⊗ vertices, exactly two edges to a and c vertices, and exactly one edge to i and o vertices. The only exception is the i-vertex, which may also be connected by one or two edges to other i-vertices to symbolize formulas appearing in a single sequent (these edges distinguish ⊢ A, B from the pair ⊢ A and ⊢ B).

The immediate question arises: which proof structures come from sequent

calculus proofs? Correctness criteria answer this question. A proof structure satisfying a correctness criterion is called a proof net and comes from

a sequent calculus proof. MLL admits a rather simple correctness criterion.

But it requires a further definition.

Definition 3 (Switch). Given a proof structure P, a switch of P is the unlabelled graph associated to P with every ⅋-vertex replaced by an edge joining one of its two premises to its conclusion (see Figure 3). Hence if N is the number of ⅋-vertices in a proof structure, then there are 2^N switches associated to the proof structure.

Some proof structures are not the image of any MLL sequent proof.

Definition 4. A proof net is a proof structure whose switches are connected

and acyclic.

Proposition 5. Proof nets are exactly those proof structures which come

from proofs.
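The criterion of Definition 4 is easy to test by brute force on small examples. The sketch below is a simplified illustration, not the exact graph of Definition 2: a structure is given by its vertices, the edges that are always present, and, for each ⅋-vertex, its two premise edges, of which a switch keeps exactly one. The names `is_tree` and `is_proof_net` are made up for this example.

```python
from itertools import product


def is_tree(vertices, edges):
    """True if the undirected multigraph is connected and acyclic."""
    parent = {v: v for v in vertices}

    def find(v):
        # Union-find lookup with path halving.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for u, w in edges:
        root_u, root_w = find(u), find(w)
        if root_u == root_w:
            return False  # this edge closes a cycle
        parent[root_u] = root_w
    return len({find(v) for v in vertices}) == 1


def is_proof_net(vertices, fixed_edges, par_premises):
    """Check all 2^N switches: keep one premise edge per par vertex."""
    for choice in product(*par_premises):
        if not is_tree(vertices, fixed_edges + list(choice)):
            return False
    return True


# ⊢ A⊥ ⅋ A: an axiom link feeds both premises of a par.
par_structure = is_proof_net(
    ["ax", "par", "o"],
    [("par", "o")],
    [[("ax", "par"), ("ax", "par")]],
)
# The same shape with ⊗: both premise edges are always present.
tensor_structure = is_proof_net(
    ["ax", "tensor", "o"],
    [("tensor", "o"), ("ax", "tensor"), ("ax", "tensor")],
    [],
)
print(par_structure, tensor_structure)  # True False
```

The second structure fails because its only switch contains a cycle, matching the fact that ⊢ A⊥ ⊗ A has no sequent proof. Enumerating all switches takes exponential time in the number of ⅋-vertices, so this is a check for toy examples only.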

From a proof net, a proof can be produced. Not surprisingly, this process

is named sequentialization.

How does this compare with classical logic?

[Figure 3: The par link in (a) is replaced by one of the two switches in (b).]

Back to classical logic

It would be nice to have a way to allow weakening and contraction for particular formulas as desired. A unary operator resembling a modality enables the

transition back to intuitionistic logic. The operator ! is called the exponential

modality. The embedding of intuitionistic logic is generated by

A ∧ B ↦ A & B

A ∨ B ↦ !A ⊕ !B

A → B ↦ !A ⊸ B

T ↦ ⊤

F ↦ 0

where A and B are atomic formulas. Then provability in intuitionistic logic

coincides with provability in (exponential) linear logic. [?, Section 1.3.3]
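The five clauses can be read as a recursive translation. The Python sketch below assumes that “generated by” means the clauses are applied to compound formulas from the inside out; the tuple encoding of intuitionistic formulas and the name `embed` are choices made for this illustration.

```python
def embed(formula):
    """Translate an intuitionistic formula into linear logic, clause by clause."""
    if isinstance(formula, str):
        # Atoms are strings; the constants T and F become ⊤ and 0.
        return {"T": "⊤", "F": "0"}.get(formula, formula)
    connective, left, right = formula
    a, b = embed(left), embed(right)
    if connective == "and":
        return f"({a} & {b})"
    if connective == "or":
        return f"(!{a} ⊕ !{b})"
    if connective == "imp":
        return f"(!{a} ⊸ {b})"
    raise ValueError(f"unknown connective: {connective}")


# (A ∧ B) → (A ∨ F)
print(embed(("imp", ("and", "A", "B"), ("or", "A", "F"))))
# prints (!(A & B) ⊸ (!A ⊕ !0))
```

The exponential ! appears exactly where the intuitionistic proof may reuse or discard a hypothesis.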

Categorical models

A model of classical and linear logic is:

$$(C, \times, e) \;\overset{l}{\underset{c}{\rightleftarrows}}\; (L, \otimes, 1)$$

where

• Classical logic: (C, ×, e) is a cartesian category with finite products and terminal object e

• Linear logic: (L, ⊗, 1) is a symmetric monoidal closed category with product ⊗ and identity 1

• c is symmetric monoidal and takes a linear formula and makes it classical

• l is symmetric monoidal and takes a classical formula and makes it linear

• l is the left-adjoint to c: L(l(φ), λ) ≅ C(φ, c(λ))

• The exponential modality is the composition l ∘ c : L → L.

Given a model of the linear logic, there are at least three ways to form

an associated classical logic:

1. (comonads) In this case C is the category of monoids in the functor category End(L)^op:

C := comon(L, ⊗, e) = {(κ : L → L, δ : κ ⟹ κ ∘ κ, ε : κ ⟹ id)}

2. (algebras of comonad) Given a comonad T on L, C := L_T, where

Ob(L_T) = Ob(L)

L_T(A, B) = L(TA, B)

3. (coalgebras of comonad) Given a comonad T on L, C := L^T, where

Ob(L^T) = ∪_{A∈L} L(A, TA)

L^T(A → TA, B → TB) = L(A, B) commuting with structure morphism, comultiplicatio

Bibliography

[Gir87] J.-Y. Girard, Linear logic, Theoretical Computer Science 50 (1987),

no. 1, 1–102.
