Why GPUs? 07.04.2011 GPU optimizations at RRZE GPU

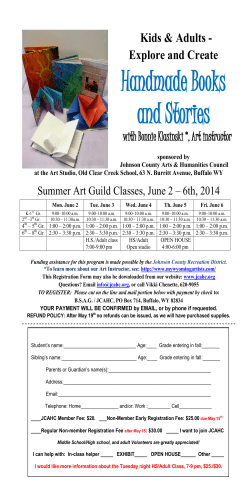

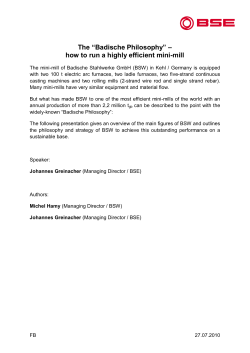

07.04.2011 GPU optimizations at RRZE GPU--Computing Workshop GPU Why GPUs? J. Habich(a), Prof. Dr. G. Wellein(a,b) , C. Feichtinger(b) (a)HPC Services – Regional ComputingCenter Erlangen of Computer Science (b)Department April 6th 2011 University Erlangen-Nürnberg Peak Performance of CPU vs. GPU [email protected] 2 Peak Memory Bandwidth of CPU vs. GPU Consumer Cards Consumer Cards Professional HP Cards 6x 4x Single Chip Single Chip April 6th 2011 [email protected] 3 April 6th 2011 [email protected] 4 1 07.04.2011 Outline GPGPU CUDA Hardware Memory performance by massive parallelism Iterative Solvers Lattice Boltzmann on GPUs April 6th 2011 [email protected] GPGPU CUDA Hardware 5 Specifications of the NVIDIA Fermi GPU April 6th 2011 Up to 6 GB of global memory (DRAM) 32 processors SP driven by : Single Instruction Multiple Data (SIMD) Single Instruction Multiple Thread (SIMT) Explicit in-order architecture 32K Registers 48 KB of local on-chip memory (shared memory) 1500 MHz DDR 384 bit bus Global gather/scatter possible 144 GB/s bandwidth 16 GB/s PCIe 2.0 x16 interface to CPU 1st and 2nd level Cache hierarchy clock rate of 1.15 GHz Memory Memory Clock Peak Memory Memory Interface Bandwidth (GB) Clock (MHz) (MHz) (GFLOPs) (bit) (GB/sec) 1030 GFLOP/s (single precision) 515 GFLOP/s (double precision) 1150 1030 6 1500 384 144 GeForce GTX 280 1400 Tesla C2070 1000 1 1160 512 148.6 1350 345 0.768 900 384 86 Host ( Westmere) 2.66 255 24 1333 3*64 63 GeForce 8800 GTX [email protected] 6 Specifications of the NVIDIA Fermi GPU 14 Multiprocessors (MP); each with: April 6th 2011 [email protected] 7 April 6th 2011 [email protected] 8 2 07.04.2011 Features and paradigm of the CUDA toolkit Remember: OpenMP 0 Thread 0 Typical coarse-grained scheduling using contiguous chunks Thread 1 Divide domain to huge chunks 1 2 3 Memory Bandwidth on GPUs April 6th 2011 Thread 2 5 [email protected] 9 Features and paradigm of the CUDA toolkit Now: CUDA Block 1 Cyclic mapping between workload and threads is often helpful Block 2 April 6th 2011 Parallelize the most outer loop to minimize overhead [email protected] 10 Features and paradigm of the CUDA toolkit Example: Streamcopy-benchmark (GPU) 32 Blocks, 32 Threads each Fine-grained workload scheduling! No caches no consecutive data access within a thread required Divide domain/loop into small chunks Equally distribute to threads 4 Thread 0 0 Thread 1 1 Thread 2 2 Thread 0 3 Thread 1 4 Thread 2 5 __global__ void vectorCopyGPUOuterIter 1st element Block 0 Thread 0 33rd element Block 1 Thread 0 (float *d_C, float *d_A, int DATA_N){ 1025th element Block 0 Thread 0 for ( int pos = (blockIdx.x * blockDim.x) + threadIdx.x; pos < DATA_N ; pos += blockDim.x*gridDim.x bl kDi * idDi ) { ID of current thread d_C[pos] = d_A[pos]; ID of current block Number of threads per } block __syncthreads(); } Jump across all Elements April 6th 2011 [email protected] 11 April 6th 2011 [email protected] 12 3 07.04.2011 Memory bandwidth case study CUDA Scheduling impacted by Resource limits How much parallelism is needed? Example: Streamcopy C=A Resources per Streaming Multiprocessor (MP) 32 000 (32bit) Register 16 KB to 48 KB of Shared Memory 2010 Tesla 2008 Tesla Paralellism is limited by resource usage per Block/Thread 2007 Consumer AMD Magny Cours 1536 threads can be executed/scheduled parallel per MP 0 Registers eg s e s pe per Thread ead 20 10 to 35 byte shared memory per Thread (GT300 allows switching) Using more registers will decrease parallel threads per MP Threads 1536 1024 512 Registers • Not enough blocks • GPUs not used perfectly April 6th 2011 20 30 62 384 256 128 64 83 125 250 500 • Not enough work per blocks • Threads run empty [email protected] 13 April 6th 2011 [email protected] 14 PCI Express bandwidth measurements PCIe Gen 2.0: Theoretically 8 GB/s per direction Pinned host memory is mandatory! Blocked data data-copy copy only improves unpinned memory transfer Iterative Jacobi Solver on GPUs Cuda Version 2.3 nVIDIA GTX 280 float * h_A; float MAXMEMORY = 1024*1024*512 cudaHostAlloc( (void**)&h_A, (sizeof (float) * MAXMEMORY), 0) ; April 6th 2011 [email protected] 15 April 6th 2011 [email protected] 16 4 07.04.2011 Seminar work: Moritz Kreutzer Evaluation of real SP to DP ratio Jacobi solver on a 3-dimensional domain Case Study: (Ω = [0; 1]³ ) using CUDA Jacobi iterative solver (6 point stencil) Loads 6 elements / Stores 1 element Underlying PDE: Poisson’s equation with right hand side 0 Does 6 FLOPs (implementation dependent) ∆u = f ≡ 0 Algorithmic balance between 4.6 (sp) byte/flop and 9.3(dp) byte/flop Sinusoidal boundary y conditions with a maximum at each face A kernel running at 1 Gflop/s needs 9.3 Gbyte/s of data 6-point stencil: gdst (x; y ; z) = a · ( gsrc (x+1; y ; z) + gsrc (x - 1; y ; z) + gsrc (x; y+1; z) + gsrc (x; y - 1; z) + gsrc (x; y ; z+1) + gsrc (x; y ; z - 1)) Tesla Top Speed 9/18 GFLOPs (dp/sp) Far away from any floating point barrier Watch all occuring flops and integer calculations 6 Flops, 6 reads, 1 write per lattice site update (LUP) April 6th 2011 [email protected] 17 April 6th 2011 [email protected] 18 Jacobi on GPUs Shared memory access One GPU-block treats 3 dim. Block of domain Threads within a block can share data through a 16 KB shared memory One thread per node of GPU-Block Use of shared memory, e.g. for simple blocking Threads load central point for neighbors (central stripe) Element gsrc (x; y ; z) is stored into shared memory for all threads ( = 1;…; (x 1 di X – 1) dimX Access to elements gsrc (x + 1; y ; z) and gsrc (x - 1; y ; z) via shared memory Extension to multiple stripes: load complete stencil data to shared memory in advance April 6th 2011 [email protected] 19 April 6th 2011 [email protected] 20 5 07.04.2011 Jacobi on GPUs Jacobi on GPUs Texture Cache Padding GPUs do not comprise ordinary caches known from CPUs needed to obtain coalesced memory access sensible only in x-direction (adjacent elements in x-direction are adjacent in memory) However, it is possible to exploit the Texture Cache Source grid is mapped to a texture Repetitive read operations can be satisfied by this fast cache with much higher performance Consider boundary/ghost layer when calculating optimal padding Swap grids re-map source grid April 6th 2011 [email protected] 21 April 6th 2011 [email protected] 22 Jacobi on GPUs: Results Speedup of 2 for cached algorithms Double precision leads to 50 % reduction as known from CPUs Memory bound execution Lattice Boltzmann on GPUs April 6th 2011 [email protected] 23 April 6th 2011 [email protected] 24 6 07.04.2011 The lattice Boltzmann method The lattice Boltzmann method f(0:xMax+1,0:yMax+1,0:zMax+1,0:18,0:1) Incompressible flow solver x = threadIdx.x ; // set i index of current cell y = blockIdx.x+1; // set j index of current cell z = blockIdx.y+1; // set k index of current cell Explicit, fully discrete Boltzmann equation with BGK collision operator 0, t) 1, t) 2, t) 3, t) y-1, z-1,18, t) 1st order accurate in time SAVE f(x, Pull/collision optimized layout y, n io Relaxation (complex computations) s en f( x, z, z, z, z, m Di 2nd order accurate in space f( x, y, f( x+1, y+1, f( x, y+1, f( x-1, y+1, X- LOAD LOAD LOAD LOAD … LOAD Physical discretization: D3Q19 Z-Dimension if( fluidcell(x,y,z) ) then z, 0:18, t+1) endif Halfway bounce-back for obstacle treatment April 6th 2011 [email protected] 25 April 6th 2011 [email protected] Pure kernel (SP), no PCIe/IB transfer Kernel with boundary transfer (SP), no IB Maximum performance starting at 50x50x50 Maximum performance starting at 200x200x200 (64 times more than pure kernel (50x50x50)!!) Fluctuations due to different thread numbers and influence of alignment Blocks influence kernel with any domainsize Blocks influence kernel Domains < 200x200x200 Comparison: Xeon Node ~100 MLUPS LUPS: Lattice updates per second April 6th 2011 [email protected] 26 28% is lost for 64 blocks Why? 27 April 6th 2011 [email protected] 28 7 07.04.2011 Time measurements of kernel with 1 and 64 blocks Performance on GPU Domains > 250^3 about 50% of execution time is spent in nonkernel parts C2050 speedup of 2 compared to full 12 core Intel Westmere in dp 75% of attainable memory bandwidth Kernel execution time is constant no matter how much blocks are used SP Domains < 150^3 non-kernel non kernel part becomes dominant ECC=1 DP Difference of sp and dp vanishes for 64 blocks Algorithm becomes communication bound April 6th 2011 [email protected] 29 April 6th 2011 [email protected] 30 [email protected] 32 WaLBerla Heterogeneous GPU CPU computing April 6th 2011 [email protected] 31 April 6th 2011 8 07.04.2011 WaLBerla Heterogeneous LBM Copy to Buffers on CPU and GPU Patch and Block based domain decomposition After each iteration, boundary data is copied to Communication Buffers Block contains Simulation Data and Metadata e.g. for parallelization, advanced models Block can be algorithm or architecture specific All Blocks are equal in spatial dimensions Processes can have one or multiple blocks April 6th 2011 [email protected] 33 Buffer swap on GPU April 6th 2011 34 Transfer of buffers to the host Local Communication Buffers are only swapped. No Copy is done! April 6th 2011 [email protected] [email protected] Data of GPU processes is transferred to the Host 35 April 6th 2011 [email protected] 36 9 07.04.2011 Transfer of buffers to the host Disparity of Scales: The tale of the load balance In Theory: 2 MPI processes for CPU domain 1 MPI process for GPU domain Buffers are transferred/received to/from other hosts Time per iteration Sample block of 75x75x75 GPU: 3ms (@130 MLUPS) CPU: 9ms (@ 50 MLUPS) Take 8 blocks 6 for GPU and 2 for CPUs What about 4 socket machines? April 6th 2011 [email protected] 37 Disparity of Scales: The tale of the load balance 2 April 6th 2011 [email protected] 38 Disparity of Scales: The tale of the load balance 3 Problems involved: Theory estimate: (130 + 50 +50 ) MLUPS = 230 MLUPS GPU as well as CPU get better with large domains and only one block Reality: = 167 MLUPS However, a factor of 7 to 2 restricts largest blocksize and gives you multiple blocks Tuning of parameters leads to balance of 7 to 2 (GPU to CPU) blocks. Both architectures are not operated under best conditions (N t that (Note th t a CPU bl block k is i computed t d by b three th CPU cores via i OpenMP) O MP) Results of tuned setup : 189 MLUPS Overheads (PCIe transfers, Thread spawning, onGPU copys) are not covered by simple performance model Still behind lightspeed estimate April 6th 2011 [email protected] 39 April 6th 2011 [email protected] 40 10 07.04.2011 Weak scaling GPU per Node performance Strong Scaling GPU per Node performance Weak scaling works as expected Initial performance drop from one to two cards per node Loss of 64% in SP on 30 Nodes (60 GPUs GT200) Loss of 75% in DP on 30 Nodes (60 GPUs GT200) Up to 16 GFLUPS max. performance (GT200) Huge performance drop due to domain size 66% Up to 137 Intel Xeon nodes necessary! Up to 7 GFLUPS in SP 46% Up to 1275 BlueGene/P nodes necessary! 67% Up to 70 Intel Xeon nodes necessary! 35% About 3 GFLUPS in DP Up to 750 BlueGene/P nodes necessary! April 6th 2011 [email protected] 41 April 6th 2011 [email protected] 42 Summary Thank you very much for your attention High attainable performance on GPUS is achievable by sophisticated optimization Sweetspots of different architectures are quite the same (i.e . large domains) Data locality plays important role PCIexpress is still a major bottleneck All trademarks are the property of their respective owners. April 6th 2011 [email protected] 43 April 6th 2011 [email protected] 44 11

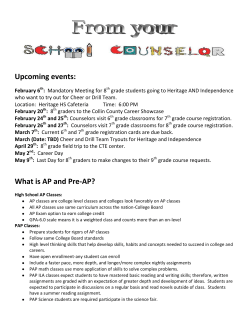

© Copyright 2026