Nonlinear Semidefinite Optimization —why and how— Michal Ko ˇcvara Isaac Newton Institute, 2013

Nonlinear Semidefinite Optimization

—why and how—

Michal Koˇcvara

School of Mathematics, The University of Birmingham

Isaac Newton Institute, 2013

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

1 / 45

Semidefinite programming (SDP)

“generalized” mathematical optimization problem

min f (x)

subject to

g(x) ≥ 0

A(x) 0

A(x) — (non)linear

matrix operator Rn → Sm

P

(A(x) = A0 + xi Ai )

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

2 / 45

SDP notations

Sn . . . symmetric matrices of order n × n

A 0 . . . A positive semidefinite

hA, Bi := Tr(AB). . . inner product on Sn

A[Rn → Sm ]. . . linear matrix operator defined by

A(y) :=

n

X

yi Ai

with Ai ∈ Sm

i=1

A∗ [Sm → Rn ]. . . adjoint operator defined by

A∗ (X ) := [hA1 , X i, . . . , hAn , X i]T

and satisfying

hA∗ (X ), yi = hA(y), X i

ˇ

Michal Kocvara

(University of Birmingham)

for all y ∈ Rn

Isaac Newton Institute, 2013

3 / 45

Linear SDP: primal-dual pair

infhC, X i := Tr(CX )

X

∗

s.t. A (X ) = b

(P)

[hAi , X i = bi , i = 1, . . . , n]

X 0

suphb, yi :=

y ,S

X

bi yi

s.t. A(y) + S = C

(D)

X

[

yi Ai + S = C]

S0

Weak duality: Feasible X , y, S satisfy

hC, X i − hb, yi = hA(y) + S, X i −

X

yi hAi , X i = hS, X i ≥ 0

duality gap nonnegative for feasible points

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

4 / 45

Linear Semidefinite Programming

Vast area of applications. . .

LP and CQP is SDP

eigenvalue optimization

robust programming

control theory

relaxations of integer optimization problems

approximations to combinatorial optimization problems

structural optimization

chemical engineering

machine learning

many many others. . .

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

5 / 45

Why nonlinear SDP?

Problems from

Structural optimization

Control theory

Examples below

There are more but the researchers just don’t know about. . .

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

6 / 45

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

7 / 45

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

8 / 45

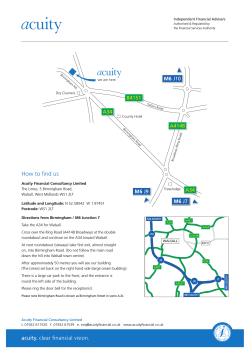

Free Material Optimization

Aim:

Given an amount of material, boundary conditions and external load f ,

find the material (distribution) so that the body is as stiff as possible

under f .

The design variables are the material properties at each point of the

structure.

M. P. Bendsøe, J.M. Guades, R.B. Haber, P. Pedersen and J. E. Taylor:

An analytical model to predict optimal material properties in the context

of optimal structural design. J. Applied Mechanics, 61 (1994) 930–937

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

9 / 45

Free Material Optimization

2

2

1

1

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

9 / 45

FMO primal formulation

FMO problem with vibration/buckling constraint

min

u∈Rn ,E1 ,...,Em

m

X

TrEi

i=1

subject to

Ei 0, ρ ≤ TrEi ≤ ρ,

i = 1, . . . , m

⊤

f u≤C

A(E )u = f

A(E ) + G(E , u) 0

. . . nonlinear, non-convex semidefinite problem

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

10 / 45

Why nonlinear SDP?

Problems from

Structural optimization

Control theory

Examples below

There are more but the researchers just don’t know about. . .

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

11 / 45

Conic Geometric Programming

Venkat Chandrasekaran’s slides

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

12 / 45

Nonlinear SDP: algorithms, software?

Interior point methods (log det barrier, Jarre ’98), Leibfritz ’02,. . .

Sequential SDP methods (Correa-Ramirez ’04, Jarre-Diehl)

None of these work (well) for practical problems

(Generalized) Augmented Lagrangian methods (Apkarian-Noll

’02–’05, D. Sun, J. Sun ’08, MK-Stingl ’02–)

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

13 / 45

Nonlinear SDP: algorithms, software?

Interior point methods (log det barrier, Jarre ’98), Leibfritz ’02,. . .

Sequential SDP methods (Correa-Ramirez ’04, Jarre-Diehl)

None of these work (well) for practical problems

(Generalized) Augmented Lagrangian methods (Apkarian-Noll

’02–’05, D. Sun, J. Sun ’08, MK-Stingl ’02–)

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

13 / 45

Nonlinear SDP: algorithms, software?

Interior point methods (log det barrier, Jarre ’98), Leibfritz ’02,. . .

Sequential SDP methods (Correa-Ramirez ’04, Jarre-Diehl)

None of these work (well) for practical problems

(Generalized) Augmented Lagrangian methods (Apkarian-Noll

’02–’05, D. Sun, J. Sun ’08, MK-Stingl ’02–)

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

13 / 45

PENNON collection

PENNON (PENalty methods for NONlinear optimization)

a collection of codes for NLP, (linear) SDP and BMI

– one algorithm to rule them all –

READY

PENNLP AMPL, MATLAB, C/Fortran

PENSDP MATLAB/YALMIP, SDPA, C/Fortran

PENBMI MATLAB/YALMIP, C/Fortran

PENNON (NLP + SDP) extended AMPL, MATLAB,

C/FORTRAN

NEW

PENLAB (NLP + SDP) open source MATLAB implementation

IN PROGRESS

PENSOC (nonlinear SOCP) ( + NLP + SDP)

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

14 / 45

PENSDP with an iterative solver

min hC, X i

(P)

X ∈Sm

s.t. hAi , X i = bi , i = 1, . . . , n

X 0

Large n, small m (Kim Toh)

problem

theta12

theta162

n

17979

127600

m

600

800

PENSDP

CPU

27565

mem

PEN-PCG

CPU CG/it

254

8

13197

13

Large m, small/medium n (robust QCQP, Martin Andersen)

problem

rqcqp1

rqcqp2

n

101

101

m

806

3206

ˇ

Michal Kocvara

(University of Birmingham)

PENSDP

CPU

324

6313

PEN-PCG

CPU CG/it

20

4

364

4

Isaac Newton Institute, 2013

15 / 45

PENNON: the problem

Optimization problems with nonlinear objective subject to nonlinear

inequality and equality constraints and semidefinite bound constraints:

min

x∈Rn ,Y1 ∈Sp1 ,...,Yk ∈Spk

subject to

f (x, Y )

gi (x, Y ) ≤ 0,

i = 1, . . . , mg

hi (x, Y ) = 0,

i = 1, . . . , mh

λi I Yi λi I,

i = 1, . . . , k

ˇ

Michal Kocvara

(University of Birmingham)

(NLP-SDP)

Isaac Newton Institute, 2013

16 / 45

The problem

Any nonlinear SDP problem can be formulated as NLP-SDP, using

slack variables and (NLP) equality constraints:

G(X ) 0

write as

G(X ) = S

element-wise

S0

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

17 / 45

The algorithm

Based on penalty/barrier functions ϕg : R → R and ΦP : Sp → Sp :

gi (x) ≤ 0 ⇐⇒ pi ϕg (gi (x)/pi ) ≤ 0,

Z 0 ⇐⇒ ΦP (Z ) 0,

i = 1, . . . , m

p

Z ∈S .

Augmented Lagrangian of (NLP-SDP):

F (x,Y ,u,U,U,p)=f (x,Y )+

P mg

i=1

ui pi ϕg (gi (x,Y )/pi )

P

P

+ ki=1 hU i ,ΦP (λi I−Yi )i+ ki=1 hU i ,ΦP (Yi −λi I)i ;

here u ∈ Rmg and U i , U i are Lagrange multipliers.

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

18 / 45

The algorithm

A generalized Augmented Lagrangian algorithm (based on R. Polyak

’92, Ben-Tal–Zibulevsky ’94, Stingl ’05):

1

Given x 1 , Y 1 , u 1 , U 1 , U ; pi1 > 0, i = 1, . . . , mg and P > 0.

For k = 1, 2, . . . repeat till a stopping criterium is reached:

k

(i)

Find x k +1 and Y k +1 s.t. k∇x F (x k +1 , Y k +1 , u k , U k , U , p k )k ≤ K

(ii)

uik +1 = uik ϕ′g (gi (x k +1 )/pik ),

U ki +1

k +1

Ui

(iii)

=

=

i = 1, . . . , mg

DA ΦP ((λi I − Yi ); U ki ),

k

DA ΦP ((Yi − λi I); U i ),

i = 1, . . . , k

i = 1, . . . , k

pik +1 < pik , i = 1, . . . , mg

P k +1 < P k .

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

19 / 45

Interfaces

How to enter the data – the functions and their derivatives?

Matlab interface

AMPL interface

C/C++ interface

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

20 / 45

Matlab interface

User provides six M ATLAB functions:

f . . . evaluates the objective function

df . . . evaluates the gradient of objective function

hf . . . evaluates the Hessian of objective function

g . . . evaluates the constraints

dg . . . evaluates the gradient of constraints

hg . . . evaluates the Hessian of constraints

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

21 / 45

Matlab interface

Matrix variables are treated as vectors, using the function

svec : Sm → R(m+1)m/2 defined by

a11

svec

sym

a12 . . .

a22 . . .

..

.

a1m

a2m

..

.

amm

= (a11 , a12 , a22 , . . . , a1m , a2m , amm )T

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

22 / 45

Matlab interface

Matrix variables are treated as vectors, using the function

svec : Sm → R(m+1)m/2 defined by

a11

svec

sym

a12 . . .

a22 . . .

..

.

a1m

a2m

..

.

amm

= (a11 , a12 , a22 , . . . , a1m , a2m , amm )T

Keep a specific order of variables, to recognize which are matrices and

which vectors. Add lower/upper bounds on matrix eigenvalues.

Sparse matrices available, sparsity maintained in the user defined

functions.

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

23 / 45

AMPL interface

AMPL does not support SDP variables and constraints. Use the same

trick:

Matrix variables are treated as vectors, using the function

svec : Sm → R(m+1)m/2 defined by

a11

svec

sym

a12 . . .

a22 . . .

..

.

a1m

a2m

..

.

amm

= (a11 , a12 , a22 , . . . , a1m , a2m , amm )T

Need additional input file specifying the matrix sizes and lower/upper

eigenvalue bounds.

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

24 / 45

What’s new

PENNON being implemented in NAG (The Numerical Algorithms

Group) library

The first routines should appear in the NAG Fortran Library, Mark 24

(Autumn 2013)

By-product:

PENLAB — free, open, fully functional version of PENNON coded in

MATLAB

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

25 / 45

PENLAB

PENLAB — free, open source, fully functional version of PENNON

coded in Matlab

Designed and coded by Jan Fiala (NAG)

Open source, all in MATLAB (one MEX function)

The basic algorithm is identical

Some data handling routines not (yet?) implemented

PENLAB runs just as PENNON but is slower

Pre-programmed procedures for

standard NLP (with AMPL input!)

linear SDP (reading SDPA input files)

bilinear SDP (=BMI)

SDP with polynomial MI (PMI)

easy to add more (QP, robust QP, SOF, TTO. . . )

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

26 / 45

PENLAB

The problem

min

x∈Rn ,Y1 ∈Sp1 ,...,Yk ∈Spk

subject to

f (x, Y )

gi (x, Y ) ≤ 0,

i = 1, . . . , mg

hi (x, Y ) = 0,

i = 1, . . . , mh

Ai (x, Y ) 0,

i = 1, . . . , mA

λi I Yi λi I,

i = 1, . . . , k

(NLP-SDP)

Ai (x, Y ). . . nonlinear matrix operators

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

27 / 45

PENLAB

Solving a problem:

prepare a structure penm containing basic problem data

>> prob = penlab(penm); MATLAB class containing all data

>> solve(prob);

results in class prob

The user has to provide MATLAB functions for

function values

gradients

Hessians (for nonlinear functions)

of all f , g, A.

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

28 / 45

Structure penm and f/g/h functions

Example: min x1 + x2

s.t.

x12 + x22 ≤ 1, x1 ≥ −0.5

penm = [];

penm.Nx = 2;

penm.lbx = [-0.5 ; -Inf];

penm.NgNLN = 1;

penm.ubg = [1];

penm.objfun = @(x,Y) deal(x(1) + x(2));

penm.objgrad = @(x,Y) deal([1 ; 1]);

penm.confun = @(x,Y) deal([x(1)ˆ2 + x(2)ˆ2]);

penm.congrad = @(x,Y) deal([2*x(1) ; 2*x(2)]);

penm.conhess = @(x,Y) deal([2 0 ; 0 2]);

% set starting point

penm.xinit = [2,1];

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

29 / 45

PENLAB gives you a free MATLAB solver for large scale NLP!

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

30 / 45

Toy NLP-SDP example 1

min

x∈R2

1 2

(x + x22 )

2 1

subject to

1

x1 − 1 0

x1 − 1

1

x2 0

0

x2

1

D. Noll, 2007

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

31 / 45

Toy NLP-SDP example 2

min x1 x4 (x1 + x2 + x3 ) + x3

x∈R6

subject to

x1 x2 x3 x4 − x5 − 25 = 0

x12 + x22 + x32 + x42 − x6 − 40 = 0

x1

x2

0

0

x2

x4 x2 + x3 0

0

0x2 + x3 x4

x3

0

0

x3

x1

1 ≤ xi ≤ 5, i = 1, 2, 3, 4,

xi ≥ 0, i = 5, 6

Yamashita, Yabe, Harada, 2007

(“augmented” Hock-Schittkowski)

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

32 / 45

Example: nearest correlation matrix

Find a nearest correlation matrix:

min

X

n

X

(Xij − Hij )2

(1)

i,j=1

subject to

Xii = 1,

i = 1, . . . , n

X 0

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

33 / 45

Example: nearest correlation matrix

The condition number of the nearest correlation matrix must be

bounded by κ.

Using the transformation of the variable X :

e =X

zX

The new problem:

min

n

X

eij − Hij )2

(z X

(2)

e

z,X

i,j=1

subject to

eii = 1,

zX

i = 1, . . . , n

e κI

IX

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

34 / 45

Example: nearest correlation matrix

For

X =

1.0000 -0.3775 -0.2230 0.7098 -0.4272

-0.3775 1.0000 0.6930 -0.3155 0.5998

-0.2230 0.6930 1.0000 -0.1546 0.5523

0.7098 -0.3155 -0.1546 1.0000 -0.3857

-0.4272 0.5998 0.5523 -0.3857 1.0000

-0.0704 -0.4218 -0.4914 -0.1294 -0.0576

-0.0704

-0.4218

-0.4914

-0.1294

-0.0576

1.0000

the eigenvalues of the correlation matrix are

eigen =

0.2866

0.2866

0.2867

ˇ

Michal Kocvara

(University of Birmingham)

0.6717

1.6019

2.8664

Isaac Newton Institute, 2013

35 / 45

Example: nearest correlation matrix

Cooperation with Allianz SE, Munich:

Matrices of size up to 3500 × 3500

Code PENCOR:

C code, data in xml format

feasibility analysis

sensitivity analysis w.r.t. bounds on matrix elements

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

36 / 45

NLP with AMPL input

Pre-programmed. All you need to do:

>> penm=nlp_define(’datafiles/chain100.nl’);

>> prob=penlab(penm);

>> prob.solve();

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

37 / 45

NLP with AMPL input

problem

vars

constr.

chain800

pinene400

channel800

torsion100

lane emd10

dirichlet10

henon10

minsurf100

gasoil400

duct15

marine400

steering800

methanol400

3199

8000

6398

5000

4811

4491

2701

5000

4001

2895

6415

3999

4802

2400

7995

6398

10000

21

21

21

5000

3998

8601

6392

3200

4797

ˇ

Michal Kocvara

(University of Birmingham)

constr.

type

=

=

=

≤

≤

≤

≤

box

=&b

=&≤

≤&b

≤&b

≤&b

PENNON

sec

iter.

1

14/23

1

7/7

3

3/3

1

17/17

217

30/86

151

33/71

57 49/128

1

20/20

3

34/34

6

19/19

2

39/39

1

9/9

2

24/24

PENLAB

sec

iter.

6

24/56

11

17/17

1

3/3

17

26/26

64

25/49

73

32/68

63

76/158

97 203/203

13

59/71

9

11/11

22

35/35

7

19/40

16

47/67

Isaac Newton Institute, 2013

38 / 45

Linear SDP with SDPA input

Pre-programmed. All you need to do:

>>

>>

>>

>>

sdpdata=readsdpa(’datafiles/arch0.dat-s’);

penm=sdp_define(sdpdata);

prob=penlab(penm);

prob.solve();

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

39 / 45

Bilinear matrix inequalities (BMI)

Pre-programmed. All you need to do:

>>

>>

>>

>>

bmidata=define_my_problem; %matrices A, K, ...

penm=bmi_define(bmidata);

prob=penlab(penm);

prob.solve();

min c T x

x∈Rn

s.t.

Ai0

+

n

X

k =1

xk Aik

+

n X

n

X

xk xℓ Kki ℓ < 0,

i = 1, . . . , m

k =1 ℓ=1

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

40 / 45

Polynomial matrix inequalities (PMI)

Pre-programmed. All you need to do:

>>

>>

>>

>>

load datafiles/example_pmidata;

penm = pmi_define(pmidata);

problem = penlab(penm);

problem.solve();

1 T

x Hx + c T x

x∈R 2

subject to blow ≤ Bx ≤ bup

minn

Ai (x) < 0,

with

A(x) =

X

i = 1, . . . , m

xκ(i) Qi

i

where κ(i) is a multi-index with possibly repeated entries and xκ(i) is a

product of elements with indices in κ(i).

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

41 / 45

Other pre-programmed modules

Nearest correlation matrix

Truss topology optimization (stability constraints)

Static output feedback (input from COMPlib, formulated as PMI)

Robust QP

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

42 / 45

Example:

Robust quadratic programming on unit simplex with constrained

uncertainty set

Nominal QP

minn [x T Ax − b T x] s.t.

x∈R

X

x ≤ 1, x ≥ 0

Assume A uncertain: A ∈ U := {A0 + εU, σ(U) ≤ 1}

Robust QP

minn max [x T Ax − b T x] s.t.

x∈R A∈U

X

x ≤ 1, x ≥ 0

equivalent to

minn [x T (A0 ± εI)x − b T x] s.t.

x∈R

ˇ

Michal Kocvara

(University of Birmingham)

X

x ≤ 1, x ≥ 0

Isaac Newton Institute, 2013

43 / 45

Example: Robust QP

Optimal solution: x ∗ , A∗ = A0 + εU ∗ (non-unique)

Constraint: A∗ should share some properties with A0

For instance: A∗ 0 and (A0 )ii = A∗ii = 1, i.e., Uii∗ = 0 for all i

=⇒ then the above equivalence no longer holds

b to the maximization

Remedy: for a given x ∗ find a feasible solution A

problem. This is a linear SDP:

max [x ∗T (A0 + εU)x ∗ − b T x ∗ ]

U∈Sm

s.t.

−I 4U 4I

A0 + εU 0

Uii = 0, i = 1, . . . , m

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

44 / 45

Example: Robust QP

b nearest to A∗ → nonlinear SDP

Alternatively: find feasible A

b

Finally, we need to solve the original QP with the feasible worst-case A

to get a feasible robust solution xrob :

X

b − b T x] s.t.

x ≤ 1, x ≥ 0

minn [x T Ax

x∈R

The whole procedure

solve QP with A = A0 (nominal)

solve QP with A = A0 + εI (robust)

b

solve (non-)linear SDP to get A

b (robust feasible)

solve QP with A

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

45 / 45

NL-SDP, Other Applications, Availability

polynomial matrix inequalities (with Didier Henrion)

nearest correlation matrix

sensor network localization

approximation by nonnegative splines

financial mathematics (with Ralf Werner)

Mathematical Programming with Equilibrium Constraints (with

Danny Ralph)

structural optimization with matrix variables and nonlinear matrix

constraints (PLATO-N EU FP6 project)

approximation of arrival rate function of a non-homogeneous

Poisson process (F. Alizadeh, J. Eckstein)

Many other applications. . . . . . any hint welcome

Free academic version of the code PENNON available

PENLAB available for download from NAG website

ˇ

Michal Kocvara

(University of Birmingham)

Isaac Newton Institute, 2013

46 / 45

© Copyright 2026