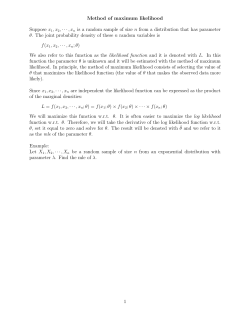

Example 1. Somites of earthworms. 1. Introduction

1. Introduction

1.1 Why empirical likelihood

• nonparametric method: without having to assume the form of

the underlying distribution

• likelihood based inference: taking the advantages of likelihood

methods

• alternative method when other (more conventional) methods

are not applicable

Example 1. Somites of earthworms.

Earthworms have segmented bodies. The segments are known as

somites. As a worm grows, both the number and the length of its

somites increases.

The dataset contains the No. of somites on each of 487 worms

gathered near Ann Arbor in 1902.

The histogram shows that the distribution is skewed to the left,

and has a heavier tail to the left.

Skewness: γ =

E{(X−EX)3}

,

{Var(X)}3/2

E{(X−EX)4}

Kurtosis: κ = {Var(X)}2 −3,

—

a measure for symmetry

—

a measure for tail-heaviness

Estimation for γ and κ

¯ = n−1

Let X

Remark. (i) For N (µ, σ 2), γ = 0 and κ = 0.

(ii) For symmetric distributions, γ = 0.

γb =

P

P

¯ 2.

b 2 = (n − 1)−1 1≤i≤n(Xi − X)

1≤i≤n Xi, and and σ

n

1 X

¯ 3,

(Xi − X)

b3

nσ

i=1

b =

κ

n

1 X

¯ 4.

(Xi − X)

b4

nσ

i=1

How to find the confidence sets for (γ, κ)?

(iii) When κ > 0, heavier tails than those of N (µ, σ 2).

Answer: Empirical likelihood contours.

Let l(γ, κ) be the (log-) empirical likelihood function of (γ, κ). The

confidence region for (γ, κ) is defined as

{(γ, κ) : l(γ, κ) > C},

where C > 0 is a constant determined by the confidence level, i.e.

P {l(γ, κ) > C} = 1 − α.

Why do conventional methods not apply?

Parametric likelihood. Not normal distribution! Likelihood inference for high moments is typically not robust wrt a misspecified distribution.

Bootstrap. Difficult in picking out the confidence region from

a point cloud consisting of a large number of bootstrap estimates for (γ, κ).

In the second panel, the empirical likelihood confidence regions

(i.e. contours) correspond to confidence levels of 50%, 90%,

95%, 99%, 99.9% and 99.99%.

For example, given 1000 bootstrap estimates for (γ, κ), ideally

95% confidence region should contain 950 central points.

In practice, we restrict to rectangle or ellipse regions in order

to facilitate the estimation.

Note. (γ, κ) = (0, 0) is not contained in the confidence regions

1.2 Introducing empirical likelihood

Let X = (X1, · · · , Xn)τ be a random sample from an unknown

distribution F (·). We know nothing about F (·).

Since

P {X1 = x1, · · · , Xn = xn} = p1 · · · pn,

the likelihood is

In practice we observe Xi = xi (i = 1, · · · , n), x1, · · · , xn are n

known numbers.

L(p1, · · · , pn) ≡ L(p1, · · · , pn; X) =

n

Y

pi ,

i=1

which is called an empirical likelihood.

Basic idea. Assume F is a discrete distribution on {x1, · · · , xn}

with

pi = F (xi),

i = 1, · · · , n,

where

pi ≥ 0,

n

X

Remark. The number of parameters is the same as the number

of observations.

Note

pi = 1.

i=1

What is the likelihood function of {pi}? What is the MLE?

n

Y

i=1

pi

1/n

≤

n

1 X

1

pi = ,

n i=1

n

the equality holds iff p1 = · · · = pn = 1/n.

Example 2. Find the MELE for µ ≡ EX1.

Put pbi = 1/n, we have

L(p1, · · · , pn; X) ≤ L(pb1, · · · , pbn; X)

for any pi ≥ 0 and

P

i pi = 1.

Hence the MLE based on the empirical likelihood, which is called

maximum empirical likelihood estimator (MELE), puts the

equal probability mass 1/n on the n observed values x1, · · · , xn.

Namely the MELE for F is the uniform distribution on observed

data points. The corresponding distribution function

Fn(x) =

n

1 X

n i=1

I(Xi ≤ x)

is called the empirical distribution of the sample X = (X1, · · · , Xn)τ .

Corresponding to the EL,

µ=

n

X

i=1

pixi = µ(p1, · · · , pn).

Therefore, the MELE for µ is

b = µ(pb1, · · · , pbn) =

µ

n

n

1 X

1 X

¯

xi =

Xi = X.

n i=1

n i=1

Similarly, the MELE for µk ≡ E(X1k ) is the simply the sample k-th

moment:

n

1 X

bk =

µ

X k.

n i=1 i

2. Empirical likelihood for means

Let X1, · · · , Xn be a random sample from an unknown distribution.

Remarks. (i) MELEs, without further constraints, are simply the

method of moments estimators, which is not new.

(ii) Empirical likelihood is a powerful tool in dealing with testing

hypotheses and interval estimation in a nonparametric manner

based on the likelihood tradition, which also involves evaluating

MELEs under some further constraints.

Goal: test hypotheses on µ ≡ EX1, or find confidence intervals

for µ.

Tool: empirical likelihood ratios (ELR)

2.1 Tests

Consider the hypotheses

H0 : µ = µ0

Let L(p1, · · · , pn) =

ELR

T =

Q

i pi .

vs

H1 : µ 6= µ0.

We reject H0 for large values of the

L(n−1, · · · , n−1)

max L(p1, · · · , pn)

=

,

maxH0 L(p1, · · · , pn)

L(pe1, · · · , pen)

where {pe1} are the constrained MELEs for {pi} under H0.

Two problems:

Theorem 1. For µ ∈ (x(1), x(n)),

(i) pei =?

(ii) What is the distribution of T under H0?

pi(µ) =

(i) The constrained MELEs pei = pi(µ0), where {pi(µ)} are the

solution of the maximisation problem:

max

n

X

{pi} i=1

subject to the conditions

n

X

pi ≥ 0,

i=1

n

X

i

i=1

n

X

xj − µ

=0

n

−

λ(xj − µ)

j=1

(2)

Proof. We use the Lagrange multiplier technique to solve this

optimisation problem. Put

pixi = µ.

The solution for the above problem is given in the theorem below. Note

x(1) ≡ min xi ≤

(1)

in the interval ( x n−µ , x n−µ ).

(1)

(n)

i=1

n

X

1 ≤ i ≤ n,

where λ is the unique solution of the equation

log pi

pi = 1,

1

> 0,

n − λ(xi − µ)

Q=

X

log pi + ψ

i

pi xi ≤ max xi ≡ x(n) .

X

i

X

pi − 1) + λ(

i

pixi − µ).

Letting the partial derivatives of Q w.r.t. pi, ψ and λ equal 0, we

i

It is natural we require x(1) ≤ µ ≤ x(n) .

have

Now let g(λ) be the function on the LHS of (2). Then

p−1

i + ψ + λxi = 0

X

pi = 1

i

X

p i xi = µ

i

(3)

(4)

(5)

lim

By (3),

pi = −1/(ψ + λxi).

(6)

Hence , 1 + ψpi + λxipi = 0, which implies ψ = −(n + λµ). This

together with (6) imply (1). By (1) and (5),

X

xi

= µ.

(7)

n

−

λ(x

i − µ)

i

It follows (4) that

X

X

µ

.

n

−

λ(x

i − µ)

i

i

This together with (7) imply (2).

µ=µ

pi =

(xi − µ)2

> 0.

2

i {n − λ(xi − µ)}

Hence g(λ) is a strictly increasing function. Note

g(λ)

˙

=

λ↑ x n −µ

(n)

X

g(λ) = ∞,

lim

λ↓ x n −µ

(1)

g(λ) = −∞,

Hence g(λ) = 0 has a unique solution between in the interval

n

n

,

.

x(1) − µ x(n) − µ

Note for any λ in this interval,

1

> 0,

n − λ(x(1) − µ)

1

> 0,

n − λ(x(n) − µ)

and 1/{n − λ(x − µ)} is a monotonic function of x. It holds that

pi(µ) > 0 for all 1 ≤ i ≤ n.

¯ λ = 0, and

Remarks. (a) When µ = x

¯ = X,

pi(µ) = 1/n,

i = 1, · · · , n.

It may be shown for µ close to E(Xi), and n large

pi(µ) ≈

(c) The likelihood function L(µ) may be calculated using R-code

and Splus-code, downloaded at

1

1

,

·

n 1 + x¯−µ (xi − µ)

http://www-stat.stanford.edu/∼owen/empirical/

S(µ)

1 P (x − µ)2.

where S(µ) = n

i i

(ii) The asymptotic theorem for the classic likelihood ratio tests

(i.e. Wilks’ Theorem) still holds for the ELR tests.

(b) We may view

L(µ) = L{p1(µ), · · · , pn(µ)}.

as a profile empirical likelihood for µ.

Let X1, · · · , Xn i.i.d., and µ = E(X1). To test

Hypothetically consider an 1-1 parameter transformation from

{p1, · · · , pn} to {µ, θ1, · · · , θn−1}. Then

H0 : µ = µ0

vs

H1 : µ 6= µ0,

L(µ) = max L(µ, θ1, · · · , θn−1) = L{µ, θb1(µ), · · · , θbn−1(µ)}

{θi }

the ELR statistic is

(1/n)n

max L(p1, · · · , pn)

=

T =

maxH0 L(p1, · · · , pn)

L(µ0)

n n

o

Y

λ

1

=

1 − (Xi − µ0) .

n

i=1 npi(µ0)

i=1

where λ is the unique solution of

=

n

Y

n

X

Xj − µ 0

= 0.

n

−

λ(Xj − µ0)

j=1

Theorem 2. Let E(X12) < ∞. Then under H0,

2 log T = 2

n

X

i=1

n

log 1 −

o

λ

(Xi − µ0) → χ2

1

n

in distribution as n → ∞.

A sketch proof. Under H0, EXi = µ0. Therefore µ0 is close to

¯ for large n. Hence the λ, or more precisely, λn ≡ λ/n is small,

X

which is the solution of f (λn) = 0, where

f (λn) =

n

Xj − µ 0

1 X

.

n j=1 1 − λn(Xj − µ0)

By a simple Taylor expansion 0 = f (λn) ≈ f (0) + f˙(0)λn,

.

¯ − µ0 )

λn ≈ −f (0) f˙(0) = −(X

1X

(Xj − µ0)2.

n j

Now

λ2

{−λn(Xi − µ0) − n (Xi − µ0)2}

2

i

¯ − µ0)2

X

n(X

¯ − µ0) − λ2

.

(Xi − µ0)2 ≈ −1 P

= −2λnn(X

n

2

n

i(Xi − µ0)

i

√

P

¯−

By the LLN, n−1 i(Xi − µ0)2 → Var(X1). By the CLT, n(X

µ0) → N (0, Var(X1)) in distribution. Hence 2 log T → χ2

in

distri1

bution.

2 log T ≈ 2

X

Example 3. Darwin’s data: gains in height of plants from crossfertilisation

2.2 Confidence intervals for µ.

For a given α ∈ (0, 1), since we will not reject the null hypothesis

X = height(Cross-F) - height(Self-F)

H0 : µ = µ0

2

2

iff 2 log T < χ2

1,1−α , where P {χ1 ≤ χ1,1−α } = 1 − α. For α = 0.05,

2

χ1,1−α = 3.84.

15 observations:

6.1, -8.4, 1.0, 2.0, 0.7, 2.9, 3.5, 5.1, 1.8, 3.6, 7.0, 3.0,

9.3, 7.5, -6.0

Hence a 100(1 − α)% confidence interval for µ is

n

=

µ − 2 log{L(µ)nn} < χ2

1,1−α

n

n

X

µ

o

o

log pi(µ) > −0.5χ2

1,1−α − n log n

i=1

n

n X

o

= µ

log{npi(µ)} > −0.5χ2

1,1−α .

i=1

¯ = 2.61, the standard error s = 4.71.

The sample mean X

Is the gain significant?

Intuitively: YES, if no two negative observations -8.4 and -6.0.

Let µ = EXi.

(i) Standard approach: Assume {X1, · · · , X15} is a random sample

from N (µ, σ 2)

10

5

QQ-plot:

Quantile

of

N (0, 1) vs Quantile of the

empirical distribution.

0

H1 : µ > 0

−5

vs

Observed quantiles

(a) Normal plot

H0 : µ = 0

¯ = 2.61

b=X

MLE: µ

−1

0

1

Normal quantiles

The t-test statistic:

¯ = 2.14

nX/s

Is N (µ, σ 2) an appropriate assumption? as the data do not

appear to be normal (with a heavy left tail); see Fig(a).

(b) Lk likelihood, k=1,2,4,8

0.8

The profile likelihood lk (µ) is

plotted against µ for k = 1

(solid), 2 (dashed), 4 (dotted), and 8 (dot-dashed).

0.4

Since T ∼ t(14) under H0, the p-value is 0.06 — significant but

not overwhelming.

0.0

√

Profile likelihood

T =

−5

0

µ

5

10

(ii) Consider a generalised normal family

fk (x|µ, σ) =

2−1−1/k

exp

Γ(1 + 1/k)σ

1 x − µ k

− ,

2 σ If we use the distribution functions with k = 1 to fit the data,

the p-value for the test is 0.03 – much more significant than that

under the assumption of normal distribution.

which has the mean µ. When k = 2, it is N (µ, σ 2).

(iii) The empirical likelihood ratio test statistic 2 log T = 3.56,

which rejects H0 with the p-value 0.04.

To find the profile likelihood of µ, the ‘MLE’ for σ is

The 95% confidence interval is

Hence

n

k X

bk ≡ σ

b (µ)k =

σ

|Xi − µ|k .

2n i=1

1

n

1

b ) = −n log Γ(1 + ) − n(1 + ) log 2 − n log σ

b− .

lk (µ) = lk (µ, σ

k

k

k

b = µ(k)

b

varies with respect to k. In fact

Fig.(b) shows the MLE µ

b

µ(k) increases as k decreases.

15

X

{µ i=1

log pi(µ) > −1.92 − 15 log(15)} = [0.17, 4.27].

1 e−|x−µ|/σ . With µ fixed, the

The DE density is of the form 2σ

P

−1

MLE for σ is n

i |Xi − µ|. Hence the parametric log (profile)

likelihood is

−n log

X

i

|Xi − µ|.

−4

−2

Let X1, · · · , Xn be i.i.d. random vectors from distribution F .

Similar to the univariate case, we assume

−6

log−likelihood

0

3. Empirical likelihood for random vectors

pi = F (Xi), i = 1, · · · , n,

P

where pi ≥ 0 and i pi = 1. The empirical likelihood is

n

Y

pi

L(p1, · · · , pn) =

i=1

0

2

4

6

µ

Parametric log-likelihood (solid curve) based on the DE distribution, and the empirical log-likelihood (dashed curve). (Both curves

were shifted vertically by their own maximum values.)

Without any further constraints, the MELEs are

pbi = 1/n,

i = 1, · · · , n.

3.1 EL for multivariate means

The profile empirical likelihood for µ = E X1 is

L(µ) = max

Y

n

i=1

pi pi ≥ 0,

n

X

pi = 1,

i=1

n

X

i=1

piXi = µ ≡

n

Y

pi(µ),

i=1

where pi(µ) is the MELE of pi with the additional constraint E Xi =

µ. Define the ELR

T ≡ T (µ) =

L(1/n, · · · , 1/n)

=1

L(µ)

Y

n

i=1

n

X

i=1

log{npi(µ)} → χ2

d

in distribution.

(iv) Bootstrap calibration. Since (ii) and (iii) are based on an

asymptotic result. When n is small and d is large, χ2

d,1−α may be

replaced by the [Bα]-th largest value among 2 log T1∗, · · · ,

∗ which are computed as follows.

2 log TB

(a) Draw i.i.d. sample X∗1, · · · , X∗n from the uniform distribution on {X1, · · · , Xn}. Let

T∗ = 1

n

. Y

i=1

(ii) The null hypothesis H0 : µ = µ0 will be rejected at the significance level α iff

n

X

{npi(µ)}.

Theorem 3. Let X1, · · · , Xn be d × 1 i.i.d. with mean µ and finite

covariance matrix Σ and |Σ| 6= 0. Then as n → ∞,

2 log{T (µ)} = −2

Remarks. (i) In the case that |Σ| = 0, there exists an integer q < d

for which, Xi = AYi where Yi is a q × 1 r.v. with |Var(Yi)| 6= 0,

and A is a d × q constant matrix. The above theorem still holds

with the limit distribution replaced by χ2

q.

¯ )},

{np∗i (X

¯ = 1 Pn Xi, and p∗(µ) is obtained in the same

where X

i

n i=1

manner as pi(µ) with {X1, · · · , Xn} replaced by {X∗1, · · · , X∗n}.

(b) Repeat (a) B times, denote the B values of T ∗ as

∗.

T1∗, · · · , TB

We may draw an X∗ from the uniform distribution on {X1, · · · , Xn}

i

as follows: draw Z ∼ U (0, 1), define X∗ = Xi if Z ∈ [ i−1

n , n ).

i=1

log{npi(µ0)} ≤ −0.5χ2

d,1−α ,

2

where P {χ2

d ≤ χd,1−α } = 1 − α.

(iii) A 100(1 − α)% confidence region for µ is

X

n

µ i=1

log{npi(µ)} ≥ −0.5χ2

d,1−α .

Since the limiting distribution is free from the original distribution

of {Xi}, we may draw Xi∗ from any distribution {π1, · · · , πn} instead

¯)

of the uniform distribution used above. Of course now p∗i (X

P

∗

e where µ

e = i πiXi.

should be replaced by p (µ),

(v) Computing pi(µ).

Assumptions: |Var(Xi)| 6= 0, and µ is an inner point of the convex

hull spanned by the observations, i.e.

µ∈

n

n X

i=1

piXi pi > 0,

n

X

o

pi = 1 .

i=1

This ensures the solutions pi(µ) > 0 exist.

Step 1:

We solve the problem in 3 steps:

Similar to Theorem 1, the LM method entails

1. Transform the constrained n-dim problem to a constrained d-dim problem.

2. Transform the constrained problem to an unconstrained

problem.

3. Apply a Newton-Raphson algorithm.

pi(µ) =

1

,

n − λτ (Xi − µ)

i = 1, · · · , n,

where λ is the solution of

n

X

Xj − µ

= 0.

τ

j=1 n − λ (Xj − µ)

(8)

Hence

Put

l(µ) ≡ log L(µ) =

= max

n

X

log pi(µ)

i=1

n

n

X

X

log pi pi ≥ 0,

pi = 1,

piXi =

i=1

i=1

i=1

X

n

l(µ) = −

µ .

i = 1, · · · , n.

(10)

The original n-dimensional optimisation problem is equivalent to

a d-dimensional problem of minimising M (λ) subject to the constraints (10).

Let Hλ be the set consisting all the values of λ satisfying

i = 1, · · · , n.

log{n − λτ (Xi − µ)} ≡ M (λ).

(9)

n

X

∂ 2M (λ)

(Xi − µ)(Xi − µ)τ

=

> 0.

τ

τ

2

∂ λ∂ λ

i=1 {n − λ (Xi − µ)}

P

Note. (10) and (8) together imply i pi(µ) = 1.

n − λτ (Xi − µ) > 1,

i=1

Note ∂∂λ M (λ) = 0 leads to (8), and

Thus M (·) is a convex function on any connected sets satisfying

n − λτ (Xi − µ) > 0,

n

X

Then Hλ a convex set in Rd, which contains the minimiser of the

convex function M (λ). (See ‘Note’ above)

Step 2: We extend M (λ) outside of the set Hλ such that it is still

a convex function on the whole Rd.

Define

log⋆(z) =

(

log z

−1.5 + 2z − 0.5z 2

z ≥ 1,

z < 1.

It is easy to see that log⋆(z) has two continuous derivatives on R.

Put

M⋆(λ) = −

Pn

τ

i=1 log⋆ {n − λ (Xi − µ)}.

Then

• M⋆(λ) = M (λ) on Hλ.

• M⋆(λ) is a convex function on whole Rd.

Unfortunately M (λ) is not defined on the sets

{λ | n − λτ (Xi − µ) = 0},

i = 1, · · · , n.

Hence, M⋆(λ) and M (λ) share the same minimiser which is the

solution of (8).

Step 3: We apply a Newton-Raphson algorithm to compute λ

iteratively:

n

¨ ⋆(λk )

λk+1 = λk − M

o−1

˙ ⋆(λk ).

M

3.2 EL for smooth functions of means

A convenient initial value would λ0 = 0, corresponding to pi = 1/n.

Basic idea. Let Y1, · · · , Yn be i.i.d. random variables with variance

σ 2. Note

σ 2 = EYi2 − (EYi)2 = h(µ),

Remarks. (i) S-code “el.S”, available from

www-stat.stanford.edu/∼owen/empirical

where µ = E Xi, and Xi = (Yi, Yi2). We may deduce a confidence

interval for σ 2 from that of µ.

calculates the empirical likelihood ratio

n

X

log{npi(µ)}

i=1

and other related quantities.

Theorem 4. Let X1, · · · , Xn be d × 1 i.i.d. r.v.s with mean µ0

and |Var(X1)| 6= 0. Let θ = h(µ) be a smooth function from Rd

to Rq (q ≤ d), and θ 0 = h(µ0). We assume

|GGτ | 6= 0,

G=

∂θ

.

∂ µτ

n

X

n

and

C3,r =

Then as n → ∞,

i=1

o

log{npi(µ)} ≥ −0.5r ,

θ 0 + G(µ − µ0) n

o

µ ∈ C1,r .

P {θ ∈ C3,r } → P (χ2

q ≤ r).

(ii) Under more conditions, P {θ ∈ C2,r } → P (χ2

q ≤ r), where

n

For any r > 0, let

C1,r = µ Remarks. (i) The idea of bootstrap calibration may be applied

here too.

o

C2,r = h(µ) µ ∈ C1,r .

C2,r is a practical feasible confidence set, while C3,r is not since

µ0 and θ0 are unknown in practice. Note for µ close to µ0,

θ 0 + G(µ − µ0) ≈ h(µ).

(iii) In general, P {µ ∈ C1,r } ≤ P {θ ∈ C2,r }.

(By Theorem 3, P {µ ∈ C1,r } → P (χ2

d ≤ r))

Example 4. S&P500 stock index in 17.8.1999 — 17.8.2000 (256

trading days)

(iv) The profile empirical likelihood function of θ is

L(θ ) = max

= max

n

n Y

i=1

n

n Y

pi(µ) h(µ) =

θ

Let Yi be the price on the i-th day,

o

n

X

pi h

piXi =

i=1

i=1

n

o

X

pi = 1 ,

i=1

Xi = log(Yi/Yi−1) ≈ (Yi − Yi−1)/Yi−1,

θ , pi ≥ 0,

which may be calculated directly using the Lagrange multiplier

method. The computation is more involved for nonlinear h(·).

which is the return, i.e. the percentage of the change on the i-th

day.

By treating Xi i.i.d., we construct confidence intervals for the

annual volatility

σ = {255Var(Xi)}1/2.

The simple point-estimator is

b =

σ

1/2

X

255 255

¯ 2

(Xi − X)

= 0.2116.

255 i=1

1450

1500

S&P500 index

1350

1400

The 95% confidence intervals are:

1250

1300

Method

EL

Normal

0

50

100

150

200

250

The EL confidence interval is 41.67% wider than the interval based

on normal distribution, which reflects the fact that the returns

have heavier tails.

QQ plot

0.0

-0.02

-0.04

-0.06

S&P500 return quantile

0.02

0.04

•

•

••

•••

••••

••

•••

•••••••

•••••

••••

•••••••

•

•

•

•

•

•

••

•

••••

••••••

••••••••

•••••••

•••••••

••••••

•••••••

•••••

•••••••••

•••••••••

•

•

•

•

•••••

•••••

•••••

••••••••

•••

••

•• •

•

•

•

-3

-2

-1

0

Normal quantile

1

C.I.

[0.1895, 0.2422]

[0.1950, 0.2322]

2

3

4. Estimating equations

A natural estimator for θ is determined by the estimating equation

n

1 X

b ) = 0.

m(Xi, θ

n i=1

4.1 Estimation via estimating equations

Let X1, · · · , Xn be i.i.d. from a distribution F . We are interested

in some characteristic θ ≡ θ (F ), which is determined by equation

E{m(X1, θ )} = 0,

where θ is q × 1 vector, m is a s × 1 vector-valued function.

For example,

Obviously, in case F is in a parametric family and m is the score

b is the ordinary MLE.

function, θ

b may be uniquely determined by (11)

Determined case q = s: θ

Underdetermined case q > s: the solutions of (11) may form a

(q − s)-dimensional set

θ = EX1 if m(x, θ) = x − θ,

θ = E(X1k ) if m(x, θ) = xk − θ,

θ = P (X1 ∈ A) if m(x, θ) = I(x ∈ A) − θ,

θ is the α-quantile if m(x, θ) = I(x ≤ θ) − α.

Example 5. Let {(Xi, Yi), i = 1, · · · , n} be a random sample. Find

a set of estimating equations for estimating γ ≡ Var(X1)/Var(Y1).

In order to estimate γ, we need to estimate µx = E(X1), µy =

E(Y1) and σy2 = Var(Y1). Put θ τ = (µx, µy , σy2, γ), and

m1(X, Y, θ ) = X − µx,

(11)

m2(X, Y, θ ) = Y − µy ,

m3(X, Y, θ ) = (Y − µy )2 − σy2,

m4(X, Y, θ ) = (X − µx)2 − σy2γ,

and m = (m1, m2, m3, m4)τ . Then E{m(Xi, Yi, θ )} = 0, leading to

the estimating equation

n

1 X

m(Xi, Yi, θ ) = 0,

n i=1

b for θ .

the solution of the above equation is an estimator θ

Remark. Estimating equation method does not facilitate hypothesis tests and interval estimation for θ .

Overdetermined case q < s: (11) may not have an exact solution,

approximating solutions are sought. One such an example is socalled the generalised method of moments estimation which is

very popular in Econometrics.

4.2 EL for estimating equations

Aim: construct statistical tests and confidence intervals for θ

The profile empirical likelihood function of θ :

L(θ ) = max

n

Y

i=1

n

n

X

X

pi pi m(Xi, θ ) = 0, pi ≥ 0,

pi = 1

i=1

i=1

The following theorem follows from Theorem 2 immediately.

Theorem 5. Let X1, · · · , Xn be i.i.d., m(x, θ ) be an s × 1 vectorvalued function. Suppose

E{m(X1, θ 0)} = 0,

Then as n → ∞,

in distribution.

Var{m(X1, θ 0)} 6= 0.

−2 log{L(θ 0)} − 2n log n → χ2

s

The theorem above applies in all determined, underdetermined

and overdetermined cases.

Remarks (i) In general L(θ ) can be calculated using the method

for EL for multivariate means in §3.1, treating m(Xi, θ ) as a random vector.

Let X1, · · · , Xn be i.i.d. For a given α ∈ (0, 1), let

m(x, θα) = I(x ≤ θα) − α.

b which is the solution of

(ii) For θ = θ

Then E{m(Xi, θα)} = 0 implies θα is the α quantile of the distribution of Xi. We assume the true value of θα is between X(1) and

X(n).

n

1 X

b ) = 0,

m(Xi, θ

n i=1

b ) = (1/n)n.

L(θ

Example 6. (Confidence intervals for quantiles)

The estimating equation

(iii) For θ determined by E{m(X1, θ )} = 0, we will reject the null

hypothesis H0 : θ = θ 0 iff

log{L(θ 0)} + n log n ≤ −0.5χ2

s,1−α .

(iii) An (1−α) confidence set for θ determined by E{m(X1, θ )} = 0

is

{θ

log{L(θ )} + n log n > −0.5χ2

s,1−α }

n

X

i=1

entails

m(Xi, θbα) =

n

X

i=1

I(Xi ≤ θα) − nα = 0

θbα = X(nα),

where X(i) denotes the i-th smallest value among X1, · · · , Xn. We

assume nα is an integer to avoid insignificant (for large n, e.g.

n = 100) technical details.

In fact L(θα) can be computed explicitly as follows.

Let

L(θα) = max

n

n Y

i=1

X

n

pi i=1

pi ≥ 0,

piI(Xi ≤ θα) = α,

n

X

i=1

o

pi = 1 .

An (1 − β) confidence interval for the α quantile is

Θα = {θα log{L(θα)} > −n log n − 0.5χ2

1,1−β }.

Note L(θbα) = (1/n)n ≥ L(θα) for any θα. It is always true that

θbα ∈ Θα.

Let r = r(θα) be the integer for which

X(i) ≤ θα for i = 1, · · · , r, and

X(i) > θα for i = r + 1, · · · , n.

Thus

L(θα) = max

n

n Y

i=1

pi pi ≥ 0,

r

X

pi = α,

i=1

= (α/r)r {(1 − α)/(n − r)}n−r .

n

X

i=r+1

pi = 1 − α

o

5. Empirical likelihood for estimating conditional distributions

References on kernel regression:

Hence

Θα = {θα log{L(θ α)} > −n log n − 0.5χ2

1,1−α }

n(1 − α)

nα

= θα r log

+ (n − r) log

> −0.5χ2

1,1−α ,

r

n−r

which can also be derived directly based on a likelihood ratio test

for a binomial distribution.

• Simonoff, J. S. (1996).

Springer, New York.

Smoothing Methods in Statistics.

• Wand, M.P. and Jones, M.C. (1995).

Chapman and Hall, London.

Kernel Smoothing.

References on nonparametric estimation for distribution functions:

• Hall, P., Wolff, R.C.L. and Yao, Q. (1999). Methods for

estimating a conditional distribution function. Journal of the

American Statistical Association, 94, 154-163.

• Fan, J. and Yao, Q. (2003). Nonlinear Time Series: Nonparametric and Parametric Methods. Springer, New York. Sections 10.3 (also Section 6.5).

5.1 From global fitting to local fitting

Put β = (β1, · · · , βd)τ

Consider linear regression model

With observations {(Yi, Xi), 1 ≤ i ≤ n}, where Xi = (Xi1, · · · , Xid)τ ,

the LSE minimises

where ε ∼ (0, σ 2).

Y = X1β1 + · · · + Xdβd + ε,

(12)

This model is linear wrt unknown coefficients β1, · · · , βd as the

variable X1, · · · , Xd may be

• quantitative inputs

• transformations of quantitative inputs, such as log, squareroot etc

• interactions between variables, e.g. X3 = X1X2

• basis expansions, such as X2 = X12, X3 = X13, · · ·

• numeric or “dummy” coding of the levels if qualitative

inputs

n X

i=1

Yi − Xτi β

2

,

(13)

resulting to

b = (Xτ X)−1Xτ Y ,

β

where Y = (Y1, · · · , Yn)τ , and X = (X1, · · · , Xn)τ is an n×d matrix.

The fitted model is

b.

c = Xβ

Y

This is a global fitting, since the model is assumed to be true

b is obtained

everywhere in the sample space and the estimator β

using all the available data.

© Copyright 2026