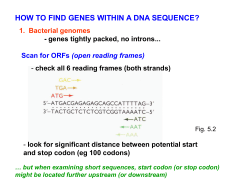

Summation: Principles of Bioinformatics

• Review the key ingredients of the Recipe for Bioinformatics.
• Use the Human Genome results as examples for understanding the importance of these ingredients in future genomics and bioinformatics problems.
• Integrate these principles with all the specifics you’ve learned this quarter: these principles are present in everything we’ve done in this class.

What is Genomics? $G = (MB)^n$
Genomics is any (molecular) biology experiment taken to the whole genome scale.
• Ideally in a single experiment.
• E.g. genome sequencing.
• E.g. DNA microarray analysis of gene expression.
• E.g. mass spectrometry protein mixture analyses: quantity, phosphorylation, etc.

Genomics Foundation: High Throughput Technology
• Automation: any human step is a bottleneck.
• Multiplexing & parallelization.
• Miniaturization.
• Read-out speed, sensitivity.
• “GMP” Q/A, reproducibility, “production line” mindset.

In Genomics every question is really an information problem
• In molecular biology, experiments are small and designed to test a specific hypothesis clearly and directly.
• In genomics, experiments are massive and not designed for a single hypothesis.
• Every biology question about genomics data corresponds to a computer science problem: how to find the desired pattern in a dataset.

Human Genome Sequencing
• The experimental part (the actual sequencing) was easy. It was the information problem that was hard.
• Assembly: the high frequency of repeats in the human genome can fool you into joining the wrong fragments.

Purely Sequence-Based Assembly
Celera believed they could assemble the whole human genome from shotgun sequence fragments in this way. But this approach failed. They had to use the public domain map data to resolve problems in their assembly.

Genome Annotation
• Genes are what biologists really want, not just the genome sequence.
• Unfortunately, most of the 32,000 gene annotations are based on gene prediction, not measured experimental evidence.
• It is likely that 50% of the reported genes are wrong, either in details (individual exons, boundaries) or entirely.
• The Drosophila annotation has recently been shown to be deeply flawed.
• An information problem that is still not solved.

Definition of Bioinformatics
Bioinformatics is the study of the inherent structure of biological information.
• Data-driven: let the data speak for themselves.
• Non-random patterns in the data.
• Measure significance of patterns as evidence for competing hypotheses.

Computational Challenges
• Cluster genes by expression pattern over the course of the cell cycle.
• Identify groups of genes that are coexpressed, co-regulated.
• Identify regulatory elements common to the promoters of these genes, which cause them to be expressed at the same time.

Solving the Information Problem
• Modeling the problem: choosing what to include, and how to describe it.
• Relating this to known information problems.
• Algorithms for solution.
• Complexity: amount of time & memory the algorithm requires.

Completeness Changes Everything
• In molecular biology cleverness is finding a way to answer a question definitively by ignoring 99.99% of genes. You can’t see them, so the experiment must exclude them.
• In genomics cleverness is discovering what becomes possible when you can see everything. We have to switch our deepest assumptions.

What specifically can you learn from Everything?
E.g. Protein function prediction:
• genomic neighbors method
• phylogenetic profiles
• domain fusion (Rosetta Stone method)
Microarray gene expression analysis:
• meaningful signal not in just a few genes

Example: Ortholog Prediction
• Orthologs: two genes related by speciation events alone. “The same gene in two species”; typically, same function.
• Paralogs: two genes related by at least one gene-duplication & divergence event.
Homology: an ortholog or a paralog?
• Experimentally very hard to answer.

Genomics Requires Statistical Measures of Evidence
• Evaluate competing hypotheses under uncertainty. Automatically?
• Based on statistical tendencies, not “proofs”.
• False positives, false negatives.
• The need for cross-validation.
• The need for experimental validation.
• Best role: experiment interpretation and planning.

Measures of Evidence
• SNP identification from sequence data.
• Genome annotation: gene evidence?

Keys
• Explicit, realistic likelihood models, priors measured from tons of real data.
• Explicit evaluation of alternative models.
• Real posteriors, w/ measures of uncertainty.

Integrating Independent Evidence
• Typically, a calculation works with one kind of data; hard to integrate very different data.
• Likelihood models provide an easy way to integrate many different types of data: if they are really different (independent), just multiply their likelihoods.
• Independence; factorization!

Statistical Problems
• Microarray analysis: hierarchical clustering?
• Genome annotation: gene prediction?
• COGs: no statistics at all…
• Protein function prediction: distance metrics instead of probabilistic modeling, no posteriors.

From Reductionism to Systems Analysis
• Mol. Biol.: dissect a complex phenomenon into its smallest pieces; characterize each.
• Very hard to put the pieces back together again: given AB, AC: A+B+C = ?
• Genomics: the cell as test-tube. Able to see A+B+C (+D+E+…) working together.
• Study how all the components work together as a system. Study system behavior.

Cell-Cycle Regulated Genes by whole genome mArray

Automated Discovery of Cell Cycle Regulatory Elements

Rosetta Stone Assumption: Fusion of functionally-linked domains
[Figure: domain arrangement of A and B in organism 1 compared with A' and B' in organism 2.]
Implies proteins A and B may be functionally linked.

PHYLOGENETIC PROFILE METHOD

From Hypothesis-Driven to Data-Driven Science
• Mol. Biol.: can’t see 99.99% of genes, so use black-box logic based on controls: keep everything the same except for one small change. Isolate a specific cause-effect.
• In reality you rarely have the perfect control.
• Hypothesis driven: can only see what you look for: a few genes, a few controls.
• Interpretable: ask a YES-NO question.

From Hypothesis-driven to Data-driven Science
• Genomics: measure all genes at once.
• Don’t have to assume a hypothesis as basis for designing the experiment.
• Objective: let the data speak for themselves.
• Reality: vast amounts of data, very complex, hard to interpret.
“System Science” or just “Stupid Science”?

Stupid Science: Data-driven Science Done Wrong
• No hypothesis.
• Assumptions: alternative models not explicitly enumerated, weighed.
• Statistical basis of model either neglected or only implicit (and therefore poor).
• No cross-validation: just one form of evidence.
• Greedy algorithms, sensitive to noise.
• Measures of significance weak or absent, both computationally and experimentally.

Data-driven Science Done Right
• Multiple competing hypotheses.
• Alternative models explicitly included, computed, to eliminate assumptions.
• Statistical models clear, well-justified.
• Multiple, independent types of evidence.
• Robust algorithms w/ well demonstrated convergence to global optimum.
• Rigorous posterior probability calculated for all possible models of the data. Priors derived from data. False +/- measured.

Implications of Data-driven Science
• To get strong posteriors that can distinguish multiple models, you need LOTS of data.
• Genomics is creating an unprecedented avalanche of data, opportunities.
• A change in the nature of data: “lost” data in old notebooks, journals, heads; vs. electronic databases that can be queried, analyzed.
• The end of (purely) human analysis.
• Don’t confuse observations & interpretations.

Bioinformatics as Prediction
• Given a protein sequence, bioinformatics would seek to predict its fold.
• Given a genome sequence, bioinformatics would seek to predict the locations and exon-intron structures of its genes.
• The ultimate test: make a blind prediction (when no experimental data

A new kind of Bioinformatics
• The massive experimental data produced by genomics projects has created a demand for a fundamentally different kind of bioinformatics, which we can characterize (with some exaggeration) as a mix of three principles:

CHEAT
• Don’t even try to predict anything.
• Just say, “Give us all your experimental data that contain the answer to this question, and THEN we’ll tell you what we think the answer is!”
• Focus is on statistically accurate measurement of the strength of the evidence for different interpretations of the experimental data.

Steal Other People’s Data
• The massive amount of data being produced in the public domain is an opportunity for heavy duty data-mining, using statistics to expose patterns that would otherwise be missed in this huge dataset.

Trust No One
What kind of data do we want?
• RAW EXPERIMENTAL DATA, ideally straight from the sequencing machines.
• INTERPRETED DATA is untrustworthy.
• Actually, bioinformatics PREDICTIONS are contaminating the experimental databases!

Chromatographic Evidence
[Figure: chromatogram traces for ESTs Hs#S785496 (zu42c08.r1) and Hs#S1065649 (oz03ho7.x1*).]

Science by Computer?
• No human scientist will ever look at all these data.
• To make discoveries in these data, scientific judgment of evidence must be formalized as a computation.
• Computational inference about hidden states H from observable states O, using Bayes’ Law:

$$p(H \mid O) = \frac{p(O \mid H)\, p(H)}{\sum_{h} p(O \mid h)\, p(h)}$$

Here $p(H \mid O)$ is the posterior, $p(O \mid H)$ the likelihood, and $p(H)$ the prior; the denominator sums over all hidden states $h$.

Diversity Kills Bayes’ Law
• Posterior probability p(M|obs) assumes all observations came from one model.
• E.g. gene prediction: predict “best gene structure”. Completely ignores possibility of alternative splicing.
• What if there are multiple, different models in reality?
Observations will appear contradictory…
• Must treat world as a hidden mixture of models: don’t know how many; don’t weight

When data is Mixed Up…
No correlation?
[Figure: scatter plot with height and weight axes.]

Real Results are Hidden
Good correlation within each group!
[Figure: height and weight scatter plot with baseball players and basketball players shown as separate groups.]

…or Completely Wrong
[Figure: height and weight scatter plot for sumo wrestlers and basketball players, with the overall correlation line.]

Mixture Evidence must be convincing!
Or we could arbitrarily split up the data any way we like, to generate any desired (ridiculous) conclusion!

Not just one Genome! A Hidden Mixture
• Generalize linear model S to partial order graph with hidden edge probabilities $r_{ij}$.
[Figure: partial order graph over nodes S1 to S11, with a SNP branch, a splice branch, and edge probabilities r67 and r69.]
Cf. Gene Prediction: assume only one model possible (no alternative splicing), so treat $r_{ij}$ as binary (0 or 1).

Evidence Confidence
[Figure: two alternative sequence paths, one with edge probability r and the other with 1-r.]
• Odds ratio that a feature exists:

$$\log \frac{p(r > 0 \mid \text{obs})}{p(r = 0 \mid \text{obs})} = \log \frac{\int_0^1 p(\text{obs} \mid r)\, p(r)\, dr}{p(\text{obs} \mid r = 0)\, \Pr(r = 0)}$$

• Does the probability of the observations drop catastrophically when we eliminate a given model feature (i.e. set its r = 0)?
• That means there are some observations that cannot be explained well any other way! This is strong evidence.
[Figure: branch with edge probabilities t and 1-t.]
$p(\text{obs} \mid t > 0) = 10^{-3}$, $p(\text{obs} \mid t = 0) = 10^{-7.2}$
Evidence Confidence: LOD value of 4.2

Sorting out EST complexity
• Need to allow for real divergences within an alignment, e.g. chimeras, paralogs, alt. splicing…
• Detect clustering errors by dividing alignment into groups of divergent sequences (possible paralogs).
• Apply graph theory (mathematics of branching structures) to deal with this.
• Developed new multiple sequence alignment method to do this: Partial Order Alignment.
• Two cases: with genomic sequence, or

Linear Alignment: assumes NO Structural Divergences
[Figure: alignment of cluster AA702884 showing a C vs. T polymorphism.]
Novel SNP, not previously identified.
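The Bayes' Law and LOD calculations from the slides above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `posterior` and `lod_score` are hypothetical, the LOD is computed as a plain log10 likelihood ratio (matching the worked numbers on the slide, with the prior terms left out), and the equal priors used for the posterior are an assumption made for the example, not a value from the lecture.

```python
import math


def posterior(likelihoods, priors):
    """Bayes' law over a discrete set of hidden states.

    likelihoods[h] is p(O | h) and priors[h] is p(h).
    Returns p(h | O) for every hidden state h.
    """
    joint = {h: likelihoods[h] * priors[h] for h in likelihoods}
    evidence = sum(joint.values())  # sum over all hidden states
    return {h: value / evidence for h, value in joint.items()}


def lod_score(p_obs_with_feature, p_obs_without_feature):
    """Log10 ratio of the likelihoods with and without the feature."""
    return math.log10(p_obs_with_feature) - math.log10(p_obs_without_feature)


# Worked numbers from the slide:
# p(obs | t > 0) = 10^-3 and p(obs | t = 0) = 10^-7.2.
p_with = 10 ** -3
p_without = 10 ** -7.2

lod = lod_score(p_with, p_without)

# Assumption for illustration only: equal priors on the two hypotheses.
post = posterior(
    {"feature": p_with, "no_feature": p_without},
    {"feature": 0.5, "no_feature": 0.5},
)

print(f"LOD = {lod:.1f}")
print(f"p(feature | obs) = {post['feature']:.5f}")
```

With these inputs the likelihood ratio reproduces the slide's LOD value of 4.2. Changing the priors shifts the posterior but not the likelihood ratio, which is one reason to report the two separately.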
Linear MSA
• simple assembly
• substitutions
• simple indels

Major Divergences within an Alignment: “Partial Order”
Branching
• multiple domains
• chimeric sequences
• paralogous genes
• alternative splicing or polyadenylation
Loops
• not simple indels
• alternative splicing
• paralogous genes
• multiple domains

Find Optimal Traversals
Use graph theory to find the minimum number of traversals needed to completely encode the alignment.
[Figure: one graph completely encoded by a single traversal; another that can only be encoded by two distinct traversals.]
Assign each EST to the traversal that encodes it.

Separation of Paralogous Groups
[Figure: bar chart “Partial Order structure in Unigene”: number of Unigene clusters (0 to 4500) vs. number of distinct bundles (1 to 10).]
Most Unigene clusters contain mutually inconsistent sequences (e.g. branching, correlated substitutions suggesting paralogs, etc.)

Pairwise Multiple Sequence Alignment
$O(N^2)$ pairwise distances; find minimum spanning tree; iteratively align via shortest edges.
[Figure: tree with labels 1 to 4.]

Multiple Domains
Proteins may share a domain, but differ elsewhere:
One domain may be observed in many proteins.
One protein may contain many domains.
As a linear MSA: Should it be stored as... Or as...
In linear MSAs, indel placement is often arbitrary. This leads to arbitrary differences in gap penalties charged in later alignments...

PO Alignment of Multi Domain Sequences
[Figure: partial order alignment of MATK, ABL1, GRB2, and CRKL through their KINASE, SH3, and SH2 domains.]

Data Convergence: the Genome is the Glue
• Biology has become highly specialized, fragmented: natural result of reductionism.
• But ultimately most activities attach to a gene or group of genes.
• Because of evolution, genes connect to each other through orthology (across species) and paralogy (duplication events).
• Discovery is connecting previously unrelated facts about phenotypes and causes.

Examples
• Human proteins work in yeast. Use yeast to figure out protein-protein interactions…
• Drosophila get high on cocaine.
Use them to study addiction, therapies.
• C. elegans on Prozac...
• Spiders build crazy webs on caffeine...

Expansion of Gene Families
[Figure: human vs. mouse comparison.]

Star Topologies: $O(N^2)$ power in $O(N)$ links
[Figure: star diagram with labels: genes, mutants, regulatory sites, phenotypes, alternative splicing, model organisms, activities, ligands, expression, domains, proteins, polymorphisms, screens, development, mapping, disease associations, folds, motifs.]

Genomics & Bioinformatics
• Incredible increase in experimental data production is making possible entirely new analyses.
• Bioinformatics required for interpretation of the meaning of the raw data.
• A lot of discovery is possible, but…

Evidence Matters!
• The genome is a very complex place.
• Every possible trap for naïve bioinformatics analysis is actually there: repeats, paralogs, polymorphisms.
• Rigorous statistical measurement of our confidence is the only thing that will keep us from making silly mistakes.

Sources of Uncertainty
Type of errors caused: (+) false positive, (-) false negative

Experimental Factors
• RT / PCR artifacts: (+) in methods that simply compare ESTs.
• Chimeric ESTs: (+) in methods that don’t screen for pairs of mutually exclusive splices.
• Genomic contamination: (+) in methods that don’t screen for fully valid splice sites (which requires genomic mapping, intronic sequence).
• EST orientation error, uncertainty: (+/-) in methods that don’t correct misreported orientation, or don’t distinguish overlapping genes on opposite strands.
• Sequencing error: (+/-). Single pass EST sequencing error can be very high locally (e.g. >10% at the ends). Need chromatograms.
• EST fragmentation: Where ESTs end cannot be treated as significant.
• EST coverage limitations, bias: (-). Most genes have very few ESTs, from even fewer tissues. The main barrier to alternative splice detection.
• Genomic coverage, assembly errors: (-) in methods that map ESTs on the genome. Short contigs may cause >25% false negatives.
Modrek & Lee, Nature Genetics (in press).
Bioinformatics Factors
• Alignment size limitations: (-) in methods that can’t align >$10^2$, >$10^3$ sequences.
• Alignment degeneracy: (+/-). Alignment of ESTs to genomic is frequently degenerate around splice sites.
• “Pathological” assemblies: (+/-). What should assembly programs do when the assembled reads disagree in regions (e.g. alt. splicing)? Programs vary.
• Non-standard splice sites? (+) in methods that don’t fully check splice sites; (-) in methods that do restrict to standard splice sites.
• Arbitrary cutoff thresholds: (+/-) in methods that use cutoffs (e.g. “99% identity”).
• Rigorous measures of evidence: (+/-). How can the strength of experimental evidence for a specific splice form be measured rigorously?
• Mapping ESTs to the genome: (-) in methods that map genomic location for each EST.
• Paralogous genes: (+) in all current methods, but mostly in those that don’t map genomic location or don’t check all possible locations.

Biological Interpretation Factors
• Defining the coding region: Predicting ORF in novel genes; splicing may change ORF.
• Predicting impact in UTR: Relatively little has been proved for UTR effects.
• Predicting impact in protein: Motif, signal, domain prediction, and functional effects.
• Assessing and correcting for bias: Our genome-wide view of function is under construction. Until then, we have unknown selection bias.
• Spliceosome errors? Is splicing perfect? I.e. does it only make correct forms?
• What’s truly functional? Just because a splice form is real (i.e. present in the cell) doesn’t mean it’s biologically functional. Conversely, even an mRNA isoform that makes a truncated, inactive protein might be a biologically valid form of functional regulation.

© Copyright 2026