Total of this assignment is 220pts, but 100% =... assignment is worth 7%. Some solutions below are just sketches.

CS/SE 2C03. Sample solutions to the assignment 3.

Total of this assignment is 220pts, but 100% = 195 pts. There are 25 bonus points. Each

assignment is worth 7%. Some solutions below are just sketches.

If you think your solution has been marked wrongly, write a short memo stating

where marking in wrong and what you think is right, and resubmit to me during

class, office hours, or just slip under the door to my office. The deadline for a

complaint is 2 weeks after the assignment is marked and returned.

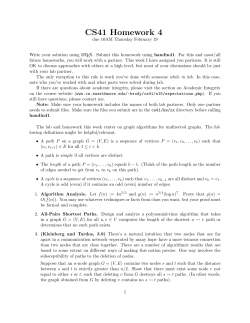

1.[20] Consider the following graph

4

3

h

5

a

2

b

3

5

f

1

3

2

c

5

4

5

3

l

1

j

2

4

7

i

3

3

e

2

d

1

k

4

a.[10] For the above directed graph use Bellman-Ford algorithm to find shortest paths

from a to the other vertices. Give both paths and distances. Give all steps of the

algorithm.

1

Solution:

b.[10] For the above directed graph use Floyd’s algorithm to find the shortest distances

between all pairs of vertices. Also construct the matrix P that allows to recover

the shortest paths. Recover the paths from a to d and from f to c. Give all steps of

the algorithm.

2

3

4

5

6

7

8

9

10

2.[10] Suppose characters a, b, c, d, e, f, g, h, i, j have probabilities 0.02, 0.02,

0.03, 0.04, 0.05, 0.10, 0.10, 0.13, 0.20, 0.31, respectively. Construct an optimal

Huffman code and draw the Huffman tree. Use the following rules:

a. Left: 0, right: 1

For identical probabilities, group them from the left to right.

What is the average code length?

1.0

0

1

0.6

0

0.29

0

1

Code

Length

probability

average

0

0.03

1 c

0.02

a

0.02

b

a

000000

6

0.02

0.12

b

000001

6

0.02

0.12

1

0.1

f

0.2

i

0.1

g

0.09

0

1

0

0.04

0.13

h

1

0.07

1

0

0.31

0.2

j 0

1

0.16

0

0.4

1

0.04

d

c

00001

5

0.03

0.15

0.05

e

d

00010

5

0.04

0.2

Total average =

e

00011

5

0.05

0.25

F

100

3

0.1

0.3

g

101

3

0.1

0.3

h

001

3

0.13

0.39

i

11

2

0.2

0.4

j

01

2

0.31

0.62

2.85

3.[10] Exercise 4 on page 190 of the course text.

Let S = a1a2 ... an, and S’ = b1b2 ... bk, where k ≤ n.

Take b1 and compare with a1 then with a2 etc., until a match b1 = ai1 , for some i1, is

found. If a match is not found, S’ is not a subsequence of S.

Then set up a new S’ as S’ = b2b3 ... bk, and new S as S= ai1+1ai1+2 ... an, and repeat the

same process. Then repeat it again for S’ = b3b4 ... bk, etc., until S’ becomes an empty

sequence. If a match is found for each bi, then S’ is a subsequence of S, otherwise it is not.

Since we are always moving to the right the complexity is proportional to n+k.

11

4.[10] Exercise 3 on page 107 of the course text.

In principle this is almost the same algorithm as for topological sorting. We need one

more variable, say RV, to store the number of vertices that have not been visited yet. At

each step this variable is decreased by one. If a graph contains a cycle, at some point

there will be no vertex without incoming arcs and RV will be bigger than 1. If this

happens we output ‘graph has a cycle’, otherwise a topological sorting is produced.

Note. This solution assumes that directed graphs are represented by having both the list

of standard adjacent vertices and the list of ‘reverse’ adjacent vertices.

5.[10] Exercise 2 on page 246 of the course text.

Only small modification of the standard algorithm is required. Note that in the procedure

‘Merge-and-Count’ from page 224, if ai = bj then NOTHING is done, just go to another

step of the procedure. We just have to replace ‘If bj is the smaller’ by ‘If bj > 2ai’. The

rest of the algorithm is the same.

6.[30] Exercise 3 on page 246 of the course text.

This is much more complex problem. The solution below is a complete full solution.

Such a level of details is not required for this course, I just provide it so you can see how

it should be done on, say grad level, or 4th year level.

Let's call a card that occurs more than n/2 times, a majority element. Evidently, there can

only be one or zero majority elements, since if there were two (elt1 and elt2), the number

of occurrences of elt1 and the number of occurrences of elt2 would sum to more than n.

The key observation we will use is the following:

If there is a majority element, then it is a majority in one of the two halves of the desk.

Otherwise the number of occurrences of it in the first half is at most n/4 and in the

second half, it's also n/4, so the total number of occurrences is at most n/2, but we need

at least n/2 + 1 occurrences to get a majority element.

This observation naturally leads to a recursive algorithm: Find the majority element of

each half, if one exists. At most we'll get two possible candidates, and we need to check

for each of them, if it occurs more than n/2 times.

A more formal description of the algorithm follows:

MajorityFinder(Cards):

if size(Cards) == 1: return (True, Cards[0])

if size(Cards) == 2: return (Cards[0]==[Cards[1], Cards[0])

12

(Maj1, MajElt1) = MajorityFinder(Cards[0:n/2])

(Maj2, MajElt2) = MajorityFinder(Cards[n/2+1:n])

if NOT (Maj1 OR Maj2): return (False, Cards[0])

if Maj1:

count = 0

for i = 0 to n-1:

if Equality(MajElt1, Cards[i]): count += 1

if count > n/2:

return (True, MajElt1)

if Maj2:

count = 0

for i = 0 to n-1:

if Equality(MajElt2, Cards[i]): count += 1

if count > n/2:

return (True, MajElt2)

return (False, Cards[0])

Proof of Running Time:

We can see that every time we call the algorithm, we recurse on each half, and then

Perform at most a linear amount of work (in the worst case, we could perform both of the

for loops, and the body of each for loop is a constant number of operations). We see that

in the base case (size = 2 or 1), we perform a constant amount of work.

This gives us the following recurrence:

T(n) = 2*T(n/2) + cn

T(2) = c'

T(1) = c''

This is the same recurrence as mergesort, and so we can see both that the algorithm

terminates, and that the running time is O(n log n) (Master Theorem).

Proof of correctness (bonus of 10 -15 for doing this) :

We use strong induction.

The inductive hypothesis is that on an input of a deck of n cards, Majority sort correctly

returns True and the identity of the majority element if one exists, and False if one does

not.

The base cases are n=1 (in which case there is always a majority, that the code clearly

returns), and n=2 (in which case there is a majority iff the two cards are identical, and

our code correctly returns True if this is the case and False if it is not. If the two

cards are identical, we can return either as they are the same).

13

Inductive step:

So if we have a deck of n+1 cards, with n≥2 we have that (n+1)/2 < n. This means that

For the recursive calls, we can use the inductive hypothesis to correctly determine

whether the first half and second half of the deck have majority elements.

Let us assume that there is no majority element. Then we want our code to return False,

with any arbitrary card id. The only way our code can return True, is if in one of the loop

bodies, we see count > n/2. But in each loop body we see that count is initialised to 0,

and incremented once everytime we see a card equal to a possibly majority element. This

means that if count > n/2 then a majority element exists, since we've seen it more than n/2

times. This can't happen in this case, so our code returns false.

So our code behaves correctly if there is no majority element.

Suppose there is a majority element. Then by the observation, and by the inductive

hypothesis, it must be either MajElt1 or MajElt2. Furthermore, if MajElt1 and MajElt2

are

the same, then it must be the case that either Maj1 or Maj2 are true. (Again, by the

observation, that says that a majority element of the whole deck

of cards must be a majority element of one half of the deck of cards). Any time we see a

majority element of one of the half decks, then we check if it is a majority element of the

full deck. We will return True iff the number of times it occurs in the full deck is

greater than n/2, which is exactly the condition that it is a majority element, and we

return the element that are are considering as a candidate for majority.

7.[10] Stanley Cup Series Odds. Suppose two teams, A and B, are playing a match to see

who first win n games for some particular n. The Stanley Cup Series is such a

match with n=4. Suppose A has a probability pi of winning the i-th game (so B has

1-pi probability of winning the i-th game). Let P(i,j) be the probability that if A

needs i games to win, and B needs j games, that A will eventually win the match.

The set of all P(i,j), i,j = 1,...,n, is called a ‘table of odds’. Use the dynamic

programming technique to design an algorithm that produces such table of odds.

Your algorithm should have time complexity not worse that O(n2). Show the

solution for n=4, p1 = 0.6, p2 = 0.5, p3 = 0.4, p4 = 0.3.

Solution:

First note that P(0,j) = 1 for all j, since this means that A has won the match

already, and P(i,0) = 0 for all i, since this means that B has won the match already.

Hence create an nn array of P(i,j) and fill it with 0 for all P(i,0) and with 1 for

all P(0,j).

If team A needs i games to win, then it has won n-i games already. Thus, we

obtain the following recurrence for the remaining i and j:

14

P(i,j) = pn-i P(i-1,j) + (1-pn-i ) P(i,j-1)

The rest is just a plain calculation.

8.[15] Exercise 2 on page 313 of the course text.

a.[5]

A possible counter example is very simple:

l

h

Week 1

10

21

Week 2

10

11

Since h2 = 11 < l1 + l2 = 20, the algorithm will produce 20 while the solution is 21.

b.[10] For i=1,2, 3 do it manually (i.e. consider all cases and find the maximium)

For i>3:

opt[i] = max(li + opt[i-1], hi + opt[i-2])

value = opt[n]

9.[20] Exercise 3 on page 314 of the course text.

a.[5]

A possible counter example is the following:

The algorithm gives 2 for the longest path from v1 to v5, while the correct answer

is 3. The ‘greedy rule’ in line 5: ‘for which j is as small as possible’ is the cause.

b.[15] The solution is to get rid of ‘greediness’. Note that the question only asks

for a number, a path could be a side-effect (as in shortest paths algorithms).

A possible solution:

15

To simplify the code assume that vertices are numbered by natural numbers

starting from 1, i.e. vi = i.

Set the array D[1..n] as D[i] = 0 for all a-1,...,n.

For w=1, ..., n do

While there is an edge out of the vertex w

For all vertices u such that the edge (w,u) exists

set D[u]=max{D[u],D{w}+1}

End For

Return D[n]

To recover the longest path we need to use an array of ‘predecessors’ (as in for

example Dijkstra’s algorithm). The modified algorithm is:

Set the array D[1..n] as D[i] = 0 for all a-1,...,n.

Set the array P[1..n] as P[i] = 0 for all a-1,...,n.

For w=1, ..., n do

While there is an edge out of the vertex w

For all vertices u such that the edge (w,u) exists

If D[u]<D[w]+1 then

begin D[u]=D[w]+1; P[u]=w endIf

End For

Return D[n]

Retrieve the longest path using standard ‘predecessor procedure’ starting from n.

Bonus of 10 pts for this.

10.[30]

Solutions to the programming questions will not be posted.

11.[30]

Solutions to the programming questions will not be posted.

16

© Copyright 2026