Wisconsin Educator Effectiveness System Developmental Pilot

Process Manual for Teacher Evaluation of Practice
DRAFT June 27, 2012

Dear Wisconsin Education Leaders:

The great state of Wisconsin has been recognized as an educational leader in this nation for some time, as our work has always been focused on student achievement. It’s no surprise that, as a nation, educational reform initiatives are directed at teacher quality, as it is the most significant factor impacting student achievement. Wisconsin’s record of educational achievement is the result of the hard work of so many quality educators and education leaders to improve outcomes for all students.

For the past two years, the state has been working to develop a new evaluation system for teachers and principals – a system that focuses on professional growth and student achievement. Together, stakeholder groups representing teachers, administrators, school boards, higher education, and others have developed the Wisconsin Educator Effectiveness System, which is grounded in the 2011 Interstate Teacher Assessment and Support Consortium (InTASC) Model Core Teaching Standards and, for principals, the 2008 Interstate School Leaders Licensure Consortium (ISLLC) Educational Leadership Policy Standards. The implementation of this evaluation system represents the best thinking of stakeholders statewide.

This Wisconsin Educator Effectiveness Process Guide is an essential tool to ensure the success of this effort. The intent of the Educator Effectiveness System is to support educators in professional development for the benefit of our students. In addition to this process guide, training and ongoing professional development will strengthen district implementation as the state works toward successful statewide implementation of the system in 2014-15. This work represents a significant change for our educational system, and a transformation such as this takes time and practice.
This gradual implementation will allow schools to be well supported and successful, and will provide feedback opportunities that help to inform the refinement of the components as we near statewide implementation. Continued communication and feedback from districts and stakeholder groups will help the state of Wisconsin to develop one of the best evaluation systems in the country.

Sincerely,
Tony Evers, PhD
State Superintendent

TABLE OF CONTENTS

Letter from State Superintendent Tony Evers
Developmental Pilot Introduction
Section 1: The Teacher Evaluation Process at a Glance
Section 2: Classroom Practice Measure - Framework for Teaching
Section 3: The Teacher Practice Evaluation Process – Steps, Tasks and Forms
    Orientation
    Self-Reflection and Educator Effectiveness Plan
    Evaluation Planning Session
    Observations and Evidence Collection
    Pre- and Post-Observation Feedback
    Rating of Practice
    Final Evaluation Conference
    Use of Evaluation Results
    Developmental Pilot Evaluation Process Responsibilities
Section 4: Evaluation of Developmental Pilot
    Purpose and Basic Design
    Participating Districts’ Roles in the Evaluation of the Developmental Pilot
    Teacher Evaluation During the Developmental Pilot
Section 5: Definitions
Section 6: Frequently Asked Questions
Appendices
    APPENDIX A: Wisconsin Framework for Educator Effectiveness Design Team Report and Recommendations
    APPENDIX B: Danielson 2011 Framework for Teaching Evaluation Rubric
    APPENDIX C: DRAFT Wisconsin Teacher Self-Rating Form
    APPENDIX D: DRAFT Wisconsin Teacher Educator Effectiveness Plan
    APPENDIX E: DRAFT Wisconsin Teacher Observation/Artifact Form
    APPENDIX F: DRAFT Wisconsin Teacher Pre-Observation (Planning) Form
    APPENDIX G: DRAFT Wisconsin Teacher Post-Observation (Reflection) Form
    APPENDIX H: DRAFT Wisconsin Teacher Final Evaluation Form
    APPENDIX I: Evidence Sources

DEVELOPMENTAL PILOT INTRODUCTION

Thank you for participating in the Developmental Pilot of the process for evaluating teaching practice.
Your involvement in this pilot is a critical part of learning how this evaluation process is carried out and can potentially be improved. The pilot builds on a six-month design process carried out by Wisconsin educators. The focus this year is only on the teacher professional practice components of the Educator Effectiveness System, which include the use of a rubric, evidence sources, and a process and timeline for carrying out evaluation activities. This pilot does not include the outcome measures that will be part of the full Educator Effectiveness System. The outcome measures will be pilot tested in 2013-14 (for more information, see Wisconsin Framework for Educator Effectiveness Design Team Report and Recommendations, Appendix A).

This guide is organized into six sections to help evaluators and teachers as they test the process for evaluating teaching:

Section 1 provides a brief overview of the evaluation process
Section 2 gives an overview of the 2011 Framework for Teaching©, the rubric for teacher professional practice evaluation
Section 3 provides an in-depth description of the process for teacher evaluation
Section 4 describes the Developmental Pilot
Section 5 lists terminology and definitions of key terms used in this guide
Section 6 answers frequently asked questions

SECTION 1: THE TEACHER EVALUATION PROCESS AT A GLANCE

The Wisconsin Teacher Evaluation system is structured on a performance management cycle. Figure 1 identifies the key components in the cycle.

[Figure 1: Teacher Evaluation Cycle. Components shown in the cycle: Orientation; Self-Reflection and Educator Effectiveness Plan; Evaluation Planning Session; Observations and Evidence Collection; Pre- & Post-Observation Discussions; Rating of Practice; Final Evaluation Conference; Use of Evaluation Results.]

Orientation: Teacher and evaluator review the evaluation policy and procedures, evaluation rubrics, timelines, and forms.
Self-Reflection and Educator Effectiveness Plan: Teacher reviews the Framework for Teaching, self-assesses, sets goals, and completes the Educator Effectiveness Plan (EEP).
Evaluation Planning Session: Teacher and evaluator meet to review EEP and set evidence collection schedule.
Observations and Evidence Collection: Throughout the year, teachers are observed and other evidence is gathered by teachers and evaluators for the evaluation. Teachers should receive ongoing feedback.
Pre- and Post-Observation Discussions: At least one observation includes a pre-observation discussion and a post-observation discussion between teacher and evaluator.
Rating of Practice: Throughout the year, evaluator gathers evidence to rate practice according to the rubric. The ratings should occur after all evidence is collected for the relevant components and domains.
Final Evaluation Conference: Teacher and evaluator meet to review evidence collected, EEP, progress made on goals, and Professional Practice Rating.
Use of Evaluation Results: Teachers personalize professional development based on evaluation results. They set new goals for the following year’s EEP.

SECTION 2: TEACHING EVALUATION OF PRACTICE BASED ON FRAMEWORK FOR TEACHING

Data for teacher evaluation will come from classroom observations and other evidence sources. Charlotte Danielson’s 2011 Framework for Teaching©, a research-based model for assessing and supporting teaching practice, has been adopted for evaluating teachers. The infrastructure and support for utilizing Danielson’s Framework will be accessed through Teachscape. Teachscape provides a combination of software tools, the latest research-based rubrics, electronic scheduling, and observation and data collection tools necessary for carrying out the evaluation process.

The Framework is organized into 4 domains and 22 components. Evidence can be gathered for all components, although only domains 2 and 3 are usually observed during a classroom lesson.
The full rubrics can be found in Appendix B. A complete description of the Domains and Components, as well as indicators and descriptions of performance levels, is available in The Framework for Teaching Evaluation Instrument, 2011 Edition, which participants in the Developmental Pilot will receive. The four Framework domains are as follows:

Domain 1: Planning and Preparation
Defines how a teacher organizes the content that the students are to learn, that is, how the teacher designs instruction. All elements of the instructional design (learning activities, materials, assessments, and strategies) should be appropriate to both the content and the learners. The components of domain 1 are demonstrated through the plans that teachers prepare to guide their teaching. The plan’s effects are observable through actions in the classroom.

Domain 2: The Classroom Environment
Consists of the non-instructional interactions that occur in the classroom. Activities and tasks establish a respectful classroom environment and a culture for learning. The atmosphere is businesslike; routines and procedures are handled efficiently. Student behavior is cooperative and non-disruptive, and the physical environment supports instruction. The components of domain 2 are demonstrated through classroom interaction and are observable.

Domain 3: Instruction
Consists of the components that actually engage students in the content. These components represent distinct elements of instruction. Students are engaged in meaningful work that is important to students as well as teachers. Like domain 2, the components of domain 3 are demonstrated through teacher classroom interaction and are observable.

Domain 4: Professional Responsibilities
Encompasses the teacher’s role outside the classroom. These roles include professional responsibilities such as self-reflection and professional growth, in addition to contributions made to the schools, the district, and to the profession as a whole.
The components in domain 4 are demonstrated through teacher interactions with colleagues, families, and the larger community.

Framework for Teaching

Domain 1: Planning and Preparation
    1a Demonstrating Knowledge of Content and Pedagogy
    1b Demonstrating Knowledge of Students
    1c Setting Instructional Outcomes
    1d Demonstrating Knowledge of Resources
    1e Designing Coherent Instruction
    1f Designing Student Assessments

Domain 2: Classroom Environment
    2a Creating an Environment of Respect and Rapport
    2b Establishing a Culture for Learning
    2c Managing Classroom Procedures
    2d Managing Student Behavior
    2e Organizing Physical Space

Domain 3: Instruction
    3a Communicating With Students
    3b Using Questioning and Discussion Techniques
    3c Engaging Students in Learning
    3d Using Assessment in Instruction
    3e Demonstrating Flexibility and Responsiveness

Domain 4: Professional Responsibilities
    4a Reflecting on Teaching
    4b Maintaining Accurate Records
    4c Communicating with Families
    4d Participating in a Professional Community
    4e Growing and Developing Professionally
    4f Showing Professionalism

The Framework for Teaching© defines four levels of performance for each component. The levels of performance describe teaching practice for a specific lesson (not the teacher). The levels of performance are defined as follows:

Ineffective (Level 1): Refers to teaching that does not convey understanding of the concepts underlying the component. This level of performance is doing harm in the classroom.

Minimally Effective (Level 2): Refers to teaching that has the necessary knowledge and skills to be effective, but its application is inconsistent (perhaps due to recently entering the profession or recently transitioning to a new curriculum, grade level, or subject).

Effective (Level 3): Refers to successful, professional practice. The teacher consistently teaches at a proficient level. It would be expected that most experienced teachers would frequently perform at this level.

Highly Effective (Level 4): Refers to professional teaching that innovatively involves students in the learning process and creates a true community of learners. Teachers performing at this level are master teachers and leaders in the field, both inside and outside of their school.

Evidence of teaching practice relative to the Framework for Teaching components will be gathered through classroom observations, artifacts such as student work samples and logs of parent communications, and conversations about practice with the evaluator.

Teachers will typically demonstrate varying degrees of proficiency on the different components. This variation is expected. Perfection may be the ideal, but no one can perform at the highest levels all of the time. New teachers may perform at the basic (Minimally Effective) level most of the time while working toward proficiency. Experienced teachers should be practicing at the proficient (Effective) level for most components most of the time. Teachers may be at the distinguished (Highly Effective) level on some components, while demonstrating proficiency in other areas.

The following is an example of the rating rubric with descriptions of levels of performance pertaining to component 1a: Knowledge of Content and Pedagogy, which falls under the domain of Planning and Preparation (see Appendix B for full rubric).

1a. Knowledge of Content and Pedagogy

Ineffective: In planning and practice, teacher makes content errors or does not correct errors made by students. Teacher’s plans and practice show little understanding of prerequisite relationships important to student’s learning of the content. Teacher shows little or no understanding of the range of pedagogical approaches suitable to student’s learning of the content.

Minimally Effective: Teacher is familiar with the important concepts in the discipline but displays lack of awareness of how these concepts relate to one another. Teacher’s plans and practice indicate some knowledge of prerequisite relationships, although such knowledge may be inaccurate or incomplete. Teacher’s plans and practice reveal a limited range of pedagogical approaches to the discipline or to the students.

Effective: Teacher displays solid knowledge of the important concepts of the discipline and the way they relate to one another. Teacher’s plans and practice reflect accurate knowledge of prerequisite relationships among topics and concepts. Teacher’s plans and practice reflect familiarity with a wide range of pedagogical approaches in the discipline.

Highly Effective: Teacher displays extensive knowledge of the important concepts of the discipline and the ways they relate both to one another and to other disciplines. Teacher’s plans and practice reflect knowledge of prerequisite relationships among topics and concepts and provide a link to necessary cognitive structures needed by students to ensure understanding. Teacher’s plans and practice reflect familiarity with a wide range of pedagogical approaches in the discipline, anticipating student misconceptions.

SECTION 3: THE TEACHER PRACTICE EVALUATION PROCESS – STEPS, TASKS AND FORMS

The following section details the eight steps in the process for Teacher Evaluation, including descriptions of each task, timing, teacher and evaluator responsibilities and necessary forms used during the Developmental Pilot.

Step 1: Orientation – Summer of 2012

The orientation process for the Developmental Pilot starts with training for teachers and evaluators. The Department of Public Instruction hosts this training for three full days during the summer of 2012. In addition to covering the expectations and formative aspects of the Developmental Pilot, the training familiarizes participants with Danielson’s 2011 Framework for Teaching© and its use in evaluations of teacher practice. The training also includes instruction on how to use Teachscape© software for communication, data collection, and accessing professional resources.
All teacher evaluators (typically principals) participating in the Developmental Pilot receive the training and complete additional on-line training. The on-line training allows evaluators to do practice evaluations at any time. It includes a certification assessment so that observers can show that their ratings match those of expert evaluators before using the 2011 Framework in “real-time.”

Step 2: Self-Reflection and Educator Effectiveness Plan - September

Each teacher participating in the Pilot will self-assess his or her practice during September by reflecting on practice and completing the Wisconsin Teacher Self-Rating Form (see Appendix C). The self-reflection informs the development of the Educator Effectiveness Plan (EEP). The self-reflection process begins with a review of the Framework for Teaching© and the completion of the Self-Rating Form.

After self-rating, the teacher creates two to three professional growth goals related to Framework components that were identified as areas for development. The two to three professional growth goals guide the evaluation activities for the year, but all of the components from the Framework for Teaching© will be assessed. The teacher then incorporates these professional growth goals into the creation of the EEP. The teacher includes the following information on the EEP (see Appendix D):

1. Two to three goals related to practice [1]
2. Domain related to the goal
3. Actions needed to meet the goals, including how the goals will be measured
4. Timelines for the completion of the goals
5. What evidence will be collected to demonstrate progress

Teachers should identify SMART goals: SMART goals are Specific, Measurable, Attainable, Realistic and Timely. These goals should be relevant to current practice and classroom needs.

[1] During the Developmental Pilot, the goals should relate to specific components of the 2011 Framework for Teaching.
In the future, the goals may also relate to school improvement goal(s); Professional Development Plan (PDP) goal(s); team goal(s) and/or Student Learning Objective (SLO) goal(s).

The teacher submits the completed EEP and the Self-Rating Form to the evaluator prior to the Evaluation Planning Session.

Step 3: Evaluation Planning Session – September or October

During September or October, the teacher meets with his or her evaluator in an Evaluation Planning Session. At this session, the teacher and evaluator collaborate to complete the following activities:

1. Review and agree upon the goals, actions, resource needs and measures of goal completion to meet the EEP
2. Set the evaluation schedule including scheduled observations and meetings and/or methods of collecting other sources of evidence (see Appendix I for descriptions of other sources)

Step 4: Observations, Evidence Collection & On-going Feedback – October through March

Observations and Evidence Collection take place from October through March. The teacher is observed by his or her evaluator [2]. The following are the minimum observations to be conducted:

Frequency                                                     Duration
1 announced observation                                       45 minutes or (2) 20-minute observations
1 unannounced observation                                     45 minutes or (2) 20-minute observations
3-5 informal and unannounced observations (walkthroughs)      At least 5 minutes

Other evidence shall be provided by the teacher as agreed upon in the Evaluation Planning Session, such as:

Lesson/unit plans
Classroom artifacts
    o Teacher-created assessments
    o Grade Book
    o Syllabus
Communications with families
    o Logs of phone calls/parent contacts/emails
    o Classroom newsletters
Logs of professional development activities

Verbal and/or written feedback should follow each observation. In addition to observations, other evidence identified during the Evaluation Planning Session is collected and shared during this period. The Observation/Artifact Form (see Appendix E) [3] is used to document evidence gathered pertaining to a teacher’s professional practice.

[2] For purposes of the pilot, the administrator will be the primary observer, but a peer mentor/evaluator may also be used. In future years, observations may be done by a combination of administrator and peer mentor.

Step 5: Pre- & Post-Observation Discussion - October through March

Discussions between teachers and evaluators take place throughout the year, and can be formal conferences or informal means of delivering feedback (written or verbal). At least one of the observations must include both a pre- and a post-observation discussion, which involves the use of the Pre-Observation (Planning) Form and the Post-Observation (Reflection) Form.

Teachers complete the Pre-Observation (Planning) Form (see Appendix F) in advance of the pre-observation discussion. This form helps shape the dialog of the pre-observation discussion and allows the teacher to “set the stage” for the lesson. The information allows the teacher to identify the context of the classroom, the specifics of the lesson focus and its intended outcomes. The teacher may submit this information in writing or come prepared to have a dialog with the evaluator during the pre-observation discussion. [4]

The Post-Observation (Reflection) Form (see Appendix G) also helps frame the dialog and resulting feedback from the observed lesson during the post-observation discussion. Both the teacher and evaluator can use the questions to identify areas of strength and suggestions for improvement. The post-observation discussion can also focus on classroom teaching artifacts (lesson plans, student work samples, etc.) that are related to the classroom observation.

Both the pre- and post-observation forms and discussions can also help provide evidence of reflective skills that are relevant to Domain 4 of the Danielson Framework for Teaching©.
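The minimum observation requirements in Steps 4 and 5 lend themselves to a simple checklist. The sketch below is illustrative only: the `Observation` record, its field names, and the `missing_requirements` function are hypothetical and are not part of the Wisconsin system or of Teachscape. It assumes that "45 minutes or (2) 20-minute observations" means one observation of at least 45 minutes or two of at least 20 minutes each, and that three walkthroughs of at least 5 minutes satisfy the "3-5" range.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One logged observation (hypothetical record, not a Teachscape type)."""
    announced: bool
    minutes: int
    walkthrough: bool = False      # informal, unannounced, short visit
    pre_discussion: bool = False   # a pre-observation discussion was held
    post_discussion: bool = False  # a post-observation discussion was held


def _formal_requirement_met(observations):
    """True if there is one observation of 45+ minutes or two of 20+ minutes."""
    long_enough = sum(1 for o in observations if o.minutes >= 45)
    at_least_20 = sum(1 for o in observations if o.minutes >= 20)
    return long_enough >= 1 or at_least_20 >= 2


def missing_requirements(observations):
    """Return the Step 4 and Step 5 minimums that are not yet met."""
    formal = [o for o in observations if not o.walkthrough]
    announced = [o for o in formal if o.announced]
    unannounced = [o for o in formal if not o.announced]
    walkthroughs = [o for o in observations
                    if o.walkthrough and not o.announced and o.minutes >= 5]

    missing = []
    if not _formal_requirement_met(announced):
        missing.append("announced observation")
    if not _formal_requirement_met(unannounced):
        missing.append("unannounced observation")
    if len(walkthroughs) < 3:
        missing.append("walkthroughs")
    if not any(o.pre_discussion and o.post_discussion for o in formal):
        missing.append("pre- and post-observation discussion")
    return missing


if __name__ == "__main__":
    log = [
        Observation(announced=True, minutes=45,
                    pre_discussion=True, post_discussion=True),
        Observation(announced=False, minutes=20),
        Observation(announced=False, minutes=5, walkthrough=True),
    ]
    print(missing_requirements(log))
    # ['unannounced observation', 'walkthroughs']
```

A check like this only tracks counts and durations; whether feedback followed each observation, and whether the evidence is adequate, remain judgments for the evaluator.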
Step 6: Rating of Practice – February through end of school year

Throughout the school year, evaluators collect and teachers provide evidence of teaching practice. This evidence is used to rate a teacher’s practice, using the rubric and appropriate levels of performance. Although evidence is collected throughout the year, evaluators should not make ratings of practice until adequate information is obtained to assess each component. This will likely occur during the second half of the school year. Evaluators will need to organize the observation/artifact forms used to record evidence and any notes compiled during the year (e.g., notes from the post-observation discussions and/or other feedback sessions). Once the evaluator determines that there is enough evidence for a component, the evaluator selects the rubric level that best matches the evidence of practice for that component.

[3] Observations and artifact collection using Teachscape will be accessed through the Teachscape ReflectLive feature.
[4] These forms will also be accessible using Teachscape ReflectLive.

Step 7: Final Evaluation and Final Evaluation Conference – April - June

The Final Evaluation Conference takes place during April, May or June. During this conference, the teacher and his/her supervising administrator meet to discuss achievement of the goals identified in the EEP and review collected evidence. The teacher submits the completed EEP prior to the Final Evaluation Conference. The administrator then provides written feedback for the goals and components identified in the EEP. In addition to the EEP, other collected evidence is used by the evaluator to rate each of the twenty-two components across the four domains. The evaluator then completes the Final Evaluation Form (Appendix H) and reviews it with the teacher during the Final Evaluation Conference. The teacher has the opportunity to comment on the final evaluation results on the Final Evaluation Form.
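Step 6 asks evaluators to hold off on ratings until there is evidence for each component, and Step 7 calls for a rating on all twenty-two components. A minimal sketch of that readiness check follows, using the component codes from the Framework for Teaching listing in Section 2. The dictionary layout, the `components_lacking_evidence` function, and the evidence-log format are assumptions made for illustration. The manual leaves "adequate" evidence to the evaluator's judgment, so the sketch only counts evidence items against a chosen minimum.

```python
# Component letters per domain, following the listing in Section 2.
FRAMEWORK = {
    1: "abcdef",  # Planning and Preparation
    2: "abcde",   # Classroom Environment
    3: "abcde",   # Instruction
    4: "abcdef",  # Professional Responsibilities
}

# Flat list of codes such as "1a", "2c", "4f" (22 in total).
COMPONENTS = [
    f"{domain}{letter}"
    for domain, letters in FRAMEWORK.items()
    for letter in letters
]

# Performance levels as named in this manual.
LEVELS = {
    1: "Ineffective",
    2: "Minimally Effective",
    3: "Effective",
    4: "Highly Effective",
}


def components_lacking_evidence(evidence_log, minimum=1):
    """List component codes with fewer than `minimum` evidence items.

    `evidence_log` is a hypothetical list of (component_code, note) pairs.
    """
    counts = {code: 0 for code in COMPONENTS}
    for code, _note in evidence_log:
        if code not in counts:
            raise ValueError(f"unknown component code: {code}")
        counts[code] += 1
    return [code for code in COMPONENTS if counts[code] < minimum]


if __name__ == "__main__":
    # Evidence exists for domains 1-3 only, so domain 4 is not ready to rate.
    log = [(code, "observation note") for code in COMPONENTS
           if not code.startswith("4")]
    print(components_lacking_evidence(log))
    # ['4a', '4b', '4c', '4d', '4e', '4f']
```

An empty result would suggest that every component has at least the chosen minimum of evidence, at which point a level from `LEVELS` could be recorded for each code.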
Step 8: Use of Evaluation Results – end of evaluation year, beginning of following year

Results from the evaluation process inform performance goals and the teacher’s Educator Effectiveness Plan for the following year. During the Developmental Pilot, NO evaluation results will be used for employment purposes or other high-stakes decision-making.

Developmental Pilot Evaluation Process Responsibilities

The following lists the responsibilities for Teachers and for the Supervising Administrator during the evaluation process.

Teacher responsibilities:

Actively participate in evaluation activities.
Attend the evaluation Orientation.
Reflect on practice and, using the 2011 Framework for Teaching©, complete the Self-rating of performance.
Based on the Self-rating of performance, identify 2-3 goals related to practice to include in an Educator Effectiveness Plan (EEP).
Submit the Self-rating of performance and the EEP to the evaluator prior to the Evaluation Planning Session.
Meet with evaluator for the Evaluation Planning Session, discuss actions needed to achieve goals identified in EEP, agree upon measures of goal completion, determine evidence sources; schedule observations and outline timing/process for other evidence collection.
For formal observations, be prepared for pre-observation and post-observation meetings. The teacher may submit this information in writing or come prepared to discuss the information.
Work collaboratively with the peer mentor or secondary observer (if applicable).
Provide the supervising administrator with other evidence as appropriate prior to Final Evaluation.
Prepare for the Final Evaluation and provide any evidence requested by the supervising administrator.
Meet with the supervising administrator for the Final Evaluation Conference, review EEP and goal achievement; sign off on Professional Practice Rating.
Use Evaluation results to inform performance goals and professional development planning for the following year.
Supervising Administrator responsibilities:

Attend training sessions and be certified in 2011 Framework for Teaching© evaluation.
Participate in the Orientation.
Review EEP prior to the Evaluation Planning Session.
Schedule and facilitate the Evaluation Planning Session, discuss actions needed to achieve goals identified in EEP, agree upon measures of goal completion; determine evidence sources; schedule observations and outline timing/process for other evidence collection.
Complete a minimum of one announced observation of 45 minutes or two 20-minute observations.
Complete a minimum of one pre-conference and post-conference observation with the teacher.
Complete one unannounced observation of 45 minutes or two 20-minute observations.
Complete 3-5 informal and unannounced observations (walkthroughs), of at least 5 minutes.
Provide constructive, written or verbal formative feedback within one week of the observations.
Monitor and review evidence collection throughout the year.
Prepare for and schedule the Final Evaluation Conference, review EEP and evidence collected, assign rating level for each component, and determine Professional Practice Rating.
Facilitate the Final Evaluation Conference using the Final Evaluation form; review goal achievement, provide formative feedback, identify growth areas for the following year, and provide Professional Practice Rating.

SECTION 4: EVALUATION OF DEVELOPMENTAL PILOT

Purpose and Basic Design

During the 2012-13 school year, the Developmental Pilot of the Wisconsin Educator Effectiveness System will be evaluated by researchers from the Wisconsin Center for Education Research (WCER) at UW-Madison. The purpose of the evaluation is to study the quality of implementation of certain aspects of the Educator Effectiveness System and to provide responsive feedback to stakeholders as these aspects are tested by schools and districts.
Parts of the evaluation will include analysis of implementation data, observations, surveys of teachers and principals, and interviews and focus groups of participating teachers, principals, and others. Topic areas will include understanding, attitudes, training received and needed, data utilization, and feedback on the pilot implementation. Since parts of the Educator Effectiveness System are still in development, the evaluation will not yet focus on impact on teacher effectiveness or student learning. The success of the evaluation will be determined by the pilot’s use of information obtained for improving the execution of the system to match its intended design. In subsequent years, the evaluation will gradually change to focus more on impact as the system’s aspects become more routinized.

Participating Districts’ Roles in the Evaluation of the Developmental Pilot

Districts and the individual educators participating in the Developmental Pilot are expected to help facilitate access to the data necessary for evaluating the Pilot. Data collection may include participating in individual or group interviews (focus groups), completing surveys, and/or sharing documents relevant to the system as requested by DPI and the evaluation team at WCER. This feedback will be critical as DPI works to learn from educators in the pilot and make needed adjustments in the System.

Since the Educator Effectiveness System is still in development, any outcomes, including educator evaluation ratings, should NOT be used for employment or other high-stakes decisions. If an educator is identified during the Pilot as having serious performance deficiencies, that individual should be removed from participation in the pilot study and the district should use its existing personnel support structures to address the situation.

Teacher Evaluation During the Developmental Pilot

Teachers will be evaluated by their supervising administrator (the principal or a designee).
Those districts piloting the teacher practice evaluation process will include a small number of teachers (about 3) and their evaluators (principal or associate principal) and peer evaluators.

SECTION 5: DEFINITIONS

The following are definitions for terminology relevant to the teacher practice evaluation system during the Developmental Pilot.

Announced observation – a formal and pre-scheduled observation. It may be preceded by a pre-observation discussion and followed by a post-observation discussion where verbal and/or written feedback is provided by the evaluator to the teacher.

Artifacts – forms of evidence that support an educator’s evaluation. They may include lesson plans, examples of student work with teacher feedback, professional development plans and logs of contacts with families. Artifacts may take forms other than documents, such as videos of practice, portfolios, or other forms of evidence.

Components – the descriptions of the aspects of a domain. There are 22 components in the 2011 Danielson Framework for Teaching©.

Developmental Pilot – the limited test of a system to further refine its components and processes. The Wisconsin Model Educator Effectiveness System is undergoing a Developmental Pilot in 2012-2013 in approximately 100 volunteer districts across the state.

Domains – There are four domains, or broad areas of teaching responsibility, included in the 2011 Framework for Teaching©: Planning & Preparation, Classroom Environment, Instruction, and Professional Responsibilities. Under each domain, 5-6 components describe the distinct aspects of a domain.

Educator Effectiveness System – the Wisconsin state model for teacher and principal evaluation, built by and for Wisconsin educators. Its primary purpose is to support a system of continuous improvement of educator practice (from pre-service to in-service) that leads to improved student learning. The Educator Effectiveness System is legislatively mandated by 2011 Wisconsin Act 166.
Educator Effectiveness Plan (EEP) – a document that lists the specific year-long goals for an educator, along with the actions and resources needed to attain these goals and the measures necessary to evaluate the progress made on them. The EEP also allows for a review of progress made on each goal at the end of the year and serves as the organizer for feedback given by the educator’s evaluator during the Final Evaluation Conference.

Evaluation Planning Session – a conference in the fall during which the teacher and his or her primary evaluator discuss the teacher’s Self-Rating and Educator Effectiveness Plan, agree upon goals and actions needed to meet goals, set an evaluation schedule and schedule any announced observations and the timing and process for other evidence collection.

Evaluation Rubric – an evidence-based set of criteria across different domains of professional practice that guide an evaluation. Practice is rated across four rating categories that differentiate effectiveness, with each rating tied to specific look-fors to support the ratings.

Evidence collection – the systematic gathering of evidence that informs an educator’s practice evaluation. In the Educator Effectiveness System, multiple forms of evidence are required to support an educator’s evaluation and are listed in this guide in Appendix I.

Final Evaluation Conference – the teacher and his/her evaluator meet to discuss achievement of the goals identified in the Educator Effectiveness Plan, review collected evidence, and discuss results and ratings.

Formative Evaluation – the systematic gathering of information with the purpose of understanding an educator’s strengths and weaknesses in order to improve teaching and learning.

Framework – the combination of the evaluation rubric, the collection and rating of evidence sources, and the process for evaluating an educator. The Framework is the basis for the evaluation process and the determination of an overall performance rating.
Goal setting/Professional growth goals – establishing practice-related goals is an important part of professional practice. Goals are set as educators prepare their Educator Effectiveness Plan, and they are monitored by the educator during the year.

Indicators/Look-fors – observable pieces of information for evaluators to identify or “look for” during an observation or other evidence gathering. Indicators are listed in the Sources of Evidence (Appendix I).

Inter-Rater Agreement – the extent to which two or more evaluators agree in their independent ratings of educators’ effectiveness.

Observations – one source of evidence informing the evaluation of a teacher. Observations may be announced (scheduled in advance, possibly with a pre- and/or post-observation conference) or unannounced, and formal (lengthy and with conferences) or informal (short and impromptu). Observations are carried out by the educator’s evaluator, who looks for evidence in one or more of the components of the Framework for Teaching© evaluation rubric.

Orientation – the first step in the Educator Effectiveness evaluation process. The Orientation takes place prior to or at the beginning of the school year. Educators receive training in the use of their professional practice frameworks, the related tools and resources, timelines for implementation, and expectations for all participants in the system.

Peer evaluator – a teacher who is trained to do informal observations. A peer evaluator may be a department head, grade-level lead, or other instructional leader. Peer evaluators are trained in the Framework and the Educator Effectiveness process.

Post-observation conference – a conference that takes place after a formal observation, during which the evaluator provides feedback verbally and in writing to the teacher.
Pre-observation conference – a conference that takes place before a formal observation, during which the evaluator and teacher discuss important elements of the lesson or class that might be relevant to the observation.

Student Learning Objectives (SLOs) – specific learning outcomes measuring student achievement and/or growth throughout the year. More details on Student Learning Objectives can be found in the Student Learning Objectives process manual.

Self-rating of performance – a self-assessment that teachers complete at the beginning of the year and review prior to each conference. The self-assessment asks educators to reflect on their past performance, relevant student learning data, prior evaluation data, and professional goals for the upcoming year.

Unannounced Observation – an observation that is not scheduled in advance. No pre-observation conference is held with an unannounced observation, but written or verbal feedback is expected within seven days.

Walkthrough – a short (5 minute minimum), informal, and unannounced observation of a teacher’s practice in the classroom.

SECTION 6: FREQUENTLY ASKED QUESTIONS

The following are some frequently asked questions specific to the teacher practice evaluation system during the Developmental Pilot. For a more complete listing of FAQs, please see [Web link]

1) What is the Educator Effectiveness System?
In 2011 the Wisconsin Educator Effectiveness System was endorsed within Wisconsin Act 166. Its purpose is to provide a fair, valid, and reliable state evaluation model for teachers and principals that supports continuous improvement of educator practice, resulting in improved student learning.

2) How was the Educator Effectiveness System developed?
State Superintendent Tony Evers convened an Educator Effectiveness System Development Team that included a broad representation of educators and education stakeholders to develop a framework for teacher and principal evaluation.
A full list of participating organizations is available on the DPI website. The Design Team’s main recommendations were embraced in Act 166.

3) How will the Educator Effectiveness System be implemented?
The Educator Effectiveness System will be implemented in 3 stages over 4 years:

Stage 1: 2011-12: Evaluation process developed for a) teacher evaluation of practice; b) teacher-developed Student Learning Objectives; c) principal evaluation of practice.

Stage 2: 2012-13: Developmental Pilot test of the 3 evaluation processes in school districts that have volunteered to participate and provide feedback for improvement. Principal School Learning Objectives will be tested in the spring of 2013.
2013-14: Full Pilot test of the Educator Effectiveness models for Teacher Effectiveness and Principal Effectiveness. These models will include the measures of practice and measures of student outcomes (to be developed in 2012-2013). This full pilot will occur in a sample of schools in all districts.

Stage 3: 2014-15: Educator Effectiveness System implemented statewide. The system will be fully implemented statewide, but will still be the subject of an external evaluation to address its impact and the need for any additional improvements.

4) Have other states done this?
Yes, many states have developed or are in the process of developing new teacher and principal evaluation systems that include both professional practice and student outcome measures. A number of states have passed legislation requiring the implementation of a statewide system and are on a timeline similar to Wisconsin’s.

5) How will staff understand the Educator Effectiveness System?
All teachers participating in the Pilot will receive the same comprehensive training as administrators and evaluators. A series of informational webinars will be released to participating districts throughout the pilot years for use by all stakeholder groups.
The Framework for Educator Effectiveness is available, and the process manuals for the Pilot Phase will be available, on the DPI Educator Effectiveness website.

6) Who will be evaluated using the new Educator Effectiveness System?
In 2012-13 principals and teachers will be evaluated. During 2012-13, teachers include PK-12 (prekindergarten through grade 12) core content/grade-level teachers and teachers of music, art, and physical education. Assistant/associate principals and teacher specialist positions will be added later, once the evaluation processes are adapted for those educators.

7) Who will evaluate teachers?
During the pilot stage (2012-14), an administrator will be the primary observer. A peer mentor/observer may also be utilized if agreed to by the teacher. Once the Educator Effectiveness System is fully implemented, observations may be made by a combination of administrators and peer mentors/observers.

8) How will evaluators be trained?
Prior to the implementation of the Educator Effectiveness System, evaluators (including peer mentors and observers involved in this process) will be required to complete a comprehensive certification training program approved by DPI. Certification will include training on bias and consistency (i.e., inter-rater agreement).

9) Will the Educator Effectiveness System be used to make employment and/or compensation decisions?
Not yet. During the pilot stage (2012-14), pilot participants will be evaluated for formative purposes. Data collected during the pilot stage will not be used to make employment decisions because the system is still in development. Once the system is fully developed and tested, districts may choose to apply the system to human resource decisions, including targeted professional development. Performance ratings will not be made public.

10) Do walkthroughs count as observations?
Yes.
The process manuals call for a series of observations, which include formal observations as well as informal and unannounced observations such as walkthroughs.

11) Does every observation need to have a pre- and post-conference?
No. Pre- and post-conferences are required for at least one formal observation. Teachers may request additional pre/post conferences with the evaluator or with a peer mentor/observer.

12) What will the evaluation cycle look like?
During the Pilot stage (2012-14), evaluation activities will be used for formative purposes only. Once fully implemented in 2014-15, the Educator Effectiveness System requires annual evaluations, with a summative evaluation once in a three-year cycle. The other two years will be formative, unless there is a determination that performance requires more comprehensive evaluation.

13) How will this evaluation system affect a teacher’s daily workload?
The Educator Effectiveness cycle (outlined in the Process Manual for Teacher Evaluation) calls for Orientation, an Evaluation Planning Session, a series of observations, and a Final Evaluation Conference with the evaluator. These events represent basic performance management processes that are common in high-performing organizations. They will be coordinated by the teacher and the evaluator/mentor/observer. Teachers will also be required to collect classroom artifacts as evidence of ongoing effectiveness. This process will be addressed during training for the Educator Effectiveness System.

14) What if a teacher does not agree with the evaluation?
During the developmental pilot, there will be no high-stakes decisions connected with the evaluation process. A teacher who disagrees with the evaluation of practice can comment on the disagreement on the final evaluation form. Most districts already have an appeals process for personnel evaluations; those that do not will need to develop one once the Educator Effectiveness System is fully implemented.
15) How will teachers and others provide feedback on the pilot experience?
An external study will be conducted with pilot districts to collect ongoing feedback through interviews, surveys, and document analysis. The DPI Educator Effectiveness work groups will use this feedback to make improvements to the system. A designated email address will be set up for ongoing feedback to DPI on the Educator Effectiveness System, though it is not intended to be used for responses to questions or technical support.

16) Based on the results of the pilot programs, will changes be made?
Yes. The Pilot stage is intended to improve the Educator Effectiveness System so that it meets its intended purpose: to provide a fair, valid, and reliable state evaluation model for teachers and principals that supports continuous improvement of educator practice, resulting in improved student learning.

17) Will all districts be required to implement the same system, or is there flexibility?
There is some flexibility. This system is intended to be fair and consistent across the state. While each district will have some flexibility in choosing standards and rubrics to assess teacher and principal professional practice, there will be similar features required to assess student and school outcomes (e.g., use of Student Learning Objectives and Value-Added Measures of achievement). All models must be approved through an equivalency process that will be defined by DPI.

18) Who should a district contact for technical advice?
There will be designated contacts at DPI for questions about the developmental pilot. Technical assistance questions can be presented to the DPI contact.
APPENDIX A: Wisconsin Framework for Educator Effectiveness Design Team Report and Recommendations

APPENDIX B: Danielson 2011 Framework for Teaching Evaluation Rubric (to come)

APPENDIX C: DRAFT Wisconsin Teacher Self-Rating Form

The self-rating process allows teachers to reflect on their practice and prior evaluations and prepare for the development of their Educator Effectiveness Plan. Using the Wisconsin Teaching Effectiveness Rubric, review the components within each domain and rate yourself accordingly. Based on that rating, identify an area of strength or an area for development related to that component.

Domain 1: Planning and Preparation
Rating columns: Ineffective, Minimally Effective, Effective, Highly Effective
1.a Demonstrating Knowledge of Content and Pedagogy
1.b Demonstrating Knowledge of Students
1.c Setting Instructional Outcomes
1.d Demonstrating Knowledge of Resources
1.e Designing Coherent Instruction
1.f Designing Student Assessments

Domain 1: Planning and Preparation – Strength or Area for Development
Based on the above ratings, identify a strength or area for development:
Why did you make this assessment (what evidence was used to make the assessment)?

Domain 2: The Classroom Environment
Rating columns: Ineffective, Minimally Effective, Effective, Highly Effective
2.a Creating Environment of Respect and Rapport
2.b Classroom Observations & Feedback
2.c Managing Classroom Procedures
2.d Managing Student Behavior

Domain 2: The Classroom Environment – Strength or Area for Development
Based on above rating, identify a strength or area for development:
Why did you make this assessment (what evidence was used to make the assessment)?
Domain 3: Instruction
Rating columns: Ineffective, Minimally Effective, Effective, Highly Effective
3.a Communication with Students
3.b Questioning and Discussion Techniques
3.c Engaging Students in Learning
3.d Using Assessment in Instruction
3.e Demonstrating Flexibility and Responsiveness

Domain 3: Instruction – Strength or Area for Development
Based on above rating, identify a strength or area for development:
Why did you make this assessment (what evidence was used to make the assessment)?

Domain 4: Professional Responsibilities
Rating columns: Ineffective, Minimally Effective, Effective, Highly Effective
4.a Reflecting on Teaching
4.b Maintaining Accurate Records
4.c Communicating with Families
4.d Participating in a Professional Community
4.e Growing and Developing Professionally
4.f Showing Professionalism

Domain 4: Professional Responsibilities – Strength or Area for Development
Based on above rating, identify a strength or area for development:
Why did you make this assessment (what evidence was used to make the assessment)?

Additional comments about ratings, strengths, or areas for development:

APPENDIX D: DRAFT Teacher Educator Effectiveness Plan

Teacher______________________ Grade(s)/Subject(s)______________________ Date Reviewed___________________
School______________________ Evaluator______________________

Describe goal:
Identify domain(s) and components related to goal:
Columns: Strategies; Timeline; Potential Obstacles; Resources; Evidence Sources
How will you measure progress on goal?

Describe goal:
Identify domain(s) and components related to goal:
Columns: Strategies; Timeline; Potential Obstacles; Resources; Evidence Sources
How will you measure progress on goal?

Describe goal:
Identify domain(s) and components related to goal:
Columns: Strategies; Timeline; Potential Obstacles; Resources; Evidence Sources
How will you measure progress on goal?
APPENDIX E: DRAFT Wisconsin Teacher Observation/Artifact Form

Teacher______________________ Date______________________
School______________________ Observer______________________

Briefly describe the observation location and activity(s), and/or artifact:
List the relevant component(s) for this observation and/or artifact:
What evidence did you note during the observation and/or from the artifact that applies to the rubric(s)?
Feedback to Teacher:

APPENDIX F: DRAFT Wisconsin Teacher Pre-Observation (Planning) Form

1.) To which part of your curriculum does this lesson relate?
2.) How does this learning “fit” in the sequence of learning for this class?
3.) Briefly describe the students in this class, including those with special needs.
4.) What are your learning outcomes for this lesson? What do you want the students to understand?
5.) How will you engage the students in the learning? What will you do? What will the students do? Will the students work in groups, or individually, or as a large group? Provide any worksheets or other materials the students will be using.
6.) How will you differentiate instruction for different individuals or groups of students in the class?
7.) How and when will you know whether the students have learned what you intend?
8.) Is there anything that you would like me to specifically observe during the lesson?

APPENDIX G: DRAFT Wisconsin Teacher Post-Observation (Reflection) Form

1.) In general, how successful was the lesson? Did the students learn what you intended for them to learn? How do you know?
2.) If you were able to bring samples of student work, what do those samples reveal about those students’ levels of engagement and understanding?
3.) Comment on your classroom procedures, student conduct, and your use of physical space. To what extent did these contribute to student learning?
4.) Did you depart from your plan? If so, how, and why?
5.) Comment on different aspects of your instructional delivery (e.g., activities, grouping of students, materials, and resources). To what extent were they effective?
6.) If you had a chance to teach this lesson again to the same group of students, what would you do differently?

APPENDIX H: DRAFT Wisconsin Teacher Final Evaluation Form

Teacher______________________ School______________________ Grade Level_____________________
Evaluator______________________ Date______________________

Columns: Domain; Component; Rating: Ineffective (1), Minimally Effective (2), Effective (3), Highly Effective (4)

1: Planning and Preparation
1.a Demonstrating Knowledge of Content and Pedagogy
1.b Demonstrating Knowledge of Students
1.c Setting Instructional Outcomes
1.d Demonstrating Knowledge of Resources
1.e Designing Coherent Instruction
1.f Designing Student Assessments
Evidence/Artifact(s) used for evidence:
Comments:

2: Classroom Environment
2.a Creating Environment of Respect and Rapport
2.b Classroom Observations & Feedback
2.c Managing Classroom Procedures
2.d Managing Student Behavior
Evidence/Artifact(s) used for evidence:
Comments:

3: Instruction
3.a Communication with Students
3.b Questioning and Discussion Techniques
3.c Engaging Students in Learning
3.d Using Assessment in Instruction
3.e Demonstrating Flexibility and Responsiveness
Artifact(s) used for evidence:
Comments:

4: Professional Responsibilities
4.a Reflecting on Teaching
4.b Maintaining Accurate Records
4.c Communicating with Families
4.d Participating in a Professional Community
4.e Growing and Developing Professionally
4.f Showing Professionalism
Artifact(s) used for evidence:
Comments:

Total Ratings for Professional Practice
Overall Professional Practice Rating: _________________________________
Key strengths:
Areas for development:
Teacher comments on rating (optional)

Teacher Signature______________________
Date______________________
Evaluator Signature______________________ Date______________________

APPENDIX I: Teacher Evidence Sources

For each component, the entries below give the Evidence Required or Strongly encouraged (Bold), the Indicator/“look-fors” (* = Danielson 2011), and the Evidence Collection steps.

Domain 1: Planning and Preparation

1a: Demonstrating knowledge of content and pedagogy
Evidence: Evaluator/Teacher conversations; Lesson/unit plan; Observation
Indicator/“look-fors”: Descriptive feedback on student work; Adapting to the students in front of you; Scaffolding based on student response; Questioning process; Teachers using vocabulary of the discipline; Accuracy or inaccuracies in explanations
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Observations: notes taken during observation

1b: Demonstrating knowledge of students
Evidence: Evaluator/Teacher conversations; Lesson/unit plan; Observation; Student / Parent Perceptions
Indicator/“look-fors”: Artifacts that show differentiation; Artifacts of student interests and backgrounds, learning style, outside of school commitments (work, family responsibilities, etc.); Differentiated expectations based on assessment data/aligned with IEPs; Obtaining information from students about background/interests, relationships/rapport
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection) 2. Lesson plans/unit plans 3. Observations: notes taken during observation 4. Optional: Student / Parent surveys

1c: Setting instructional outcomes
Evidence: Evaluator/Teacher conversations; Lesson/unit plan; Observation
Indicator/“look-fors”: Same learning target, differentiated pathways; Students can articulate the learning target when asked; Targets reflect clear expectations that are aligned to standards; Checking on student learning and adjusting future instruction; Use of entry/exit slips
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Observations: notes taken during observation

1d: Demonstrating knowledge of resources
Evidence: Evaluator/Teacher conversations; Lesson/unit plan; Observation
Indicator/“look-fors”: Diversity of resources (books, websites, articles, media); Guest speakers; College courses; Collaboration with colleagues; Connections with community (businesses, non-profit organizations, social groups); Evidence of teacher seeking out resources (online or other people)
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Observations: notes taken during observation; lesson plan

1e: Designing coherent instruction (use of appropriate data)
Evidence: Evaluator/Teacher conversations; Lesson/unit plan; Observation; Pre-observation form; Learning targets; Entry slips/Exit slips
Indicator/“look-fors”: Grouping of students; Variety of activities; Variety of instructional strategies; Student voice and choice; Same learning target, differentiated pathways; Linking concepts/outcomes from previous lessons
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Observations: notes taken during observation 4. Optional: Pre-observation form; Learning targets; Entry / exit slips

1f: Designing student assessment
Evidence: Evaluator/Teacher conversations; Lesson/unit plan; Observation; Formative and summative assessments and tools
Indicator/“look-fors”: Alignment of assessment to learning target; Plan for bringing student assessment into your instruction (feedback loop from student within assessment plan); Uses assessment to differentiate instruction; Expectations are clearly written with descriptions of each level of performance; Differentiates assessment methods to meet individual students’ needs; Students have weighed in on the rubric or assessment design
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Observations: notes taken during observation 4. Optional: Formative and summative assessments and tools (e.g., rubrics, scoring guides, checklists); Student-developed assessments

Domain 2: The Classroom Environment

2a: Creating an environment of respect and rapport
Evidence: Evaluator / Teacher conversations; Observations; Video; Illustrations of response to student work
Indicator/“look-fors”: Respectful talk and turn taking; Respect for students’ background and life outside the classroom*; Teacher and student body language*; Physical proximity*; Warmth and caring*; Politeness*; Encouragement*; Active listening*; Fairness*; Response to student work: positive reinforcement, respectful feedback, displaying or using student work
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.); use questions on observation forms (especially describing students in class) 2. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer takes notes during pre- and post-observation conferences 3. Optional: Video; Response to student work

2b: Establishing a culture for learning
Evidence: Observations; Student Assignments; Lesson plan; Video/Photos
Indicator/“look-fors”: Belief in the value of the work*; High expectations, supported through both verbal and nonverbal behaviors*; Expectation and recognition of quality*; Expectation and recognition of effort and persistence; Confidence in students’ ability evident in teacher’s and students’ language and behaviors*; Expectation for all students to participate*; Use of variety of modalities; Student Assignments: rigor, rubrics used, teacher feedback, student work samples; Use of Technology: appropriate use
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer takes notes during pre- and post-observation conferences; observer interacts with student about what they are learning 2. Student Assignments: teacher provides examples of student work 3. Optional: Lesson plan; Video / Photo

2c: Managing classroom procedures
Evidence: Observations; Syllabus; Parent Communication
Indicator/“look-fors”: Smooth functioning of all routines*; Little or no loss of instructional time*; Students playing an important role in carrying out the routines*; Students knowing what to do, where to move*
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer takes notes on what is happening at what time, tracking student engagement / time on task, classroom artifacts on procedures 2. Optional: Syllabus; Communications to Students / Parents

2d: Managing student behavior
Evidence: Observations; Disciplinary records/plans (content); Student / Parent Feedback; Parent Communications
Indicator/“look-fors”: Clear standards of conduct, possibly posted, and referred to during a lesson*; Teacher awareness of student conduct*; Preventive action when needed by the teacher*; Fairness*; Absence of misbehavior*; Reinforcement of positive behavior*; Culturally responsive practices; Time on task, posting classroom rules, positive reinforcement
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer may tally positive reinforcement vs. punitive disciplinary action 2. Optional: Disciplinary records/plans (content); Student / Parent Feedback; Parent Communications

2e: Organizing physical space
Evidence: Observations; Video/Photos; Online Course Structure
Indicator/“look-fors”: Pleasant, inviting atmosphere*; Safe environment*; Accessibility for all students; Furniture arrangement suitable for the learning activities*; Effective use of physical resources, including computer technology, by both teacher and students*; Established traffic patterns
Evidence Collection: 1. Observation: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer records classroom physical features on standard form or makes a physical map 2. Optional: Photos, Videos; Online course structure

Domain 3: Instruction

3a: Communicating with students
Evidence: Observations; Assessed Student work; Communications with students; Handouts with instructions; Formative Assessments
Indicator/“look-fors”: Clarity of the purpose of the lesson*; Clear directions and procedures specific to the lesson activities*; Absence of content errors and clear explanations of concepts*; Student comprehension of content*; Correct and imaginative use of language; Assessed student work: specific feedback; Use of electronic communication: emails, wiki, web pages; Formative Assessments: exit / entry slips
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); dialogue with students and accurate / precise dialogue; observer collects examples of written communications (emails / notes) 2. Assessed Student Work: teacher provides samples of student work and written analysis after each observation or end of semester 3. Optional: Electronic Communication; Handouts with instructions; Formative Assessments

3b: Using questioning and discussion techniques
Evidence: Observations; Lesson Plan; Videos; Student Work; Discussion Forums
Indicator/“look-fors”: Questions of high cognitive challenge, formulated by both students and teacher*; Questions with multiple correct answers, or multiple approaches even when there is a single correct response*; Effective use of student responses and ideas*; Discussion in which the teacher steps out of the central, mediating role*; High levels of student participation in discussion*; Student Work: Write/Pair/Share, student-generated discussion questions, online discussion
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer tracks student responses 2. Optional: Lesson Plan; Videos; Student Work; Discussion Forums

3c: Engaging students in learning
Evidence: Observations; Lesson plans; Student work; Use of technology/instructional resources
Indicator/“look-fors”: Activities aligned with the goals of the lesson*; Student enthusiasm, interest, thinking, problem-solving, etc.*; Learning tasks that require high-level student thinking and are aligned with lesson objectives*; Students highly motivated to work on all tasks and persistent even when the tasks are challenging*; Students actively “working,” rather than watching while their teacher “works”*; Suitable pacing of the lesson: neither dragging nor rushed, with time for closure and student reflection*; Student-to-student conversation; Student-directed or student-led activities / content
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); observer tracks student participation and time on task, and examines student work and teacher / student interactions 2. Optional: Lesson plans; Student work; Use of technology/instructional resources

3d: Using assessment in instruction
Evidence: Observations; Formative / Summative Assessment Tools; Lesson plans; Conversations w / Evaluator
Indicator/“look-fors”: Teacher paying close attention to evidence of student understanding*; Teacher posing specifically created questions to elicit evidence of student understanding*; Teacher circulating to monitor student learning and to offer feedback*; Students assessing their own work against established criteria*; Assessment tools: use of rubrics; Formative / Summative assessment tools: frequency, descriptive feedback to students; Lesson plans adjusted based on assessment
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic) 2. Formative / Summative Assessment Tools: teacher provides formative and summative assessment tools or data 3. Optional: Lesson plans; Conversations w / Evaluator

3e: Demonstrating flexibility and responsiveness
Evidence: Observations; Lesson plans; Use of supplemental instructional resources; Student Feedback
Indicator/“look-fors”: Incorporation of student interests and events of the day into a lesson*; Visible adjustment in the face of student lack of understanding*; Teacher seizing on a teachable moment*; Lesson Plans: use of formative assessment, use of multiple instructional strategies
Evidence Collection: 1. Observations: observer “scripts” lesson or takes notes on specially designed form (paper or electronic); takes notes on teacher taking advantage of teachable moments 2. Optional: Lesson plans; Use of supplemental instructional resources; Student Feedback

Domain 4: Professional Responsibilities

4a: Reflecting on teaching
Evidence: Evaluator/Teacher Conversations; Observations; Teacher PD goals/plan; Student / Parent Feedback
Indicator/“look-fors”: Revisions to lesson plans; Notes to self / journaling; Listening for analysis of what went well and didn’t go well; Specific examples of reflection from the lesson; Ability to articulate strengths and weaknesses; Capture student voice (survey, conversation w/ students); Varied data sources (observation data, parent feedback, evaluator feedback, peer feedback, student work, assessment results)
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Optional: Grade Book; PD Plan; Student / Parent Survey; Observations: notes taken during observation

4b: Maintaining Accurate Records
Evidence: Evaluator/Teacher Conversations; Lesson/unit plan; Grade Book; Artifact – teacher choice; Systems for data collection
Indicator/“look-fors”: Information about individual needs of students (IPs, etc.); Logs of phone calls/parent contacts/emails; Lunch count; Field trip data; Assessment data; Student’s own data files (dot charts, learning progress, graphs of progress, portfolios)
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Optional: Grade Book; PD Plan; Progress Reports

4c: Communicating with families
Evidence: Logs of phone calls/parent contacts/emails; Observation during parent teacher meeting or conference
Indicator/“look-fors”: Interaction with PTA or parent groups or parent volunteers; Daily assignment notebooks; Requiring parents to discuss and sign off on assignments; Proactive or creative planning for parent-teacher conferences (including students in the process); Asking parents how they think their student is doing; Regular parent “check-ins”
Evidence Collection: 1. Logs of communication with parents: teacher log of communication (who, what, why, when, “so what”?); progress reports, etc.

4d: Participating in a professional community
Evidence: Observation; Attendance at PD sessions; Mentoring other teachers; Seeking mentorship
Indicator/“look-fors”: Take or teach school-level professional development sessions; Collegial planning (not working in isolation); Inviting people into your classroom; Using resources (specialists, support staff); Treating people with respect; Supportive and collaborative relationships with colleagues
Evidence Collection: 1. Observations: notes taken during observation 2. Attendance at PD sessions 3. Optional: PLC agendas; Evidence of community involvement; Evidence of mentorship or seeking to be mentored

4e: Growing and developing professionally
Evidence: Evaluator/Teacher Conversations; Observation; Lesson/unit plan; Professional development plan; Mentoring involvement; Attendance or presentation at professional organizations / conferences / workshops / PLCs; Membership in professional associations or organizations; Action research
Indicator/“look-fors”: Academic inquiry, continuous improvement, lifelong learning
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Lesson plans/unit plans 3. Observations: notes taken during observation 4. Optional: PD plan; PLC agendas; Evidence of participating in PD; Evidence of mentorship or seeking to be mentored; Action research

4f: Demonstrating professionalism
Evidence: Evaluator/Teacher conversations; Observation of participation in PLC meetings or school leadership team meetings; Scheduling and allocation of resources; School and out-of-school volunteering
Indicator/“look-fors”: Obtaining additional resources to support students’ individual needs above and beyond normal expectations (e.g., staying late to meet with students); Doing what’s best for kids rather than what is best for adults; Mentors other teachers; Draws people up to a higher standard; Having the courage to press an opinion respectfully; Being inclusive with communicating concerns (open, honest, transparent dialogue)
Evidence Collection: 1. Evaluator/Teacher conversations: guiding questions; documentation of conversation (e.g., notes, written reflection, etc.) 2. Optional: Teacher provides documents to evaluator at end of year/semester; Written reflection; Parent and student survey; Observing teacher interacting with peers/students/families; Record of unethical behavior

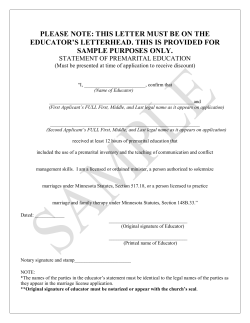
