Object and Event Extraction for Video Processing and Representation

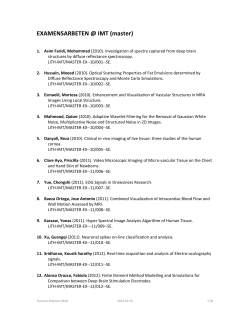

INRS-T´el´ecommunications

Institut national de la recherche scientifique

Object and Event Extraction

for Video Processing and Representation

in On-Line Video Applications

par

Aishy Amer

Th`ese

pr´esent´ee pour l’obtention

du grade de Philosophiae doctor (Ph.D.)

en T´el´ecommunications

Jury d’´evaluation

Examinateur externe

Dr. D. Nair

Examinateur externe

Prof. H. Schr¨oder

Examinateur interne

Prof. D. O’Shaughnessy

Codirecteur de recherche Prof. A. Mitiche

Directeur de recherche

Prof. E. Dubois

c Aishy Amer, Montr´eal, 20 d´ecembre 2001

°

National Instruments, Texas

Universit¨at Dortmund, Allemagne

INRS-T´el´ecommunications

INRS-T´el´ecommunications

Universit´e d’Ottawa

úG YË @ð

ø Q»X

úÍ @

úG ñk @ð

ø YË @ð

úG @ñk @

ø Q»X

:

h ð QË @

X @P

úÍ @

úÍ @

éÒÊ¿

Qº

Acknowledgments

I have enjoyed and benefited from the pleasant and stimulating research environment

at the INRS-T´el´ecommunications. Merci a` tous les membres du centre pour tous les

bons moments.

I am truly grateful to my advisor Prof. Eric Dubois for his wise suggestions and continuously encouraging support during the past four years. I would like also to express

my sincere gratitude to Prof. Amar Mitiche for his wonderful supervision and for the

active contributions to improve the text of this thesis. I also thank Prof. Konrad

for his help during initial work of this thesis. I am also indebted to my colleagues,

in particular Carlos, Fran¸cois, and Souad, for the interesting discussions and for the

active help to enhance this document.

My special thanks go to each member of the dissertation jury for having accepted to

evaluate such a long thesis. I have sincerely appreciated your comments and questions.

Some of the research presented in this text was initiated during my stay at Universit¨at Dortmund, Germany. I would like to thank Prof. Schr¨oder for advising my initial

work. I am also grateful to my German friends, former students, and colleagues, in

particular to Dr. H. Blume, who have greatly helped me in Dortmund. Danke sch¨on.

My deep gratitude to my sisters, my brothers, my nieces, and my nephews who have

supplied me with love and energy throughout my graduate education time. My special thanks go also to Sara, Alia, Marwan, Hakim, and to all my friends for being

supportive despite the distances.

I would like to express my warm appreciations to Hassan for caring and being patient

during the past four years. His understanding provided the strongest motivation to

finish this writing.

BñP

\

à ñºK

Ï

ÕÎ ªÜ @

à@

X A¿

CJjJË @

.

.

é¯ð

ÕÎ ªÒÊË

Õ¯

\

,

ú¯

ú¯

éK Q®Ë @

AÒ»

.

úG YK A @

Ð Y¯ @ð

éJÒÊªË @

.

É¿

éÊgQÜÏ @

á k

QÓ A«

2001

é A«

,

Èð@

à ñK A¿

JÓ

,

QÔ

«

@

ú¯

@ñK X

.

¼P @

Q

JºË @

.

X AJ B @

Ñêʯ

ÑêË

Q

Ð@

g @

áÓ

èQ» A

úÎ ë @

:

ɾK ð

.

éK QªË @

.

,

,

úÍ ñð

úæK Q ¯

X AJ B @

úÍ @

è Yë

úÍ AªË @ð

@ YJ»

áÓ ð

ú¯ ð

Ð Y® K @

á £ñË @

AëP ñJ»

ð

Ì

è AJ m

'@

'

H AK Ym

@

.

.

úG ñÒÊ«

á ÜÏ

Q¢«

H A¯ AK

.

vii

Abstract

As the use of video becomes increasingly popular, and wide spread through, for

instance, broadcast services, Internet, and security-related applications, providing

means for fast, automated, and effective techniques to represent video based on its

content, such as objects and meanings, are important topics of research. In a surveillance application, for instance, object extraction is necessary to detect and classify

object behavior, and with video databases, effective retrieval must be based on highlevel features and semantics. Automated content representation would significantly

facilitate the use and reduce the costs of video retrieval and surveillance by humans.

Most video representation systems are based on low-level quantitative features or

focus on narrow domains. There are few representation schemes based on semantics;

most of these are context-dependent and focus on the constraints of a narrow application and they lack, therefore, generality and flexibility. Most systems assume simple

environments, for example, without object occlusion or noise.

The goal of this thesis is to provide a stable content-based video representation

rich in terms of generic semantic features and moving objects. Objects are represented

using quantitative and qualitative low-level features. Generic semantic features are

represented using events and other high-level motion features. To achieve higher

applicability, content is extracted independently of the type and the context of the

input video.

The proposed system is aimed at three goals: flexible content representation,

reliable stable processing that foregoes the need for precision, and low computational

cost. The proposed system targets video of real environments such as those with

object occlusions and artifacts.

To achieve these goals, three processing levels are proposed: video enhancement

to estimate and reduce noise, video analysis to extract meaningful objects and their

spatio-temporal features, and video interpretation to extract context-independent

semantics such as events. The system is modular, and layered from low-level to

middle-level to high-level where levels exchange information.

The reliability of the proposed system is demonstrated by extensive experimentation on various indoor and outdoor video shots. Reliability is due to noise adaptation

and due to correction or compensation of estimation errors at one step by processing

at subsequent steps where higher-level information is available. The proposed system

provides a response in real-time for applications with a rate of up to 10 frames per

second on a shared computing machine. This response is achieved by dividing each

processing level into simple but effective tasks and avoiding complex operations.

ix

Extraction d’objets et d’´

ev´

enements

pour le traitement et la repr´

esentation

de s´

equences vid´

eo dans des applications en ligne

par Aishy Amer

R´

esum´

e

Table

I.

II.

III.

IV.

V.

VI.

des mati`eres

Contexte et objectif

Page ix

Aper¸cu sur les travaux r´ealis´es

Page xi

Approche propos´ee et m´ethodologie Page xii

R´esultats

Page xviii

Conclusion

Page xix

G´en´eralisation possible

Page xxii

I. Contexte et objectif

L’information visuelle s’est int´egr´e `a tous les secteurs de communication moderne, mˆeme aux services de largeur de bande basse comme la communication mobile. Ainsi, des techniques efficaces pour l’analyse, la description, la manipulation

et la r´ecup´eration d’information visuelle sont des sujets importants et pratiques de

recherche.

La s´equence vid´eo est soumise a` diverses interpr´etations par diff´erents observateurs et la repr´esentation vid´eo bas´ee sur le contenu peut varier selon les observateurs

et les applications. Plusieurs syst`emes existants abordent les probl`emes en essayant

de d´evelopper une solution g´en´erale pour toutes les applications vid´eo. D’autres se

concentrent sur la r´esolution de situations complexes, mais supposent un environnement simple, par exemple la r´esolution d’un probl`eme dans un environnement sans

x

R´esum´e

occlusion et sans bruit ou artefact.

La recherche dans le domaine de traitement vid´eo consid`ere les donn´ees vid´eo

comme ´etant des pixels, des blocs ou des structures globales pour repr´esenter le contenu vid´eo. Cependant, ceci n’est pas suffisant pour des applications vid´eo avanc´ees.

Dans une application de surveillance, par exemple, la repr´esentation vid´eo concernant l’objet n´ecessite la d´etection automatique d’activit´es. Pour des bases de donn´ees

vid´eo, la r´ecup´eration doit ˆetre bas´ee sur la s´emantique. Par cons´equent, une

repr´esentation vid´eo bas´ee contenu est devenue un domaine de recherche fort actif.

Des exemples de cette activit´e sont les standards multim´edia comme MPEG-4 et

MPEG-7 et divers projets de surveillance et recherche dans des bases de donn´ees

visuelles[129, 130].

´

Etant

donn´e la quantit´e croissante de stockage de donn´ees vid´eo, le d´eveloppement

de techniques automatiques et efficaces pour la repr´esentation vid´eo bas´ee sur le contenu, est un probl`eme d’importance croissante. Une telle repr´esentation vid´eo vise une

r´eduction significative de la quantit´e de donn´ees vid´eo en transformant une s´equence

vid´eo de quelques centaines ou milliers d’images en un petit jeu d’information. Cette

r´eduction de donn´ees a deux avantages : premi`erement une grande base de donn´ees

vid´eo peut ˆetre efficacement consult´ee en se basant sur son contenu et, deuxi`emement,

l’utilisation de m´emoire est r´eduite significativement.

Le d´eveloppement de la repr´esentation a` base de contenu exige la r´esolution de

deux probl`emes cl´es : D´efinir les contenus vid´eo int´eressants et les attributs appropri´es

pour repr´esenter ce contenu. L’´etude des propri´et´es du syst`eme visuel humain (SVH)

aide a` r´epondre `a certaines de ces questions. En regardant une s´equence vid´eo, le

SVH est plus sensible aux zones qui se d´eplacent et plus g´en´eralement aux objets

mobiles et a` leurs caract´eristiques. L’int´erˆet dans cette th`ese porte tout d’abord sur

les caract´eristiques de haut niveau des objets (c’est a` dire : la s´emantique) puis `a

ceux de bas niveau (par exemple : la texture). La question principale est alors :

quel est le niveau de s´emantique de l’objet et quelles sont les caract´eristiques les plus

importantes pour des applications vid´eo base´ees contenu ? Par exemple, quelles sont

les descriptions intentionnelles de haut niveau? Une observation importante consiste

`a ce que le sujet de la majorit´e de la s´equence vid´eo soit un objet en mouvement

[105, 72, 56] qui ex´ecute des activit´es et agit pour cr´eer des ´ev´enements. De plus,

le SVH est capable de rechercher des activit´es et des ´ev´enements int´eressants en

parcourant rapidement une s´equence vid´eo. Quelques syst`emes de repr´esentation

vid´eo mettent en oeuvre un tel “flipping” en sautant quelques images sur la base de

dispositifs de bas niveau (par exemple, utilisant l’extraction d’image clefs sur la base

de la couleur) ou en extrayant des indices globaux. Cela peut cependant omettre des

donn´ees int´eressantes parce que le “flipping” doit ˆetre bas´e sur des activit´es d’objet

ou des ´ev´enements combin´es avec des caract´eristiques `a bas niveau comme la forme.

xi

Ceci permettra une recherche et une surveillance vid´eo flexible.

Pour repr´esenter efficacement le signal vid´eo bas´e sur le contenu comme des objets

et des ´ev´enements, trois syst`emes de traitement vid´eo sont n´ecessaires: L’am´elioration

vid´eo pour r´eduire le bruit et l’artefact, l’analyse vid´eo pour extraire les caract´eristiques

vid´eo de bas niveau et l’interpr´etation vid´eo pour d´ecrire le contenu s´emantique.

II. Aper¸cu sur les travaux r´

ealis´

es

R´ecemment, des syst`emes vid´eo incorporant des repr´esentations vid´eo a` base de

contenu ont ´et´e d´evelopp´es. La plupart de la recherche les concernant se concentre

sur des techniques d’analyse vid´eo sans les int´egrer a` un syst`eme vid´eo fonctionnel.

Cela aboutit a` des techniques int´eressantes, mais souvent sans rapport a` l’aspect pratique. En outre, ces syst`emes repr´esentent le signal vid´eo par des caract´eristiques

globales de base comme le mouvement global ou les images clefs. Peu de syst`emes de

repr´esentation vid´eo ont trait´e ce sujet en utilisant des objets. En effet, la plupart

d’entre eux utilisent seulement des caract´eristiques de base comme le mouvement ou

la forme. Les repr´esentations vid´eo a` base d’objets les plus d´evelopp´ees se concentrent dans des domaines ´etroits (par exemple, des sc`enes de football ou le control du

trafic routier). Les syst`emes qui incorporent des repr´esentations vid´eo a` base d’objet

utilisent une description quantitative des donn´ees vid´eo et des objets. Les utilisateurs dans des applications vid´eo avanc´ees comme la recherche vid´eo ne connaissent

pas exactement a` quoi ressemble le signal vid´eo. Ils n’ont pas d’information quantitative exacte: le mouvement, la forme, ou la texture. Donc, des repr´esentations

vid´eo qualitatives faciles a` utiliser pour la recherche vid´eo ou la surveillance sont essentielles. Dans la recherche vid´eo, par exemple, les outils de r´ecup´eration vid´eo les

plus existant demandent `a l’utilisateur un croquis ou une vue de vid´eo, par exemple,

l’utilisateur fait la recherche apr`es examen de la base de donn´ees. Cependant, examiner de grandes bases de donn´ees, peut prendre beaucoup de temps, particuli`erement

pour des sc`enes complexes (c’est `a dire, la sc`ene r´eelle du monde). Des utilisateurs

doivent pouvoir d´ecrire un signal vid´eo par des descripteurs qualitatifs sont essentiels

pour le succ`es de telles applications.

Il y a peu d’ordre dans les activit´es et les ´ev´enements de vues vid´eo. Plusieurs

travaux sur la d´etection et la classification d’´ev´enements se concentrent sur la fa¸con

d’exprimer les ´ev´enements en utilisant des techniques d’intelligence artificielle comme,

par exemple, le raisonnement et l’inf´erence. Autres syst`emes de repr´esentation vid´eo

`a base d’´ev´enements sont developp´es pour des domaines sp´ecifiques.

Malgr´e la grande am´elioration de la qualit´e des syst`emes d’acquisition vid´eo modernes, le bruit reste toujours un probl`eme qui complique les algorithmes de traitement

du signal vid´eo. De plus, des artefacts de codage divers se retrouvent dans un signal

xii

R´esum´e

vid´eo transmis de mani`ere num´erique. Donc, la r´eduction du bruit et des artefacts est

toujours une tˆache importante dans les applications vid´eo. Les artefacts tant sonores

que num´eriques affectent la qualit´e de repr´esentation vid´eo et devraient ˆetre pris en

compte. Tandis que la r´eduction du bruit a ´et´e sujet de plusieurs publications (peu

de m´ethodes traitent des contraintes en temps r´eel) l’impact du codage d’artefact sur

l’ex´ecution du traitement de vid´eo n’est pas suffisamment ´etudi´e.

` cause de progr´es r´ealis´e en micro-´electronique, il est possible d’inclure des techA

niques sophistiqu´ees de traitement vid´eo, dans les services et les appareils. Cependant, l’aspect temps r´eel de ces nouvelles techniques est crucial pour une application

g´en´erale de ces techniques. Plusieurs applications vid´eo n´ecessitant une repr´esentation

du contenu vid´eo `a haut niveau se retrouvent dans un environnement en temps r´eel,

exigeant donc une performance en temps r´eel. Peu d’approches de repr´esentation a`

base de contenu prennent cette contrainte en consid´eration.

III. Approche propos´

ee et m´

ethodologie

L’objectif de cette th`ese est de d´evelopper un syst`eme pour la repr´esentation

vid´eo `a base de contenu par un syst`eme d’extraction automatis´e d’objets int´egr´e

`a un syst`eme de d´etection d’´ev´enements sans l’interaction de l’utilisateur. Le but

est de fournir une repr´esentation a` base de contenu riche en termes d’´ev´enements

g´en´eriques et de traiter une large gamme d’applications vid´eo pratiques. Les objets

sont repr´esent´es par des caract´eristiques a` bas niveau quantitatives et qualitatives.

Les ´ev´enements quant `a eux, sont repr´esent´es par des caract´eristiques haut niveau

des objets telles que les activit´es et les actions.

Cette ´etude ´evoque trois questions importantes : 1. Les repr´esentations d’objets

flexibles qui sont facilement d´etectables pour la r´ecapitulation, l’indexation et la manipulation vid´eo, 2. L’interpr´etation du signal vid´eo fiable et stable qui pr´ec`ede le

besoin de la pr´ecision et 3. Economie en temps de calcul. Ceci n´ecessite la contribution d’algorithmes qui r´epondent `a ces trois questions pour la r´ealisation des

applications vid´eo bas´ees sur le contenu et destin´ees au consommateur, comme la

surveillance et la recherche dans des bases de donn´ees vid´eo. Ces algorithmes doivent

se concentrer sur les questions pratiques d’analyse vid´eo orient´ee vers les besoins de

syst`emes vid´eo bas´es sur l’objet et l’´ev´enement.

Vue vidéo

Amélioration vidéo

Analyse vidéo

orienté object

Interprétation vidéo

orienté événement

Descripteurs

d’objets &

d’événements

Figure 1: Synoptique du syst`eme propos´e.

Le syst`eme propos´e est con¸cu pour des situations r´eelles avec des occlusions

xiii

d’objets, des changements d’illumination, du bruit et des artefacts. Pour produire

une repr´esentation vid´eo de haut niveau, la structure propos´ee implique trois ´etapes

(voir figure 1) : am´elioration, analyse et interpr´etation. Le signal vid´eo original

est pr´esent´e `a l’entr´ee du module am´elioration vid´eo et la sortie en est une version

am´elior´ee. Ce signal vid´eo am´elior´e est alors trait´e par le module d’analyse vid´eo qui

produit une description bas niveau de ce vid´eo. Le module interpr´etation vid´eo re¸coit

ces descriptions `a bas niveau et produit une description de haut niveau du signal vid´eo

original. Les r´esultats d’une ´etape sont int´egr´es pour soutenir les ´etapes suivantes

qui corrigent ou soutiennent a` leur tour les pas pr´ec´edents. Par exemple, un objet

suivi `a une ´etape est soutenu par la segmentation bas niveau. Les r´esultats du suivi

sont a` leur tour int´egr´es dans la segmentation pour la confirmer. Cette approche,

par analogie au syst`eme visuel humain (SVH), trouve des objets o`

u la d´etection partielle et l’identification pr´esentent un nouveau contexte qui approuve a` son tour la

nouvelle identification [103, 3]. Le syst`eme peut ˆetre vu comme une structure de

m´ethodes et d’algorithmes pour construire des syst`emes d’interpr´etation de sc`enes

dynamiques automatiques. La robustesse des m´ethodes propos´ees sera d´emontr´ee

par une exp´erimentation vaste sur des s´equences vid´eo bien connues. La robustesse

est le r´esultat de l’adaptation au bruit vid´eo et aux artefacts est due au traitement

qui consid`ere les erreurs (obtenues) `a une ´etape pour la correction ou la compensation

`a l’´etape suivante.

La structure propos´ee dans cette th`ese est con¸cue pour des applications o`

u une

interpr´etation du vid´eo d’entr´ee est n´ecessaire (“quel est le sujet de la s´equence”).

Ceci peut ˆetre illustr´e par deux exemples : surveillance et recherche vid´eo. Dans

un syst`eme de surveillance vid´eo, une alarme peut ˆetre activ´ee dans le cas o`

u le

syst`eme propos´e d´etecte un comportement d’objets particulier. Dans un syst`eme de

r´ecup´eration vid´eo, les utilisateurs peuvent rechercher un vid´eo en fournissant une

description qualitative, utilisant une information comme les attributs d’objet (par

exemple, la forme), les rapports spatiaux (par exemple : l’objet i est pr`es de l’objet

j), l’emplacement (par exemple : l’objet i est au fond de l’image) et les caract´eristiques

s´emantiques ou de haut niveau (par exemple, action d’objet : l’objet i se deplace a`

gauche et est ensuite occlus; ´ev´enement : le d´eplacement ou le d´epˆot d’objets). Le

syst`eme de r´ecup´eration peut alors trouver les trames dont le contenu correspond

le mieux a` la description qualitative. Une propri´et´e d´esirable pour les strat´egies de

repr´esentation vid´eo est de fournir une r´eponse `a des questions simples bas´ees sur

l’observation, comme par exemple, comment s´electionner des objets (qui est dans la

sc`ene), d´ecrire leur action (qu’est-ce qu’il/elle fait) et d´eterminer leur emplacement

(“o`

u” l’action a eu lieu) [72, 56]. En l’absence d’une application sp´ecifique, un mod`ele

g´en´erique doit ˆetre adaptable (par exemple, avec de nouvelles d´efinitions d’actions et

d´ev´enements).

xiv

R´esum´e

Sans consid´eration au temps r´eel, une approche de repr´esentation vid´eo `a base de

contenu pourrait perdre son applicabilit´e. En outre, la robustesse au bruit et artefacts

du codage est importante pour une utilisation de la solution. Le syst`eme propos´e est

con¸cu pour r´ealiser un ´equilibre entre efficacit´e, qualit´e de la solution et temps de

calcul. Le syst`eme repr´esent´e dans la figure 2 se d´ecrit ainsi:

• Le chapitre de l’am´elioration vid´eo classifie d’abord le bruit et les artefacts

dans le vid´eo puis utilise une nouvelle m´ethode pour l’estimation du bruit et

une autre pour la r´eduction spatiale du bruit (le Chapitre 2). La technique

d’estimation du bruit propos´ee produit des ´evaluations fiables dans des images

ayant des r´egions lisses et/ou structur´ees. Cette technique est une m´ethode `a

base de blocs qui prend en compte la structure de l’image en consid´eration et qui

utilise une mesure autre que la variance pour d´eterminer si un bloc est homog`ene

ou non. Elle n’utilise aucun seuil et automatise la proc´edure avec laquelle les

m´ethodes `a base de blocs proc`edent pour la moyennisation des variances de

blocs. Cette nouvelle technique de r´eduction spatiale du bruit utilise un filtre

pass-bas ayant une complexit´e r´eduite pour ´eliminer le bruit spatial non corr´el´e.

L’id´ee de base est d’utiliser un ensemble de filtres pass-haut pour d´etecter la

direction de filtrage la plus appropri´ee. Le filtre propos´e r´eduit le bruit dans

l’image en pr´eservant la structure, et est adapt´e `a la quantit´e du bruit estim´ee.

• L’analyse vid´eo est bas´ee principalement sur l’extraction des objets significatifs et des caract´eristiques quantitatives a` bas niveau a` partir de la vid´eo. La

m´ethode se fait en quatre ´etapes (les Chapitres 3-6):

◦

◦

◦

◦

segmentation des objets bas´ee sur la d´etection du mouvement,

estimation du mouvement bas´ee objet,

fusion de r´egions,

suivi d’objets bas´e sur une combinaison non lin´eaire de caract´eristiques

spatio-temporelles.

L’algorithme propos´e extrait les objets vid´eo importants pouvant ˆetre utilis´es

comme index dans les vid´eo bas´es sur la repr´esentation d’objets flexibles et pour

analyser la vid´eo afin de d´etecter les ´ev´enements li´es aux objets en vue d’une

repr´esentation et une interpr´etation s´emantique.

• La m´ethode de segmentation d’objets propos´ee classifie les pixels des images

vid´eo comme appartenant a` des objets distincts bas´es sur le mouvement et des

caract´eristiques de contour (le Chapitre 4). Elle comporte des proc´edures

simples, et est r´ealis´ee en quatre ´etapes :

◦ binarisation des images en entr´ee bas´ee sur la d´etection du mouvement.

◦ d´etection morphologique des fronti`eres.

◦ analyse des contours et la squ´eletisation.

xv

◦ ´etiquetage des objets.

La tˆache la plus critique est la binarisation qui doit ˆetre fiable dans toute la

s´equence vid´eo. L’algorithme de binarisation m´emorise le mouvement d´etect´e

pr´ec´edemment pour adapter le processus. La d´etection de fronti`eres fait appel

`a de nouvelles op´erations morphologiques dont les calculs sont r´eduits de facc

importante. L’avantage de la d´etection morphologique consiste en la g´en´eration

de fronti`eres continues d’un seul pixel de largeur. L’analyse de contour transforme les fronti`eres en contours et ´elimine les contours non d´esir´es. Cependant,

les petits contours sont seulement ´elimin´es s’ils ne peuvent ˆetre associ´es `a des

contours pr´ec´edemment extraits, c’est a` dire, si un petit contour n’a aucun contour correspondant dans l’image pr´ec´edente. Les petits contours se trouvant

compl`etement `a l’int´erieur d’un grand contour sont fusionn´es avec ce dernier

selon des crit`eres d’homog´en´eit´e.

• L’estimation du mouvement d´etermine l’´etendue et la direction du mouvement,

de l’objet extrait (le Chapitre 5). Dans l’approche propos´ee, l’information

extraite de l’objet (par exemple, la taille, la boite a` contour minimal (MBB :

Minimum bounding box), la position, la direction du mouvement) est utilis´ee

dans un processus `a base de r`egles ayant trois ´etapes : la correspondance d’objet,

l’estimation du mouvement MBB bas´e sur le d´eplacement des cˆot´es du MBB,

ce qui rend le processus d’estimation ind´ependant du signal d’intensit´e et du

type de mouvement de l’objet.

• La m´ethode de suivi suit et associe les objets en mouvement et enregistre leurs

caract´eristiques temporelles. Elle transforme les objets segment´es provenant du

processus de segmentation a` des objets de vid´eo (le Chapitre 6). Le principal

probl`eme des syst`emes de suivi est leur fiabilit´e en cas d’occlusion, d’ombrage

et de division d’objets. La m´ethode de suivi propos´ee est bas´ee sur un syst`eme

de vote non-lin´eaire pour r´esoudre le probl`eme de correspondances multiples.

Le probl`eme d’occlusion est att´enu´e par une proc´edure de d´etection simple

bas´ee sur les d´eplacements estim´es du MBB de l’objet, suivie d’une proc´edure

de pr´ediction bas´ee m´ediane qui fournit un estim´e raisonnable pour les objets

occlus (partiellement ou compl`etement). Les objets sont suivis une fois qu’ils entrent en sc`ene et aussi pendant l’occlusion, chose tr´es importante pour l’analyse

d’activit´e. Des r`egles de plausibilit´e pour la coh´erence, l’allocation d’erreur (error allowance) et le contrˆole sont propos´ees pour un suivi efficace pendant de

longues p´eriodes. Une contribution importante au niveau du suivi est la fusion

des r´egions fiables qui am´eliore la performance du syst`eme d’analyse vid´eo en

entier. L’algorithme propos´e a ´et´e d´evelopp´e pour des applications vid´eo bas´ees

sur le contenu comme la surveillance ou l’indexation et la r´ecup´eration.

• L’interpr´etation vid´eo est principalement concern´ee par l’extraction de car-

xvi

R´esum´e

act´eristiques vid´eo qualitatives et s´emantiques (le Chapitre 7). Son objectif

principal est de fournir des outils de repr´esentation par une combinaison des

caract´eristiques a` bas niveau, ainsi que des donn´ees vid´eo a` haut niveau. Cette

int´egration est essentielle pour faire face au contenu visuel g´en´erique ´enorme

contenu dans une s´equence vid´eo. L’accent est donc mis sur la r´ealisation d’une

proc´edure de d´etection d’´ev´enements g´en´eriques simple, robuste et automatique. Pour identifier les ´ev´enements, une description qualitative du mouvement de l’objet est un pas important vers l’association des caract´eristiques de

` cette fin,

base au processus de r´ecup´eration des caract´eristiques haut niveau. A

le comportement du mouvement des objets vid´eo est analys´e pour repr´esenter

les ´ev´enements ainsi que les actions importantes. Cela signifie que les caract´eristiques de base peuvent ˆetre combin´ees de mani`ere `a produire un effet sur

celles de haut niveau. D’abord des descriptions qualitatives des caract´eristiques

bas niveau des objets ainsi que les relations entre objets sont d´eriv´ees. Ensuite, des m´ethodes automatiques pour la repr´esentation vid´eo des contenus

haut niveau bas´ees sur les ´ev´enements sont propos´ees.

Le but du vid´eo est, en g´en´eral, de documenter les ´ev´enements et les activit´es

des objets ou ensemble d’objets. Les utilisateurs g´en´eralement recherchent des

objets vid´eo qui v´ehiculent un certain message [124] et ils captent et retiennent

en m´emoire [72, 56] : 1) des ´ev´enements (“ce qui est arriv´e”), 2) des objets

(“qui est dans la sc`ene”), 3) des emplacements (“o`

u cela arrive”), et 4) le temps

(“quand cela est arriv´e”).

Les utilisateurs sont ainsi attir´es par les objets et leurs caract´eristiques et se

concentrent d’abord sur les caract´eristiques a` haut niveau li´ees au mouvement.

En cons´equence, l’analyse vid´eo propos´ee est con¸cue pour :

◦ prendre des d´ecisions sur des donn´ees de plus bas niveau pour supporter

les niveaux suivants de traitement,

◦ repr´esenter qualitativement les objets ainsi que leurs caract´eristiques spatiales, temporelles et relationnelles,

◦ extraire les caract´eristiques s´emantiques des objets, qui sont g´en´eralement

utiles et

◦ fournir automatiquement et efficacement une r´eponse (op´eration en temps

r´eel).

xvii

Vue vidéo

Amélioration vidéo

Estimation & réduction

du bruit

Vidéo amélioré

Vidéo amélioré

Stabilisation de l’image

Extraction des attributs

globaux

Vidéo stable

Mise à jour de

Compensation du mouvement

l’arrière-plan

global

σn

Vidéo stable

Analyse vidéo

Segmentation d’objets basée sur

le mouvement => Pixels à objets

Pixels à vidéo objets

Estimation de mouvements

basée sur l’objet

Suivie d’objet basée sur le vote

=> Objets à vidéo objets

Descripteurs d’objets

spatio-temporal

Descripteurs globaux

de vue

Interprétation vidéo

Objets vidéo à évènements

Analyse & interprétation

des descripteurs à bas niveau

Détection & classification

d’événements

Résultats

Requète

(événements & objets)

Application basée sur l’objet et le mouvement

exemple: Décision basée sur l’événement

Figure 2: Diagramme de la structure propos´ee pour la repr´esentation de s´equence

vid´eo a` base d’objets et d’´ev´enements. Les contributions sont sch´ematis´ees par des

blocs de couleur grise et les interactions entre module sont marqu´ees par des fl`eches

en pointill´es. σn est l’´ecart-type du bruit d’image.

xviii

R´esum´e

IV. R´

esultats

Dans les applications vid´eo en temps r´eel, une analyse vid´eo orient´ee objet non

supervis´ee et rapide est n´ecessaire. Une ´evaluation tant objective que subjective et des

comparaisons montrent la robustesse de la m´ethode d’analyse vid´eo propos´ee sur des

images bruit´ees ainsi que sur des images pr´esentant des changements d’illumination,

alors que la complexit´e de cette m´ethode est r´eduite. Cette derni`ere utilise peu de

param`etres qui sont automatiquement ajust´es au bruit et aux changements temporels

dans la s´equence vid´eo (la figure 3 illustre un exemple d’analyse vid´eo de la s´equence

‘Autoroute’).

Cette th`ese propose un sch´ema d’interpr´etation vid´eo orient´e ´ev´enements. Pour

d´etecter les ´ev´enements, des descriptions perceptuelles des ´ev´enements communes

pour une large gamme d’applications sont propos´ees. Les ´ev´enements d´etect´es incluent : {entrer, apparaitre, sortir, disparaitre, se d´eplacer, arrˆeter, occluer/est Occlu´e,

a enlev´e/a ´et´e enlev´e, d´epos´e/a ´et´e d´epos´e, mouvement anormal }. Pour d´etecter

les ´ev´enements, le syst`eme propos´e contrˆole le comportement et les caract´eristiques

de chaque objet dans la sc`ene. Si des conditions sp´ecifiques sont rencontr´ees, les

´ev´enements li´es `a ces conditions sont d´etect´es. L’analyse des ´ev´enements est faite en

ligne, c’est a` dire, les ´ev´enements sont d´etect´es quand ils arrivent. Des caract´eristiques

sp´ecifiques telles que le mouvement ou la taille de l’objet sont m´emoris´ees pour chaque

image et sont compar´ees aux images suivantes dans la s´equence. La d´etection des

´ev´enements n’est pas bas´ee sur la g´eom´etrie des objets, mais sur leurs caract´eristiques

et relations dans le temps. La th`ese propose des mod`eles approximatifs mais efficaces

pour d´efinir les ´ev´enements utiles. Dans diverses applications, ces mod`eles approximatifs mˆeme s’ils ne sont pas pr´ecis, sont ad´equats.

Des exp´eriences utilisant des s´equences vid´eo bien connues ont permis de v´erifier

l’efficacit´e de l’´etude propos´ee (par exemple la figure 4). Les ´ev´enements d´etect´es

sont suffisamment communs pour une large gamme d’applications vid´eo pour aider

la surveillance et la recherche vid´eos. Par exemple:

1) le d´eplacement/d´epˆot d’objets dans un site de surveillance peut ˆetre contrˆol´e et

d´etect´e d`es qu’il arrive,

2) le d´eplacement des objets en mouvement peut ˆetre contrˆol´e et annonc´e, et

3) le comportement de clients dans des magasins ou des passages souterrains peut

ˆetre surveill´e.

Le syst`eme en entier (l’analyse vid´eo et l’interpr´etation) n´ecessite en moyenne entre 0.12 et 0.35 secondes pour traiter les donn´ees entre deux images. Typiquement,

la vid´eo surveillance est enregistr´e `a une vitesse de 3 `a 15 images par seconde. Le

syst`eme propos´e produit une r´eponse en temps r´eel pour des applications de surveillance avec une vitesse de 3 a` 10 images par seconde. Afin d’augmenter la performance

xix

du syst`eme pour des applications o`

u la fr´equence des images est ´elev´ee, l’optimisation

du code est n´ecessaire. Une acc´el´eration du processus peut ˆetre r´ealis´ee, i) en optimisant l’impl´ementation des occlusions et de la s´eparation d’objets, ii) en optimisant

l’impl´ementation des techniques de d´etection du changement et iii) en travaillant

avec des valeurs de type entier au lieu des r´eels (quand c’est appropri´e) et avec des

op´erations d’addition au lieu des multiplications.

V. Conclusion

Cette ´etude a apport´e un nouveau mod`ele pour le traitement et la repr´esentation

vid´eo d’une fa¸con ind´ependante du contexte et bas´e sur les objets et les ´evenements.

Le traitement et la repr´esentation vid´eo bas´es sur objet-´ev´enement sont n´ecessaires

pour la recherche automatique de base de donn´ees vid´eo et pour la surveillance

vid´eo. Ce mod`ele repr´esente la s´equence vid´eo en termes d’objets, un ensemble

riche d’´ev´enements g´en´eriques pouvant supporter des applications vid´eo bas´ees sur le

contenu et orient´ees utilisateur. Il permet une analyse efficace et flexible et une interpr´etation de la s´equence vid´eo dans des environnements r´eels o`

u occlusions, changements d’illumination, bruit et artefacts peuvent survenir.

` partir du mod`ele propos´e, le traitement se fait sur des niveaux, allant du bas

A

niveau au haut niveau en passant par un niveau interm´ediaire. Chaque niveau est organis´e de facon modulaire et est responsable d’un certain nombre d’aspects sp´ecifiques

d’analyse. Les r´esultats du traitement du niveau inf´erieur sont int´egr´es pour appuyer

le traitement aux niveaux sup´erieurs.

Les trois niveaux de traitement sont :

Am´

elioration vid´

eo Une nouvelle m´ethode pour le filtrage du bruit spatial, pr´eservant

la structure et `a complexit´e r´eduite a ´et´e d´evelopp´ee. Cette m´ethode de filtrage est

soutenue par une proc´edure qui estime correctement le bruit dans l’image. Le bruit

estim´e est aussi utilis´e pour soutenir l’analyse vid´eo qui suit (le Chapitre 2).

Analyse vid´

eo Une m´ethode d’extraction d’objets vid´eo significatifs et de leurs caract´eristiques d´etaill´ees a ´et´e d´evelopp´ee. Elle est bas´ee sur une segmentation fiable

et efficace du point de vue calcul, ainsi que sur le suivi d’objets. Elle est tol´erante

aux erreurs et peut corriger et d´etecter les erreurs. Le syst`eme peut fournir une

r´eponse en temps r´eel pour des applications de surveillance a` une vitesse de 3 a` 10

images par seconde. La m´ethode de suivi est efficace et applicable `a une large classe de

s´equences vid´eo. L’efficacit´e du syst`eme d’analyse vid´eo a ´et´e d´emontr´ee par plusieurs

exp´eriences stimulantes (les Chapitres 3-6).

Interpr´

etation vid´

eo Un syst`eme d’interpr´etation vid´eo ind´ependant du contexte a

´et´e d´evelopp´e. Il permet une repr´esentation vid´eo assez riche en termes d’´ev´enements

g´en´eriques et de caract´eristiques qualitatives d’objets, ce qui fait de lui un syst`eme

xx

R´esum´e

StartP:1

ObjID: 1

StartP:2

ObjID: 2

StartP:3

ObjID: 3

StartP:4

ObjID: 4

StartP:5

ObjID: 5

(a) La trajectoire de l’objet dans le plan image.

StartP:1

ObjID: 1

StartP:2

ObjID: 2

StartP:3

ObjID: 3

StartP:4

ObjID: 4

StartP:5

ObjID: 5

0

50

100

0

50

100

150

x

y 150

200

StartP:1

ObjID: 1

StartP:2

ObjID: 2

StartP:3

ObjID: 3

StartP:4

ObjID: 4

StartP:5

ObjID: 5

200

250

250

300

350

0

50

100

150

200

250

Img No.

(b) La trajectoire pour la direction horizontale.

300

300

0

50

100

150

200

250

300

Img No.

(c) La trajectoire pour la direction verticale.

Figure 3: Trajectoire des objets dans le s´equence ‘Autoroute’. Fig. 3(b) and (c)

permet une interpr´etation du comportement du mouvement de l’objet: par exemple,

O2 d´emarre `a gauche de l’image et se d´eplace jusqu’`a la fronti`ere de l’image. Des

objets divers entrent dans la sc`ene `a plusieurs reprises. Quelques objets se d´eplacent

vite tandis que d’autres sont plus lents. Le syst`eme suit tous les objets r´eguli`erement.

xxi

Figure 4: Images d’´ev´enements clefs de la s´equence ‘Hall’. Cette s´equence est un

exemple d’une application de surveillance a` l’int´erieur. ´ev´enement clef: O6 est d´epos´e

par l’objet O1 .

xxii

pouvant ˆetre utilis´e pour une large gamme d’applications. Des descripteurs d’objet

qualitatifs sont extraits par quantification des descriptions param´etriques des objets.

Pour extraire les ´ev´enements, des changements de mouvement et des caract´eristiques

sont continuellement trait´ees. Les ´ev´enements sont d´etect´es quand les conditions qui

les d´efinissent sont rencontr´ees. Des exp´eriences utilisant des s´equences vid´eo bien

connues ont d´emontr´e l’efficacit´e de la technique propos´e (le Chapitre 7).

VI. G´

en´

eralisation possible

Il y a un certain nombres de questions a` consid´erer si on veut am´eliorer la performance du syst`eme propos´e et ´elargir ses domaines d’applications.

• Temps d’ex´

ecution L’impl´ementation peut ˆetre optimis´ee pour une ex´ecution

plus rapide.

• Segmentation d’objet Dans le contexte du codage vid´eo MPEG, les vecteurs

de mouvement sont disponibles. Une extension imm´ediate de la technique de

segmentation propos´ee est d’int´egrer l’information du mouvement a` partir du

flot MPEG (MPEG-stream) pour supporter la segmentation d’objet. Cette

int´egration aurait pour but d’am´eliorer la segmentation sans une augmentation

significative du coˆ

ut de calcul.

• Estimation du mouvement Le mod`ele de mouvement propos´e peut ˆetre encore am´elior´e pour permettre une estimation plus pr´ecise. Une extension directe serait d’examiner les d´eplacements des extensions diagonales de l’objet

et d’adapter l’estimation du mouvement pr´ec´edemment ´evalu´e pour une plus

grande stabilit´e.

• Points culminants et ombres Le syst`eme peut profiter de la d´etection d’ombres

et de la compensation de leurs effets.

• Stabilisation de l’image Les techniques de stabilisation d’image peuvent ˆetre

utilis´ees pour permettre une analyse des donn´ees vid´eo `a partir de d´eplacement

de cam´eras et de changement d’arri`ere-plans.

• Interpr´

etation vid´

eo Un ensemble plus large d’´ev´enements peut ˆetre consid´er´e pour servir un ensemble plus grand d’applications. Une interface peut

ˆetre con¸cue pour faciliter l’interaction entre le syst`eme et l’utilisateur. La

d´efinition d’une telle interface exige une ´etude du besoin des utilisateurs de

ces applications vid´eo. Une classification des objets en mouvement et un mouvement de d´esordre (clutter motion) tel que le mouvement des arbres dans le

vent, peut ˆetre utilis´e pour rejeter des ´ev´enements. Une classification possible

est de diff´erencier entre un mouvement avec but (celui d’un v´ehicule ou d’une

personne) et un mouvement sans but.

Contents

R´

esum´

e

vii

1 Introduction

1.1 Background and objective . . . . . .

1.2 Review of related work . . . . . . . .

1.3 Proposed approach and methodology

1.4 Contributions . . . . . . . . . . . . .

1.5 Thesis outline . . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

2 Video Enhancement

2.1 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . .

2.2 Noise and artifacts in video signals . . . . . . . . . . . .

2.3 Modeling of image noise . . . . . . . . . . . . . . . . . .

2.4 Noise estimation . . . . . . . . . . . . . . . . . . . . . .

2.4.1 Review of related work . . . . . . . . . . . . . . .

2.4.2 A homogeneity-oriented noise estimation . . . . .

2.4.3 Evaluation and comparison . . . . . . . . . . . .

2.4.4 Summary . . . . . . . . . . . . . . . . . . . . . .

2.5 Spatial noise reduction . . . . . . . . . . . . . . . . . . .

2.5.1 Review of related work . . . . . . . . . . . . . . .

2.5.2 Fast structure-preserving noise reduction method

2.5.3 Adaptation to image content and noise . . . . . .

2.5.4 Results and conclusions . . . . . . . . . . . . . .

2.5.5 Summary . . . . . . . . . . . . . . . . . . . . . .

3 Object-Oriented Video Analysis

3.1 Introduction . . . . . . . . . . . . .

3.2 Fundamental issues . . . . . . . . .

3.3 Related work . . . . . . . . . . . .

3.4 Overview of the proposed approach

3.5 Feature selection . . . . . . . . . .

3.5.1 Selection criteria . . . . . .

3.5.2 Feature descriptors . . . . .

3.6 Summary and outlook . . . . . . .

xxiii

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

1

1

3

4

8

9

.

.

.

.

.

.

.

.

.

.

.

.

.

.

11

11

13

15

16

16

18

20

24

24

24

28

29

31

33

.

.

.

.

.

.

.

.

39

39

41

42

44

46

46

48

51

xxiv

3.6.1

3.6.2

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

Outlook . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4 Object Segmentation

4.1 Motivation . . . . . . . . . . . . . . . . . . . . . . . . .

4.2 Overall approach . . . . . . . . . . . . . . . . . . . . .

4.3 Motion detection . . . . . . . . . . . . . . . . . . . . .

4.3.1 Related work . . . . . . . . . . . . . . . . . . .

4.3.2 A memory-based motion detection method . . .

4.3.3 Results and comparison . . . . . . . . . . . . .

4.4 Thresholding for motion detection . . . . . . . . . . . .

4.4.1 Introduction . . . . . . . . . . . . . . . . . . . .

4.4.2 Review of thresholding methods . . . . . . . . .

4.4.3 Artifact-adaptive thresholding . . . . . . . . . .

4.4.4 Experimental results . . . . . . . . . . . . . . .

4.5 Morphological operations . . . . . . . . . . . . . . . . .

4.5.1 Introduction . . . . . . . . . . . . . . . . . . . .

4.5.2 Motivation for new operations . . . . . . . . . .

4.5.3 New morphological operations . . . . . . . . . .

4.5.4 Comparison and discussion . . . . . . . . . . . .

4.5.5 Morphological post-processing of binary images

4.6 Contour-based object labeling . . . . . . . . . . . . . .

4.6.1 Contour tracing . . . . . . . . . . . . . . . . . .

4.6.2 Object labeling . . . . . . . . . . . . . . . . . .

4.7 Evaluation of the segmentation method . . . . . . . . .

4.7.1 Evaluation criteria . . . . . . . . . . . . . . . .

4.7.2 Evaluation and comparison . . . . . . . . . . .

4.8 Summary . . . . . . . . . . . . . . . . . . . . . . . . .

5 Object-Based Motion Estimation

5.1 Introduction . . . . . . . . . . . . . . . . . .

5.2 Review of methods and motivation . . . . .

5.3 Modeling object motion . . . . . . . . . . .

5.4 Motion estimation based on object-matching

5.4.1 Overall approach . . . . . . . . . . .

5.4.2 Initial estimation . . . . . . . . . . .

5.4.3 Motion analysis and update . . . . .

5.5 Experimental results and discussion . . . . .

5.5.1 Evaluation criteria . . . . . . . . . .

5.5.2 Evaluation and discussion . . . . . .

5.6 Summary . . . . . . . . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

51

52

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

53

53

54

54

56

58

60

61

61

61

64

66

67

67

71

72

75

76

77

77

81

81

81

82

84

.

.

.

.

.

.

.

.

.

.

.

91

91

92

94

95

95

96

98

102

102

103

107

xxv

6 Voting-Based Object Tracking

6.1 Introduction . . . . . . . . . . . . . . . . . .

6.2 Review of tracking algorithms . . . . . . . .

6.3 Non-linear object tracking by feature voting

6.3.1 HVS-related considerations . . . . . .

6.3.2 Overall approach . . . . . . . . . . .

6.3.3 Feature selection . . . . . . . . . . .

6.3.4 Feature integration by voting . . . .

6.3.5 Feature monitoring and correction . .

6.3.6 Region merging . . . . . . . . . . . .

6.3.7 Feature filtering . . . . . . . . . . . .

6.4 Results and discussions . . . . . . . . . . . .

6.5 Summary and outlook . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

109

109

110

112

112

113

116

117

122

125

127

129

131

.

.

.

.

.

.

.

.

.

.

.

.

.

.

145

145

145

147

149

150

153

153

153

155

156

163

165

169

170

8 Conclusion

8.1 Review of the thesis background . . . . . . . . . . . . . . . . . . . . .

8.2 Summary of contributions . . . . . . . . . . . . . . . . . . . . . . . .

8.3 Possible extensions . . . . . . . . . . . . . . . . . . . . . . . . . . . .

181

181

181

184

Bibliography

185

7 Video Interpretation

7.1 Introduction . . . . . . . . . . . . . . . . . .

7.1.1 Video representation strategies . . .

7.1.2 Problem statement . . . . . . . . . .

7.1.3 Related work . . . . . . . . . . . . .

7.1.4 Proposed framework . . . . . . . . .

7.2 Object-based representation . . . . . . . . .

7.2.1 Spatial features . . . . . . . . . . . .

7.2.2 Temporal features . . . . . . . . . . .

7.2.3 Object-relation features . . . . . . .

7.3 Event-based representation . . . . . . . . . .

7.4 Results and discussions . . . . . . . . . . . .

7.4.1 Event-based video summary . . . . .

7.4.2 Key-image based video representation

7.5 Summary . . . . . . . . . . . . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

A Applications

195

A.1 Video surveillance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

A.2 Video databases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

A.3 MPEG-7 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

xxvi

B Test Sequences

200

B.1 Indoor sequences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

B.2 Outdoor sequences . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

C Abbreviations

203

Chapter 1

Introduction

Video is becoming integrated in various personal and professional applications such

as entertainment, education, tele-medicine, databases, security applications and even

low-bandwidth wireless applications. As the use of video becomes increasingly popular, automated and effective techniques to represent video based on its content such

as objects and semantic features are important topics of research. Automated and

effective content-based video representation is significant in dealing with the explosion of visual information through broadcast services, Internet, and security-related

applications. For example, it would significantly facilitate the use and reduce costs

of video retrieval and surveillance by humans. This thesis develops a framework for

automated content-based video representation rich in terms of object and semantic

features. To keep the framework generally applicable, objects and semantic features

are extracted independently of the context of a video application. To test the reliability of the proposed framework, both indoor and outdoor real video environments

are used.

1.1

Background and objective

Given the ever-increasing amount of video and related storage, maintenance, and processing needs, developing automatic and effective techniques for content-based video

representation is a problem of increasing importance. Such video representation aims

at a significant reduction of the amount of video data by transforming a video shot

of some hundreds or thousands of images into a small set of information based on

its content. This data reduction has two advantages: large video databases can be

efficiently searched based on video content and memory usage is reduced significantly.

Despite the many contributions in the field of video and image processing, the

scientific community has debated the low impact on applications: video is subject

to different interpretation by different observers and video description can vary ac-

2

Introduction

cording to observers and applications [141, 103, 78, 73]. Many video processing and

representation techniques address problems by trying to develop a solution that is

general for all video applications. Some focus on solving complex situations but

assume a simple environment, for example, without object occlusion, noise, or artifacts. Video processing and representation research has mainly extracted video data

in terms of pixels, blocks, or some global structure to represent video content. This

is not sufficient for advanced video applications. In a surveillance application, for

instance, object-related video representation is necessary to automatically detect and

classify object behavior. With video databases, advanced retrieval must be based

on high-level object features and semantic interpretation. Consequently, advanced

content-based video representation has become a highly active field of research. Examples of this activity are the setting of multimedia standards such as MPEG-4 and

MPEG-7, and various video surveillance and retrieval projects [129, 130].

Developing advanced content-based video representation requires the resolution

of two key issues: defining what are interesting video contents and what features are

suitable to represent these contents. Properties of the human visual system (HVS)

help in solving some aspects of these issues: when viewing a video, the HVS is, in

general, attracted to moving objects and their features; it focuses first on the highlevel object features (e.g., meaning) and then on the low-level features (e.g., shape).

The main questions are: what level of object features and semantic content is most

important and most common for content-oriented video applications? Are high-level

intentional descriptions such as what a person is thinking needed? Is the context of

a video data necessary to extract useful content?

An important observation is that the subject of the majority of video is related

to moving objects, in particular people, that perform activities and interact creating

object meaning such as events [105, 72, 56]. A second observation is that the HVS is

able to search a video by quickly scanning (“flipping”) it for activities and interesting

events. In addition, to design widely applicable content-based video representations,

the extraction of video content independently of the context of the video data is

required. It can be concluded that objects and event-oriented semantic features are

important and common for a wide range of video applications.

To effectively represent video, three video processing levels are required: video

enhancement to reduce noise and artifacts, video analysis to extract low-level video

features, and video interpretation to describe content in semantic-related terms.

3

1.2

Review of related work

Recently, video systems supporting content-based video representations have been

developed1 . Most of these systems focus on video analysis techniques without integrating them into a functional video system. These techniques are interesting but

often irrelevant in practice. Furthermore, many systems represent video either by

low-level global features such as global motion or key-images. Some video representation systems implement flipping of video content by skipping some images based on

low-level features (e.g., using color-based key-image extraction) or extracting global

features. However, this may miss important data. Video flipping based on object

activities or related events combined with low-level features such as shape allow a

more focused yet flexible video representation for retrieval or surveillance.

Few video representation systems are based on objects; most of these use only

low-level features such as motion or shape to represent video. In addition, many

object-based video representations focus on narrow domains (e.g., soccer games or

traffic monitoring). Furthermore, some assume a simple environment, for example,

without object occlusion, noise, or artifacts. Moreover, systems that address objectbased video representations use a quantitative description of the video data and objects. Users of advanced video applications such as retrieval do not exactly know what

the video they are searching for looks like. They do not have exact quantitative information (do not memorize) the motion, shape, or texture. Therefore, user-friendly

qualitative video representations for retrieval or surveillance are essential. In video

retrieval, most existing video retrieval tools ask the user to sketch, to select an example of a video shot, e.g., after browsing the database, the user is looking for, or to

specify quantitative features of the shot. Browsing in large databases can be, however, time-consuming and sketching is a difficult task, especially for complex scenes

(i.e., real world scene). Providing users with means to describe a video by qualitative

descriptors is essential for the success of such applications.

There are few representation schemes concerning events occurring in video shots.

Much of the work on event detection and classification focuses on how to express

events using artificial intelligence techniques using, for instance, reasoning and inference methods. In addition, most high-level video representation techniques are

context-dependent. They focus on the constraints of a narrow application and they

lack, therefore, generality and flexibility.

Despite the large improvement of the quality of modern acquisition systems, noise

is still a problem that complicates video processing algorithms. In addition, various

coding artifacts are introduced in digitally transmitted video, for example, using the

1

Pertinent literature and specific applications of the proposed methods and algorithms are reviewed at the respective sections of the main chapters of this thesis.

4

Introduction

MPEG-2 video standard. Therefore, noise and artifact reduction is still an important

task and should be addressed. While noise reduction has been subject of many publications (where few methods deal with real-time constraints), the impact of coding

artifacts on the performance of video processing is not sufficiently studied.

Due to progress in micro-electronics, it is possible to include sophisticated video

processing techniques in video services and devices. Still, the real-time aspect of

new techniques is crucial for a wide application of these techniques. Many video

applications that need high-level video content representation occur in real-time environments so that their real-time performance is a critical requirement. Few of the

content-based representation approaches take this constraint into account.

1.3

Proposed approach and methodology

The objective of this thesis is to develop a modular automatic low-complexity functional system for content-based video representation with integrated automated object and event extraction systems without user interaction. The goal is to provide

stable representation of video content rich in terms of generic semantic features and

moving objects. Objects are represented using quantitative and qualitative low-level

features. The emphasis is on stable moving objects rather than in the accuracy of

their boundaries. Generic semantic meaning is represented using events and other

high-level object motion features, such as trajectory. The system should provide stable video representation for a broad range of practical video applications of indoor

and outdoor real environments of different contexts.

The proposed end-to-end system is oriented to three requirements: 1. flexible object representations that are easily cooperatively searched for video summarizing, indexing and manipulation, 2. reliable, stable processing of video that foregoes the need

for precision, and 3. low computational cost. This thesis contributes algorithms that

answer these three issues for the realization of content-based and consumer-oriented

video applications such as surveillance and video database retrieval. It focuses on

practical issues of video analysis oriented to the needs of object- and event-oriented

video systems, i.e., it focuses on the so-called “original core” of the problem as defined

in [141]. The proposed processing and representation target video of real environments

such as those with object occlusions, illumination changes, noise, or artifacts.

To achieve these requirements, the proposed system involves three processing modules (Fig. 1.1): enhancement, analysis, and interpretation. The input to the video

enhancement module is the original video and its output is an enhanced version of it.

This enhanced video is then processed by the video analysis module which outputs

low-level descriptions of the enhanced video. The video interpretation module takes

these low-level descriptions and produces high-level descriptions of the original video.

5

Video shot

Video Enhancement

Enhanced

video

σn

Object-oriented

Video analysis

Objects &

features

Event & object-oriented

Video interpretation

Event- &

objectdescriptors

Figure 1.1: Abstract diagram of the proposed system. σn is the estimated standard

deviation of the input image noise.

The proposed system can be viewed as a framework of methods and algorithms to

build automatic dynamic scene interpretation and representation. Such interpretation

and representation can be used in various video applications. Besides applications

such as video surveillance and retrieval, outputs of the proposed framework can be

used in a video understanding or a symbolic reasoning system. The proposed system is

designed for applications where an interpretation of the input video is needed (“what

is this sequence about?”). This can be illustrated by two examples: video surveillance

and retrieval. In a video surveillance system, an alarm can be activated in case the

proposed system detects a particular behavior of some objects. In a video retrieval

system, users can query a video by qualitative description, using information such

as object features (e.g., shape), spatial relationships (e.g., object i is close to object

j), location (e.g., object i is at the bottom of the image), and semantic or high-level

features (e.g., object action: object i moves left and then is occluded; event: removal

or deposit of objects, or object j stops and changes direction). The retrieval system

can then find the video frames whose contents best match the qualitative description.

An advantage of such a representation strategy is that it allows the construction of

user-friendly queries based on the observation that the interpretation of most people

is often imprecise. When viewing a video, they mainly memorize objects and related

semantic features. For example, who is in the scene, what he/she is doing, and

where the action takes place? People do not usually memorize quantitative object

features [72, 56]. In the absence of a specific application, such a generic model allows

scalability (e.g., by introducing new definitions of object actions or events).

The proposed system is designed to balance demands for effectiveness (solution

quality) and efficiency (computational cost). Without real-time consideration, a

content-based video representation approach could lose its applicability. Furthermore, robustness to image noise and coding artifacts is important for successful use

of the proposed solution. These goals are achieved by adaptation to noise and artifacts, by detection and correction or compensation of estimation errors at the various

processing levels, and by dividing the processing system into simple but effective tasks

so that complex operations are avoided. In Fig. 1.2, a block diagram of the proposed

system is displayed where contributions are underlaid with gray boxes, module interactions are marked by a dashed arrowed line, R(n) represents the background image

6

Introduction

of the video shot, and σn is the noise standard deviation. The system modules are:

Video shot

Video enhancement

Noise estimation &

reduction

Enhanced video

Enhanced video

Image stabilization

Stable video

Global feature extraction

Background update

R(n)

Video analysis

Pixels to video objects

Object-based

motion estimation

Global-motion compensation

σn

Stable video

Motion-based object segmentation

=> Pixels to objects

Voting-based object tracking

=> Objects to video objects

Spatio-temporal

object descriptors

Global shot descriptors

Video interpretation

Analysis & interpretation

Video objects to events

of low-level descriptors

Event detection & classification

Results

Requests

(Events & Objects)

Object & Event-based application

e.g., event-based decision-making

Figure 1.2: The proposed framework for object-and-event based video representation.

• The video enhancement module is based on new methods to estimate the image

noise and to reduce the image noise to facilitate subsequent processing.

• Image stabilization is the process of removing unwanted image changes. There

are global changes due to camera motion, jitter [53] and local changes due to unwanted (e.g., moving of background objects) object motion. Image stabilization

facilitates object-oriented video analysis by removing irrelevant changes. It can

be performed by global motion compensation or by object update techniques.

Global motion can be the result of camera motion or illumination change. The

latter can produce apparent motion. Robust estimation techniques aim at estimating accurate motion from an image sequence. Basic camera motions are

pan (right/left motion), zoom (focal length change), and tilt (up/down mo-

7

tion). Different parametric motion models can be used to estimate global motion [54, 25, 68, 144]. In practice, as a compromise between complexity and

flexibility, 2-D affine motion models are used [54]. Global motion compensation

stabilizes the image content by removing camera motion while preserving object

motion. Several studies show the effectiveness of using global motion compensation in the context of motion-based segmentation [85, 6, 144, 54, 25, 68, 144].

Also, background update is needed in object segmentation that uses image

differencing based on a background image (cf. Section 4.3). In such object

segmentation, the background image needs to be updated, for example, when

background objects move or when objects are added to or subtracted from the

background image. Various studies have addressed background update and

shown its usefulness for segmentation [35, 70, 65, 36, 50].

• The video analysis module extracts video objects and their low-level quantitative features. The method consist of four steps: motion-detection-based object segmentation, object-based motion estimation, region merging, and object

tracking based on a non-linear combination of spatio-temporal features. The

object segmentation classifies pixels of the video images into objects based on

motion and contour features. To focus on meaningful objects, the proposed

object segmentation uses a background image which can be extracted using a

background updates method. The motion estimation determines the magnitude

and direction of the motion, both translation and non-translation, of extracted

object. The tracking method tracks and links objects as they move and registers

their temporal features. It transforms segmented image objects of the object

segmentation module into video-wide objects. The main issue in tracking systems is reliability in case of occlusion and and object segmentation errors. The

proposed method focuses on solution to these problems. Representations of

object and global video features to be used in a low-level content-based video

representation are the output of the video analysis method.

• The video interpretation module extracts semantic-related and qualitative video

features. This is done by combining low-level features and high-level video data.

Semantic content is detected by integrating analysis and interpretation of video

content. Semantic content is represented by generic events independently of

the context of an application. To identify events, a qualitative description of

the object motion is an important step towards linking low-level features to

high-level feature retrieval. For this purpose, the motion behavior and low-level

features of video objects is analyzed to represent important events and actions.

The results of this processing step are qualitative descriptions of object features

and high-level descriptions of video content based on events.

Within the proposed framework, results of one step are integrated to support

8

Introduction

subsequent steps that in turn correct or support previous steps. For example, object

tracking is supported by low-level segmentation. Results of the tracking are in turn