How to Protect Your Privacy Using Search Engines?

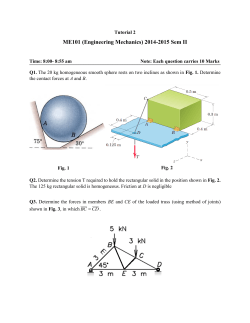

Albin Petit ∗, Thomas Cerqueus, Sonia Ben Mokhtar †, Lionel Brunie
Université de Lyon, CNRS, INSA-Lyon, LIRIS, UMR5205, F-69621, France
[email protected]

Harald Kosch
Universität Passau, Innstrasse 43, 94032 Passau, Germany
[email protected]

Abstract

Our goal is to design a solution that helps people protect their privacy while using a Web search engine. Through the millions of queries they send to search engines every day, users disclose a lot of information (e.g., interests, political and religious orientation, health status). This data, stored for each user in a profile, can be used by the search engine for other purposes (for instance, commercial purposes) without any agreement from the user. Based on [2], we propose a new solution that allows a user to protect her own privacy while using a search engine. Our solution combines a new efficient unlinkability protocol with a new accurate indistinguishability protocol. Results demonstrate that our solution ensures high privacy protection: fewer queries are de-anonymized than with state-of-the-art solutions. Besides, our solution is efficient (low latency and high throughput) and accurate (few irrelevant results are introduced). Unlinkability is ensured by anonymizing the query, while indistinguishability is achieved by obfuscating the query.

Categories and Subject Descriptors

K.4.1 [Computers and Society]: Public Policy Issues - privacy

General Terms

Security, Algorithms

Keywords

Privacy, Web search, Unlinkability, Indistinguishability

Introduction

Querying search engines (e.g., Google, Bing, Yahoo!) is by far the most frequent activity performed by online users. As a direct consequence, search engines use all these queries to profile users. User profiles are needed to improve recommender systems (i.e., recommendations or rankings). Search engines also earn money from user profiles through targeted advertising, which is part of their business model.
However, these user profiles may contain sensitive information (e.g., interests, political and religious orientation, health status) that could be used for other purposes without users' agreement. Solutions already exist to protect users' privacy in the context of Web search. They consist either in hiding the identity of the user (unlinkability) or in obfuscating the content of the query (indistinguishability). In the first case, they usually hide the user's identity by querying search engines through anonymous networks, which prevents search engines from aggregating queries into a user profile. In the second case, noise is introduced in the query to distort the user profile created by the search engine. As a consequence, this user profile is not accurate (i.e., the user's interests cannot be retrieved).

Nevertheless, these solutions are not totally satisfactory. Peddinti and Saxena [1] show that a significant proportion of queries can be either de-anonymized (i.e., the requester's identity is retrieved) or cleaned (i.e., the deliberately introduced noise is eliminated). Besides, as shown on the poster, a straightforward combination of unlinkability and indistinguishability solutions still fails to protect users in a satisfactory manner.

∗ PhD student
† Presenter

Contribution

Query anonymization

A query is composed of two types of information: the identity of the requester and the content of the query. Query anonymization consists in removing information about the identity of the requester (basically her IP address). To do so, the user's query is sent through two servers: the first one, the receiver, knows the identity of the user, while the second one, the issuer, knows the content of the request. The user encrypts her request with the public key of the issuer and sends the ciphertext to the receiver. The receiver forwards the message to the issuer. Then, the issuer decrypts the message (with its private key) and sends the user's query to the search engine.
The issuer encrypts the results returned by the search engine and sends this ciphertext back to the receiver. The receiver forwards the message to the user. Finally, the user retrieves the results of her query by decrypting the message.

Query obfuscation

This step consists in misleading the search engine about the requester's identity to protect queries against de-anonymization. This is done by combining the user's query with multiple fake queries separated by the logical OR operator. Nevertheless, this protection can easily be broken if fake queries are not appropriately generated. To solve this issue, fake queries are generated based on previous real queries made by all users in the system. For privacy reasons, the issuer publishes these previous queries in an aggregated format.

References

[1] S. T. Peddinti and N. Saxena, "Web search query privacy: Evaluating query obfuscation and anonymizing networks," Journal of Computer Security, vol. 22, no. 1, pp. 155-199, 2014.
[2] A. Petit, S. Ben Mokhtar, L. Brunie, and H. Kosch, "Towards efficient and accurate privacy preserving web search," in Proc. of the 9th Workshop on Middleware for Next Generation Internet, 2014.

Problematic & Motivation

Querying search engines (e.g., Google, Bing, Yahoo!) is by far the most frequent activity performed by online users for efficiently retrieving content from the tremendously increasing amount of data populating the Web. As a direct consequence, search engines are likely to gather, store and possibly leak sensitive information about individual users (e.g., interests, political and religious orientations, health status). In this context, developing solutions to enable private Web search is becoming absolutely crucial.
Private Web Search Under Attack

Figure: Attacks based on a similarity metric. A query Q is compared with a user profile P_U in three steps, (❶) normalized dot product, (❷) sort and (❸) exponential smoothing, which yield sim(q, P_U) = s_n.

Overview of the Contribution

The first part of our solution is a privacy-preserving proxy that divides the user's identity and her query between two non-colluding servers: the Receiver and the Issuer.

Figure: overview with the User, the Privacy Proxy (Receiver and Issuer) and the Search Engine. The obfuscated query has the form Query OR FQ1 OR … OR FQk. Panel labels: co-occurrence matrix, co-occurrence graph, maximal cliques, non-maximal cliques. Caption: "Example of graph extracted from the co-occurrence matrix."

Privacy Proxy

Figure: message flow between the User, the Receiver, the Issuer and the Search Engine. Labels: ❶ E(Q ⊕ k_Q); ❷ x_Q, E(Q ⊕ k_Q); ❸ Q; ❹ R; ❺ x_Q, {R}k_Q; ❻ {R}k_Q.

The User encrypts (RSA encryption), with the Issuer's public key, the aggregation of her query Q and a new symmetric cryptographic key k_Q that she generates. This ciphertext is sent to the Receiver (❶). Then, the Receiver forwards the message to the Issuer along with a unique identifier x_Q that the Receiver assigns to the query (❷). The Issuer uses its private key to retrieve the plaintext, and then submits the initial query Q to the Search Engine (❸). Upon receiving the results (❹), the Issuer encrypts (AES encryption), with the user's key k_Q, the Search Engine's answer R and returns the ciphertext along with the identifier x_Q to the Receiver (❺). Finally, the Receiver retrieves the user's identity using the identifier and sends the encrypted results to the User (❻). The latter uses her key k_Q to retrieve the results of her query Q.
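The six-step exchange of the privacy proxy (❶ to ❻) can be sketched in a few lines of Python. This is only an illustration under loud assumptions, not the authors' implementation: the RSA step uses textbook RSA over two hard-coded Mersenne primes (trivially breakable), the AES step is replaced by a hash-based XOR keystream because the standard library has no AES, and the names `Receiver`, `Issuer`, `user_build_request`, `keystream_xor` and `fake_search_engine` are hypothetical.

```python
import hashlib
import secrets

# Toy RSA key pair for the Issuer (assumption: illustration only).
# The modulus is a product of two well-known Mersenne primes, so it is
# trivially factorable and must never be used for real traffic.
P1, P2 = 2**521 - 1, 2**607 - 1
N = P1 * P2
E = 65537
D = pow(E, -1, (P1 - 1) * (P2 - 1))  # Issuer's private exponent


def keystream_xor(key, data):
    """Stand-in for AES: XOR with a SHA-256 counter keystream (symmetric)."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


def user_build_request(query):
    """Step 1: encrypt k_Q followed by Q under the Issuer's public key."""
    k_q = secrets.token_bytes(16)
    # A leading 0x01 byte preserves the plaintext length after decryption.
    m = int.from_bytes(b"\x01" + k_q + query.encode("utf-8"), "big")
    if m >= N:
        raise ValueError("query too long for this toy modulus")
    return k_q, pow(m, E, N)


class Issuer:
    """Reads queries but never learns who sent them."""

    def __init__(self, search_engine):
        self.search_engine = search_engine
        self.seen_queries = []

    def handle(self, x_q, ciphertext):
        m = pow(ciphertext, D, N)  # step 3: decrypt with the private key
        plaintext = m.to_bytes((m.bit_length() + 7) // 8, "big")[1:]
        k_q, query = plaintext[:16], plaintext[16:].decode("utf-8")
        self.seen_queries.append(query)
        results = self.search_engine(query)  # steps 3 and 4
        return x_q, keystream_xor(k_q, results.encode("utf-8"))  # step 5


class Receiver:
    """Knows who is asking but only ever sees ciphertext."""

    def __init__(self, issuer):
        self.issuer = issuer
        self.pending = {}  # x_Q -> user identity
        self.seen = []     # everything the Receiver observed

    def handle(self, user_id, ciphertext):
        x_q = secrets.token_hex(8)  # step 2: fresh identifier x_Q
        self.pending[x_q] = user_id
        self.seen.append(ciphertext)
        x_back, encrypted_results = self.issuer.handle(x_q, ciphertext)
        self.seen.append(encrypted_results)
        return self.pending.pop(x_back), encrypted_results  # step 6


def fake_search_engine(query):
    return "results for: " + query


issuer = Issuer(fake_search_engine)
receiver = Receiver(issuer)
k_q, request = user_build_request("flu symptoms")
user_id, encrypted = receiver.handle("alice", request)
answer = keystream_xor(k_q, encrypted).decode("utf-8")
print(user_id, "->", answer)
```

In this sketch the `Receiver` stores only the mapping from x_Q to the user and opaque ciphertexts, while the `Issuer` is called with x_Q and the ciphertext and never receives the user's identity, which mirrors the separation of knowledge between the two non-colluding servers.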
Because the proxy separates the two roles, the receiver knows the identity of the requester without learning her interests, while the issuer reads queries without knowing their provenance. However, unlinkability alone is not enough to protect users' privacy. To solve this issue, we combine the privacy proxy with an obfuscation mechanism that misleads the adversary about the requester's identity by combining user queries with multiple fake queries using the OR operator. We propose to generate fake queries based on an aggregated history of past requests gathered from users of our system (i.e., the Group Profile).

Figure: overview of the contribution. Labels: Q, Obfuscation, Q', Receiver, R', Filtering, R, User Profile, Group Profile.

Results

Privacy: "Low percentage of de-anonymized queries"
Performance: "3 times higher than Onion Routing"
Accuracy: "In 95% of cases, 80% of the expected results are returned"

Obfuscation

The user generates k fake queries whose corresponding graph is either (i) a maximal clique, (ii) a non-maximal clique, or (iii) a non-complete graph. These fake queries must have the same characteristics as the initial query (i.e., number of keywords and usage frequency). Finally, the obfuscated query is created by aggregating all queries together, separated by the logical OR operator.
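The final aggregation step can be illustrated with a short Python sketch. It assumes that a pool of candidate fake queries is already available (for instance derived from the Group Profile) and only enforces the keyword-count constraint; usage frequency is not modelled. The function name `obfuscate`, the parentheses around each sub-query and the random placement of the real query are assumptions made for this example, not details taken from the poster.

```python
import random


def obfuscate(query, candidate_fakes, k, rng=None):
    """Combine the real query with k fake queries using the OR operator."""
    rng = rng or random.Random()
    n_keywords = len(query.split())
    # Keep only fake queries with the same number of keywords as the real one.
    same_size = [f for f in candidate_fakes
                 if len(f.split()) == n_keywords and f != query]
    if len(same_size) < k:
        raise ValueError("not enough fake queries with a matching keyword count")
    parts = rng.sample(same_size, k) + [query]
    rng.shuffle(parts)  # assumption: the real query should not sit at a fixed position
    return " OR ".join("(" + p + ")" for p in parts)


# Hypothetical pool of candidate fake queries.
pool = ["cheap flights", "football scores", "weather lyon tomorrow", "pasta recipe"]
obfuscated = obfuscate("flu symptoms", pool, k=2, rng=random.Random(0))
print(obfuscated)
```

Since the search engine answers all OR-ed sub-queries at once, the user still has to filter the returned results to keep those that match her real query, which is presumably what the "Filtering" label on the poster refers to.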
Existing obfuscation approaches named on the poster: TrackMeNot, which periodically sends fake queries, and GooPIR, which hides users' queries by aggregating k fake queries.

Group Profile

Figure: co-occurrence matrix, co-occurrence graph, and the extracted maximal cliques, non-maximal cliques and non-cliques. Caption: "Example of graph extracted from the co-occurrence matrix."

The Issuer periodically publishes, in a privacy-preserving way, a Group Profile (❶). To do so, it aggregates users' queries in a co-occurrence matrix. The goal of the obfuscation step is to mislead the adversary with fake queries. Consequently, users need to retrieve potential past queries from this matrix by first computing a co-occurrence graph (❷) and then extracting all maximal cliques (❸).
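The three steps of the Group Profile (co-occurrence matrix, co-occurrence graph, maximal cliques) can be sketched as follows. The example is a sketch under assumptions: the matrix is stored as a dictionary of keyword pairs, an edge is created whenever a pair co-occurs at least `min_count` times (the poster does not state a threshold), and cliques are enumerated with the classic Bron-Kerbosch algorithm, which is a standard choice rather than one confirmed by the source. All function names and the sample queries are hypothetical.

```python
from itertools import combinations


def cooccurrence_matrix(queries):
    """Count, for each keyword pair, how many queries contain both keywords."""
    counts = {}
    for q in queries:
        for a, b in combinations(sorted(set(q.split())), 2):
            counts[(a, b)] = counts.get((a, b), 0) + 1
    return counts


def cooccurrence_graph(counts, min_count=1):
    """Link two keywords when they co-occur at least min_count times."""
    graph = {}
    for (a, b), c in counts.items():
        if c >= min_count:
            graph.setdefault(a, set()).add(b)
            graph.setdefault(b, set()).add(a)
    return graph


def maximal_cliques(graph):
    """Enumerate all maximal cliques (Bron-Kerbosch without pivoting)."""
    cliques = []

    def expand(r, p, x):
        if not p and not x:
            cliques.append(sorted(r))
            return
        for v in list(p):
            expand(r | {v}, p & graph[v], x & graph[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(graph), set())
    return sorted(cliques)


# Hypothetical past queries over the keywords a to e.
past_queries = ["a b c", "c d", "d e", "b c"]
matrix = cooccurrence_matrix(past_queries)
graph = cooccurrence_graph(matrix)
cliques = maximal_cliques(graph)
print(cliques)
```

Each maximal clique is a set of keywords that all co-occurred pairwise, so it is a plausible past query that can be recovered without the Issuer publishing the individual queries themselves. Note that single-keyword queries leave no trace in a pairwise matrix like this one.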
Unlinkability: hides the identity of the requester.
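Hiding the requester's identity does not prevent an adversary from re-linking an anonymous query to a known user profile. The similarity attack named on the poster (normalized dot product, sort, exponential smoothing) might look like the sketch below. Several details are assumptions, since the poster only names the three steps: a profile is modelled as a list of past queries, queries are compared as bags of keywords, similarities are sorted in ascending order so that the highest ones weigh most, the smoothing factor `alpha` is set to 0.5, and the adversary picks the profile with the highest score. The function names and sample profiles are hypothetical.

```python
import math
from collections import Counter


def normalized_dot(query, past_query):
    """Step 1: normalized dot product (cosine) between two keyword bags."""
    a, b = Counter(query.split()), Counter(past_query.split())
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def sim(query, profile, alpha=0.5):
    """Steps 2 and 3: sort the similarities, then smooth them into s_n."""
    scores = sorted(normalized_dot(query, past) for past in profile)
    if not scores:
        return 0.0
    s = scores[0]
    for c in scores[1:]:
        s = alpha * c + (1 - alpha) * s  # exponential smoothing
    return s


def most_likely_user(query, profiles, alpha=0.5):
    """Assumed decision rule: attribute the query to the closest profile."""
    return max(profiles, key=lambda user: sim(query, profiles[user], alpha))


# Hypothetical profiles known to the adversary.
profiles = {
    "alice": ["flu symptoms", "flu vaccine side effects"],
    "bob": ["cheap flights lyon", "football scores"],
}
guess = most_likely_user("flu treatment", profiles)
print(guess, round(sim("flu treatment", profiles["alice"]), 3))
```

An obfuscation scheme is effective against this kind of attack when the fake queries score at least as well against other users' profiles as the real query does against the requester's own profile.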
