Economics 375: Introduction to Econometrics Homework #4 This

Economics 375: Introduction to Econometrics

Homework #4

This homework is due on February 28th.

1.

One tool to aid in understanding econometrics is the Monte Carlo experiment. A Monte

Carlo experiment allows a researcher to set up a known population regression function

(something we’ve assumed we can never observe) and then act like a normal econometrician,

forgetting for the moment the population regression function, and seeing how closely an OLS

estimate of the regression comes to the true and known population regression function.

Our experiment will demonstrate that OLS is unbiased (something that the Gauss Markov

Theorem should convince you of). The idea behind Monte Carlo experiments is to use the

computer to create a population regression function (which we usually think of as being

unobserved), then acting like we “forgot” the PRF, and using OLS to estimate the PRF. Thus, a

Monte Carlo experiment allows a researcher to understand if OLS actually comes “close” to the

PRF or not. In Stata, this is easy. Start by creating opening Stata and creating a new variable

titled x1. The easiest way to do this is to enter two commands in the command box without my

brackets {set obs 20} {gen x1 = _n in 1/20}. The first command tells stata that you are going to

use a data set with 20 observations. The second sets the value of x1 equal to the index number

(always starting at one and increasing by one for each observation). To create a random normal

variable type the following in the command line gen epsilon = rnormal(). This generates a new

series of 20 observations titled "epsilon" where each observation is a random draw from a normal

distribution with mean zero and variance one. In this case, gen represents the generate

command, epsilon is the name of a new variable you are creating that is a random draw from a

normal distribution. The generate command is a commonly used command in Stata. It might be

worth reading the help menu on this command (type: help generate in the command line).

After creating epsilon, we are ready to create our dependent variable y. To do this, let’s create a

population regression where we know the true slope and intercept of the regression. Since my

favorite football player was Dave Krieg of the Seattle Seahawks (#17) and my favorite baseball

player was Ryne Sandberg (#23), we will use these numbers to generate our dependent variable.

In Stata use the gen command to create y where:

yi = 17 + 23x1i + epsiloni

Your command will look something like: gen y = 17 + 23*x1 + epsilon

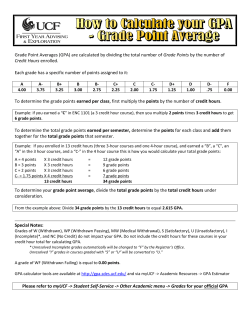

At this point, if you’ve done everything correctly, you should have data that looks something

like:

Using your created data, use Stata’s reg command to estimate the regression:

yi = B0 + B1x1i

a. Why didn’t you include epsilon in this regression?

Generally, econometricians do not observe the error term of any regression (if they did, they would not need to

estimate the regression since knowing the value of Y, X and the value of the error term would allow the

econometrician to perfectly observe the PRF).

b. What are your estimates of the true slope coefficients and intercept? Perform a hypothesis

test that B1 = 23. What do you find?

When I estimate my regression, I get:

. reg y x

Source

SS

df

MS

Model

Residual

352132.22

16.159413

1

18

352132.22

.897745166

Total

352148.379

19

18534.1252

y

Coef.

x1

_cons

23.01135

16.50865

Std. Err.

.0367422

.4401408

t

626.29

37.51

Number of obs

F( 1,

18)

Prob > F

R-squared

Adj R-squared

Root MSE

=

=

=

=

=

=

20

.

0.0000

1.0000

1.0000

.94749

P>|t|

[95% Conf. Interval]

0.000

0.000

22.93416

15.58395

23.08854

17.43335

Note: this will differ from what you obtained because my epsilon will differ from yours.

Ho: B1 = 23

Ha: B1 ≠ 23

t = (23.01135 – 23)/.0367422 = .308

tc,18,95% = 2.101

I fail to reject the null and conclude that I do not have enough evidence to state that the slope differs from 23.

c. When you turn this homework into me, I will ask the entire class to tell me their estimates of

the true, B0, and B1. I will then enter these estimates in a computer, order each from smallest to

largest, and then make a histogram of each estimate. What will this histogram look like? Why?

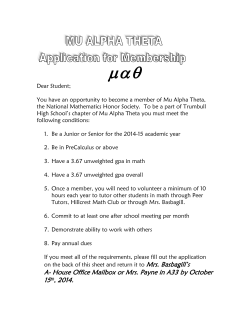

I performed 10,000 different experiments exactly as described above. I found:

It is pretty apparent that the estimates of B0 and B1 are normally distributed around the true population means of 17

and 23. The Gauss Markov theorem indicates that these distributions have the smallest variance—as long as our

classical assumptions are correct. Are they in this case?

Interestingly, when I performed this monte carlo experiment, I found the standard deviation of my 10,000 estimates

of B0 to equal .4612 and for B1 to equal .0386. For a moment, consider the variances of the slope and intercept we

discovered in class:

2

2

^ σ ∑ Xi

Var B 0 =

n ∑ x i2

σ2

^

Var B1 =

∑ x i2

In our data, the sum of the xi2 = 665. Since the variance of the regression is equal to 1 (by virtue of setting up

epsilon), the

1

^

Var B1 =

=.0015 and taking the square root gives the standard error of B1-hat of .0387—very

665

close to the monte carlo estimate of .0386.

∑ d. Use Stata to compute . The square root of this is termed the “standard error of the

regression.” Does it equal what you would expect? Why or why not?

.

In my case = .897. In this case, my best guess at the variance of the (usually unknown) error term is

.897. Since we know that the variance is actually equal to one and that there may be sampling error when stata

draws from a distribution with variance equal to one, my estimates turn out well.

2.

On the class webpage, I have posted a Stata file entitled “2002 Freshmen Data” This data

is comprised of all complete observations of the 2002 entering class of WWU freshmen

(graduating class of around 2006). The data definitions are:

aa: a variable equal to one if the incoming student previously earned an AA

actcomp: the student’s comprehensive ACT score

acteng: the student’s English ACT score

actmath: the student’s mathematics ACT score

ai: the admissions index assigned by WWU office of admissions

asian, black, white, Hispanic, other, native: a variable equal to one if the student is that ethnicity

f03 and f04: a variable equal to one if the student was enrolled in the fall of 2003 or the fall of

2004

gpa: the student’s GPA earned at WWU in fall 2002

summerstart: a variable equal to one if the student attended summerstart prior to enrolling in

WWU

fig: a variable equal to one if the student enrolled in a FIG course

firstgen: a variable equal to one if the student is a first generation college student

housing: a variable equal to one if the student lived on campus their first year at WWU

hrstrans: the number of credits transferred to WWU at time of admission

hsgpa: the student’s high school GPA

male: a variable equal to one if the student is male

resident: a variable equal to one if the student is a Washington resident

runstart: a variable equal to one if the student is a running start student

satmath: the student’s mathematics SAT score

satverb: the student’s verbal SAT score

Some of these variables (the 0/1 or “dummy” variables) will be discussed in the future.

Admissions officers are usually interested in the relation between high school performance and

college performance. Consider the population regression function:

gpai = β0 + β1hsgpai + εi

a. Use the “2002 Freshmen Data” to estimate this regression. How do you interpret your

estimate of β1? Why does this differ from what you found in homework #3?

I find:

. reg gpa hsgpa

Source

SS

df

MS

Model

Residual

195.606722

860.667118

1

2079

195.606722

.413981298

Total

1056.27384

2080

.507823962

gpa

Coef.

hsgpa

_cons

1.001795

-.7431143

Std. Err.

.0460869

.1628574

t

21.74

-4.56

Number of obs

F( 1, 2079)

Prob > F

R-squared

Adj R-squared

Root MSE

P>|t|

0.000

0.000

=

=

=

=

=

=

2081

472.50

0.0000

0.1852

0.1848

.64341

[95% Conf. Interval]

.9114138

-1.062495

1.092176

-.4237337

A unit increase in high school GPA increases college GPA by 1.001 units.

H0: β1 = 0

HA: β1 ≠ 0

t = (1.001 – 0)/.046 = 21.74

tc,95%,2161= 1.96

Reject H0 and conclude that high school GPA does impact college GPA.

b. When I was in high school, my teachers told me to expect, on average, to earn one grade

lower in college than what I averaged in high school. Based on the results of your regression,

would you agree with my teachers?

If my teachers were correct, then the population regression function would be Fall02GPAi = -1 + 1×HSGPAi + εi.

Note, that only under this population regression function would students earning any hsgpa would end up having

exactly a one unit lower college gpa.

At first glance, one might look at our regression estimates and quickly conclude that the intercept is not equal to -1

so my teachers were incorrect. However, our estimated intercept of -.79 is an estimate; how likely does -.79 result

when the true intercept is -1 is a question that can only be answered using a hypothesis test:

H0 : β0 = -1

HA : β0 ≠ -1

t = (-.74 - -1)/.162 = 1.59

tc,95%,2077 = 1.96

I would fail to reject this hypothesis and conclude that my intercept is statistically no different than -1 which is what

I would need for my college GPA to be one unit less than my high school GPA.

However, this too would be an incorrect approach as it only tests one of the two needed requirements (note, the

slope must equal one AND the intercept must equal -1). What I really need to test is:

H0 : β0 = -1 & β1 = 1

HA : (β0 ≠ -1 & β1 ≠ 1) or (β0 = -1 & β1 ≠ 1) or (β0 ≠ -1 & β1 = 1)

In this case, the alternative hypothesis simply states all of the options not included in the null hypothesis.

To test this, let us impose the null hypothesis: Fall02GPAi = -1 + 1×HSGPAi + εi.. This statement is something that

I cannot estimate, after all there are no coefficients in it. However, if the null hypothesis is true, then it must be that

Fall02GPAi +1 - HSGPAi = εi. If we square both sides and add them up, then I will have a restricted residual sum of

squares. I obtain this in stata by:

. gen restresid = gpa +1 - hsgpa

(33 missing values generated)

. gen restresid2 = restresid^2

(33 missing values generated)

. total restresid2

Total estimation

Number of obs

Total

restresid2

1004.833

=

2081

Std. Err.

[95% Conf. Interval]

30.02165

945.9573

I can produce an f-test using this information: F =

1063.708

(.

.)/

./(

)

=174.12

Fc,2,2079 ≈3.00

In this case, I reject the null hypothesis and conclude my high school teachers were wrong.

c. Now, consider the multivariate regression:

GPA = β0 + β1hsgpa + β2SatVerb + β3SatMath+ β4Runningstart+ β5Fig+ β6FirstGen

d. What is your estimate of β1? How do you interpret your estimate of β1? Why does this differ

from what you found in homework #3?

I find:

174.12142

. reg gpa hsgpa satverb satmath runningstart fig firstgen

Source

SS

df

MS

Model

Residual

288.775092

767.498748

6

2074

48.129182

.370057256

Total

1056.27384

2080

.507823962

gpa

Coef.

hsgpa

satverb

satmath

runningstart

fig

firstgen

_cons

.8877086

.0021184

.0006516

-.0800822

.2122322

-.0498571

-1.867454

Std. Err.

.044665

.0001952

.000203

.036424

.0386718

.0286369

.1747998

t

19.87

10.86

3.21

-2.20

5.49

-1.74

-10.68

Number of obs

F( 6, 2074)

Prob > F

R-squared

Adj R-squared

Root MSE

P>|t|

=

=

=

=

=

=

2081

130.06

0.0000

0.2734

0.2713

.60832

[95% Conf. Interval]

0.000

0.000

0.001

0.028

0.000

0.082

0.000

.8001157

.0017357

.0002536

-.1515137

.1363926

-.1060172

-2.210255

.9753015

.0025012

.0010496

-.0086507

.2880718

.006303

-1.524652

.

In this case, the coefficient on hsgpa tells me that an increase in high school gpa of one unit increases college gpa by

.887 units, holding SAT scores, running start, fig participation and first generation status constant. This last phrase

(holding…) is important because it recognizes that the impact of high school gpa on college gpa has been purged of

the impact of these other variables. It is for this reason that it differs from the estimates you found in part a.

e. Test to see if the variables hsgpa, SatVerb, SatMath, Runningstart, Fig and FirstGen predict a

significant amount of the variation in WWU first quarter GPA.

Ho : β1 = β2 = β3 = β4 = β5 = β6 = 0 ↔ R2 = 0

Ho : R2 ≠ 0

This requires an F-test where the restricted model forces the null hypothesis to be true—that is it forces all slope

coefficients to be equal to zero. I can do that in stata by:

. reg gpa

Source

SS

df

MS

Model

Residual

0

1056.27384

0

2080

.

.507823962

Total

1056.27384

2080

.507823962

gpa

Coef.

_cons

2.783631

My F-statistic is

Std. Err.

.0156214

(.

.)/

./(

)

t

178.19

Number of obs

F( 0, 2080)

Prob > F

R-squared

Adj R-squared

Root MSE

=

=

=

=

=

=

2081

0.00

.

0.0000

0.0000

.71262

P>|t|

[95% Conf. Interval]

0.000

2.752996

2.814266

=130.06

My Fc,95%,6,2074 ≈ 2.10

I reject the null and conclude that hsgpa, satverb, satmath, runningstart, fig, and firstgen statistically explain some of

the variation in college gpa. Said another way, I conclude R2 is not zero.

Note the F-statistic I find is exactly the same one Stata reports in the second line of the right column above the

regression results.

f. Does SatVerb and SatMath predict WWU first quarter GPA? Test this!

This is equivalent to testing

Ho : β2 = β3 = 0

Ho : (β2 = 0 & β3 ≠ 0) or (β2 ≠ 0 & β3 = 0) or (β2 ≠ 0 & β3 ≠ 0)

My restricted regression is:

130.06244

. reg gpa hsgpa runningstart fig firstgen

Source

SS

df

MS

Model

Residual

211.95788

844.31596

4

2076

52.98947

.406703256

Total

1056.27384

2080

.507823962

gpa

Coef.

hsgpa

runningstart

fig

firstgen

_cons

1.014023

-.0180178

.1766598

-.1303211

-.7631823

My f-statistic is

Std. Err.

.0457579

.0379149

.0403859

.0294277

.1623353

(.

.)/

./(

)

t

22.16

-0.48

4.37

-4.43

-4.70

Number of obs

F( 4, 2076)

Prob > F

R-squared

Adj R-squared

Root MSE

P>|t|

0.000

0.635

0.000

0.000

0.000

=

=

=

=

=

=

2081

130.29

0.0000

0.2007

0.1991

.63773

[95% Conf. Interval]

.9242872

-.092373

.0974587

-.188032

-1.081539

1.103759

.0563375

.2558609

-.0726103

-.4448252

= 103.79

Fc,95%,2,2074 ≈ 3.00

I reject the null hypothesis and conclude that satverb and satmath do predict fall quarter college GPA.

g. Offer two reasons why the coefficient on runningstart is negative. Is this coefficient

statistically different than zero? Is it “economically” important?

From the full regression, I would reject the null hypothesis that the runninstart coefficient is zero at the 10%, but not

the 5% level. For the moment, let us say that the 10% level is an acceptable rejection level for our purposes. If this

is the case, then I conclude that students in the running start program do worse as freshmen than those entering as

traditional students. Why might this be? There are a large number of reasons. Here I offer a few:

1. It might be that running start students enroll in more difficult courses their fall quarter (perhaps thinking that they

are prepared for them given their prior history);

2. Running start students may be worse students than traditional students in some ways unmeasured by the included

independent variables.

© Copyright 2026