Depth extraction of 3D objects using axially distributed image sensing

Suk-Pyo Hong,1 Donghak Shin,2,* Byung-Gook Lee,2 and Eun-Soo Kim1

1 HoloDigilog Human Media Research Center (HoloDigilog), 3D Display Research Center (3DRC), Kwangwoon University, Wolgye-Dong, Nowon-Gu, Seoul 139-701, South Korea
2 Institute of Ambient Intelligence, Dongseo University, 47, Jurye-Ro, Sasang-Gu, Busan 617-716, South Korea
* [email protected]

Abstract: The axially distributed image sensing (ADS) technique can capture 3D objects and reconstruct high-resolution slice plane images of them. In this paper, we propose a computational method for depth extraction of 3D objects using ADS. In the proposed method, high-resolution elemental images are recorded by simply moving the camera along the optical axis, and the recorded elemental images are used to generate a set of 3D slice images with a computational reconstruction algorithm based on ray back-projection. To extract the depth of a 3D object, we propose a simple block comparison algorithm between the first elemental image and the set of 3D slice images. This provides a simple computation process and robustness for depth extraction. To demonstrate our method, we carry out preliminary experiments on three scenarios of 3D objects and present the results. To the best of our knowledge, this is the first report on extracting depth information using an ADS method.

©2012 Optical Society of America

OCIS codes: (100.6890) Three-dimensional image processing; (110.6880) Three-dimensional image acquisition; (110.4190) Multiple imaging.

References and links

1. T. Okoshi, Three-dimensional Imaging Techniques (Academic Press, 1976).
2. J.-S. Ku, K.-M. Lee, and S.-U. Lee, “Multi-image matching for a general motion stereo camera model,” Pattern Recognit. 34(9), 1701–1712 (2001).
3. A. Stern and B. Javidi, “Three-dimensional image sensing, visualization, and processing using integral imaging,” Proc. IEEE 94(3), 591–607 (2006).
4. K. Itoh, W. Watanabe, H. Arimoto, and K. Isobe, “Coherence-based 3-D and spectral imaging and laser-scanning microscopy,” Proc. IEEE 94(3), 608–628 (2006).
5. J.-H. Park, K. Hong, and B. Lee, “Recent progress in three-dimensional information processing based on integral imaging,” Appl. Opt. 48(34), H77–H94 (2009).
6. Y.-M. Kim, K.-H. Hong, and B. Lee, “Recent researches based on integral imaging display method,” 3D Research 1(1), 17–27 (2010).
7. M. DaneshPanah, B. Javidi, and E. A. Watson, “Three dimensional imaging with randomly distributed sensors,” Opt. Express 16(9), 6368–6377 (2008).
8. D. Shin and B. Javidi, “3D visualization of partially occluded objects using axially distributed sensing,” J. Disp. Technol. 7(5), 223–225 (2011).
9. D. Shin and B. Javidi, “Three-dimensional imaging and visualization of partially occluded objects using axially distributed stereo image sensing,” Opt. Lett. 37(9), 1394–1396 (2012).
10. J.-H. Park, S. Jung, H. Choi, Y. Kim, and B. Lee, “Depth extraction by use of a rectangular lens array and one-dimensional elemental image modification,” Appl. Opt. 43(25), 4882–4895 (2004).
11. J.-H. Park, Y. Kim, J. Kim, S.-W. Min, and B. Lee, “Three-dimensional display scheme based on integral imaging with three-dimensional information processing,” Opt. Express 12(24), 6020–6032 (2004).
12. G. Passalis, N. Sgouros, S. Athineos, and T. Theoharis, “Enhanced reconstruction of three-dimensional shape and texture from integral photography images,” Appl. Opt. 46(22), 5311–5320 (2007).
13. D.-H. Shin, B.-G. Lee, and J.-J. Lee, “Occlusion removal method of partially occluded 3D object using subimage block matching in computational integral imaging,” Opt. Express 16(21), 16294–16304 (2008).
14. J.-H. Jung, K. Hong, G. Park, I. Chung, J.-H. Park, and B. Lee, “Reconstruction of three-dimensional occluded object using optical flow and triangular mesh reconstruction in integral imaging,” Opt. Express 18(25), 26373–26387 (2010).
15. D.-C. Hwang, D.-H. Shin, S.-C. Kim, and E.-S. Kim, “Depth extraction of three-dimensional objects in space by the computational integral imaging reconstruction technique,” Appl. Opt. 47(19), D128–D135 (2008).
16. B.-G. Lee, H.-H. Kang, and E.-S. Kim, “Occlusion removal method of partially occluded object using variance in computational integral imaging,” 3D Research 1(2), 2–10 (2010).
17. M. DaneshPanah and B. Javidi, “Profilometry and optical slicing by passive three-dimensional imaging,” Opt. Lett. 34(7), 1105–1107 (2009).
18. J.-S. Jang and B. Javidi, “Three-dimensional synthetic aperture integral imaging,” Opt. Lett. 27(13), 1144–1146 (2002).
19. A. Stern and B. Javidi, “3-D computational synthetic aperture integral imaging (COMPSAII),” Opt. Express 11(19), 2446–2451 (2003).
20. Y. S. Hwang, S.-H. Hong, and B. Javidi, “Free view 3-D visualization of occluded objects by using computational synthetic aperture integral imaging,” J. Disp. Technol. 3(1), 64–70 (2007).
21. M. Cho and B. Javidi, “Three-dimensional visualization of objects in turbid water using integral imaging,” J. Disp. Technol. 6(10), 544–547 (2010).
22. R. Schulein, M. DaneshPanah, and B. Javidi, “3D imaging with axially distributed sensing,” Opt. Lett. 34(13), 2012–2014 (2009).
23. D. Shin, M. Cho, and B. Javidi, “Three-dimensional optical microscopy using axially distributed image sensing,” Opt. Lett. 35(21), 3646–3648 (2010).
24. D. Shin and B. Javidi, “Visualization of 3D objects in scattering medium using axially distributed sensing,” J. Disp. Technol. 8(6), 317–320 (2012).

#172985 - $15.00 USD (C) 2012 OSA Received 20 Jul 2012; revised 17 Sep 2012; accepted 18 Sep 2012; published 24 Sep 2012 8 October 2012 / Vol. 20, No. 21 / OPTICS EXPRESS 23044

1. Introduction

Acquiring depth information from three-dimensional (3D) objects in the real world is an important issue in diverse fields such as computer vision, 3D display, and 3D recognition.
Passive 3D imaging makes it possible to extract depth information by recording different perspectives of 3D objects [1–9]. Among passive 3D imaging technologies, integral imaging has been widely studied for depth extraction [10–17]. In the original structure of integral imaging, a lens array is used to record 3D objects. However, the recorded elemental images have low resolution, so the extracted depth is poor. To overcome this problem, synthetic aperture integral imaging (SAII) was proposed [18–21], in which multiple cameras or a moving camera are used to acquire depth information. Although SAII can provide high-resolution depth information, its system structure is complex because it requires either a 2D grid of many cameras or a camera that moves along both the horizontal and vertical directions.

Recently, a simple modification of the SAII structure was proposed. It is called the axially distributed image sensing (ADS) method [8,22–24], in which longitudinal perspective information is obtained by translating a camera along its optical axis. The high-resolution elemental images recorded with ADS can be used to acquire high-resolution depth information of real-world 3D objects. ADS has the disadvantage of a limited object pickup zone. Despite this limitation, ADS is attractive because it provides high-resolution elemental images with only a simple axial movement.

In this paper, we propose a new depth extraction method for 3D objects using ADS. In the proposed method, we first record high-resolution elemental images by moving the camera along the optical axis. The recorded elemental images are then reconstructed as a set of slice plane images using a computational reconstruction algorithm based on ray back-projection. Finally, the depth information of the 3D objects is extracted by a block comparison algorithm between the first elemental image and the slice plane images.
The proposed depth extraction is implemented by a simple summation of elemental images and a comparison of the intensity distribution between blocks from the first elemental image and the slice plane images. This avoids the complex correspondence search process of conventional depth extraction algorithms. Our method therefore provides a simple computation process and robustness for depth extraction. To show the usefulness of the proposed method, we carry out preliminary experiments on 3D objects and present the results.

2. Depth extraction method using ADS

The proposed depth extraction method using ADS is shown in Fig. 1. It is divided into three parts: the ADS pickup part, the digital reconstruction part, and the depth extraction part.

Fig. 1. Proposed depth extraction processes using ADS

2.1 ADS pickup

The ADS pickup part for 3D objects is shown in Fig. 2, in which a single camera records elemental images while moving along the optical axis [7].

Fig. 2. Pickup process in ADS

We suppose that the focal length of the imaging lens is $g$. Different elemental images are captured along the optical axis ($z$ axis) when the 3D objects are located at a distance $z_1$ away from the first camera. We record $k$ elemental images by shifting the camera $k-1$ times, so that the last camera is located at $z_k$. If $\Delta z$ is the separation between two adjacent camera positions in the ADS pickup process, as shown in Fig. 2, the $i$th elemental image is captured by the camera located at a distance $d_i = Z - z_1 - (i-1)\Delta z$ from the object. Since each elemental image is captured at a different camera position, it contains the object image at a different scale. In other words, when $i = 1$, the object image is the smallest in the elemental image because the camera position is farthest from the 3D object.
Conversely, the object image is largest when $i = k$.

2.2 Slice image reconstruction using the recorded elemental images

The second part of the proposed depth extraction method is digital reconstruction. Its aim is to generate slice plane images from the elemental images recorded in the ADS pickup part. The digital reconstruction process, shown in Fig. 3, is the inverse of the ADS pickup process. It can be implemented as an inverse mapping procedure through a pinhole model [7]. Each camera in the ADS pickup part is modeled as a pinhole and an elemental image located at the original camera position, as shown in Fig. 3. We assume that the reconstruction plane for the computational reconstruction of the 3D image is located at distance $z = L$. Each elemental image is inversely projected through its corresponding pinhole onto the reconstruction plane at $L$. The $i$th inversely projected elemental image is magnified by $m_i = (L - z_i)/g$. At the reconstruction plane, all inversely mapped elemental images are superimposed on each other with their different magnifications. In Fig. 3, $E_i$ is the $i$th elemental image with size $p \times q$, and $I_L$ is the superposition of all inversely mapped elemental images at the reconstruction plane $L$. $I_L$ is given by

$$I_L = \frac{1}{k} \sum_{i=1}^{k} U_i E_i \qquad (1)$$

where $U_i$ is the upsampling operator for the magnification of $E_i$ at the reconstruction plane $z = L - z_i$, and the size of $I_L$ is $m_1 p \times m_1 q$. Since superimposing all elemental images can require a large computational load because of the large magnification factor, we modify Eq. (1) by using the downsampling operator $D_r$, which downsamples an image by a factor of $r$. Then, it becomes

$$I_L \approx \frac{1}{k} \sum_{i=1}^{k} D_r U_i E_i \qquad (2)$$

To generate the 3D volume information, we repeat this process for different distances.

Fig. 3. Principle of digital reconstruction in ADS

2.3 Depth extraction process

In the depth extraction part of the proposed method, we extract depth information from the slice plane images produced by the digital reconstruction of Eq. (2). Each reconstructed slice plane image is a mixture of focused and blurred image regions that depends on the reconstruction distance. A focused image of a 3D object is reconstructed only at the original position of that object, whereas blurred images appear at other distances. Based on this principle, we look for the focused image parts in the reconstructed slice plane images.

The depth extraction algorithm used in this paper is shown in Fig. 4. It is based on separating focused image regions from defocused ones. To find the focused image part in each slice plane image, we use block comparison between the first elemental image and the slice plane images. Note that the first elemental image is composed only of focused images of the 3D objects. We select block images at the same position in both the first elemental image and the slice plane images, as shown in Fig. 4, and apply the block comparison algorithm to the two selected blocks. Here, block comparison calculates the intensity error between the block of the first elemental image ($D_r U_1 E_1$) and the corresponding blocks of the slice images ($I_L$) reconstructed at each $L$. The intensity error between the blocks along the reconstruction distance $L$ is defined as the sum of absolute differences (SAD):

$$\mathrm{SAD}_L(x, y) = \sum_{i=1}^{b} \sum_{j=1}^{b} \left| D_r U_1 E_1(x+i, y+j) - I_L(x+i, y+j) \right| \qquad (3)$$

where the block size is $b \times b$.
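The superposition of Eq. (2) and the block error of Eq. (3) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the optical axis is assumed to pass through the image center, resampling is nearest-neighbor, $D_r U_i$ is applied as the single relative scale $m_i/m_1 = (L - z_i)/(L - z_1)$, and each pixel is averaged over the elemental images that actually cover it rather than over all $k$.

```python
import numpy as np

def rescale_about_center(img, scale):
    """Magnify img by `scale` about its center (nearest neighbor).

    Hypothetical helper. Returns the resampled image and a boolean mask of
    output pixels whose source coordinate falls inside the input image.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    # Inverse mapping: each output pixel looks up its source pixel.
    ys = np.rint(cy + (np.arange(h) - cy) / scale).astype(int)
    xs = np.rint(cx + (np.arange(w) - cx) / scale).astype(int)
    valid = ((ys >= 0) & (ys < h))[:, None] & ((xs >= 0) & (xs < w))[None, :]
    out = img[np.clip(ys, 0, h - 1)[:, None], np.clip(xs, 0, w - 1)[None, :]]
    return np.where(valid, out, 0.0), valid

def reconstruct_slice(elemental, z_cam, L):
    """Sketch of Eq. (2): superimpose rescaled elemental images at plane L.

    Assumption: D_r U_i is the relative scale (L - z_i) / (L - z_1), so the
    slice has the same size as the first elemental image.
    """
    acc = np.zeros_like(elemental[0], dtype=float)
    cnt = np.zeros_like(acc)
    for E, z in zip(elemental, z_cam):
        scale = (L - z) / (L - z_cam[0])
        scaled, valid = rescale_about_center(E.astype(float), scale)
        acc += scaled
        cnt += valid  # count only images that cover each pixel
    return acc / np.maximum(cnt, 1)

def block_sad(ref, slice_img, x, y, b):
    """Eq. (3): sum of absolute differences between two b x b blocks.

    Assumption: x indexes columns, y indexes rows, offsets run 0..b-1.
    """
    a = ref[y:y + b, x:x + b].astype(float)
    s = slice_img[y:y + b, x:x + b].astype(float)
    return float(np.abs(a - s).sum())
```

Given a list `elemental` of equal-sized grayscale arrays and the matching camera positions `z_cam`, `reconstruct_slice(elemental, z_cam, L)` returns one slice, and `block_sad(elemental[0], slice_img, x, y, 8)` returns the error of one 8 × 8 block. Block offsets run from 0 to $b-1$ here, whereas Eq. (3) indexes them from 1 to $b$.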
Using the SAD result, the depth of each point $(x, y)$ is extracted by finding the value of $L$ at which $\mathrm{SAD}_L$ is minimized over the range of $L$. This can be formulated as

$$\hat{L}(x, y) = \arg\min_{L} \mathrm{SAD}_L(x, y) \qquad (4)$$

where the size of $\hat{L}$ is $m_1 p / r \times m_1 q / r$. The depth map is obtained by calculating the depth of all points in the image.

Fig. 4. Block comparison algorithm between the first elemental image and slice images

2.4 Depth accuracy in ADS

The accuracy of the extracted depth information depends on the step size of $L$. The minimum step size $\delta$ can be calculated using Fig. 5. As shown in Fig. 5, for two values of the reconstruction distance ($L$ and $L + \delta$), the inversely magnified elemental image is superimposed at each reconstruction plane. If the object is located at a distance $d$ from the optical axis and $c$ is the pixel size of the camera sensor, the elemental image must be superimposed at a different pixel of the reconstruction plane for the two reconstructed plane images to be distinguishable. The minimum step size $\delta$ is obtained when the pixel positions in the two reconstruction planes differ by 1 pixel. Then, it becomes

$$\delta = \frac{(L - z_1)\,c}{d} \qquad (5)$$

We evaluated Eq. (5) for practical parameters, as shown in Fig. 6. As $d$ increases, $\delta$ decreases. When $c = 5$ μm, $d = 100$ mm, $L = 500$ mm, and $z_1 = 300$ mm, the minimum step size $\delta$ becomes 10 μm.

Fig. 5. Ray diagram for calculation of minimum step size

Fig. 6. Calculation results of minimum step size for practical parameters.

3. Experiments and results

To demonstrate our depth extraction method using ADS, we performed preliminary experiments on three scenarios composed of different 3D objects. The experimental structure is shown in Fig. 7.
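The step-size relation of Eq. (5) in Section 2.4 is easy to check numerically. The short Python sketch below evaluates it for the parameters quoted in Section 2.4 and shows how $\delta$ shrinks as the off-axis distance $d$ grows. The function name `min_step_size` and the swept values of $d$ are illustrative assumptions, not taken from the paper.

```python
def min_step_size(L_mm, z1_mm, c_mm, d_mm):
    """Eq. (5): delta = (L - z1) * c / d, with every length in millimeters."""
    return (L_mm - z1_mm) * c_mm / d_mm

# Parameters quoted in Section 2.4: c = 5 um, d = 100 mm, L = 500 mm, z1 = 300 mm.
delta_mm = min_step_size(L_mm=500.0, z1_mm=300.0, c_mm=0.005, d_mm=100.0)
print(f"delta = {delta_mm * 1e3:.1f} um")  # prints "delta = 10.0 um"

# Hypothetical sweep over d (values chosen only for illustration).
for d_mm in (25.0, 50.0, 100.0, 200.0):
    step_um = min_step_size(500.0, 300.0, 0.005, d_mm) * 1e3
    print(f"d = {d_mm:5.1f} mm -> delta = {step_um:.1f} um")
```

The first result reproduces the 10 μm figure stated in Section 2.4; because $\delta$ is inversely proportional to $d$, doubling $d$ halves the minimum step.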
Fig. 7. Three scenarios of objects for the optical experiments

The first scenario is composed of the simple characters ‘k’ and ‘w’. The second scenario has two toy objects, a ‘car’ and a ‘sign’. The last one has three ‘car’ objects with continuous depth. The front and rear objects are positioned approximately 350 mm and 500 mm away from the first camera, respectively. They are located approximately 70 mm from the optical axis. We use a Nikon camera (D7000) with 3872 × 2592 pixels. An imaging lens with focal length f = 40 mm is used in these experiments. The camera is translated in Δz = 3 mm increments for a total of k = 41 elemental images and a total displacement of 120 mm. Some of the elemental images for the second scenario are shown in Fig. 8.

Fig. 8. Four examples of the recorded elemental images for the second scenario: (a) first elemental image, (b) 10th elemental image, (c) 20th elemental image, (d) 30th elemental image

After recording the elemental images using ADS, we reconstruct slice plane images of the 3D objects. The 41 recorded elemental images are fed to the digital reconstruction algorithm of Eq. (2). In the digital reconstruction process, the pinhole gap was 40 mm and the interval between reconstruction planes was 10 mm, which is much larger than the minimum step size of Eq. (5). To simplify the computation, the downsampling factor was 1/U1, so that Ui was normalized by U1. The reconstruction plane was moved from 300 mm to 600 mm, which gives 31 slice plane images. Some reconstructed slice plane images for the second scenario of Fig. 7(b) are shown in Fig. 9.

Fig. 9. Reconstructed slice images for the second scenario (a) at 350 mm, (b) at 420 mm, (c) at 500 mm.

The ‘car’ object is in focus at L = 350 mm, as shown in Fig. 9(a), while the ‘sign’ object is reconstructed clearly at L = 500 mm, as shown in Fig. 9(c). A blurred image is obtained at L = 420 mm, as shown in Fig. 9(b).

We then estimated the depth of the objects using the first (reference) elemental image and the 31 slice plane images. We applied the block comparison algorithm to these images with a block size of 8 × 8. The estimated depths are shown in Fig. 10. The depths were extracted well, which indicates that the proposed method can extract the 3D information of objects effectively.

Fig. 10. Extracted depth map for three scenarios

4. Conclusion

In conclusion, we have presented a depth extraction method using ADS. In the proposed method, high-resolution elemental images were recorded by simply moving the camera along the optical axis, and the recorded elemental images were used to generate a set of 3D slice images with a computational reconstruction algorithm based on ray back-projection. To extract the depth of a 3D object, a block comparison algorithm between the first elemental image and the set of 3D slice images was used. Since ADS provides high-resolution slice plane images of 3D objects, we can extract high-resolution 3D depth information. We performed preliminary experiments on 3D objects and presented the results to show the usefulness of the proposed method.

Acknowledgment

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MEST) (No. 2012-0009223).

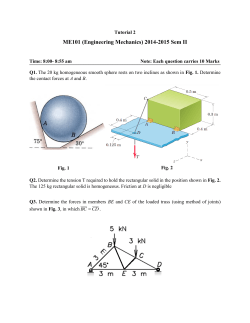
