Fisher Kernel for Deep Neural Activations

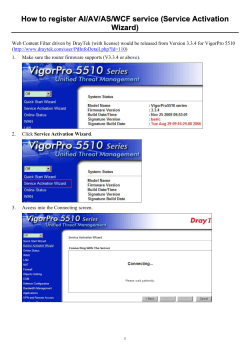

Exploiting the Power of Deep Learning with Constrained Data

Anthony S. Paek, Co-founder and CEO, Cldi Inc.

Donggeun Yoo, Ph.D. Candidate, RCV Lab., KAIST

• Scene classification.
• Object detection.
• Fine-grained classification.
• Image retrieval.
• Attribute recognition.
• Domain transfer.

Neural activation of a pre-trained CNN: a generic image representation.

Diagram labels: parameter transfer, target DB, neural activation, target classifier.

Successful use of CNN activations.

• Object classification on PASCAL VOC 2007 [Oquab et al., CVPR 2014]: 60.3%, 77.70%.
• Object detection on PASCAL VOC 2007 [Girshick et al., CVPR 2014]: 34.30%, 58.50%.
• Scene classification on MIT Indoor 67 [Razavian et al., CVPRW 2014]: 63.18%, 68.24%.
• Fine-grained classification on Oxford 102 Flowers [Razavian et al., CVPRW 2014]: 72.70%, 86.80%.
• Image retrieval on Oxford 5k [Babenko et al., ECCV 2014]: 44.80%, 55.70%.

How have CNN activations been used so far?

• Single activation vector per image (most works), from a pre-trained CNN: 72.36% on PASCAL VOC 2007 classification [Chatfield et al., BMVC 2014].
• Average pooling of multiple activations per image, from a pre-trained CNN: 73.60% on PASCAL VOC 2007 classification. Marginal improvement.

Our strategy:

1. Abundant amount of local activation statistics.
2. State-of-the-art Fisher kernel framework. [Perronnin et al., ECCV 2010]

Diagram labels: pre-trained CNN, multi-scale dense regions, Fisher kernel.

Fast extraction of multi-scale dense activations. Idea: FC layers can be implemented with Conv layers.
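The idea that an FC layer can be run as a convolution can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `fc_as_conv`, the toy sizes, and the random weights are all assumptions made for illustration. The FC weight matrix is applied to every k×k window of a feature map, so a feature map of exactly k×k yields a single activation vector, while a larger one yields a dense grid of them.

```python
import numpy as np

def fc_as_conv(feature_map, fc_weights, fc_bias, k):
    """Apply FC weights as a k x k 'valid' convolution (hypothetical helper).

    feature_map: (H, W, C) output of the last convolutional stage.
    fc_weights:  (k * k * C, D) weight matrix of the FC layer.
    fc_bias:     (D,) bias of the FC layer.
    Returns an (H - k + 1, W - k + 1, D) grid of activation vectors.
    """
    H, W, C = feature_map.shape
    D = fc_weights.shape[1]
    out = np.empty((H - k + 1, W - k + 1, D))
    for i in range(H - k + 1):
        for j in range(W - k + 1):
            # Each output position sees exactly one k x k window (one patch).
            patch = feature_map[i:i + k, j:j + k, :].reshape(-1)
            out[i, j] = patch @ fc_weights + fc_bias
    return out

# Toy sizes (assumed for illustration, far smaller than a real network).
rng = np.random.default_rng(0)
k, C, D = 6, 4, 5
W_fc = rng.standard_normal((k * k * C, D))
b_fc = rng.standard_normal(D)

small = rng.standard_normal((k, k, C))   # feature map of the original size
large = rng.standard_normal((8, 8, C))   # feature map of a larger input image

single = fc_as_conv(small, W_fc, b_fc, k)  # one activation vector
dense = fc_as_conv(large, W_fc, b_fc, k)   # 3 x 3 grid of activation vectors
```

Each vector in `dense` equals what the FC layer would produce for the corresponding window alone, which is why a single forward pass over a larger image gives dense local activations.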
Network layouts:

• 227×227×3 input: Conv. 1 to Conv. 5, then FC 6, FC 7, FC 8.
• 322×322×3 input: Conv. 1 to Conv. 5, then Conv. 6 and Conv. 7 (in place of FC 6 and FC 7), then FC 8.

Multi-scale dense activations. Each 4,096-dimensional activation vector comes from a single patch.

We can extract thousands of rich activations (FC7) in a reasonable time. CPU: 2.6GHz Intel Xeon. GPU: GTX TITAN Black.

Pooling: Fisher kernel.

Diagram labels: 7 scales, Fisher kernel, Fisher vector, SVM.

However, it does not show significant improvements.

• Single activation: 72.36% in VOC 2007.
• This approach: 74.96%.

The devil is in the scale property of CNN activations!

• Dense-SIFT captures low-level texture in small regions well.
• CNN activation captures object parts or entire objects well.

Figure labels: image scale; PASCAL VOC 2007.

Solution: Average pooling of normalized Fisher vectors in each scale.

Diagram labels: for each of the 7 scales, Fisher kernel followed by ℓ2-norm; then average pooling; final representation.

It shows significant improvements in VOC 2007.

• Single activation: 72.36%
• Naïve Fisher: 74.96% (+2.60%)
• Proposed method: 83.20% (+10.84%)

Evaluation with three different visual recognition tasks.

• Scene classification: MIT Indoor 67.
• Object classification: PASCAL VOC 2007.
• Fine-grained classification: Oxford 102 Flowers.

Baselines and ours.

• +7~18% to the single activation.
• +6~15% to the average pooling.

State-of-the-art and ours (MIT Indoor 67)

State-of-the-art and ours (PASCAL VOC 2007). FT: Fine-tuning CNN on VOC 2007. BB: Use of bounding box annotation in training.

State-of-the-art and ours (Oxford 102 Flowers)

One more thing: Obtaining a reliable object-confidence map.

1) A single local activation can be encoded to a Fisher vector.
2) Each corresponding region is scored by SVM weights trained for classification.
3) We vote each region to form an object confidence map.

Examples in PASCAL VOC 2007. Note: No bounding box annotations are used in training.

• (This work) Classification mAP: 83.2% (State-of-the-art)
• (Coming up) Detection mAP: TBA (State-of-the-art)

ICCV 2015 (submitted)

Appendix

Experimental setup.

• Number of scales: 7. [Shelhamer et al., 2014]
• Pre-trained CNN: Caffe reference model.
• Target activation layer: FC7.
• PCA dimension: 128.
• Number of Gaussians: 256.
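The "Solution" slide (a Fisher vector per scale, ℓ2-normalized, then average-pooled) can be sketched in NumPy as below. This is a sketch under stated assumptions, not the authors' code. The function names, the random toy data, and the toy sizes (4 Gaussians, 8 dimensions) are hypothetical stand-ins for the 256 Gaussians and 128 PCA dimensions listed in the setup. The GMM is assumed to be diagonal and already trained, the activations are assumed to be PCA-reduced beforehand, and the Fisher vector uses the usual gradients with respect to means and variances. The slides do not say whether any further normalization follows the average pooling, so none is applied here.

```python
import numpy as np

def fisher_vector(X, weights, means, sigmas):
    """Fisher vector of descriptors X (T, D) under a diagonal GMM.

    weights: (K,) mixture weights, means: (K, D), sigmas: (K, D) std devs.
    Returns the gradients w.r.t. means and variances, shape (2 * K * D,).
    """
    T = X.shape[0]
    diff = (X[:, None, :] - means[None]) / sigmas[None]        # (T, K, D)
    # Log-likelihood per component, up to a constant shared by all components.
    log_p = (np.log(weights)[None]
             - 0.5 * np.sum(diff ** 2, axis=2)
             - np.sum(np.log(sigmas), axis=1)[None])           # (T, K)
    log_p -= log_p.max(axis=1, keepdims=True)                  # numerical stability
    gamma = np.exp(log_p)
    gamma /= gamma.sum(axis=1, keepdims=True)                  # posteriors
    g_mu = np.einsum('tk,tkd->kd', gamma, diff)
    g_mu /= T * np.sqrt(weights)[:, None]
    g_sigma = np.einsum('tk,tkd->kd', gamma, diff ** 2 - 1.0)
    g_sigma /= T * np.sqrt(2.0 * weights)[:, None]
    return np.concatenate([g_mu.ravel(), g_sigma.ravel()])

def scale_wise_pooling(activations_per_scale, weights, means, sigmas):
    """Encode each scale separately, l2-normalize, then average over scales."""
    normalized = []
    for X in activations_per_scale:
        fv = fisher_vector(X, weights, means, sigmas)
        normalized.append(fv / (np.linalg.norm(fv) + 1e-12))
    return np.mean(normalized, axis=0)

# Toy usage: 7 scales with a growing number of local activations per scale.
rng = np.random.default_rng(0)
K, D = 4, 8                                   # toy sizes, assumed
gmm_weights = np.full(K, 1.0 / K)
gmm_means = rng.standard_normal((K, D))
gmm_sigmas = np.ones((K, D))
scales = [rng.standard_normal((n, D)) for n in (1, 4, 9, 16, 25, 36, 49)]
representation = scale_wise_pooling(scales, gmm_weights, gmm_means, gmm_sigmas)
```

Normalizing before averaging gives every scale the same weight in the final representation, regardless of how many local activations that scale contributes.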
