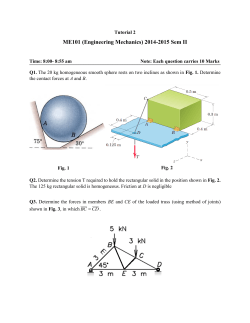

‘Magasins Dessenne’ at Malia, Crete - Politeia - Kripis

'Magasins Dessenne' at Malia, Crete: Photogrammetric 3D Restitution of the Archaeological Excavation

This work was carried out within the project Kripis-Politeia by the Laboratory of Geophysical-Satellite Remote Sensing & Archaeoenvironment (GeoSat ReSeArch) of the Institute for Mediterranean Studies - Foundation for Research and Technology Hellas (IMS-FORTH), under the auspices of the French Archaeological School at Athens (Dr. Maud Devolder). The team held a photogrammetric session to document in 3D the trench excavation of the so-called ‘Magasins Dessenne’ at Malia. Dr. Gianluca Cantoro supervised the acquisition of the measurements. Mrs. Stephania Michalopoulou, Dr. Sylviane Dederix and Dr. Apostolos Sarris assisted during the fieldwork. The entire photogrammetric session was completed in two working days (July 24 and 25, 2013).

1. The fieldwork

The fieldwork consisted of image acquisition with different photographic devices and methods. Its aim was a complete three-dimensional reconstruction of the entire excavation, which the local administration later covered to better preserve the site. Three different devices were proposed for this purpose: a professional DSLR camera, manually controlled and moved; a compact camera mounted on an unmanned, remotely controlled aerial vehicle; and a combined infrared and RGB sensor of the kind used in gaming devices. The investigated area was an excavated trench of about 420 square meters at the south-western edge of the Minoan Palace at Malia [coordinates 35.29266, 25.492234]. A number of rooms and corridors with standing structures of variable height had been excavated and fully documented with traditional topographic methods.
In particular, the preliminary graphic documentation required a number of measured targets to be placed on the surfaces of walls and stones (around 5000 3D points in the Greek coordinate system). These points had been drawn with a red or blue marker at specific locations on the structures and measured with a Total Station by Lionel Fadin. They were still visible during the photogrammetric session, so they were reused to improve the accuracy and geo-location of the 3D model.

The photogrammetric session was organized in six phases:
a) UAV photographs for a bird's-eye view and an overall simplified 3D view;
b) target identification and photo-acquisition strategy;
c) photographs with sufficient overlap and full site coverage;
d) a first rapid processing to identify areas lacking 3D points for the three-dimensional reconstruction;
e) experimental Kinect scanning sessions of the southern portion of the trench;
f) overall processing and output optimization.

The different phases are briefly outlined in the following paragraphs.

2. UAV photographs for bird's-eye view and overall simplified 3D view

The first phase in the field was a low-altitude photogrammetric coverage of the entire excavation and the adjoining Minoan Palace. It was carried out with a compact RGB camera mounted on a remotely controlled flying device. The resulting 3D model was of relatively low quality, but it still provided a good deal of useful information and was essential for the subsequent phases. Its first output was an updated ortho-photo of the area under investigation, with an approximate orientation and scale. This ortho-photo (FIG. 1) was then overlaid with the topographic points and geo-referenced more precisely. This made it easy to associate the exact location of each topographic point with the photographs (FIG. 2), which improved their correspondences in the 3D model.
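The geo-referencing of the ortho-photo can be sketched by fitting a 2D affine transformation by least squares. The fit maps the pixel positions of the topographic targets in the ortho-photo to their surveyed coordinates. This is an assumption: the report does not state which transformation or software was used. The function names and the synthetic control points below are hypothetical.

```python
import numpy as np

def fit_affine(pixels, ground):
    """Least-squares 2D affine transform from pixel (col, row) to ground (E, N).

    pixels, ground: arrays of shape (n, 2) with n >= 3 non-collinear points.
    Returns a 2x3 matrix A such that [E, N] = A @ [col, row, 1].
    """
    pixels = np.asarray(pixels, dtype=float)
    ground = np.asarray(ground, dtype=float)
    # Design matrix: a column of ones carries the translation terms
    X = np.hstack([pixels, np.ones((len(pixels), 1))])
    # Solve X @ A.T ~= ground in the least-squares sense
    A_T, *_ = np.linalg.lstsq(X, ground, rcond=None)
    return A_T.T

def apply_affine(A, pixels):
    """Map pixel coordinates to ground coordinates with a fitted 2x3 matrix."""
    pixels = np.asarray(pixels, dtype=float)
    X = np.hstack([pixels, np.ones((len(pixels), 1))])
    return X @ A.T

# Hypothetical control points: pixel positions of four targets in the ortho-photo
pix = np.array([[100, 200], [850, 230], [480, 900], [120, 880]])
# Synthetic "surveyed" coordinates from a known transform (1 cm per pixel, north-up)
true_A = np.array([[0.01, 0.0, 1000.0], [0.0, -0.01, 2000.0]])
gnd = apply_affine(true_A, pix)

A = fit_affine(pix, gnd)
residuals = gnd - apply_affine(A, pix)           # per-target misfit in ground units
rmse = np.sqrt((residuals ** 2).sum(axis=1).mean())
print(A)
print(rmse)
```

With real, noisy measurements, the per-target residuals are the most useful output: a target with a residual much larger than the others has probably been misidentified in the image.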
Geo-referencing the ortho-photo in this way also made it much easier to link each photograph, stone and point to one another.

FIG. 1: Detail of the ortho-photo from UAV images
FIG. 2: Detail of the topographic points over the UAV ortho-photo

This first 3D model was not sufficient for the purpose of the fieldwork for two reasons. The camera used had medium-low quality and resolution. In addition, the images were taken from above, so they documented only the top of each wall and not its façade. Some areas (the south-western corner of the trench) were also completely covered by trees and bushes and were clearly visible only from the ground (FIG. 3).

FIG. 3: Point cloud from UAV showing the lack of 3D information below the tree canopy

3. Target identification and photo-acquisition strategy

Based on the available sketch plan of the excavation, the areas to be processed (and the corresponding photographs) were given a preliminary, arbitrary division. In this case, six different projects were created to simplify the processing and limit errors. Because the projects overlapped and were accurately geo-referenced, they could be merged quickly and efficiently at the end of the process.

The targets on the surfaces of stones and walls were of great value for processing the photographs. An important step in image processing is to improve automatic image matching by supplying known coordinates for specific pixels. This step also helps narrow down the neighboring images to be matched. The excavation was large and needed many overlapping photographs to document it. Comparing every one of the more than 4000 pictures with all the others would therefore have been far too time-consuming.
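Exhaustive matching of n photographs compares n(n-1)/2 pairs, which is nearly 8 million pairs for 4000 images. Known targets can narrow this search. The minimal sketch below assumes that each photograph has a hypothetical set of target IDs identified in it, and that only pairs sharing at least two targets are matched. The threshold, image names and target IDs are illustrative. The report does not say how its software selected neighboring images.

```python
from collections import defaultdict
from itertools import combinations

def exhaustive_pairs(n_images):
    """Number of image pairs compared by brute-force matching."""
    return n_images * (n_images - 1) // 2

def candidate_pairs(targets_per_image, min_shared=2):
    """Keep only image pairs that see at least `min_shared` common targets.

    targets_per_image: dict mapping image name -> set of target IDs seen in it.
    An inverted index (target -> images) ensures that only images sharing
    at least one target are ever paired.
    """
    images_per_target = defaultdict(set)
    for img, targets in targets_per_image.items():
        for t in targets:
            images_per_target[t].add(img)

    shared = defaultdict(int)  # (img_a, img_b) -> number of common targets
    for imgs in images_per_target.values():
        for a, b in combinations(sorted(imgs), 2):
            shared[(a, b)] += 1
    return sorted(pair for pair, count in shared.items() if count >= min_shared)

print(exhaustive_pairs(4000))  # 7998000

# Hypothetical target identifications for four photographs
seen = {
    "IMG_0001": {101, 102, 103},
    "IMG_0002": {102, 103, 104},
    "IMG_0003": {104, 105},
    "IMG_0004": {201, 202},
}
print(candidate_pairs(seen))  # [('IMG_0001', 'IMG_0002')]
```

Requiring more than one shared target gives some protection against a single misidentified point, the kind of association error described next, pulling two unrelated images into the same match.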
Enough targets were easily identifiable in each photograph. However, their high density sometimes made the work difficult, because it greatly increased the chance of associating a target with the wrong pixel. Most images were perspective views, so a large share of the points was projected onto a single frame regardless of whether they were actually visible. For example, for a frontal view of a wall façade, the photogrammetric software also used the points on the back façade of the wall, which are not visible in that photograph. This created obvious problems that could lead to errors (FIG. 4).

FIG. 4: 3D ground control points are projected onto the photo-frame regardless of their actual visibility. Points such as 4554, 4552 and 4551 in the photograph are located on a stone behind the wall depicted in the image.

4. Photographs with sufficient overlap and full site coverage

A basic principle of photogrammetry is that a reliable 3D point requires the same spot to be seen in at least three views, two being the geometric minimum for triangulation. This is quite easy to achieve for nearly flat surfaces. The irregular concavities and convexities of wall and stone surfaces make it a real challenge. Meeting this goal required an impressive number of images to be processed. The restitution also had to be high in resolution and accuracy, so full-size images had to be used, with an obvious increase in processing time. A total of 4471 photographs were taken from the ground over the two working days. The photographers walked around every accessible structure or isolated stone and shot from as many angles as possible. Photographs were taken with a Canon EOS 5D Mark II at the highest resolution (21 megapixels, full frame), with stock lenses and focus set to infinity.

5. First rapid processing to identify possible lack of 3D points for three-dimensional reconstruction

The amount of data to be handled required a preliminary pre-processing phase at low resolution. This first step helped to highlight possible issues, such as poorly documented areas or low-quality images that could have degraded the entire model. This preliminary check also proved to be the most time-consuming step, because it required continuous interaction between computer and operator. Most of the process could not be fully automated and needed a number of trials and errors before the output could be corrected.

This initial processing, together with the merging of the different sub-groups of images processed together, produced a first sparse point cloud. This cloud was also the basis for building the surface mesh, which is now accessible on the web portal of the project. The mesh is a compromise: it offers complete coverage without the level of detail that would have made the model unusable on most computers (FIG. 5). This processing also produced an accurately geo-referenced orthophoto and a micro-DEM of the excavation trench.

FIG. 5: Perspective view of the excavation from the textured photogrammetric 3D model.

6. Experimental Kinect scanning sessions of the southern portion of the trench

Game developers increasingly use Microsoft Kinect sensors as low-cost 3D scanners. This practice, known as "hacking", involves writing software that reads the real-time output of the color camera. The software then combines it with the depth measured by the infrared depth sensor on board these devices. Sensors like the Kinect (or the Asus Xtion and other PrimeSense sensors) can capture the real world on the fly, at real scale, as a colored surface and point cloud.
These sensors can therefore be used as true scanners for a variety of real surfaces. An exploratory test was conducted with a Kinect on a specific area of the excavation, and the Kinect output was processed with Skanect. Unfortunately, the infrared projection of the scanner is too weak for it to work in direct sunlight. For that reason, tests were conducted in areas shaded by the tree canopy at the south-eastern edge of the trench. The output from Skanect is a textured surface (FIG. 6). The mesh itself looks good and detailed, though slightly rounded and smoothed. The color texture, however, is still quite poor because of the sensor's low-resolution camera. Several approaches are being developed to solve this issue. We are confident that it will soon be solved and that a proper 3D scanner will become available at a small cost.

FIG. 6: Screenshot of an example of a simple Kinect scan with (left) and without texture

7. Overall processing and output optimization

The basic cloud and mesh are of great value, since they already cover a large part of the processing. They fix the accurate position and orientation of the cameras, together with the calibration of their internal parameters. Whenever a specific area of the excavation needs a higher level of detail (down to millimeter accuracy), targeted processing can be carried out on the minimum subset of pictures. The available model also allows a number of accurate measurements, such as the width of a specific wall, its height, the average size of stones or the approximate volume of a wall. These can be taken at any time with just a 3D mesh viewer (such as MeshLab or CloudCompare).
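Of the measurements listed above, volume is the least obvious to compute. The minimal sketch below assumes that a portion of wall has been cut out of the model and closed into a watertight, consistently oriented triangle mesh. A real excavation mesh is open where walls meet the ground, which is why the text speaks of an approximate volume. Summing the signed volumes of the tetrahedra formed by each triangle and the origin gives the enclosed volume. The box is a hypothetical stand-in for a wall segment. The mesh viewers named above may report such values directly, and this sketch only shows the underlying computation.

```python
import numpy as np

def mesh_volume(vertices, faces):
    """Volume enclosed by a closed, consistently oriented triangle mesh.

    Sums the signed volumes of the tetrahedra formed by each triangle and the
    origin (divergence theorem). abs() makes the result independent of whether
    all faces point outward or all point inward.
    """
    v = np.asarray(vertices, dtype=float)
    f = np.asarray(faces, dtype=int)
    a, b, c = v[f[:, 0]], v[f[:, 1]], v[f[:, 2]]
    signed = np.einsum("ij,ij->i", a, np.cross(b, c)) / 6.0
    return abs(signed.sum())

def box_mesh(length, width, height):
    """Hypothetical wall block as a closed box of 12 outward-facing triangles."""
    L, W, H = length, width, height
    verts = [(0, 0, 0), (L, 0, 0), (L, W, 0), (0, W, 0),
             (0, 0, H), (L, 0, H), (L, W, H), (0, W, H)]
    faces = [(0, 2, 1), (0, 3, 2),   # bottom (z = 0)
             (4, 5, 6), (4, 6, 7),   # top (z = H)
             (0, 1, 5), (0, 5, 4),   # front (y = 0)
             (3, 7, 6), (3, 6, 2),   # back (y = W)
             (0, 4, 7), (0, 7, 3),   # left (x = 0)
             (1, 2, 6), (1, 6, 5)]   # right (x = L)
    return verts, faces

verts, faces = box_mesh(2.0, 0.6, 1.2)          # dimensions in meters
print(round(mesh_volume(verts, faces), 3))      # 1.44
```

Linear measurements such as the width or height of a wall need no special handling. They reduce to the distance between two picked vertices, for example `np.linalg.norm(p1 - p2)`.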
The model can also be used to extract vector documentation, such as cross-sections or true orthographic plan drawings, for use in further studies.

Gianluca Cantoro

© Copyright 2026