Vision in Brains and Machines

Bruno A. Olshausen

Helen Wills Neuroscience Institute, School of Optometry

and Redwood Center for Theoretical Neuroscience

UC Berkeley

Brains vs. machines

“the brain doesn’t do things the way an engineer would”

“we needn’t be constrained by the way biology does things”

Brains vs. machines

“the brain doesn’t do things the way an engineer would”

“we needn’t be constrained by the way biology does things”

“brains and machines use a common set of operating principles”

Cybernetics/neural networks

Norbert Wiener

Warren McCulloch & Walter Pitts

Frank Rosenblatt

By the study of systems such as the perceptron, it is hoped that those fundamental laws of organization which are common to all information handling systems, machines and men included, may eventually be understood.

— Frank Rosenblatt, Psychological Review, 1958.

Two modern developments that arose from the confluence of ideas between disciplines

Information theory

Natural scene statistics

Signal and image processing

Feature detection

Machine learning

Models of visual cortex

The ‘Ratio Club’ (1952)

Redundancy reduction

(Barlow, 1961)

H. B. Barlow, Physiological Laboratory, Cambridge University

Possible Principles Underlying the Transformations of Sensory Messages

The hypothesis is that sensory relays recode sensory messages so that their redundancy is reduced but comparatively little information is lost.

...it constitutes a way of organizing the sensory information so that, on the one hand, an internal model of the environment causing the past sensory inputs is built up, while on the other hand the current sensory situation is represented in a concise way which simplifies the task of the parts of the nervous system responsible for learning and conditioning.

A wing would be a most mystifying structure if one did not know that birds flew. One might observe that it could be extended a considerable distance, that it had a smooth covering of feathers with conspicuous markings, that it was operated by powerful muscles, and that strength and lightness were prominent features of its construction. These are important facts, but by themselves they do not tell us that birds fly. Yet without knowing this, and without understanding something of the principles of flight, a more detailed examination of the wing itself would probably be unrewarding. I think that we may be at an analogous point in our understanding of the sensory side of the central nervous system. We have got our first batch of facts from the anatomical, neurophysiological, and psychophysical study of sensation and perception, and now we need ideas about what operations are performed by the various structures we have examined. For the bird's wing we can say that it accelerates downwards the air flowing past it and so derives an upward force which supports the weight of the bird; what would be a similar summary of the most important operation performed at a sensory relay?

It seems to me vitally important to have in mind possible answers to this question when investigating these structures, for if one does not one will get lost in a mass of irrelevant detail and fail to make the crucial observations. In this paper I shall discuss three hypotheses according to which the answers would be as follows:

1. Sensory relays are for detecting, in the incoming messages, certain "passwords" that have a particular key significance for the animal.

2. They are filters, or recoding centers, whose "pass characteristics ...

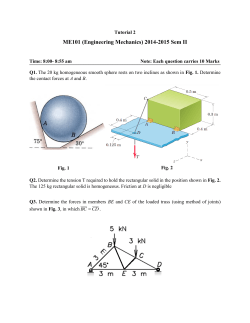

Models and experimentally-tested predictions

arising from the redundancy reduction theory

• Histogram equalization

Laughlin (1981); Bell & Sejnowski (1995)

• Predictive coding

Srinivasan, Laughlin & Dubs (1982)

• Whitening

Atick & Redlich (1990; 1992); van Hateren (1992; 1993); Dan, Atick & Reid (1996)

[Figure: Joseph J. Atick and A. Norman Redlich; horizontal axis: spatial frequency, c/deg]

Laughlin (1981):

... coding characteristic is used in digital image processing, and is called "histogram equalization" (5). Note that this coding procedure amplifies inputs in proportion to their expected frequency of occurrence, using the response range for the better resolution of common events, rather than reserving large portions for the improbable.

To see if the first order interneurons of the blowfly's compound eye use this coding procedure I compared their contrast-response functions with the contrast levels measured in natural scenes, such as dry sclerophyll woodland and lakeside vegetation. Relative intensities were measured across these scenes using a detector which scanned horizontally, like the ommatidium of a turning fly. It consisted of a PIN photodiode, operating within its linear range in the focal plane of a quartz lens. A combination of coloured glass filters (Schott, KG 3 + BG 38) was used to give the detector a spectral sensitivity similar to a fly monopolar cell (3). The scans were digitised at intervals of 0.07° and convolved with a Gaussian point spread function of half-width 1.4° corresponding to the angular sensitivity of a fly photoreceptor (6). Contrast values were obtained by dividing each scan into intervals of 10°, 25° or 50°. Within each interval the mean intensity, Ī, was found, and subtracted from every data point to give the fluctuation about the mean ΔI. This difference value was divided by the mean to give the contrast ΔI/Ī. The cumulative probability distribution of contrast levels (Fig. 2) was derived from 15000 readings. As expected, the range of contrasts encountered increased with the width of the interval, but the difference between the two larger intervals, 25° and 50°, was small.

The interneuron contrast-response function was measured using the techniques developed for intracellular recording in the intact retina of the blowfly, Calliphora stygia (..., 6). Individual LMC's were light adapted by a bright steady background and the responses to sudden increments and decrements about this level recorded (Fig. 2). Repeated responses to the same stimulus were averaged to enhance the reliability of the data. The light adapted contrast-response function approximates to the ...

... response equiprobability have a marginal effect on entropy (4), there should be little redundancy associated with the LMC response to natural scenes. The successful application of a central concept from information theory, entropy, to a neuron ...

Fig. 2. The contrast-response function of light adapted fly LMC's compared to the cumulative probability function for natural contrasts (50° interval). Response is normalised with the maximum amplitude to light off as 0.0, and the maximum amplitude to an increment as 1.0. Data points averaged from 6 cells; range bars show total scatter. Inset shows the averaged responses of an LMC to four contrast steps (the cell hyperpolarises to increments and depolarises to decrements). The stimulus, a Siemens LD57C light emitting diode (LED) subtending 2° and filling the centre of the LMC's receptive field, was mounted in the centre of the reflecting screen of diameter 16°. LED and screen brightnesses were equalised by setting the mean LED intensity so that its substitution for the screen in the centre of the field generated a negligible response. Contrast steps were generated by setting the LED driving voltage to a new level for 100 ms, and the corresponding contrast determined by recording the driving voltages and referring these to the diode's voltage/intensity curve, determined in situ. Mean light levels were 2.5 to 3.0 log units above the intensity producing a half maximal response from the dark adapted LMC.

[Figure axes: contrast ΔI/Ī (horizontal); cumulative probability (vertical)]
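The histogram-equalization idea attributed to Laughlin (1981) above, in which a neuron's response to an input equals the cumulative probability of that input, can be sketched in a few lines. This is only an illustration under stated assumptions: the Gaussian "contrast" samples are a hypothetical stand-in for measured natural-scene contrasts, and `make_equalizing_response` is a name invented here.

```python
import bisect
import random


def make_equalizing_response(samples):
    """Return r(c): the empirical cumulative probability of contrast c."""
    ordered = sorted(samples)
    n = len(ordered)

    def response(c):
        # Fraction of observed contrasts <= c, a value in [0, 1].
        return bisect.bisect_right(ordered, c) / n

    return response


random.seed(0)
# Hypothetical stand-in for natural contrasts (an assumption, not measured data).
contrasts = [random.gauss(0.0, 0.4) for _ in range(15000)]
response = make_equalizing_response(contrasts)

# Count how often each tenth of the response range is used by the same ensemble.
counts = [0] * 10
for c in contrasts:
    counts[min(int(response(c) * 10), 9)] += 1
print(counts)
```

Under this mapping every tenth of the response range is used about equally often, which is the sense in which the response range is spent on common inputs rather than reserved for improbable ones.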

V1 is highly overcomplete

Barlow (1981)

Temporal reconstruction of the image

The homunculus also has to face the problem that the image is often moving continuously, but is only represented by impulses at discrete moments in time. In these days he often has to deal with visual images derived from cinema screens and television sets that represent scenes sampled at quite long intervals, and we know ...

[Figure labels: LGN afferents; layer 4 cortex; IVb]

FIGURE 8. A tracing of the outlines of the granule cells of area 17 in layers IVb and IVc of monkey cortex, where the incoming geniculate fibres terminate (from fig. 3c of Hubel & Wiesel 1972). The dots at the top indicate the calculated separation of the sample points ...

Dense codes (e.g., ascii): 2^N

Sparse, distributed codes: (N choose K)

Local codes (e.g., grandmother cells): N

(From Foldiak & Young, 1995)
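To get a feel for the three coding schemes on this slide, the sketch below counts distinct binary activity patterns over N units, reading the slide's 2^N, (N choose K) and N as those counts. That reading, the function name `code_capacity`, and the example values N = 100 and K = 5 are assumptions made for illustration.

```python
from math import comb


def code_capacity(n_units: int, k_active: int) -> dict:
    """Number of distinct binary patterns each coding scheme can represent."""
    return {
        "dense": 2 ** n_units,              # any subset of units may be active
        "sparse": comb(n_units, k_active),  # exactly k of the n units active
        "local": n_units,                   # exactly one unit active
    }


caps = code_capacity(n_units=100, k_active=5)
print(caps["local"], caps["sparse"], caps["dense"])
```

Even with only 5 of 100 units active, the sparse code can represent far more patterns than the local code while remaining far below the dense code.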

Field (1987)

David J. Field, Physiological Laboratory, University of Cambridge, Cambridge CB2 3EG, UK

Relations between the statistics of natural images and the response properties of cortical cells

J. Opt. Soc. Am. A, Vol. 4, No. 12, December 1987, p. 2379. Received May 15, 1987; accepted August 14, 1987

The relative efficiency of any particular image-coding scheme should be defined only in relation to the class of images that the code is likely to encounter. To understand the representation of images by the mammalian visual system, it might therefore be useful to consider the statistics of images from the natural environment (i.e., images with trees, rocks, bushes, etc). In this study, various coding schemes are compared in relation to how they represent the information in such natural images. The coefficients of such codes are represented by arrays of mechanisms that respond to local regions of space, spatial frequency, and orientation (Gabor-like transforms). For many classes of image, such codes will not be an efficient means of representing information. However, the results obtained with six natural images suggest that the orientation and the spatial-frequency tuning of mammalian simple cells are well suited for coding the information in such images if the goal of the code is to convert higher-order redundancy (e.g., correlation between the intensities of neighboring pixels) into first-order redundancy (i.e., the response distribution of the coefficients). Such coding produces a relatively high signal-to-noise ratio and permits information to be transmitted with only a subset of the total number of cells. These results support Barlow's theory that the goal of natural vision is to represent the information in the natural environment with minimal redundancy.

INTRODUCTION

Since Hubel and Wiesel's classic experiments on neurons in the visual cortex, we have moved a great deal closer to an understanding of the behavior and connections of cortical neurons. A number of recent models of early visual processing have been quite effective in accounting for a wide range of physiological and psychophysical observations. However, although we know much more about how the early stages of the visual system process information, there is still a great deal of disagreement about the reasons why the visual system works as it does. Theories of why cortical neurons behave as they do have varied widely from Fourier analysis to edge detection. However, no general theory has emerged as a clear favorite. Edge detection has proved to be an effective means of coding many types of images, but the evidence that cortical neurons can generally be classified as edge detectors is lacking (e.g., Refs. 8 and 9). The notion that the visual cortex performs a global Fourier transform is no longer given serious consideration.

With few exceptions (e.g., Refs. 15-18), theories of why visual neurons behave as they do have failed to give serious consideration to the properties of the natural environment. Our present theories about the function of cortical neurons are based primarily on the response of such neurons to stimuli such as checkerboards, sine-wave gratings, long straight ...

... why cortical neurons might behave in this way. Daugman points out that the Gabor code represents an effective means of filling the information space with functions that extend in both space and frequency. However, this does not necessarily imply that such a code must be an efficient means of representing the information in any image. As we shall see, the efficiency of a code will depend on the statistics of the input (i.e., the images). For a wide variety of images, a Gabor code will be quite an inefficient means of representing information. Clearly, the definition of an efficient, or optimal, code depends on two parameters: the goal of the code and the statistics of the input.

Simple-cell receptive fields are well suited to encode images with a small fraction of active units.

Fig. 12. Relative response of the different subsets of sensors as a function of the bandwidth. For example, the plot labeled 0.01 represents the average response of the top 1% of the sensors relative to the average response of all the sensors. If we consider the sensor response to be subject to noise, then this plot can be related to the signal-to-noise ratio. It suggests that spatial-frequency bandwidths in the range of 1 octave produce the highest signal-to-noise ratio.

[Figure: panels Image A to Image F; horizontal axis: Bandwidth (octaves); curves labeled 0.01, 0.02, 0.05, 0.10, 0.20]

... active sensors have a high response relative to the average. If we consider the fact that cortical neurones are inherently rather noisy in their response to a stimulus, this can be considered a measure of the signal-to-noise ratio of different types of sensors. Coding information into channels with approximately 1-octave bandwidths produces a representation in which a small proportion of the cells represents a large proportion of the information with a high signal-to-noise ratio.

We have so far considered only channels for which the ratio of the spatial-frequency bandwidth to the orientation bandwidth is constant (ΔF/Δθ = 1.0). Figure 13 shows results with various different aspect ratios. One of the difficulties of such an analysis is that the two-dimensional Gabor functions are not polar separable. That is, the spatial-frequency tuning is not independent of the orientation tuning (in degrees). Extending the orientation bandwidth actually extends the response of the channel to higher frequencies. With the 1/f amplitude spectrum, the response of the channel will be dependent primarily on the lower frequencies, with little effect produced by the extension. Nonetheless, the results of such an analysis are shown in Fig. 13. As can be seen, an aspect ratio of about 0.5-1.0 is somewhat optimal, although the effects are small.

Fig. 13. Relative response of the different subsets as a function of ...

[Figure: horizontal axis: Spatial frequency bandwidth / Orientation bandwidth]

Gabor codes have a number of interesting mathematical properties. As described by Gabor and more recently by Daugman, a Gabor function represents a minimum in terms of the spread of uncertainty in space and spatial frequency (actually time and frequency in Gabor's description). However, the Gabor code is mathematically pure in only the Cartesian coordinates where all the Gabor channels are the same size in frequency and hence have sensors that are all the same size in space (i.e., all the rectangles in the diagrams in Fig. 1 are the same size). In such a case, the Gabor code represents the most effective means of packing the information space with a minimum of spread and hence a minimum of overlap between neighboring units in both space and frequency.

However, modifying the basic structure of the code to permit a polar distribution such as that shown in our rosettes (Fig. 3) alters the relative spread and overlap between neighbors. In this section some results are described that were obtained with a function that partially restores some of the destructive effects of the polar mapping. This function will be called the log Gabor function. It has a frequency response described by

$$G(f) = \exp\left\{ -\frac{[\log(f/f_0)]^2}{2\,[\log(\sigma/f_0)]^2} \right\}, \qquad (12)$$

that is, the frequency response is a Gaussian on a log frequency axis. Figure 14 provides a comparison between the ...
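The log Gabor frequency response quoted from Field (1987), Eq. (12), G(f) = exp{-[log(f/f0)]^2 / 2[log(σ/f0)]^2}, is easy to evaluate directly. The sketch below is a minimal illustration; the example values f0 = 4 and σ = 2.2, and the convention of returning 0 for f <= 0, are assumptions made here rather than anything specified in the source.

```python
import math


def log_gabor(f: float, f0: float, sigma: float) -> float:
    """G(f) = exp(-[log(f/f0)]^2 / (2 [log(sigma/f0)]^2)), for sigma != f0."""
    if f <= 0:
        # Assumed convention: log frequency is undefined at or below zero,
        # so the response is taken to be zero there.
        return 0.0
    return math.exp(-(math.log(f / f0) ** 2) / (2 * math.log(sigma / f0) ** 2))


f0, sigma = 4.0, 2.2  # hypothetical centre frequency and width parameter
for f in (1.0, 2.0, 4.0, 8.0, 16.0):
    print(f, round(log_gabor(f, f0, sigma), 4))
```

Because the response is a Gaussian in log frequency, it peaks at f0 and is symmetric in octaves: the response one octave above f0 equals the response one octave below.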

Field (1994)

Simple-cell receptive fields

are well suited to maximize

non-Gaussianity (kurtosis) of

response histograms.
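Kurtosis, the measure of non-Gaussianity named on this slide, can be estimated directly from a set of responses. The sketch below uses excess kurtosis (zero for a Gaussian) and compares Gaussian samples with Laplacian samples; the Laplacian is only a hypothetical stand-in for a peaked, heavy-tailed response histogram, not data from Field (1994).

```python
import random


def kurtosis(values):
    """Excess kurtosis: fourth central moment / variance^2 - 3 (0 for a Gaussian)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    fourth = sum((v - mean) ** 4 for v in values) / n
    return fourth / var ** 2 - 3.0


random.seed(1)
gaussian = [random.gauss(0.0, 1.0) for _ in range(50000)]
# Assumed stand-in for sparse responses: the difference of two exponential
# variables is Laplace-distributed (peaked at zero, heavy tails).
sparse = [random.expovariate(1.0) - random.expovariate(1.0) for _ in range(50000)]

print(round(kurtosis(gaussian), 2), round(kurtosis(sparse), 2))
```

A response histogram with a sharp peak at zero and heavy tails, which is what a sparse code produces, yields a clearly positive value.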

Internal

model

External

world

.

.

.

Sparse coding

image model

(Olshausen & Field, 1996;

Chen, Donoho & Saunders 1995)

I(x,y)

φi(x,y)

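$$I(\vec{x}) = \sum_{i=1}^{M} a_i \, \phi_i(\vec{x}) + \epsilon(\vec{x})$$

image: I(x); neural activities (sparse): a_i; features: φ_i(x); other stuff: ε(x)

Learned dictionary {φ_i}

[Figure: learned dictionary; axes x, y; spatial frequency (cycles/window): 0 to 1.2, 1.2 to 2.5, 2.5 to 5.0]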

Olshausen & Field (1996)
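In the sparse coding image model, an image is a weighted sum of dictionary features plus a residual, with only a few non-zero weights a_i. One common way to infer such weights for a fixed dictionary is iterative soft thresholding (ISTA) with an L1 penalty. To be clear, this is a swapped-in, generic technique used for illustration, not necessarily the inference procedure or sparsity cost of Olshausen & Field (1996); the toy dictionary, `lam`, `step` and all function names are assumptions.

```python
import math


def soft_threshold(x, t):
    """Shrink x toward zero by t (proximal operator of the L1 penalty)."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def reconstruct(dictionary, coeffs):
    """Sum_i a_i * phi_i, with the dictionary given as a list of feature vectors."""
    n = len(dictionary[0])
    return [sum(a * phi[p] for a, phi in zip(coeffs, dictionary)) for p in range(n)]


def infer_coefficients(image, dictionary, lam=0.1, step=0.4, n_iter=1000):
    """ISTA: minimise 0.5*||I - sum_i a_i phi_i||^2 + lam * sum_i |a_i| over a.

    `step` must not exceed 1 / (largest eigenvalue of Phi^T Phi); that bound
    is 0.5 for the toy dictionary below.
    """
    coeffs = [0.0] * len(dictionary)
    for _ in range(n_iter):
        recon = reconstruct(dictionary, coeffs)
        residual = [x - r for x, r in zip(image, recon)]
        # Gradient step on the reconstruction error, then shrinkage.
        coeffs = [
            soft_threshold(a + step * sum(r * p for r, p in zip(residual, phi)),
                           step * lam)
            for a, phi in zip(coeffs, dictionary)
        ]
    return coeffs


# Toy overcomplete dictionary: 3 unit-norm features for a 2-pixel "image".
c = math.sqrt(0.5)
phis = [[1.0, 0.0], [0.0, 1.0], [c, c]]
image = [2.0, 2.0]
a = infer_coefficients(image, phis)
print(a)
```

The image could be explained by the first two features together, but the penalty favours the single diagonal feature, so only one coefficient ends up non-zero. That is the non-linear, sparse encoding the slides contrast with pixel values.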

Non-linear encoding

Outputs of sparse coding network (a_i)

Pixel values

Image I(x,y)

Many other developments

• Divisive normalization Schwartz & Simoncelli (2001); Lyu (2011)

• Non-Gaussian, elliptically symmetric distributions

Zetzsche et al. (1999); Sinz & Bethge (2010); Lyu & Simoncelli (2009)

• Contours

Geisler et al. (2001, 2009); Sigman et al. (2001); Hoyer & Hyvarinen (2002)

• Complex cells, higher-order structure

Hyvarinen & Hoyer (2000); Karklin & Lewicki (2003, 2009); Berkes et al. (2009); Cadieu & Olshausen (2012)

Natural Scene Statistics

(Hancock, MA 1997)

Barlow Donoho Li

Applications of sparse coding

Denoising / Inpainting / Deblurring

Compressed sensing

Computer vision

Deep learning

Hubel & Wiesel (1962, 1965)

Simple

Text-fig. 19. Possible scheme for explaining the organization of simple receptive fields. A large number of lateral geniculate cells, of which four are illustrated in the upper right in the figure, have receptive fields with 'on' centres arranged along a straight line on the retina. All of these project upon a single cortical cell, and the synapses are supposed to be excitatory. The receptive field of the cortical cell will then have an elongated 'on' centre indicated by the interrupted lines in the receptive-field diagram to the left of the figure.

In a similar way, the simple fields of Text-fig. 2D-G may be constructed by supposing that the afferent 'on'- or 'off'-centre geniculate cells have their field centres appropriately placed. For example, field-type G could be formed by having geniculate afferents with 'off' centres situated in the region below and to the right of the boundary, and 'on' centres above and to the left. ...

Complex

The properties of complex fields are not easily accounted for by supposing that these cells receive afferents directly from the lateral geniculate body. Rather, the correspondence between simple and complex fields noted in Part I suggests that cells with complex fields are of higher order, having cells with simple fields as their afferents. These simple fields would all have identical axis orientation, but would differ from one another in their exact retinal positions. An example of such a scheme is given in Text-fig. 20. ...

... One may imagine that it receives afferents from a set of simple cortical cells with fields of type C, Text-fig. 2, all with vertical axis orientation, and staggered along a horizontal line. An edge of light would activate one or more of these simple cells wherever it fell within the complex field, and this would tend to excite the higher-order cell.

Similar schemes may be proposed to explain the behaviour of other complex units. One need only use the corresponding simple fields as building blocks, staggering them appropriately over a wide region. ...

Text-fig. 20. Possible scheme for explaining the organization of complex receptive fields. A number of cells with simple fields, of which three are shown schematically, are imagined to project to a single cortical cell of higher order. Each projecting neurone has a receptive field arranged as shown to the left: an excitatory region to the left and an inhibitory region to the right of a vertical straight-line boundary. The boundaries of the fields are staggered within an area outlined by the interrupted lines. Any vertical-edge stimulus falling across this rectangle, regardless of its position, will excite some simple-field cells, leading to excitation of the higher-order cell.

Hypercomplex

FIG. 38. Wiring diagrams that account for the properties of hypercomplex cells. ...

Neocognitron

(Fukushima 1980)

image → feature extraction → pooling → feature extraction → pooling → objects

“LeNet”

(Yann LeCun et al., 1989)

‘HMAX’

(Riesenhuber & Poggio, 1999; Serre, Wolf & Poggio, 2005)

View-tuned cells

Complex composite cells (C2)

Composite feature cells (S2)

Complex cells (C1)

Simple cells (S1)

weighted sum

MAX
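The two operations labelled in the HMAX diagram, a weighted sum for 'S' units and a MAX for 'C' units, can be illustrated on a one-dimensional toy signal. This is a rough sketch of the idea only, not the published model of Riesenhuber & Poggio: the 1-D setting, the two-tap `edge` template and all function names are assumptions.

```python
def simple_unit(patch, template):
    """'S' unit: weighted sum of its inputs (template matching)."""
    return sum(p * w for p, w in zip(patch, template))


def complex_unit(afferent_responses):
    """'C' unit: MAX over afferent 'S' units tuned to the same feature."""
    return max(afferent_responses)


def c_response(signal, template):
    """Apply one template at every position of a 1-D signal, then pool with MAX."""
    k = len(template)
    s_responses = [simple_unit(signal[i:i + k], template)
                   for i in range(len(signal) - k + 1)]
    return complex_unit(s_responses)


edge = [-1.0, 1.0]                 # hypothetical 1-D "edge" template
a = [0, 0, 1, 1, 1, 0, 0, 0]       # rising edge near the left
b = [0, 0, 0, 0, 0, 1, 1, 0]       # the same rising edge, shifted right
print(c_response(a, edge), c_response(b, edge))
```

The S responses differ between the two signals because they depend on position, while the pooled C response is the same for both. This is the position tolerance that the alternation of S and C layers is meant to build up.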

Deep Belief Networks

(Hinton & Salakhutdinov, 2006)

... network to recover the data from the code. ... minimizing the discrepancy between the original data and its reconstruction. The required gradients are easily obtained by using the chain rule to backpropagate error derivatives first through the decoder network and then through the encoder network (1). The whole system is ...

Fig. 1. Pretraining consists of learning a stack of restricted Boltzmann machines (RBMs), each ...

[Figure: panels Pretraining, Unrolling, Fine-tuning; Encoder and Decoder; layer sizes 2000, 1000, 500 and a 30-unit code layer; weights W1 to W4, transposed in the decoder]

‘Google Brain’

(Quoc Le et al. 2012)

Building high-level features using large-scale unsupervised learning

... the cortex. They also demonstrate that convolutional DBNs (Lee et al., 2009), trained on aligned images of faces, can learn a face detector. This result is interesting, but unfortunately requires a certain degree of supervision during dataset construction: their training images (i.e., Caltech 101 images) are aligned, homogeneous and belong to one selected category.

We visualize histograms of activation values for face images and random images in Figure 2. It can be seen, even with exclusively unlabeled data, the neuron learns to differentiate between faces and random distractors. Specifically, when we give a face as an input image, the neuron tends to output value larger than the threshold, 0. In contrast, if we give a random image as an input image, the neuron tends to output value less than 0.

Figure 2. Histograms of faces (red) vs. no faces (blue). The test set is subsampled such that the ratio between faces and no faces is one.

4.4. Visualization

In this section, we will present two visualization techniques to verify if the optimal stimulus of the neuron is indeed a face. The first method is visualizing the most responsive stimuli in the test set. Since the test set is large, this method can reliably detect near optimal stimuli of the tested neuron. The second approach is to perform numerical optimization to find the optimal stimulus (Berkes & Wiskott, 2005; Erhan et al., 2009; Le et al., 2010). In particular, we find the norm-bounded input x which maximizes the output f of the ...

Figure 3. Top: Top 48 stimuli of the best neuron from test set. Bottom: The optimal stimulus according to numerical constraint optimization.

4.5. Invariance properties

We would like to assess the robustness of the face detector against common object transformations, ... translation, scaling and out-of-plane rotation. ... we chose a set of 10 face images and perform distortions to them, e.g., scaling and translating. For out-of-plane rotation, we used 10 images of faces ... in 3D (“out-of-plane”) as the test set. To check the robustness of the neuron, we plot its averaged response over the small test set with respect to changes in ... 3D rotation (Figure 4), and translation (Figure 5) ...

Figure 1. The architecture and parameters in one layer of ...

[Figure labels: sparse coding; pooling]

... logical and computational models (Pinto et al., 2008; Lyu & Simoncelli, 2008; Jarrett et al., 2009).

As mentioned above, central to our approach is the use of local connectivity between neurons. In our experiments, the first sublayer has receptive fields of 18x18 pixels and the second sub-layer pools over 5x5 overlapping neighborhoods of features (i.e., pooling size). The neurons in the first sublayer connect to pixels in all input channels (or maps) whereas the neurons in the second sublayer connect to pixels of only one channel (or map). While the first sublayer outputs linear filter responses, the pooling layer outputs the square root of the sum of the squares of its inputs, and therefore, it is known as L2 pooling.

Our style of stacking a series of uniform modules, switching between selectivity and tolerance layers, is reminiscent of Neocognition and

HMAX (Fukushima & Miyake, 1982; LeCun et al.,

1998; Riesenhuber & Poggio, 1999).

It has also

been argued to be an architecture employed by the

brain (DiCarlo et al., 2012).

Although we use local receptive fields, they are

not convolutional: the parameters are not shared

across different locations in the image.

This is
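The excerpt describes a first sublayer of local, non-convolutional linear filters followed by L2 pooling (the square root of the sum of squares over overlapping neighborhoods). A minimal sketch of those two steps follows, under simplifying assumptions that are not in the source: a single input channel, stride 1, and toy sizes (2x2 receptive fields and 2x2 pooling instead of 18x18 and 5x5). The function names `local_filter_layer` and `l2_pool` are hypothetical.

```python
import math


def local_filter_layer(image, filters, rf):
    """First sublayer: each output location owns its own rf x rf filter.

    Because filters[r][c] differs per location, weights are NOT shared
    across the image, unlike a convolutional layer.
    """
    h, w = len(image), len(image[0])
    out = []
    for r in range(h - rf + 1):
        row = []
        for c in range(w - rf + 1):
            weights = filters[r][c]
            row.append(sum(weights[i][j] * image[r + i][c + j]
                           for i in range(rf) for j in range(rf)))
        out.append(row)
    return out


def l2_pool(fmap, size):
    """Second sublayer: sqrt of the sum of squares over overlapping windows."""
    h, w = len(fmap), len(fmap[0])
    return [[math.sqrt(sum(fmap[r + i][c + j] ** 2
                           for i in range(size) for j in range(size)))
             for c in range(w - size + 1)]
            for r in range(h - size + 1)]


# Toy example: a 3x3 image of ones and four distinct local filters.
image = [[1.0] * 3 for _ in range(3)]
filters = [[[[(r * 2 + c + 1) / 4.0] * 2 for _ in range(2)]
            for c in range(2)] for r in range(2)]
responses = local_filter_layer(image, filters, rf=2)
pooled = l2_pool(responses, size=2)
print(responses, pooled)
```

Making the layer convolutional would amount to using the same `weights` at every `(r, c)`, which is exactly the sharing the excerpt says is absent.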

Deep learning

(Krizhevsky, Sutskever & Hinton, 2012)

image

layer 1 (96x55x55)

layer 2 (256x27x27)

layer 3 (384x13x13)

layer 4 (384x13x13)

layer 5 (256x13x13)

layer 6 (4096)

layer 7 (4096)

classification (1000)

60 million weights!
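The "60 million weights" figure can be sanity-checked with a short count. The sketch below rests on details that are not on the slide and are recalled from the Krizhevsky et al. paper, so treat them as assumptions: the kernel sizes, the two-GPU split that halves the input channels seen by layers 2, 4 and 5, and the pooling of layer 5 down to 6x6x256 before layer 6. Biases are left out.

```python
# Assumed conv layers:
# (name, kernel_height, kernel_width, input_channels_per_filter, output_channels)
CONV_LAYERS = [
    ("layer 1", 11, 11, 3, 96),
    ("layer 2", 5, 5, 48, 256),    # 48 = 96 / 2 (assumed two-GPU split)
    ("layer 3", 3, 3, 256, 384),
    ("layer 4", 3, 3, 192, 384),   # 192 = 384 / 2 (assumed two-GPU split)
    ("layer 5", 3, 3, 192, 256),
]

# Assumed fully connected layers: (name, inputs, outputs)
FC_LAYERS = [
    ("layer 6", 6 * 6 * 256, 4096),  # layer 5 assumed pooled to 6x6x256
    ("layer 7", 4096, 4096),
    ("classification", 4096, 1000),
]


def count_weights():
    """Return a dict mapping layer name to its number of weights (no biases)."""
    counts = {}
    for name, kh, kw, cin, cout in CONV_LAYERS:
        counts[name] = kh * kw * cin * cout
    for name, n_in, n_out in FC_LAYERS:
        counts[name] = n_in * n_out
    return counts


counts = count_weights()
total = sum(counts.values())
fc_total = sum(counts[name] for name, _, _ in FC_LAYERS)
for name, n in counts.items():
    print(f"{name:>14}: {n:>11,d}")
print(f"{'total':>14}: {total:>11,d}")
print(f"fully connected share: {fc_total / total:.1%}")
```

Under these assumptions the total lands close to the slide's figure, and the fully connected layers hold the large majority of the weights.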

Performance

(graph credit: Matt Zeiler, Clarifai)

Can deep learning provide insights into cortical representation? (and vice-versa)

[Figure labels: place cells, grid cells, face cells, ?, ‘Gabor filters’]

Deep learning

(Krizhevsky, Sutskever & Hinton, 2012)

Learned first-layer filters

Visualization of filters learned at intermediate layers

Visualizing and Understanding Convolutional Neural Networks (Zeiler & Fergus 2013)

[Figure panels: Layer 2, Layer 3, Layer 4, Layer 5]

http://cs.stanford.edu/people/karpathy/cnnembed/

Visual metamers of a deep neural network

(Nguyen, Yosinski & Clune 2014)

Figure 1. Evolved images that are unrecognizable to humans, [...]

Binding by synchrony

(Reichert & Serre, 2014)

Figure 5. Simple segmentation from phases. (a) The 3-shapes set consisted of b[...] shapes (square, triangle, rotated triangle). (b) Visible states after synchronizatio[...] image in the MNIST+shape dataset, a MNIST digit and a shape were drawn each w[...]

[Figure labels: input, decode, mask; phase axis: 0, π/2, π, 3π/2, 2π]

[...] receives input from a recurrent neural network. The system is trained end-to-end using backpropagation to minimize classification error on a modified MNIST dataset. Remarkably, the model learns to perform a visual search over the image, correcting mistaken movements caused by distractors. Furthermore, the cone cells tile themselves in a similar fashion to those found in the human retina. This layout is composed of a high-acuity region at the center (low-variance Gaussians) surrounded by a low-acuity region (high-variance Gaussians). These initial results indicate the possibility of using deep learning as a mechanism to discover the optimal tiling of cone cells in a data-driven manner. With the emergent visual search behavior learned by our model, we can also investigate the optimal saliency map features for selecting where to attend next.
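The layout described here, low-variance Gaussians at the center and high-variance Gaussians in the periphery, can be illustrated with a small foveated sampler. This is only a sketch with a hand-set layout, not the learned model: in the work described the tiling emerges from training, whereas here the cone offsets and the linear growth of sigma with eccentricity are assumptions, and the names `gaussian_cone_response`, `foveated_glimpse`, `base_sigma`, and `growth` are hypothetical.

```python
import math


def gaussian_cone_response(image, center, sigma):
    """Gaussian-weighted average of the image around one cone's center."""
    cy, cx = center
    num = den = 0.0
    for y, row in enumerate(image):
        for x, val in enumerate(row):
            wgt = math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2))
            num += wgt * val
            den += wgt
    return num / den


def foveated_glimpse(image, fixation, offsets, base_sigma=0.5, growth=0.5):
    """Sample one response per cone; sigma grows with distance from fixation."""
    fy, fx = fixation
    glimpse = []
    for dy, dx in offsets:
        eccentricity = math.hypot(dy, dx)
        sigma = base_sigma + growth * eccentricity  # assumed linear growth
        glimpse.append(gaussian_cone_response(image, (fy + dy, fx + dx), sigma))
    return glimpse


# A 9x9 image with a single bright pixel at the fixation point.
image = [[0.0] * 9 for _ in range(9)]
image[4][4] = 1.0
glimpse = foveated_glimpse(image, fixation=(4, 4), offsets=[(0, 0), (0, 3)])
print(glimpse)
```

The central cone, with its narrow Gaussian, responds strongly to the bright pixel, while the peripheral cone averages over a wide area and barely registers it. A recurrent controller would then choose the next fixation from such glimpses.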

Learning how and where to attend

(Cheung, Weiss & Olshausen, work in progress)

[Diagram labels: Image, Glimpse Network, Recurrent Network, Location Network, Classification Network]

Diagram of our neural network attention model

Red line shows the movements (saccades) by our attention model over the MNIST dataset modified for visual search

The goal of computer vision

In order to gain new insights about visual representation

we must consider the tasks that vision needs to solve.

• How and why did vision evolve?

• What do animals use vision for?

jumping spider

sand wasp

box jellyfish (figure from “Visual Navigation in Box Jellyfish”)

What do you see?


Lorenceau & Shiffrar (1992);

Murray, Kersten, Schrater, Olshausen & Woods (2002)

Perceptual “explaining away”

(Kersten & Yuille, 2003)

[Figure (d): “Target object(s)” or not, “Occlusion object(s)” or not; “Image measurements”, “Auxiliary image measurements”]

Just as principles of optics govern the design of eyes, so do principles of information processing govern the design of brains.
