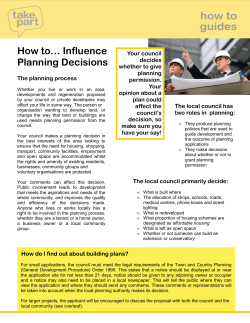

Why kNN is slow

What you see

[Figure: plot of the training set and the testing instance (7,4); axes X and Y, 0 to 10]

What algorithm sees

Training set:

− {(1,9), (2,3), (4,1), (3,7), (5,4), (6,8), (7,2), (8,8), (7,9), (9,6)}

Testing instance:

− (7,4)

Nearest neighbors?

− compare one-by-one to each training instance

− n comparisons

− each takes d operations

Making kNN fast

Training: O(1), but testing: O(nd)

Try to reduce d: reduce dimensionality

− simple feature selection, since PCA/LDA etc. are O(d³)

Try to reduce n: don't compare to all training examples

− idea: quickly identify m << n potential near neighbours

− compare only to those, pick k nearest neighbours → O(md) time

K-D trees: low dimensionality, numeric data

− O(d log n), only works when d << n, inexact: may miss neighbours

inverted lists: high dimensionality, sparse data

− O(d' n'), d' << d, n' << n, works for sparse (discrete) data, exact

locality-sensitive hashing: high-d, sparse or dense

− O(d H), H << n … number of hashes, inexact: may miss neighbours
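The naive procedure (compare the testing instance to each of the n training instances, d operations per comparison) can be sketched in a few lines of Python. This is only an illustration on the training set and testing instance (7,4) from the slides; the function names, the Euclidean distance, and k=3 are assumptions made for the example.

```python
import math

# Training set and testing instance taken from the slides.
TRAIN = [(1, 9), (2, 3), (4, 1), (3, 7), (5, 4),
         (6, 8), (7, 2), (8, 8), (7, 9), (9, 6)]
QUERY = (7, 4)


def euclidean(a, b):
    """Distance between two d-dimensional points: about d operations."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_brute_force(train, query, k):
    """Compare the query to all n training points and keep the k closest.

    The n distance computations cost O(nd); the sort adds O(n log n),
    which is an artefact of this simple sketch.
    """
    # sorted() is stable, so tied points keep their training-set order.
    return sorted(train, key=lambda p: euclidean(p, query))[:k]


if __name__ == "__main__":
    print(knn_brute_force(TRAIN, QUERY, k=3))  # [(5, 4), (7, 2), (9, 6)]
```

Note that (5,4) and (7,2) are both at distance 2 from (7,4), so the 1-nearest neighbour is a tie here.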

Copyright © Victor Lavrenko, 2010

K-D tree example

Build a K-D tree:

− {(1,9), (2,3), (4,1), (3,7), (5,4), (6,8), (7,2), (8,8), (7,9), (9,6)}

− pick random dimension, find median, split data points, repeat

Find nearest neighbours for a new point: (7,4)

− find region containing new point

− compare to all points in region

− up the tree

[Figure: the ten training points on X and Y axes (0 to 10), partitioned by a K-D tree with splits x=6, y=4 and y=8]

Inverted list example

Data structure used by search engines (Google, etc.)

− list of training instances that contain a particular attribute

− main assumption: most attribute values are zero (sparseness)

Given a new testing example:

− fetch and merge inverted lists for attributes present in example

− O(n'd'): d' … attributes present, n' … avg. length of inverted list

D1: “send your password” (spam)

D2: “send us review” (ham)

D3: “send us password” (spam)

D4: “send us details” (ham)

D5: “send your password” (spam)

D6: “review your account” (ham)

new email: “account review”

Inverted lists:

send: 1 2 3 4 5

your: 1 5 6

review: 2 6

account: 6

password: 1 3 5
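The inverted list example can be reproduced with a short Python sketch. It builds one list of document ids per word, then fetches and merges the lists for the words in the new email. The function names and the use of a shared-word count as the merge result are assumptions for illustration; the slides only say that the lists are fetched and merged.

```python
from collections import Counter, defaultdict

# Training documents and labels from the slide (document ids 1..6).
DOCS = {
    1: ("send your password", "spam"),
    2: ("send us review", "ham"),
    3: ("send us password", "spam"),
    4: ("send us details", "ham"),
    5: ("send your password", "spam"),
    6: ("review your account", "ham"),
}


def build_inverted_index(docs):
    """Map each word (attribute) to the sorted list of ids of documents containing it."""
    index = defaultdict(list)
    for doc_id in sorted(docs):
        text, _label = docs[doc_id]
        for word in set(text.split()):
            index[word].append(doc_id)
    return dict(index)


def candidates(index, query):
    """Fetch and merge the inverted lists for the words present in the query.

    Returns a Counter mapping document id -> number of query words it shares
    (an assumed way to merge; only these documents need a full comparison).
    """
    overlap = Counter()
    for word in set(query.split()):
        for doc_id in index.get(word, []):
            overlap[doc_id] += 1
    return overlap


if __name__ == "__main__":
    index = build_inverted_index(DOCS)
    print(index["send"])      # [1, 2, 3, 4, 5]
    print(index["review"])    # [2, 6]
    print(dict(candidates(index, "account review")))
```

For the new email “account review” only the lists for "account" and "review" are touched, so just D2 and D6 become candidates and D1, D3, D4, D5 are never compared.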

k Nearest Neighbours Summary

Key idea: distance between training and testing point

− important to select good distance function

Can be used for classification and regression

Simple, non-linear, asymptotically optimal

− assumes only smoothness

− “let data speak for itself”

Value k selected by optimizing generalization error

Naive implementation slow for large datasets

− use K-D Trees (low d) or inverted indices (high d)
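To make the K-D tree option concrete, here is a minimal Python sketch of the build-and-lookup procedure on the ten training points from the K-D tree example. Two things are assumptions rather than the slides' method: the split dimension cycles x, y, ... instead of being picked at random (this happens to reproduce the splits x=6, y=4, y=8), and a region stops splitting once it holds at most `leaf_size` points. The lookup only searches the region containing the query and does not walk back up the tree, so it may miss neighbours.

```python
TRAIN = [(1, 9), (2, 3), (4, 1), (3, 7), (5, 4),
         (6, 8), (7, 2), (8, 8), (7, 9), (9, 6)]


def build_kdtree(points, depth=0, leaf_size=3):
    """Recursively split on the median of one dimension until regions are small."""
    if len(points) <= leaf_size:
        return {"leaf": points}
    dim = depth % len(points[0])  # assumption: cycle dimensions, not random
    median = sorted(p[dim] for p in points)[len(points) // 2]
    left = [p for p in points if p[dim] < median]
    right = [p for p in points if p[dim] >= median]
    if not left:  # every value ties with the median: stop splitting
        return {"leaf": points}
    return {"dim": dim, "split": median,
            "left": build_kdtree(left, depth + 1, leaf_size),
            "right": build_kdtree(right, depth + 1, leaf_size)}


def region_points(tree, query):
    """Descend to the leaf region containing the query and return its points."""
    while "leaf" not in tree:
        side = "left" if query[tree["dim"]] < tree["split"] else "right"
        tree = tree[side]
    return tree["leaf"]


def sq_dist(a, b):
    """Squared Euclidean distance (same ordering as the true distance)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def approx_nearest(tree, query, k=1):
    """Compare the query only to the points in its own region."""
    return sorted(region_points(tree, query), key=lambda p: sq_dist(p, query))[:k]


if __name__ == "__main__":
    tree = build_kdtree(TRAIN)
    print(tree["split"], tree["left"]["split"], tree["right"]["split"])  # 6 4 8
    print(region_points(tree, (7, 4)))   # [(7, 2), (9, 6)]
    print(approx_nearest(tree, (7, 4)))  # [(7, 2)]
```

The region for (7,4) contains only (7,2) and (9,6), so the equally close point (5,4) on the other side of the x=6 split is never examined. That is the "may miss neighbours" behaviour of a region-only search.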
