Computationally Efficient Algorithms for Parallelized Digital Filters Applying Sample-by-Sample Processing

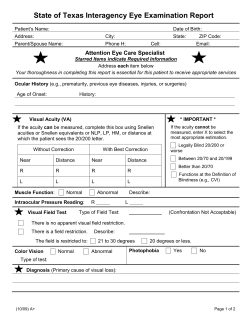

Computationally Efficient Algorithms for Parallelized Digital Filters Applying Sample-by-Sample Processing Alexandra Groth∗ Abstract — Recently, efficient structures for block digital filtering with low computational complexity have been proposed [1, 2, 3]. However, for critical applications the increase in system delay caused by block processing is unacceptable. Under these conditions parallelized filters being based on sampleby-sample processing (SBSP) have to be applied because they do not introduce extra delay [4, 5]. In this contribution a structure for SBSP filtering with reduced computational burden is proposed. Savings of up to 35% are obtained. 1 INTRODUCTION Heinz G. G¨ockler∗ trix implementable with low computational burden and a correction network. In section 2 this novel efficient SBSP filtering structure is deduced, before examples and their computational complexity are presented in section 3. Finally, in section 4 an alternative structure is outlined and its properties are discussed. 2 DERIVATION OF AN EFFICIENT SBSP FILTER STRUCTURE A parallelized filter applying SBSP is depicted in In order to realize digital systems to be operated at Fig. 1. The original transfer function H(zi ) can replaced with the transfer matrix clock speeds beyond technological feasibility, suit- equivalently be P ) = H(z ) = H(z s i ably parallelized filters have to be applied. These parallelized filters can be clocked either by sample- − P −1 −1 H0 zs P HP −1 · · · zs P H1 by-sample processing (SBSP) or by block process- −2 −1 ing (BP). In the latter case, various efficient block H0 ··· zs P H2 zs P H1 .. .. . processing approaches have recently been published . . . . . . . [1, 2, 3] all being based on [6]. P −3 P −1 − PP−2 − − HP −2 zs P HP −3 · · · zs P HP −1 According to [6, 7], significant savings in com- zs − P −2 − P −1 putational expenditure for linear or cyclic convoH0 zs P HP −1 zs P HP −2 · · · lutions can be obtained by an appropriate diago(1) nalization of the transfer matrix. As a result, three where Hp , p = 0, ..., P − 1, are the polyphase commatrices with a reduced overall number of multipli- ponents of type 1 of H(zi ). Note that in contrast cations are obtained. In case of BP this technique to BP the up- and downsamplers of the serial-tocan directly be applied [1, 2, 3] to the transfer ma- parallel and of the parallel-to-serial interfaces opertrix of the parallelized system, if the input data ate with time shifts pTi [4]. block is extended by the input samples of the previous block. All block processing approaches lead however to an increase in system delay by (P − 1)Ti , where Ti is the input sampling period and P the number of parallel branches. Since an increase in system delay is crucial in a multitude of applications, the focus of this contribution is on SBSP and the derivation of efficient SBSP structures. Note that in case of SBSP the methods of [6] cannot directly be adopted. Hence, the case of SBSP has to be put down to [6] by a transformation of the trans- Figure 1: Parallelized Filter with Sample-byfer matrix of the parallelized filter. To this end, the Sample Processing (SBSP) transfer matrix is split into a cyclic convolution maTo derive an efficient structure, (1) has to be ∗ Ruhr-Universit¨ at Bochum, Digital Signal Processing split into a cyclic convolution matrix Z and a corGroup, Universit¨ atsstr. 150, D-44780 Bochum, Germany. Email: [email protected], Tel: +49-234-3222869, rection network. As a result, Z requires only few Fax: +49-234-3214100. computation, whereas the latter system maintains the calculation of the correct output values. Note that Z can only be realized efficiently according to [6, 7] if it does not contain any shimming delay. Step 1: Blocking at a sampling instant lP Ti with l ∈ Z: By blocking the input samples the shimming delays are removed from the transfer matrix H. As a result, H can be substituted by a blocking matrix b, a new transfer matrix V H0 HP −1 · · · H1 H1 zs H0 ··· H2 . . .. .. .. .. V = (2) . . HP −2 zs HP −3 · · · HP −1 HP −1 zs HP −2 · · · zs H0 and a de-blocking matrix d, which reestablishes the correct sampling instants for the outputs of the matrix: 1 1 z − P1 − PP−1 s zs V diag . H = dV b = diag .. .. . . P −1 1 − − zs P zs P (3) Although any other sampling instant lP Ti + pTi with p ∈ [0, .., P − 1] were possible for blocking, p = 0 was chosen, for convenience, without any loss of generality. Step 2: Causal Representation of Matrix V : In order to obtain a causal and hence an implementable representation of (2), V ist split into its causal part W H0 HP −1 · · · H1 H1 0 ··· H2 . . .. .. .. .. W = (4) . . HP −2 0 · · · HP −1 HP −1 0 ··· 0 and its non causal terms. A multiplication of the non causal terms with the blocking and de-blocking matrices of (3) leads to the causal (P − 1) × (P − 1) correction matrix C: H0 0 ··· 0 −1 H0 ··· 0 zs P H1 C= . . .. .. . . . . . . P −2 P −3 − − zs P HP −2 zs P HP −3 · · · H0 (5) and the new representation of the original transfer matrix (3): · ¸ 0 0 H = dW b + . (6) 0 C In the following, however, a more convenient form of W is used. To this end, W is represented by an upper triangular matrix X= HP −1 0 .. . ··· ··· .. . H1 H2 .. . H0 H1 .. . 0 0 ··· ··· HP −1 0 HP −2 HP −1 (7) according to · W = X 0 I (P −1)×(P −1) 1 0 ¸ . (8) Step 3: Supplementing of the Matrix X: Since X is not yet a type of matrix, which is realizable with a reduced number of multiplications, the matrix X is supplemented in such a way that a P × P circulant matrix Z representing a cyclic convolution is obtained: HP −1 · · · H1 H0 H0 ··· H2 H1 .. .. .. .. Z= . (9) . . . . HP −3 · · · HP −1 HP −2 HP −2 · · · H0 HP −1 To this end we have added a lower triangular matrix to X which is subtracted again by the correction network to leave the overall result unchanged. Since the error caused by this supplemention is identical to zs−1 C, it can be combined with the correction network without any additional multiplication (Fig. 2): · H = dZ 0 I 1 0 ¸ · b+ 0 0 0 C(1 − zs−1 ) ¸ . (10) Note that a cyclic convolution marix instead of a linear convolution matrix is chosen leading to less computational complexity [6]. Step 4: Effizient Realisation of the Matrix Z: According to [6] a cyclic convolution matrix Z can be factorized into three matrices, with a reduced overall number of multiplications: Z = BGA, (11) where G is a diagonal matrix. In addition, different decompositions algorithms [7] allow a trade off between the number of multiplications and the number of additions. Figure 3: Filter with SBSP and P = 4 N P P −1 2 + P − 1 = 6N + 3 additions. As a result, the overall number of multiplications of the novel approach is given by 11N rather than 16N , whereas the overall number of additions is given by 11N +13 instead of 16N − 4. Hence, the computational expenditure caused by multiplications is reduced by 31%, whereas the reduction in additions depends on the length N of the polyphase components. For N = 7 e.g. the computational expenditure is reduced by 17%. A survey of expenditure for some other common values of P is given in Tab. 1. Note that a slightly different ratio between multiplications and additions can be obtained by using another factorizaFigure 2: Calculation of output values by a blocked tion algorithm of the cyclic convolution matrix Z cyclic convolution plus correction network (step 3) [7]. 3 EXAMPLE In order to illustrate the savings in computational complexity by the novel SBSP approach, we choose the number of parallel branches to be P = 4. An efficient realization [7] of the 4 × 4 cyclic convolution matrix Z = BGA requires 5N multiplications and 5N + 10 additions (Fig. 3), where N represents the length of the polyphase components Hp . Since the correction matrix C concorrection terms, the correction netsists of P P −1 2 = 6N multiplications and work requires N P P −1 2 4 ALTERNATIVE STRUCTURE In compliance with Tab. 1 it is obvious that the number of correction terms P P −1 2 increases rapidly with P . Since these terms cannot efficiently be calculated, the number of correction terms has to be reduced. To this end, blocking can be introduced at two different sampling instants lP Ti and lP Ti + P/2Ti . As a result, P/2 additional input samples are known at the time instant of the second blocking, such that the correct output values are obtained for those intermediate calculations. For twofold blocking the transfer matrix H has P 2 3 4 5 7 8 9 Cyclic M 2N 4N 5N 10N 16N 14N 19N New structure convolution Correction network A M A 2N + 2 N N +1 4N + 7 3N 3N + 2 5N + 10 6N 6N + 3 10N + 21 10N 10N + 4 16N + 54 21N 21N + 6 14N + 32 28N 28N + 7 19N + 55 36N 36N + 8 Original structure M 4N 9N 16N 25N 49N 64N 81N A 4N − 2 9N − 3 16N − 4 25N − 5 49N − 7 64N − 8 81N − 9 Savings M N 2N 5N 5N 12N 22N 26N A N −5 2N − 12 5N − 17 5N − 30 12N − 67 22N − 47 26N − 72 Example for N = 7 M A 25% 8% 22% 3% 31% 17% 20% 3% 24% 5% 34% 24% 32% 20% Table 1: Computational Complexity (M = multiplications, A = additions) to be partitioned according to · ¸ B1 H= B2 (12) with B 1 and B 2 being P/2 × P matrices. Finally each matrix is realized in terms of the novel algorithm of section 2. Since exclusively the first P/2 or the last P/2 output values have to be calculated, the number of correction terms is minimized. Hence, computational complexity caused by cyclic convolutions is nearly doubled, whereas the number of correction terms is reduced to 0.5P (0.5P − 1). As a consequence, the modified approach (M2 ) has only an advantage over the previous approach (M1 ) if the overall number M of multiplications is reduced, i.e. if: M 2 < M1 (13) 1 7 1 3 N P 2 + P N − 2tN < N P 2 + P N − tN 4 2 2 2 or 8P − 4t < P 2 (14) Here, the variable t ≥ 1 depends on the cyclic convolution algorithm chosen. Assuming the worst case t = 1 the modified approach becomes advantageous for P ≥ 8. Similarly the number of blocking instants can further be increased leading to a greater variety of structures. However, an increase in blocking instants comes along with an increase of the minimum P . As a result, three blocking instants make only sense for P ≥ 12, four blocking instants for P ≥ 16, respectively. 5 CONCLUSION A novel systematic and rigorous derivation of a parallelized filter structure being based on SBSP is presented applying a novel matrix decomposition approach. In contrast to a BP approach this technique does not introduce any extra delay. As a result, a reduction of computational burden up to 35% is achieved. Additionally, an alternative structure allows even greater savings for P ≥ 8. References [1] M. Vetterli, Running FIR and IIR Filtering Using Multirate Filter Banks, IEEE Trans. on Acoust., Speech, Signal Processing, vol. 36, no. 5, pp. 730-738, May 1988 [2] Z.-J. Mou and P. Duhamel, Short-Length FIR Filters and Their Use in Fast Nonrecursive Filtering, IEEE Trans. on Signal Processing, vol. 39, no. 6, pp. 1322-1332, June 1991 [3] I.-S. Lin and S. K. Mitra, Overlapped Block Digital Filtering, IEEE Trans. on Circuits and Systems, vol. 43, no. 8, pp. 586-596, August 1996 [4] A. Groth and H. G. G¨ockler, Signal-FlowGraph Identities for Structural Transformations in Multirate Systems, Proc. European Conf. on Circuit Theory and Design ECCTD’01, Espoo, Finnland, vol. II, pp. 305308, August 2001 [5] A. Groth, Effiziente Parallelisierung digitaler Systeme mittels ¨ aquivalenter Signalflussgraph-Transformationen, Ph.D. Thesis, Ruhr-Universit¨at Bochum, To be published in 2003. [6] A. Winograd, Arithmetic Complexity of Computations, CBMS-NSF Regional Conference Series, Society for Industrial and Applied Mathematics (SIAM), 1980 [7] R. E. Blahut, Fast Algorithms for Digital Signal Processing, Addison-Wesley, Reading, Massachusetts, April 1985

© Copyright 2026