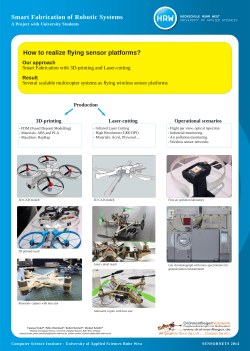

Applied Imagery Pattern Recognition Workshop