Volunteer Computing
David P. Anderson
Space Sciences Lab, U.C. Berkeley

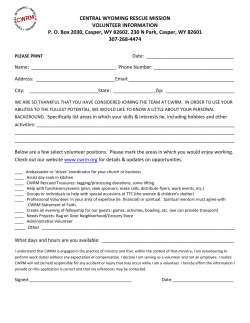

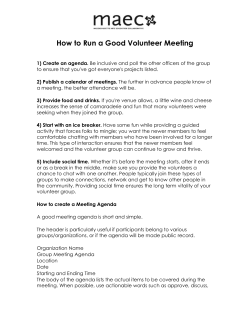

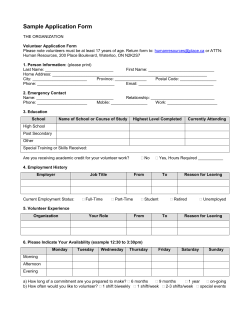

May 7, 2008

Where's the computing power?
- Individuals (~1 billion PCs): volunteer computing
- Companies (~100M PCs)
- Government (~50M PCs)

A brief history of volunteer computing (1995 to 2005)
- Projects: distributed.net, GIMPS; SETI@home, Folding@home; Climateprediction.net; IBM World Community Grid; Einstein@home, Rosetta@home
- Platforms: Popular Power, Entropia; United Devices, Parabon; BOINC

The BOINC project
- Based at UC Berkeley Space Sciences Lab
- Funded by NSF since 2002
- Personnel: director David Anderson; other employees: 1.5 programmers; lots of volunteers
- What we do: develop open-source software; enable online communities
- What we don't do: branding, hosting, authorizing, endorsing, controlling

The BOINC community
- Projects, volunteer programmers, alpha testers
- Online Skype-based help
- Translators (web, client)
- Documentation (Wiki)
- Teams

The BOINC model
Your PC attaches to BOINC-based projects:
- Climateprediction.net (Oxford; climate study)
- Rosetta@home (U. of Washington; biology)
- MalariaControl.net (STI; malaria epidemiology)
- World Community Grid (IBM; several applications)
- ...
Attachments are simple (but configurable), secure, and invisible.
Projects are independent: there is no central authority, and a project's unique ID is its URL.

The volunteer computing ecosystem
- Projects and the public
- Teach, motivate volunteers
- Do more science
- Involve the public in science

Participation and computing power
- BOINC: 330K active participants, 580K computers, ~40 projects; 1.2 PetaFLOPS average throughput (about 3X an IBM Blue Gene/L)
- Folding@home (non-BOINC): 200K active participants; 1.4 PetaFLOPS (mostly PS3)

Cost per TeraFLOPS-year
- Cluster: $124K
- Amazon EC2: $1.75M
- BOINC: $2K

The road to ExaFLOPS
- CPUs in PCs (desktop, laptop):
  1 ExaFLOPS = 50M PCs x 80 GFLOPS x 0.25 avail.
- GPUs:
  1 ExaFLOPS = 4M x 1 TFLOPS x 0.25 avail.
- Video-game consoles (PS3, Xbox):
  0.25 ExaFLOPS = 10M x 100 GFLOPS x 0.25 avail.
- Mobile devices (cell phone, PDA, iPod, Kindle):
  0.05 ExaFLOPS = 1B x 100 MFLOPS x 0.5 avail.
- Home media (cable box, Blu-ray player):
  0.1 ExaFLOPS = 100M x 1 GFLOPS x 1.0 avail.

But it's not about numbers
The real goals:
- enable new computational science
- change the way resources are allocated
- avoid return to the Dark Ages
And that means we must:
- make volunteer computing feasible for all scientists
- involve the entire public, not just the geeks
- solve the "project discovery" problem
Progress towards these goals: nonzero but small

BOINC server software
- Goals: high performance (10M jobs/day), scalability
- Clients send requests to the scheduler (CGI), which dispatches jobs from shared memory (~1K jobs)
- The feeder fills shared memory from the MySQL DB (~1M jobs)
- Various daemons also operate on the DB

Database tables
- Application
- Platform: Win32, Win64, Linux x86, Java, etc.
- App version
- Job: resource usage estimates, bounds; latency bound; input file descriptions
- Job instance: output file descriptions
- Account, team, etc.

Data model
Files:
- have both logical and physical names
- are immutable (per physical name)
- may originate on client or server
- may be "sticky"
- may be compressed in various ways
- are transferred via HTTP or BitTorrent
- app files must be signed
Upload/download directory hierarchies

Submitting jobs
- Create XML description: input, output files; resource usage estimates, bounds; latency bound
- Put input files into dir hierarchy
- Call create_work(): creates DB record
Mass production:
- bags of tasks
- flow-controlled stream of tasks
- self-propagating computations
- trickle messages

Server scheduling policy
Request message:
- platform(s)
- description of hardware: CPU, memory, disk, coprocessors
- description of availability
- current jobs queued and in progress
- work request (CPU seconds)
Send a set of jobs that:
- are feasible (will fit in memory/disk)
- will probably get done by deadline
- satisfy the work request

Multithread and coprocessor support
- An application has app versions for each platform
- The client tells the scheduler its list of platforms, its coprocessors, and its #CPUs
- For each app version, an app planning function decides avg/max #CPUs, coprocessor usage, and command line
- The scheduler sends jobs to the client with this information

Result validation
Problem: can't trust what volunteers report:
- computational result
- claimed credit
Approaches:
- Application-specific checking
- Job replication: do N copies, require that M of them agree
- Adaptive replication
- Spot-checking

How to compare results?
Problem: numerical discrepancies
- Stable problems: fuzzy comparison
- Unstable problems:
  - eliminate discrepancies (compiler/flags/libraries)
  - homogeneous replication: send instances only to numerically equivalent hosts (equivalence may depend on app)

Server scheduling policy revisited
Goals (possibly conflicting):
- Send retries to fast/reliable hosts
- Send long jobs to fast hosts
- Send demanding jobs (RAM, disk, etc.) to qualified hosts
- Send jobs already committed to a homogeneous redundancy class to hosts in that class
Project-defined "score" function: scan N jobs, send those with highest scores

Server daemons
- Per application: work generator, validator, assimilator
- Transitioner: manages replication, creates job instances, triggers other daemons
- File deleter
- DB purger

Ways to create a BOINC server
- Install BOINC on a Linux box: lots of software dependencies
- Run BOINC server VM (VMware): need to worry about hardware
- Run BOINC server VM on Amazon EC2

BOINC API
Typical application structure:

    boinc_init()
    loop
        ...
        boinc_fraction_done(x)
        if boinc_time_to_checkpoint()
            write checkpoint file
            boinc_checkpoint_completed()
    boinc_finish(0)

Also: graphics, multi-program apps, wrapper for legacy apps

Volunteer's view
- 1-click install
- All platforms
- Invisible, autonomic
- Highly configurable (optional)

BOINC client structure
- Core client: communicates with project schedulers and data servers
- Applications: linked with the BOINC library (runtime system)
- GUI and screensaver: connect to the core client via local TCP (user preferences, control)

Some BOINC projects
- Climateprediction.net: Oxford University; global climate modeling
- Einstein@home: LIGO Scientific Collaboration; gravitational wave detection
- SETI@home: U.C. Berkeley; radio search for E.T.I. and black hole evaporation
- Leiden Classical: Leiden University; surface chemistry using classical dynamics

More projects
- LHC@home: CERN; simulator of LHC collisions
- QMC@home: Univ. of Muenster; quantum chemistry
- Spinhenge@home: Bielefeld Univ.; study of nanoscale magnetism
- ABC@home: Leiden Univ.; number theory

Biomed-related BOINC projects
- Rosetta@home: University of Washington; Rosetta: protein folding, docking, and design
- Tanpaku: Tokyo Univ. of Science; protein structure prediction using Brownian dynamics
- MalariaControl: The Swiss Tropical Institute; epidemiological simulation

More projects
- Predictor@home: Univ. of Michigan; CHARMM, protein structure prediction
- SIMAP: Tech. Univ. of Munich; protein similarity matrix
- Superlink@Technion: Technion; genetic linkage analysis using Bayesian networks
- Quake Catcher Network: Stanford; distributed seismograph

More projects (IBM WCG)
- Dengue fever drug discovery: U. of Texas, U. of Chicago; Autodock
- Human Proteome Folding: New York University; Rosetta
- FightAIDS@home: Scripps Institute; Autodock

Organizational models
- Single-scientist projects: a dead-end?
- Campus-level meta-project: UC Berkeley (1,000 instructional PCs; 5,000 faculty/staff; 30,000 students; 400,000 alumni)
- Lattice: U. Maryland Center for Bioinformatics
- MindModeling.org: ACT-R community (~20 universities)
- IBM World Community Grid: ~8 applications from various institutions
- Consortium of 5-10 universities
- Extremadura (Spain)
- SZTAKI (Hungary)

Conclusion
- Individuals (~1 billion PCs): volunteer computing
- Companies (~100M PCs)
- Government (~50M PCs)
Contact me about: using BOINC; research based on BOINC
[email protected]
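Each estimate on the "road to ExaFLOPS" slide is the product of three factors: device count, per-device speed, and the fraction of time the device is available. A minimal Python sketch below recomputes them from the slide's figures. The names `ESTIMATES` and `sustained_exaflops` are illustrative only.

```python
# Figures from "The road to ExaFLOPS":
# (device class, number of devices, FLOPS per device, availability fraction)
ESTIMATES = [
    ("CPUs in PCs", 50e6, 80e9, 0.25),
    ("GPUs", 4e6, 1e12, 0.25),
    ("Video-game consoles", 10e6, 100e9, 0.25),
    ("Mobile devices", 1e9, 100e6, 0.5),
    ("Home media", 100e6, 1e9, 1.0),
]

EXAFLOPS = 1e18


def sustained_exaflops(count: float, flops_per_device: float, availability: float) -> float:
    """Average throughput in ExaFLOPS: devices x speed x availability / 1e18."""
    return count * flops_per_device * availability / EXAFLOPS


if __name__ == "__main__":
    total = 0.0
    for name, count, flops, avail in ESTIMATES:
        ef = sustained_exaflops(count, flops, avail)
        total += ef
        print(f"{name:20s} {ef:5.2f} ExaFLOPS")
    print(f"{'Total':20s} {total:5.2f} ExaFLOPS")
```

Summing the five rows gives about 2.4 ExaFLOPS. CPUs and GPUs contribute one ExaFLOPS each.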
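The server-software slide places a small shared-memory job cache (~1K jobs) between the scheduler and the MySQL database (~1M jobs). The feeder keeps that cache full, so a scheduler request can pick from a small in-memory set rather than searching the large job table.

The following is a toy model of that split, not BOINC's feeder code. `JobCache`, `feed` and the deque standing in for the database are hypothetical.

```python
from collections import deque
from typing import Deque, List, Optional


class JobCache:
    """Toy stand-in for the shared-memory segment: a fixed array of job slots."""

    def __init__(self, n_slots: int) -> None:
        self.slots: List[Optional[str]] = [None] * n_slots

    def take(self) -> Optional[str]:
        """Scheduler side: claim the first filled slot, or None if all are empty."""
        for i, job in enumerate(self.slots):
            if job is not None:
                self.slots[i] = None
                return job
        return None


def feed(cache: JobCache, db_queue: Deque[str]) -> int:
    """Feeder side: refill empty slots from the much larger database queue.

    Returns the number of slots filled on this pass."""
    filled = 0
    for i, job in enumerate(cache.slots):
        if job is None and db_queue:
            cache.slots[i] = db_queue.popleft()
            filled += 1
    return filled


if __name__ == "__main__":
    db = deque(f"job{i}" for i in range(10))  # stands in for ~1M rows in MySQL
    cache = JobCache(3)                       # stands in for ~1K shared-memory slots
    feed(cache, db)
    print(cache.take(), cache.slots)
```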
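The "Server scheduling policy" slide lists three conditions for the jobs sent in reply to a request. They must fit in memory and disk, they must probably finish by their deadline, and together they must satisfy the work request in CPU seconds.

A minimal greedy sketch of those conditions follows. It assumes the host runs queued jobs one at a time and that availability simply stretches wall-clock time. `Host`, `Job` and `pick_jobs` are hypothetical, and BOINC's real scheduler is considerably more elaborate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Host:
    free_memory_mb: float
    free_disk_mb: float
    flops: float            # measured speed
    availability: float     # fraction of wall-clock time the host computes, in (0, 1]
    queued_seconds: float   # estimated runtime of jobs already queued or in progress


@dataclass
class Job:
    name: str
    est_flop_count: float   # resource usage estimate
    memory_bound_mb: float
    disk_bound_mb: float
    latency_bound_s: float  # deadline, measured from dispatch


def pick_jobs(host: Host, candidates: List[Job], work_request_s: float) -> List[Job]:
    """Greedily choose jobs that fit and will probably meet their deadlines,
    until the requested CPU seconds are covered."""
    chosen: List[Job] = []
    supplied = 0.0
    backlog = host.queued_seconds
    disk_left = host.free_disk_mb
    for job in candidates:
        if supplied >= work_request_s:
            break                                    # work request satisfied
        if job.memory_bound_mb > host.free_memory_mb or job.disk_bound_mb > disk_left:
            continue                                 # infeasible on this host
        runtime = job.est_flop_count / host.flops
        # Assumed model: jobs run in sequence, slowed down by partial availability.
        finish = (backlog + runtime) / host.availability
        if finish > job.latency_bound_s:
            continue                                 # would probably miss its deadline
        chosen.append(job)
        supplied += runtime
        backlog += runtime
        disk_left -= job.disk_bound_mb
    return chosen
```

In this model, disk is treated as consumed by each job sent, whereas memory is checked per job because jobs run one at a time.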
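The result-validation slides describe job replication: do N copies and require that M of them agree. For numerically stable problems, "agree" means a fuzzy comparison.

Below is a minimal sketch of a quorum check over results represented as lists of floats. The function names and the relative tolerance are assumptions, since in BOINC the comparison logic is supplied per application.

```python
import math
from typing import List, Optional, Sequence


def fuzzy_equal(a: Sequence[float], b: Sequence[float], rel_tol: float = 1e-6) -> bool:
    """Stable problems: two results match if every value agrees within rel_tol."""
    return len(a) == len(b) and all(math.isclose(x, y, rel_tol=rel_tol) for x, y in zip(a, b))


def find_canonical(results: List[Sequence[float]], quorum: int,
                   rel_tol: float = 1e-6) -> Optional[int]:
    """Return the index of a result that at least `quorum` results (itself included)
    agree with, or None if the quorum M has not been reached yet."""
    for i, candidate in enumerate(results):
        agreeing = sum(1 for other in results if fuzzy_equal(candidate, other, rel_tol))
        if agreeing >= quorum:
            return i
    return None


if __name__ == "__main__":
    returned = [[1.0, 2.0], [1.0000000001, 2.0], [5.0, 2.0]]  # N = 3 instances
    print(find_canonical(returned, quorum=2))                 # M = 2
```

Fuzzy equality is not transitive, which is one reason the slides suggest eliminating discrepancies or using homogeneous replication for unstable problems.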
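Homogeneous replication sends all instances of a job only to numerically equivalent hosts. The first dispatch commits the job to an equivalence class, which the later scheduling slide also mentions.

In the sketch below, the rule "same OS and CPU vendor" is a hypothetical example of an equivalence function, since the slides note that equivalence may depend on the application. `HostInfo` and `HomogeneousDispatcher` are likewise illustrative names.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class HostInfo:
    os_name: str
    cpu_vendor: str


def os_and_vendor_class(host: HostInfo) -> str:
    """Hypothetical equivalence rule: same OS and CPU vendor => same numerical results."""
    return f"{host.os_name}/{host.cpu_vendor}"


class HomogeneousDispatcher:
    """Commits a job to the class of the first host that receives one of its instances."""

    def __init__(self, classify: Callable[[HostInfo], str]) -> None:
        self.classify = classify              # may differ per application
        self.committed: Dict[str, str] = {}   # job name -> equivalence class

    def can_send(self, job: str, host: HostInfo) -> bool:
        cls = self.committed.get(job)
        return cls is None or cls == self.classify(host)

    def record_send(self, job: str, host: HostInfo) -> None:
        self.committed.setdefault(job, self.classify(host))
```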
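The revisited scheduling policy resolves its possibly conflicting goals with a project-defined "score" function: scan N jobs and send those with the highest scores.

The weights below are a hypothetical example of such a function. They rank retries on fast/reliable hosts first, favor long jobs on fast hosts, and exclude demanding jobs on unqualified hosts. All class and function names are illustrative.

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    is_retry: bool
    est_runtime_s: float
    memory_mb: float


@dataclass
class HostProfile:
    fast_and_reliable: bool
    speed_rank: float   # hypothetical: 0.0 (slowest) to 1.0 (fastest)
    memory_mb: float


def score(job: Candidate, host: HostProfile) -> float:
    """Hypothetical project-defined score; higher means 'send this job first'."""
    if job.memory_mb > host.memory_mb:
        return float("-inf")                            # demanding job, unqualified host
    s = 0.0
    if job.is_retry and host.fast_and_reliable:
        s += 10.0                                       # retries to fast/reliable hosts
    s += host.speed_rank * job.est_runtime_s / 3600.0   # long jobs to fast hosts
    return s


def choose(jobs: List[Candidate], host: HostProfile, n_scan: int, n_send: int) -> List[Candidate]:
    """Scan the first n_scan jobs and return up to n_send with the highest scores."""
    best = heapq.nlargest(n_send, jobs[:n_scan], key=lambda j: score(j, host))
    return [j for j in best if score(j, host) != float("-inf")]
```

Limiting the scan to N jobs keeps the per-request cost bounded even when the shared-memory cache holds many candidates.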
