Answering Questions in Surveys (Winter 2014)

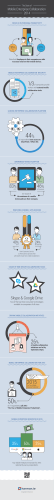

Winter 2014 ● Issue 17(4)

IN THIS ISSUE
Technical Report: Answering Questions in Surveys. New directions in survey research: how to discourage satisficing in favor of optimizing to reduce primacy effects in surveys.
President’s Message
WEBINAR: Mapping Techniques
52 Reports in Book’s 4th Edition
Now Accepting Student Award Entries
Courses in April 2015
Meet the Instructors and Invited Speakers for the April Courses

NEWS & EVENTS

PRESIDENT’S MESSAGE
We will be presenting a new course on Survey Design in April, immediately following our Advertising Claims Substantiation course (April 13-15). We hope that you will consider these training opportunities as you make plans for 2015. We felt there was a need to review and communicate some important developments in survey design and analysis from the last few years. The concepts in this course have numerous applications in marketing, product development, ad claims, and regulatory affairs.

Our technical report in this issue concerns how people answer questions in surveys and, in particular, how to account for and overcome primacy effects, which are often seen in check-all-that-apply (CATA) surveys.

Best regards,
Daniel M. Ennis
President, The Institute for Perception

TECHNICAL REPORTS:
2014
17(4) Answering Questions in Surveys
17(3) Unfolding
17(2) Confidence Intervals and Consumer Relevance
17(1) Rotations in Product Tests and Surveys
2013
16(4) How to Find Optimal Combinations of Brand Components
16(3) How to Diagnose the Need for 3D Unfolding
16(2) Transitioning from Proportion of Discriminators to Thurstonian
16(1) When Are Two Products Close Enough to be Equivalent?
2012
15(4) Proper Task Instructions and the Two-out-of-five Test
15(3) Efficient Representation of Pairwise Sensory Information
To download previously published technical reports and papers from our website, become a colleague at www.ifpress.com

WHAT WE DO:
Client Services: Provide full-service product and concept testing for product development, market research and legal objectives
Education: Conduct internal training, external courses, and online webinars on product testing, sensory science, and advertising claims support
IFPrograms™: License proprietary software to provide access to new modeling tools
Research: Conduct and publish basic research on human perception in the areas of methodology, measurement and modeling

COURSE CALENDAR:
APRIL 13-15, 2015, The Greenbrier, White Sulphur Springs, WV
Advertising Claims Support: Case Histories and Principles
APRIL 16-17, 2015, The Greenbrier, White Sulphur Springs, WV
Designing Effective Surveys: The Science of Answering Questions
SAVE THE DATE for the following courses held at The Greenbrier:
NOVEMBER 2-6, 2015: Sensory & Consumer Science Courses

WEBINAR CALENDAR:
December 11, 2014: Mapping Techniques to Link Consumer and Expert Data
March 19, 2015: Hiding in Plain Sight: Finding New Opportunities Using Graph Theory
Recordings of all previously presented webinars can be ordered at www.ifpress.com

Mission Statement: To develop, apply, and communicate advanced research tools for human perceptual measurement.
Detailed information and registration for all courses and webinars is available at www.ifpress.com

RECENTLY PUBLISHED PAPERS:
Ennis, J. M. and Christensen, R. (2014). A Thurstonian comparison of the tetrad and degree of difference tests. Food Quality and Preference (in press).
Ennis, J. M., Rousseau, B., and Ennis, D. M. (2014). Sensory difference tests as measurement instruments: A review of recent advances. Journal of Sensory Studies, 29, 89-102.
Ishii, R., O’Mahony, M., and Rousseau, B. (2014). Triangle and tetrad protocols: Small sensory differences, resampling and consumer relevance. Food Quality and Preference, 31, 49-55.

To Contact Us...
www.ifpress.com
804-675-2980
804-675-2983
7629 Hull Street Road, Richmond, VA 23235

2.5 DAY COURSE: APRIL 13-15, 2015
1.5 DAY COURSE: APRIL 16-17, 2015
See full course descriptions later in this newsletter.

WHO SHOULD ATTEND
These professional courses are recommended for attorneys specializing in advertising law, market research managers, product developers, in-house counsel, sensory and consumer scientists, and packaging/product testing specialists.

“In this age of science we must build legal foundations that are sound in science as well as in law.” – Stephen G. Breyer, Associate Justice of the U.S. Supreme Court

NEW EDITION AVAILABLE NOW!
Tools and Applications of Sensory and Consumer Science: 52 Technical Report Scenarios Based on Real-life Problems
RECOMMENDED by Science Books and Films, American Association for the Advancement of Science
Now in its fourth printing, this book is a must-have tool for professionals in product testing, consumer research, and advertising claims support. It contains our most significant and useful technical reports from the last 16 years. Readers will easily relate to the problems and solutions in each two-page scenario, and for deeper study, the reader will find a list of published papers on a variety of related subjects. Drs. Daniel Ennis, Benoît Rousseau, and John Ennis use their combined expertise to guide readers through problems in areas such as:
♦ Drivers of Liking®
♦ Landscape Segmentation Analysis®
♦ Ratings & Rankings
♦ Claims Support
♦ Optimizing Product Portfolios
♦ Combinatorial Tools
♦ Difference Tests
♦ Designing Tests & Surveys
♦ Probabilistic Multidimensional Scaling
Also included are 27 tables for product testing methods so the reader can interpret results from discrimination methodologies such as the tetrad test, triangle test, same-different method, duo-trio test, replicated testing, and others.
176 pgs., $95 (plus shipping and VA sales tax, where applicable)
► ORDER ONLINE AT www.ifpress.com/books

WEBINAR SERIES
Mapping Techniques to Link Consumer and Expert Data
TIME & DATE: THURSDAY, December 11 at 2:00 PM EST
Taught by: Dr. Benoît Rousseau
Multivariate analyses are commonly used to study differences among products in a multidimensional sensory space and to relate them to consumers’ hedonic assessments. Such analyses help uncover drivers of acceptability, study consumer population segmentation, and generate the sensory profiles of target products. In this webinar, we will review commonly used methods such as factor analysis, preference mapping (internal and external), and unfolding techniques based on individual consumer ideal points. This latter approach provides very valuable insights into consumer sensory segmentation. The webinar is approximately 75 minutes long, including a 60-minute talk and a 15-minute Q&A session.
► REGISTER ONLINE AT www.ifpress.com/webinars

Now Accepting Applications for the 2014 Institute for Perception Student Award
January 17, 2015

TECHNICAL REPORT, Issue 17(4), 2014
Answering Questions in Surveys
Catherine Sears and Daniel M. Ennis

Background: Surveys are conducted to study three main categories: attitudes and beliefs, events and behaviors, and subjective experience.
Product, concept, and brand testing can be viewed as types of surveys falling into the third category, subjective experience. This view encourages cross-disciplinary awareness of the survey and polling literature, as scientists in these fields grapple with many of the same problems that occur in sensory and consumer science. One idea that has developed in survey research, highlighted in a review of the field’s main accomplishments over the 20th century, is the satisficing-optimizing continuum, which arises from a process model of the way that people answer questions1. In this technical report, we consider the implications of these ideas in a scenario that compares answers to questions in a check-all-that-apply (CATA) format with answers in an applicability scoring format2.

Scenario: You are a consumer insights manager in a coffee company and are exploring consumers’ emotional responses to coffee drinking in order to develop an emotion lexicon. With a large list of emotion terms, your typical approach would be to use a check-all-that-apply (CATA) list as shown in Figure 1. CATA lists are commonly used in survey research and have been increasing in popularity within sensory science in recent years2. Yet as you review the results, you notice that respondents tend to check mainly the items presented early and to ignore items toward the end of the survey. You also wonder what it means when a box is unchecked. Does the respondent mean to indicate that the item truly does not apply? Or was the item skipped unintentionally?

Read the phrases and, for each phrase, mark the box if the phrase describes how drinking coffee makes you feel.
□ I feel energized
□ I feel guilty
□ I feel elated
Figure 1. Three items from a set of one hundred in a CATA survey.

Answering Questions: When a respondent answers a question in a consumer survey, we would like to believe that all of the steps in Figure 2 are faithfully executed.
Ideally, a respondent would first engage in comprehension, interpreting the question and deducing its intent by settling on the meaning of each word and establishing relations among the concepts the words evoke. Next, we assume that the respondent engages in retrieval, searching memory for relevant information. The respondent then integrates the retrieved information into a judgment and, finally, makes a carefully considered response selection, mapping that judgment onto the response choices available and actively looking for the best fit3,4.

Figure 2. A process model for answering a survey question.

Warwick and Lininger5 and Krosnick1 have theorized that this mode of survey response, optimizing, is what happens when respondents are internally satisfied by successful performance, believe survey results will help employers improve working conditions, want an intellectual challenge, feel altruistic, or are looking for emotional catharsis. Yet most people are unlikely to faithfully execute each of these four steps for each and every question, and may instead expend less energy by satisficing. In fact, energy minimization may be a motivating factor in how people elect to answer questions. Across different respondents, and even within the same respondent in a given test, we may observe a continuum from strong satisficing to optimizing, as shown in Figure 3, corresponding to the degree to which the steps in Figure 2 are executed properly1.

Figure 3. The satisficing-optimizing continuum.

A respondent engaged in weak satisficing executes all four steps in Figure 2 (comprehension, retrieval, judgment, and response selection) but does so less thoroughly than a respondent who is optimizing. Instead of generating the most accurate answers, such respondents settle for merely satisfactory ones. Respondents who are engaged in strong satisficing skip the retrieval and judgment steps altogether.
They interpret each question superficially and select what seems to be a reasonable answer without referring to any internal psychological cues relevant to the question1. Teachers of unmotivated students know this behavior quite well.

CATA and Satisficing: Smyth et al.6 found that CATA lists in written format tend to induce satisficing, in which respondents limit their processing effort by quickly selecting the first reasonable responses in the list. This weak satisficing causes primacy effects: a disproportionate selection of items that appear early in the list of options. In Smyth et al.’s research, among CATA respondents who spent the mean response time or less, eight of ten questions were significantly more likely to be endorsed when they appeared in the first three positions in the list than when they appeared in the last three positions. These primacy patterns appeared only among respondents who spent less time, suggesting that they resulted from a lack of cognitive processing6. Interestingly, the satisficing-optimizing model predicts recency effects in oral interviews, where the options heard last are the freshest in memory, and these effects have been observed in practice.

Ares et al.7 applied Smyth et al.’s work to sensory categories and showed that when consumers complete CATA questions for sensory characterization repeatedly, their visual processing of the information decreases, so they may leave items blank even when those items are relevant. A blank option in CATA does not necessarily mean “does not apply.” Respondents may leave an option blank for a number of reasons, including that the option doesn’t apply, that they are neutral or undecided, or that they overlooked it.
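To make the Smyth et al. comparison concrete, here is a minimal Python sketch of how a primacy check might be run on CATA data. It assumes a hypothetical record format (one dict per respondent holding the order in which items were shown, the set of checked items, and the completion time in seconds), keeps only respondents at or below the mean completion time, and compares an item’s endorsement rate in the first three positions with its rate in the last three. The pooled two-proportion z-test is our choice for illustration, not necessarily the test Smyth et al. used. The simulated panel comes from a toy model we invented for this sketch, in which a satisficer’s attention decays geometrically with list position; the decay factor, response times, share of satisficers, and applicability rates are all assumptions, not data from the report.

```python
import math
import random
from statistics import mean


def simulate_respondent(items, rates, satisficer, rng):
    """Toy model (an assumption, not from the report): items are shown in a
    random order; a satisficer's chance of checking an item shrinks by a
    factor of 0.85 per list position, while an optimizer's does not."""
    order = list(items)
    rng.shuffle(order)
    checked = set()
    for pos, item in enumerate(order):
        attention = 0.85 ** pos if satisficer else 1.0
        if rng.random() < rates[item] * attention:
            checked.add(item)
    # Assumed completion times: satisficers answer faster than optimizers.
    seconds = rng.gauss(60, 10) if satisficer else rng.gauss(150, 25)
    return {"order": order, "checked": checked, "seconds": seconds}


def position_counts(respondents, item, k=3):
    """(checked, shown) counts for `item` in the first k and the last k positions."""
    first, last = [0, 0], [0, 0]
    for r in respondents:
        pos, n = r["order"].index(item), len(r["order"])
        if pos < k:
            bucket = first
        elif pos >= n - k:
            bucket = last
        else:
            continue  # middle positions are not part of this comparison
        bucket[0] += item in r["checked"]
        bucket[1] += 1
    return tuple(first), tuple(last)


def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z-test; one-sided p-value for H1: p1 > p2."""
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("all answers identical; z is undefined")
    z = (x1 / n1 - x2 / n2) / se
    return z, 0.5 * math.erfc(z / math.sqrt(2))  # upper-tail normal probability


if __name__ == "__main__":
    rng = random.Random(2014)
    items = [f"item{i:02d}" for i in range(10)]
    rates = {item: 0.4 for item in items}  # hypothetical true applicability
    panel = [simulate_respondent(items, rates, rng.random() < 0.5, rng)
             for _ in range(600)]
    # As in Smyth et al., keep only respondents at or below the mean time.
    cutoff = mean(r["seconds"] for r in panel)
    fast = [r for r in panel if r["seconds"] <= cutoff]
    for item in items:
        (x1, n1), (x2, n2) = position_counts(fast, item)
        z, p = two_proportion_z(x1, n1, x2, n2)
        print(f"{item}: first 3 = {x1}/{n1}, last 3 = {x2}/{n2}, z = {z:.2f}, p = {p:.4f}")
```

Randomizing the order for every respondent is what makes this comparison possible: each item appears early for some respondents and late for others, so a position effect can be separated from the item’s own appeal. With real data, the simulated panel would be replaced by your own response records.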
Applicability Scoring: A related technique to CATA is applicability scoring, which requires respondents to mark both what is applicable and what is not applicable2. (This is sometimes called “forced-choice CATA,” a phrase avoided here to prevent confusion with forced-choice sensory testing methods such as m-AFC.) Both kinds of response are needed to compare products or concepts statistically using McNemar’s test. CATA, on the other hand, only requires a check when the item applies to the object being scored and is therefore not amenable to this type of analysis. Applicability scoring2 may also lead respondents to process items more deeply and to select more options7. Respondents take longer to answer in the applicability scoring format than in the CATA format, perhaps because they need to commit to an answer for every item and are therefore more likely to think of reasons why the options do or do not apply6.

Applicability scoring carries with it a risk of acquiescence bias, in which respondents who are truly neutral check the “does apply” option. Respondents may acquiesce for a number of reasons, including social desirability or because they are following the rules of ordinary conversation, in which they feel they must contribute something in response to a question8. However, when Smyth et al.6 tested the inclusion of a neutral category, they did not find strong evidence for acquiescence bias in applicability scoring.

Read the phrases and, for each phrase, mark the box on the left if the phrase describes how drinking coffee makes you feel. Mark the box in the middle if the phrase does not describe how drinking coffee makes you feel. Mark the box on the right if you do not have an opinion.

| | Does Apply | Does Not Apply | Not Sure/Cannot Decide |
|---|---|---|---|
| I feel energized | □ | □ | □ |
| I feel guilty | □ | □ | □ |
| I feel elated | □ | □ | □ |

Figure 4. Three items from a set of one hundred in an applicability survey.
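McNemar’s test compares two products (or concepts) scored by the same respondents, using only the pairs in which the two answers differ. The Python sketch below illustrates why applicability scoring supports it: each respondent gives an explicit “does apply” or “does not apply” answer for both products, so the discordant cells can be counted, whereas an unchecked CATA box could mean “does not apply,” “undecided,” or “overlooked.” The response labels and example answers are hypothetical, and dropping respondents who chose “Not Sure/Cannot Decide” or skipped either product is an assumption of this sketch rather than a rule from the report. The p-value uses the exact (binomial) form of McNemar’s test.

```python
from math import comb

# Hypothetical labels for the three response boxes in Figure 4.
APPLY, NOT_APPLY, UNSURE = "does apply", "does not apply", "not sure"


def paired_counts(product_a, product_b):
    """Cross-tabulate paired applicability answers for one item.

    Returns (both_apply, a_only, b_only, neither). Pairs where either answer
    is UNSURE or missing (None) are skipped, an assumption of this sketch.
    """
    both = a_only = b_only = neither = 0
    for ans_a, ans_b in zip(product_a, product_b):
        if ans_a not in (APPLY, NOT_APPLY) or ans_b not in (APPLY, NOT_APPLY):
            continue
        if ans_a == APPLY and ans_b == APPLY:
            both += 1
        elif ans_a == APPLY:
            a_only += 1
        elif ans_b == APPLY:
            b_only += 1
        else:
            neither += 1
    return both, a_only, b_only, neither


def mcnemar_exact(a_only, b_only):
    """Exact two-sided McNemar p-value from the two discordant counts.

    Under the null hypothesis, each discordant pair is equally likely to
    favor either product, so min(a_only, b_only) ~ Binomial(n, 0.5).
    """
    n = a_only + b_only
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of a difference
    k = min(a_only, b_only)
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * lower_tail)


if __name__ == "__main__":
    # Eight hypothetical respondents rating "I feel energized" for two coffees.
    coffee_a = [APPLY, APPLY, NOT_APPLY, APPLY, UNSURE, APPLY, NOT_APPLY, APPLY]
    coffee_b = [NOT_APPLY, APPLY, NOT_APPLY, NOT_APPLY, APPLY, NOT_APPLY, APPLY, NOT_APPLY]
    both, a_only, b_only, neither = paired_counts(coffee_a, coffee_b)
    print(both, a_only, b_only, neither, mcnemar_exact(a_only, b_only))  # prints 1 4 1 1 0.375
```

Only the discordant counts enter the test; with just five discordant pairs, even a 4-to-1 split gives p = 0.375, so real comparisons need considerably larger samples.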
Applicability Scoring and the Emotion Survey: You conduct an applicability scoring experiment with your respondents using the same emotion terms used in the CATA experiment. Although Smyth et al. found little evidence that neutral respondents are pushed into “yes” answers in applicability scoring, you include a “Not Sure/Cannot Decide” option, as shown in Figure 4, to ensure that respondents are not selecting “yes” because they are undecided9. The applicability scoring survey produces different results from the CATA survey. Respondents take significantly longer to answer and, as shown in Figure 5 for this scenario, the primacy effect has decreased, which the model attributes to reduced satisficing. Figure 5 illustrates the proportion of checked boxes in CATA, or of “yes” responses in applicability scoring, that one would expect to see; all questions have been randomly rotated. Because every item now requires an explicit answer, you also have greater justification to conclude that an unanswered item means the respondent ignored the question.

Figure 5. Primacy effects: Applicability vs. CATA scoring.

Conclusion: When the results of a poll or survey are reported, the conditions needed for respondents to be well motivated are often not considered, or are simply assumed to hold. Yet respondents sometimes operate less than ideally and may be satisficing. While surveys, including product and concept tests, usually guard against bias in the survey design, we must also consider respondent behavior. If respondents are “quick-clicking” without thoroughly executing the steps in Figure 2, the data are questionable at best, regardless of how representative those respondents are of the relevant population or how carefully the questions are rotated. Avoiding CATA for extensive surveys and considering applicability scoring instead is one potential way to discourage satisficing in favor of optimizing, and thus to reduce primacy effects.

References
1. Krosnick, J. A. (1999). Survey research. Annual Review of Psychology, 50(1), 537-567.
2. Ennis, D. M. and Ennis, J. M. (2013). Analysis and Thurstonian scaling of applicability scores. Journal of Sensory Studies, 28(3), 188-193.
3. Cannell, C., Miller, P., and Oksenberg, L. (1981). Research on interviewing techniques. Sociological Methodology, 12, 389-437.
4. Tourangeau, R. and Rasinski, K. A. (1988). Cognitive processes underlying context effects in attitude measurement. Psychological Bulletin, 103, 299-314.
5. Warwick, D. and Lininger, C. (1975). The sample survey: Theory and practice. New York: McGraw-Hill.
6. Smyth, J., Dillman, D., Christian, L., and Stern, M. (2006). Comparing check-all and forced-choice question formats in web surveys. Public Opinion Quarterly, 70(1), 66-77.
7. Ares, G., Etchemendy, E., Antúnez, L., Vidal, L., Giménez, A., and Jaeger, S. (2014). Visual attention by consumers to check-all-that-apply questions: Insights to support methodological development. Food Quality and Preference, 32, 210-220.
8. Grice, P. (1975). Logic and conversation. In P. Cole and J. Morgan (Eds.), Syntax and semantics 3: Speech acts (pp. 41-58). New York: Academic Press.
9. Jaeger, S., Cadena, R., Torres-Moreno, M., Antúnez, L., Vidal, L., Giménez, A., Hunter, D., Beresford, M., Kam, K., Yin, D., Paisley, A., Chheang, S., and Ares, G. (2014). Comparison of check-all-that-apply and forced-choice Yes/No question formats for sensory characterisation. Food Quality and Preference, 35, 32-40.

ADVERTISING CLAIMS SUPPORT: CASE HISTORIES AND PRINCIPLES
APRIL 13-15, 2015 (2.5 days)

The purpose of this course is to raise awareness of the issues involved in designing surveys and product tests that provide the type of evidentiary support needed in the event of a claims dispute. The course speakers have decades of experience as instructors, scientific experts, jurors, and litigators in addressing claims with significant survey and product testing components. National Advertising Division® (NAD®) and litigated cases will be used to examine and reinforce the information discussed.

Instructors: Dr. Daniel M. Ennis, Dr. Benoît Rousseau, Dr. John M. Ennis, Anita Banicevic, Christopher A. Cole, Hal Hodes, Don Lofty, David G. Mallen, Michael Schaper, Annie M. Ugurlayan, and Lawrence I. Weinstein

* Approximately 12 credits for CLE: Accreditation will be sought for registrants in jurisdictions with CLE requirements.

MONDAY (APRIL 13, 8am - 4pm)
8:00 – 9:00 | Introduction
♦ Introduction and scope
♦ Survey research and history of surveys in litigation
♦ Admissibility of expert testimony
9:10 – 10:00 | Claims
♦ A typical false advertising lawsuit
♦ Puffery, falsity, and injury with examples: P&G vs. Kimberly-Clark (2008), Schick vs. Gillette (2005), P&G vs. Ultreo, SDNY (2008)
♦ “To sue or not to sue”
► Litigated Case: SC Johnson vs. Clorox (Goldfish in Bags), 241 F.3d 232 (2nd Cir. 2001)
10:10 – 11:00 | A Motivating Case; NAD: Inside and Out
1) NAD Case #5416 (2012) LG Electronics USA, Inc. (Cinema 3D TV & 3D Glasses)
♦ Advertising self-regulation and the NAD process
♦ The NAD: View from the outside
11:10 – Noon | ASTM Claims Guide; Methods and Data
♦ Review of the ASTM Claims Guide: Choosing a target population, product selection, sampling and handling, selection of markets
♦ Claims: Superiority, unsurpassed, equivalence, non-comparative
♦ Methods: Threshold, discrimination, descriptive, hedonic
♦ Data: Counts, ranking, rating scales
Noon – 1:00 | LUNCH
1:00 – 2:00 | Sensory Intensity and Preference; Attribute Interdependence
♦ Sensory intensity and how it arises
♦ Liking and preference and how they differ from intensity
♦ Attribute interdependencies
2) NAD Case #4306 (2005) The Clorox Co. (Clorox® Toilet Wand™ System)
3) NAD Case #4385 (2005) Bausch & Lomb, Inc. (ReNu with MoistureLoc)
4) NAD Case #4364 (2005) Playtex Products, Inc. (Playtex Beyond Tampons)
2:10 – 3:00 | Requirements for a Sound Methodology
♦ Validity: Ecological, external, internal, face, construct
♦ Bias: Code, position
♦ Reliability
♦ Task instructions: importance and impact
3:10 – 4:00 | Consumer Relevance
♦ Motivating Case: 3D TV
♦ Drivers of liking
♦ Setting action standards for consumer-perceived differences
♦ Linking expert and consumer data
♦ Clinical vs. statistical significance
♦ Consumer relevance in litigation
5) NAD Case #5197 (2010) Unilever US (Dove® Beauty Bar)
6) NAD Case #5443 and NARB #178 (2012) Colgate-Palmolive Co. (Colgate Sensitive Pro-Relief Toothpaste)

TUESDAY (APRIL 14, 8am - 4pm)
8:00 – 9:00 | Consumer Takeaway Surveys
♦ How respondents answer questions: Models of survey response, optimizing and satisficing, order effects
♦ Filters to avoid acquiescence and no opinion responses
♦ Survey questions: Biased, open-ended vs. closed-ended
♦ Steps to improve survey questions
7) NAD Case #4305 (2005) The Gillette Co. (Venus Divine® Shaving System for Women)
8) NAD Case #4981 (2009) Campbell Soup Co. (Campbell’s Select Harvest Soups)
9:10 – 10:00 | The Right Method, Design, Location, and Participants
♦ Test options: Monadic, sequential, direct comparisons
♦ Test design issues: Within-subject, matched samples, position and sequential effects, replication
♦ Choosing a testing location and defining test subjects
9) NAD Case #5049 (2009) The Procter & Gamble Co. (Clairol Balsam Lasting Color)
10) NAD Case #5425 (2012) Church & Dwight Co., Inc. (Arm & Hammer® Sensitive Skin Plus Scent)
11) NAD Case #4614 (2007) Ross Products Division of Abbott Labs. (Similac Isomil Advance Infant Formula)
12) NARB Panel #101 (NAD Case #3506) (1999) American Express vs. Visa
10:10 – 11:00 | Analysis: Interpretation and Communication
♦ The essence of hypothesis testing
♦ Common statistical analyses: Binomial, t-test, analysis of variance, chi-square test, non-parametric tests, scaling difference and ratings
♦ Determining statistical significance and confidence bounds
♦ The value of statistical inference in claims support
13) NAD Case #4906 (2008) Bayer vs. Summit VetPharm (Vectra 3D and Vectra)
14) NAD Case #5090 and NARB Panel #157 (2009) Bayer vs. Summit VetPharm (Vectra 3D and Vectra)
► Litigated Cases: SmithKline Beecham Consumer Healthcare, L.P. vs. Johnson & Johnson-Merck Consumer Pharms. Co. (S.D.N.Y. 2001); ALPO Petfoods, Inc. vs. Ralston Purina Co. (D.D.C. 1989), aff’d (D.C. Cir. 1990); Avon Products vs. S.C. Johnson & Son, Inc. (S.D.N.Y. 1994); McNeil-PPC, Inc. vs. Bristol-Myers Squibb Co. (2d Cir. 1991); FTC vs. QT, Inc. (N.D. Ill. 2006)
11:10 – Noon | Test Power
♦ The meaning of power
♦ Planning experiments and reducing cost
♦ Sample sizes for claims support tests
♦ Managing risks: Advertiser claim, competitor challenge
15) NAD Case #3605 (1999) Church & Dwight Co., Inc. (Brillo Steel Wool Soap Pads)
16) NAD Case #4248 (2004) McNeil-PPC, Inc. (Tylenol Arthritis Pain)
Noon – 1:00 | LUNCH
1:00 – 2:00 | Testing for Equivalence
♦ How the equivalence hypothesis differs from difference testing
♦ The FDA method for qualifying generic drugs: lessons for ad claims
♦ Improved methods for testing equivalence
17) NAD Case #5490 (2012) Colgate-Palmolive Co. (Colgate Optic White Toothpaste)
2:10 – 3:00 | Ratio, Multiplicative, and Count-Based Claims
♦ The difference between ratio and multiplicative claims
♦ Why ratio claims are often exaggerated
♦ Count-based claims (e.g., “9 out of 10 women found our product reduces wrinkles”)
18) NAD Case #5416 (2012) LG Electronics USA, Inc. (Cinema 3D TV & 3D Glasses)
19) NAD Case #4219 (2004) The Clorox Company (S.O.S.® Steel Wool Soap Pads)
20) NAD Case #5107 (2009) Ciba Vision Corp. (Dailies Aqua Comfort Plus)
21) NAD Case #5617 (2013) Reckitt Benckiser (Air Wick® Freshmatic® Ultra Automatic Spray)
3:10 – 4:00 | “Up To” Claims
♦ Definition and support of an “up to” claim
♦ FTC opinion with litigated case example
♦ Statistical models and psychological models
22) NAD Case #5263 (2010) Reebok International, LTD (EasyTone Women’s Footwear)

WEDNESDAY (APRIL 15, 8am - Noon)
8:00 – 9:00 | What to Do with No Difference/No Preference Responses
♦ No preference option analysis
♦ Power comparisons: Dropping, equal and proportional distribution
♦ Statistical models and psychological models
23) NAD Case #4270 (2004) Frito-Lay, Inc. (Lay’s Stax® Original Potato Crisps)
24) NAD Case #5453 (2012) Ocean Spray Cranberries, Inc. (Ocean Spray Cranberry Juice)
9:10 – 10:00 | Venues Within and Outside the USA
♦ False advertising litigation in Canada: differences from the USA
♦ Canadian advertising substantiation requirements
♦ Advertising dispute resolution outside the USA and Canada
♦ When a case spans multiple venues
♦ Class action lawsuits
► Litigated Case: Rogers Communications/Chatr vs. Commissioner of Competition (2013)
25) NAD Case #5249 and NARB Panel #172 (2010) Merial LTD (Frontline® Plus)
10:10 – Noon | Applying Course Principles and Concepts
♦ Group exercise: Develop a support strategy for an advertising claim, including engagement of all stakeholders, wording of the claim, takeaway, design and execution of a national product test, product procurement, analysis, and report
♦ Course summary and conclusion

DESIGNING EFFECTIVE SURVEYS: THE SCIENCE OF ANSWERING QUESTIONS
APRIL 16-17, 2015 (1.5 days)

This course offers an in-depth look at how people answer questions in surveys. We will take you through the process of operationalizing survey concepts and show how to improve survey item comprehension, validity, and reliability. A process model for answering a question will be presented to distinguish optimizing behavior from satisficing behavior.

Instructors: Dr. Daniel M. Ennis, Dr. Benoît Rousseau, Dr. John M. Ennis, David H. Bernstein, Kathleen (Kat) Dunnigan, and Nancy J. Felsten

* Approximately 8 credits for CLE: Accreditation will be sought for registrants in jurisdictions with CLE requirements.

THURSDAY (APRIL 16, 8am - 4pm)
8:00 – 9:00 | Introduction to Survey Design
♦ Introduction and purpose of the course
♦ Exercise: Design a short questionnaire to survey consumer takeaway
9:10 – 10:00 | Surveys: Definition and Purpose
♦ What is a survey?
♦ Purpose of conducting surveys
♦ Events and behaviors, attitudes and beliefs, subjective experiences
10:10 – 11:00 | Surveys in Litigation and Before the NAD
♦ History of surveys in Lanham Act cases
♦ Hearsay objections
♦ Federal Rules of Evidence: Rule 703
♦ Daubert effects
♦ Admissibility of expert testimony (reliability and relevance)
♦ Survey critiques
♦ NAD and litigated case examples
11:10 – Noon | How People Answer Questions
♦ Rules of ordinary conversation
♦ Context, sequence, and order of question effects
♦ Telescoping and memory
♦ Open-ended vs. closed-ended questions
Noon – 1:00 | LUNCH
1:00 – 2:00 | Optimizing and Satisficing
♦ A model for answering a question
♦ Predicting primacy and recency effects
♦ Causes of acquiescence
♦ Motivations to optimize
♦ “Don’t know” and “no preference” questions
2:10 – 3:00 | Survey Design
♦ Developing the concepts to be measured
♦ Operationalizing the concepts into survey items
♦ Cognitive interviewing and pretesting
♦ Data acquisition: Internet, phone, mail, mall intercepts
♦ When is a control needed?
♦ Selecting a proper control
● NAD Case #4305 (2005) The Gillette Co. (Venus Divine® Shaving System for Women)
3:10 – 4:00 | Sampling
♦ Defining a proper universe
♦ Types of sampling: Probability and non-probability, convenience, random, stratified, quota, cluster

FRIDAY (APRIL 17, 8am - Noon)
8:00 – 9:00 | Identifying and Removing Sources of Bias
♦ Sampling
♦ Participation and non-response
♦ Uncontrolled individual differences
♦ Code and order
♦ Leading questions
♦ Interviewer effects
9:10 – 10:00 | Analysis of Data and Statistical Issues
♦ Types of data
♦ Psychometric properties of the survey items
♦ Types of validity
♦ Precision
♦ Weighting
♦ Predictive models: Linear, logistic
♦ Confidence intervals
10:10 – Noon | Workshop: Designing a Survey
♦ Small group exercise to design a survey
♦ Survey design critique and group discussion
♦ Course summary and conclusion

REGISTRATION & INSTRUCTOR BIOS

HOW TO REGISTER
Register online at www.ifpress.com/short-courses where payment can be made by credit card. If you prefer to be invoiced, please call 804-675-2980 for more information.
Advertising Claims Support Course, April 13-15, 2015 (2.5 days): $1,975*
Designing Effective Surveys Course, April 16-17, 2015 (1.5 days): $1,250*
Register for both courses and save $100: $3,125*
*A 20% discount will be applied to each additional registration when registered at the same time, from the same company, for the same course.
*The Institute for Perception offers reduced or waived course fees to non-profit entities, students, judges, government employees and others. Please contact us for more information.
Fee includes all course materials, continental breakfasts, break refreshments, lunches, and group dinners.

Scientific Team
Dr. Daniel M. Ennis - President, The Institute for Perception. He holds doctorates in food science and in mathematical and statistical psychology, and has 35 years of experience in product testing theory and applications for consumer products. Danny consults globally and has served as an expert witness in a wide variety of advertising cases.
Dr. Benoît Rousseau - Sr. Vice President, The Institute for Perception.
Benoît received his food engineering degree from AgroParisTech in Paris, France, and holds a PhD in sensory science and psychophysics from the University of California, Davis. He manages sensory and consumer science projects with clients in the US and abroad.
Dr. John M. Ennis - Vice President of Research Operations, The Institute for Perception. John is the winner of the 2013 Food Quality and Preference Award for “Contributions by a Young Researcher.” He has a strong interest in the widespread adoption of best practices throughout sensory science.

LOCATION: These two courses will be held at The Greenbrier® in White Sulphur Springs, WV. Renowned for its standard of hospitality and service, this hotel is an ideal location for executive meetings and consistently receives a AAA 5-Diamond rating.
LODGING: Lodging is not included in the course fee, and participants must make their own hotel reservations. A block of rooms is being held at The Greenbrier at a special rate of $195 (plus resort fees & taxes). To make a reservation, please call 1-877-661-0839 and mention that you are attending the Institute for Perception courses (note: the special rate is not available through online reservations). To learn more about The Greenbrier, visit their website at www.greenbrier.com.
TRANSPORTATION: Nearby airports include the Greenbrier Valley Airport (LWB, 15 min.), Roanoke, VA (ROA, 1 hr. 15 min.), Beckley, WV (BKW, 1 hr.), and Charleston, WV (CRW, 2 hrs.).
CANCELLATION POLICY: Registrants who have not cancelled at least two working days before the course will be charged the entire fee. Substitutions are allowed for any reason.

Legal Team
Anita Banicevic - Partner, Davies Ward Phillips & Vineberg in Toronto, Canada. Anita has represented clients in contested misleading advertising proceedings and investigations initiated by Canada’s Competition Bureau, and advises domestic and international clients on Canadian competition and advertising and marketing law.
Don Lofty - Retired Corporate Counsel at SC Johnson. Don has many years of experience in antitrust and trade regulation, with an emphasis on advertising law, including practice before the NAD. He was the head of the Marketing and Regulatory Legal Practice Group and managed the Legal Compliance Program at SC Johnson.
David H. Bernstein - Partner, Debevoise & Plimpton in New York City. David regularly represents clients in advertising disputes in courts nationwide, before the NAD, NARB and television networks, in front of state and federal regulators, and in arbitration proceedings. He is also an adjunct professor at the NYU and GWU law schools.
David G. Mallen - Partner, Loeb & Loeb in New York City. David specializes in advertising law, claim substantiation, and legal issues in media and technology. He was previously the Deputy Director of the NAD, where he analyzed legal, communication, and claim substantiation issues and resolved hundreds of advertising disputes.
Christopher A. Cole - Partner, Crowell & Moring in Washington, DC. Chris practices complex commercial litigation and advises on the development, substantiation, and approval of advertising and labeling claims. He has represented several leading consumer products and services companies and has appeared many times before the NAD.
Michael Schaper - Partner, Debevoise & Plimpton in New York City. Mike focuses on various types of complex civil litigation in the areas of antitrust and intellectual property law, as well as merger review and other antitrust counseling.
Kathleen (Kat) Dunnigan - Senior Staff Attorney, the NAD. Kat has worked for the Legal Aid Society’s Juvenile Rights Division, the Center for Appellate Litigation, and the Center for HIV Law and Policy. She has also litigated employment discrimination and civil rights claims, as well as many employment cases before the New Jersey Supreme Court.
Annie M. Ugurlayan - Senior Attorney, the NAD.
Annie has handled over 150 cases, with a particular focus on cosmetics and food cases. She is a published author, Chair of the Consumer Affairs Committee of the New York City Bar Association, and a member of the Board of Directors of the New York Women’s Bar Association Foundation.
Nancy J. Felsten - Advertising Attorney, Davis Wright Tremaine in New York City. Nancy advises advertising agencies and corporate clients on advertising, marketing and promotional matters. She frequently represents clients in false advertising challenges before the NAD, television networks, the FTC, and State Attorneys General.
Lawrence I. Weinstein - Partner, Proskauer Rose in New York City. Larry is co-head of the firm’s renowned False Advertising and Trademark Group. His practice covers a broad spectrum of intellectual property law, false advertising, trademark, trade secret, and copyright matters, as well as sports, art and other complex commercial cases.
Hal Hodes - Staff Attorney, the NAD. Prior to joining the NAD, Hal worked in private practice, where he represented hospitals and other health care practitioners in malpractice litigation. Hal has also served as an attorney at the New York City Human Resources Administration representing social services programs.
To read more extensive biographies, please visit www.ifpress.com/short-courses/
