ERROR BOUNDS FOR APPROXIMATE

c 2007 Society for Industrial and Applied Mathematics

SIAM J. NUMER. ANAL.

Vol. 45, No. 6, pp. 2510–2536

ERROR BOUNDS FOR APPROXIMATE EIGENVALUES

OF PERIODIC-COEFFICIENT LINEAR

DELAY DIFFERENTIAL EQUATIONS∗

ED BUELER†

Abstract. We describe a new Chebyshev spectral collocation method for systems of variablecoefficient linear delay differential equations with a single fixed delay. Computable uniform a posteriori bounds are given for this method. When the coefficients are periodic, the system has a unique

compact nonnormal monodromy operator whose spectrum determines the stability of the system.

The spectral method approximates this operator by a dense matrix of modest size. In cases where

the coefficients are smooth we observe spectral convergence of the eigenvalues of that matrix to those

of the operator. Our main result is a computable a posteriori bound on the eigenvalue approximation

error in the case that the coefficients are analytic.

Key words. Chebyshev, collocation, delay differential equations, eigenvalues, monodromy

operator, a posteriori, spectral methods

AMS subject classifications. 34K06, 34K20, 65Q05, 65F15, 65L60

DOI. 10.1137/050633330

1. Introduction. Consider the linear delay differential equation (DDE)

(1.1)

x

¨ + x˙ + (δ + 0.1 cos(πt))x = b x(t − 2),

with δ, b ∈ R. This is a delayed, damped Mathieu equation [21].

Question: For which values of δ, b is (1.1) asymptotically stable in

the sense that all solutions go to zero as t → ∞?

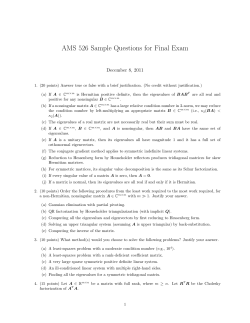

A practical and visual answer to this question, for (δ, b) ∈ [0, 20] × [−10, 10] in

(1.1), is the numerically produced stability chart in Figure 1.1.

Questions like this one arise in the stability analysis of many DDE models,

including nonlinear models. Indeed, linear periodic DDEs frequently occur as the

“variational equation” for perturbations of a periodic solution of a nonlinear DDE

[18], and thus questions of this type are important in nonlinear dynamics as well.

Stability charts like Figure 1.1 are useful in applications, including biology [25] and

engineering [29] stability problems. In the context of machine tool vibrations, for

instance, stability charts allow the choice of parameters which minimize regenerative

vibrations and maximize throughput and quality [20, 37].

The stability chart in Figure 1.1 is produced pixel-by-pixel by numerically approximating the spectral radius of the compact monodromy operator associated to DDE

(1.1). This operator is defined in section 2. Its eigenvalues are called multipliers. For

each (δ, b) parameter pair there is a monodromy operator, and the pixel is marked

as stable if the computed spectral radius is less than one. By “computed spectral

radius” we mean this: Numerical methods applied to equations like (1.1) can usually be formulated as giving a square matrix which “approximates” the monodromy

∗ Received by the editors June 9, 2005; accepted for publication (in revised form) May 14, 2007;

published electronically November 28, 2007. This work was supported in part by NSF grant 0114500.

http://www.siam.org/journals/sinum/45-6/63333.html

† Department of Mathematics and Statistics, University of Alaska, Fairbanks, AK 99775 (ffelb@

uaf.edu).

2510

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2511

10

unstable

b

5

0

(δ,b)=(1,1/2)

(asymptotically) stable

−5

−10

0

5

10

δ

15

20

Fig. 1.1. Stability chart for a delayed, damped Mathieu equation.

operator, in the intended sense that the largest (magnitude) eigenvalues of the matrix

approximate the largest multipliers of the operator [7, 10, 21, 24]. Standard numerical methods compute the eigenvalues of the matrix [17], and thus we get a computed

spectral radius of the monodromy operator.

An example of the problem which motivates this paper is this: Based on Figure

1.1 we expect that the particular parameter pair (δ, b) = (1, 1/2) will be stable. In

fact, for this parameter pair we get, from the spectral method introduced in this

paper, the numerical value 0.612992319912 for the spectral radius of the monodromy

operator. Based on many computations, by himself and others, of the eigenvalues

of monodromy operators (the “multipliers”) of periodic linear DDEs using spectral

methods [8, 15, 21, 24], the author believes this value is trustworthy. In fact, the

author believes that all twelve digits given are correct. But can one actually bound

the difference between the actual multipliers and their approximations? Is the bound

computable, and is it close to the actual error?

Figure 1.2 shows what we want, namely, to have the computed large eigenvalues

of the matrix approximation within “error circles” in which the actual multipliers are

known to reside. This figure results from applying our main result, which will be

stated in Theorem I. It gives the desired kind of computable bounds for a class of

DDEs which includes (1.1). We see, in particular, that the (δ, b) = (1, 1/2) case of

(1.1) is stable because all error circles are within the unit disc.

This paper is concerned with linear periodic-coefficient DDEs with fixed delays.

For such equations there is a Floquet theory [30] somewhat analogous to that for

ordinary differential equations (ODEs). For constant-coefficient DDEs the multipliers

can be determined, in theory or numerically, by finding the roots of a characteristic

equation. For linear, periodic-coefficient DDEs with “integer” delays [19], if a Floquet

transition matrix for certain linear ODEs associated to the DDE can be found exactly,

then complex variable techniques can also in theory determine the multipliers [19, section 8.3]. (The Floquet transition matrix is the fundamental solution evaluated at

one period of the coefficients of a periodic-coefficient linear ODE.) In general, however, ODE fundamental solutions must themselves be approximated. Furthermore,

an approximation of the monodromy operator itself is of interest in many problems,

2512

ED BUELER

0.5

0

−0.5

−0.4

−0.2

0

0.2

0.4

0.6

0.8

Fig. 1.2. Computed multipliers (dots) of (1.1) with (δ, b) = (1, 1/2). Application of the technique of this paper (with 76 Chebyshev collocation points, in particular) gives an error radius of 0.016

around the computed multipliers (solid circles) for those actual multipliers μ such that |μ| ≥ 0.2

(dotted circle). The equation is seen to be stable because all eigenvalues are within the unit circle

(dashed).

including in cases where the eigenfunctions of the operator are important.

The DDEs addressed in this paper are d-dimensional systems of the form

(1.2)

y˙ = A(t)y + B(t)y(t − τ ),

where τ > 0 is fixed and where A(t), B(t) are d × d continuous matrix-valued coefficients. Higher-order scalar equations like (1.1) can, of course, be written as first-order

systems of form (1.2). The Chebyshev collocation method for initial value problems

(IVPs), described next and in section 3, makes no further restrictions on the form of

the problem. To possess a unique monodromy operator, however, the coefficients A, B

of the DDE must have common period T . We also assume T = τ . The T = τ case

is of importance in applications [20], and it is a technically easier first case in which

to address the computation of error bounds on computed multipliers. To estimate

the eigenvalue approximation error for our spectral method we will require the coefficients A, B to be analytic. Without some strong smoothness assumption we cannot,

of course, expect spectral convergence of either solutions or eigenvalues.

This paper presents several new techniques for the numerical analysis of DDEs.

First we describe a new Chebyshev spectral collocation method for solving DDE

IVPs of the form (1.2). The modest amount of notation necessary to describe the

method in detail is given in section 3, but the essentials of the method can be communicated without it. Indeed, we choose N collocation points and then replace the

DDE (1.2) by its collocation approximation

(1.3)

ˆN v = M

ˆ Av + M

ˆ B w,

D

ˆN , M

ˆ A , and M

ˆ B are square matrices of typically modest size—e.g., 30 to 300

where D

rows—defined in section 3, and where v, w are vectors of collocation values of y(t) and

y(t − τ ), respectively. The solution of the linear system (1.3) by Gauss elimination (or

other dense matrix methods) completes the spectral method. We give computable a

posteriori error bounds on the uniform error of this method (Theorem 3.4).

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2513

Second, we make an observation which is almost trivial, but which nonetheless

gives a new spectral method for approximating the monodromy operator associated

to DDE (1.2). We observe that the matrix which computes v from w, in (1.3), is a

matrix approximation of the monodromy operator:

−1

ˆN − M

ˆA

ˆB.

(1.4)

UN = D

M

We have seen in many examples [9, 10] that the eigenvalues of UN are excellent

approximations of the multipliers.

In fact, spectral methods have been applied to DDEs many times in the past

[5, 13, 23, 24]. Though the above specific spectral method is new to our knowledge,

in that it uses collocation at the Chebyshev extreme points, the results just stated

should not be surprising to many readers.

Our third new technique is very different from the existing literature, however. It

says that one can compute bounds on the errors in the eigenvalues of UN .

Theorem I (Theorem 6.4 gives precise statement and proof). If the coefficient

functions A(t), B(t) in (1.2) are analytic on a closed interval of length T, and if the

delay is equal to the period (τ = T ), then the distance from a large multiplier μ to the

nearest of the computed eigenvalues λi of UN is bounded:

min |μ − λi | ≤ ω cond(V˜ ).

Here “cond(V˜ )” is a computable condition number coming from a numerical diagonalization of UN . The other factor ω comes from a combination of a priori bounds,

derived from properties of the coefficients A, B in (1.2), and a posteriori estimates on

IVPs for (1.2) with predetermined initial functions (namely, Chebyshev polynomials).

We observe in many examples that ω decays exponentially, while cond(V˜ ) grows

slowly, as the number N of Chebyshev collocation points increases. Thus the estimate

is usably small in these cases, and we give two examples here.

The a posteriori estimates on IVPs just mentioned come from an apparently

new kind of result for spectral methods applied to ODEs (Theorem 3.4). A new

“bootstrapping” method for achieving good a posteriori bounds on the growth of

fundamental solutions to variable-coefficient ODEs is given in section 4. To prove

Theorem I we also give an apparently new theorem on eigenvalue perturbation for

diagonalizable operators on Hilbert spaces (Theorem 5.1 and Corollary 5.2). These

are all technical results for our current purposes, but they may be of independent

interest.

As noted, we address the case where the period of the coefficients T is equal to

the (single, fixed) delay τ . A preprint by the author [8] sketches the generalization

of the results to the situation in which T ≥ τ . Further generalization to multiple

“integer” delays [19] presents only resolvable bookkeeping difficulties [9].

Regarding previous work in this area, spectral methods were first applied to DDE

IVPs in [5] and [23]. More recently, collocation using the Gauss–Legendre points

has been applied to the problem of finding periodic solutions to nonlinear DDEs in

[13]. (Note that our problems have periodic coefficients but they do not, generally,

have periodic solutions.) There is also a substantial engineering literature addressing

stability—as opposed to solutions of IVPs—of ODEs and DDEs by spectral methods. For ODE problems, Sinha and Wu [28] use a Chebyshev Galerkin method, for

example. Engineering applications led the author and colleagues to use spectral methods to address DDE stability questions [10]. Luzyanina and Engelborghs address the

2514

ED BUELER

application of spectral methods, among others, to the stability of linear DDEs and

functional differential equations, and numerical evidence for spectral convergence of

the multipliers is given [24, Table 2].

A recent paper by Breda, Maset, and Vermiglio [7] shows that for constantcoefficient DDEs with fixed discrete and distributed delays, a class of Runge–Kutta

methods approximate the multipliers with a polynomial (in the mesh/step size) rate

of convergence; spectral convergence does not, of course, occur. No computable error

bounds are given.

The Chebyshev spectral method used here for DDEs first appeared in preprint

form in [8]. Gilsinn and Potra [15] have recently sketched an a priori proof of the

convergence of the numerical multipliers by this method. In [15] convergence is under

mesh-refinement (“h-refinement”), based on the proof techniques of [5]. The regularity

of the DDE to which the convergence argument applies is unspecified. No estimate of

the rate of convergence is given.

Mesh-refinement has not been considered in the current paper, as one cannot

achieve the spectral convergence necessary to have good eigenvalue estimation; in this

paper we address only “p-refinement,” where one increases the degree of polynomial

approximation.

We believe that this paper represents the first quantitative technique for bounding

the eigenvalue approximation error for a large class of continuous, infinite-dimensional

systems whose dynamics are represented by nonnormal compact operators which are

not already represented as integral operators with known kernels (compare [1]), or

as Toeplitz operators (see [35] and the literature cited there), or which come from

constant-coefficient equations (e.g., [7]). Note that the monodromy operators here

are described by formula (2.4), so that they are operators of the form (finite rank

operator) plus (integral operator). Finding the kernel of the integral operator part

generally requires approximation of the fundamental solution of a variable-coefficient

ODE, however. This is itself a nontrivial task in general [22].

2. The monodromy operator for a linear, periodic DDE. Consider the

linear DDE system

(2.1)

y(t)

˙ = A(t) y(t) + B(t) y(t − 2)

for y(t) ∈ Cd . Suppose A, B are continuous matrix-valued functions on I = [−1, 1]

which extend to not-necessarily-continuous periodic functions on the real line with

period T = 2. The normalization of the period and the delay to 2, and the choice

of interval [−1, 1], are convenient for describing the Chebyshev collocation method

(below). This normalization can be achieved by scaling and shifting the independent

variable t in any linear periodic DDE with T = τ , of course.

The monodromy operator U for (2.1) is defined as follows by considering the

IVP [18]. Suppose y(t) solves (2.1) with initial condition y(t) = f (t) for t ∈ I; y(t) is

then defined for t ∈ [−1, ∞). For s ≥ 1, define ys (t) = y(t + s − 1) for t ∈ I; this is

standard shift notation for DDEs [18]. Note that ys is a Cd -valued function defined

on I and that y1 = f . Define U to act on a soon-to-be-specified space of functions

on I:

(2.2)

U f = y3 .

Note U maps a function on I back to a function on I; such a form is essential if U is

to have a spectrum.

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2515

Thus the monodromy operator acts by solving an ODE IVP

(2.3)

z(t)

˙ = A(t)z(t) + B(t)f (t),

z(−1) = f (1),

for t ∈ I, to give U f = z. (Also, z = y3 , but we want to clearly show the ODE

IVP.) This linear, nonhomogeneous ODE problem can be solved by integration if the

solution to the corresponding homogeneous ODE problem is known. In fact,

t

(2.4)

(U f )(t) = ΦA (t)f (1) +

ΦA (t)ΦA (s)−1 B(s)f (s) ds,

−1

where ΦA (t) is the fundamental solution of z˙ = A(t)z. (By definition ΦA (t) solves

Φ˙ A = AΦA on I and ΦA (−1) = Id , where Id is the identity on Cd .) Note that the

second summand in this formula for the monodromy operator is an integral operator

of Volterra type. Knowledge of the kernel of this integral operator, namely, k(t, s) =

ΦA (t)ΦA (s)−1 B(s), generally requires the numerical solution of an ODE problem,

however.

We emphasize that y2n+1 = U n f solves, by “the method of steps,” the IVP

consisting of (2.1) and y(t) = f (t), t ∈ I. More precisely, if 2n − 1 ≤ t ≤ 2n + 1,

then y(t) = (U n f )(t − 2n), and so y(t) is determined by steps for all of [−1, ∞). The

(asymptotic) stability of (2.1) is therefore given by the condition that the spectral

radius of U be less than unity. (On the other hand, the degree of nonnormality

of U will determine how much caution is required in extracting meaning from the

multipliers of the linearization (variational equation) of a nonlinear DDE around a

periodic solution; compare [35].)

Note that the solutions of linear DDEs with periodic coefficients are not themselves periodic. Also, the solutions are generally only regular in pieces. For instance,

even if the initial function f is smooth on the interval I, and if A, B are smooth on

all of R, nonetheless some derivative of y(t) is generally not continuous at t = 1.

An alternative form for the monodromy operator uses a fundamental solution to

the DDE itself [18, 15]. In the periodic-coefficient case of the current paper, formula

(2.4) is equivalent to, but easier to use than, this alternate form. Our reasons for

the “easier to use” claim are necessarily complicated, but they relate to the piecewise

regularity of the eigenfunctions of the DDE problem. See Lemma 4.3 below.

We still have not specified the space on which U acts, and it is vital to choose a

usable space. Inspection of formula (2.4) shows that if f is continuous on I, then U f

is continuous on I. It is appropriate to fix notation.

Definition 2.1. Let C(I) be the space of scalar continuous functions on I. Let

C = C(I) ⊗ Cd be the space of Cd -valued continuous functions.

Certainly a monodromy operator U : C → C is well defined from formula (2.4).

Its spectrum determines the stability of DDE (2.1). On the other hand, it will be

desirable to work in a Hilbert space because we need tools for eigenvalue perturbation.

In addition, the output of U is more regular than the input. We will suffer no loss

of generality if we restrict our attention to a Hilbert space which is a subspace of C

because, in particular, the eigenfunctions of U : C → C will in every case be in our

chosen subspace.

The Hilbert space we choose is defined via a well-behaved orthogonal basis of

nonperiodic, smooth functions which do a good job of approximating functions in C,

namely, Chebyshev polynomials with a well-chosen normalization. As is well known,

these polynomials also do an excellent job of interpolating smooth functions on I; see

section 3. In the next two definitions, and the two lemmas which follow, we confine

2516

ED BUELER

ourselves to the scalar case (d = 1) for notational convenience. There will be no actual

loss of generality (as explained after Lemma 2.5).

Definition 2.2. Let L2 be the Hilbert space of complex-valued (measurable)

1

functions f on I such that −1 |f (t)|2 (1 − t2 )−1/2 dt < ∞, and define the inner prod−1/2

1

dt. (Note that “L2 ” will always refer to

uct f, g L2 = −1 f (t) g(t) 1 − t2

2

2

Chebyshev polynomials are defined as

a weighted L√ space.) The (L -)normalized

Tˆ0 (t) = (1/ π)T0 (t), Tˆk (t) = ( 2/π)Tk (t), where Tk (t) = cos(k arccos t) are the

standard Chebyshev polynomials. The set {Tˆk }∞

k=0 is an orthonormal (ON) basis of

2

2

2

ˆ

ˆ

L . For f ∈ L let fk = Tk , f L2 be the (L -)Chebyshev expansion coefficients of f .

∞

∞

Thus f (t) = k=0 fˆk Tˆk (t), with convergence in L2 , and f 2L2 = k=0 |fˆk |2 .

Unfortunately, the monodromy operator U is not bounded on L2 . In particular,

(2.4) refers to the point values of the input function. Therefore, we turn to a Hilbert

subspace of L2 ∩ C introduced by Tadmor [31].

∞

Definition 2.3. H 1 := {f ∈ L2 | k=0 (1 + k)2 |fˆk |2 < ∞}. For f, g ∈ H 1 we

∞

define the inner product f, g H 1 = k=0 (1 + k)2 fˆk gˆk . Let T˜k (t) = (1 + k)−1 Tˆk (t) be

the H 1 -normalized Chebyshev polynomials. The set {T˜k }∞

H 1.

k=0 is an ON basis of 1,2

1

The space H is a Sobolev space. It is not actually equivalent to WT = f ∈

2

L f L2 + f˙L2 < ∞ [31], but we will have no need for such an equivalence. Next,

we see that H 1 ⊂ C(I) and pointwise evaluation is bounded.

Lemma 2.4. If f ∈ H 1 , then f is continuous (it has a continuous representative)

and |f (t)| ≤ 0.9062f H 1 . In particular,

(2.5)

δ1 f ≡

∞

fˆk Tˆk (1) =

k=0

∞ k=0

1

−1

Tˆk (1)Tˆk (t)(1 − t2 )−1/2 f (t) dt

is a bounded linear functional f → f (1) on H 1 .

Proof. The proof is a standard exercise for Sobolev spaces. See [8] for a complete

proof.

To use H 1 we also need to know that if f is sufficiently regular on I, then f ∈ H 1 .

We give a criterion via the Fourier series of f (cos θ). In fact, if f ∈ C 1 (I), then the

1

[−π, π]; that is, f can be periodically extended

even function f (θ) = f (cos θ) is in Cper

1

with period 2π to be C on R. For k ∈ Z let fˆ (k) be the kth Fourier coefficient of

π

f ; that is, fˆ (k) = (2π)−1/2 −π e−ikθ f (θ) dθ. Then fˆk = fˆ (k) = fˆ (−k) for k > 0 and

π

fˆ0 = 2−1/2 fˆ (0). For functions g on [−π, π] define the norm g2F := −π |g(θ)|2 dθ.

Lemma 2.5.

(2.6)

(2.7)

f 2L2 =

f 2H 1 =

∞

1 ˆ

1

|f (k)|2 = f 2F , and

2

2

1

2

k=−∞

∞

(1 + k)2 |fˆ (k)|2 ≤ f 2F + f 2F .

k=−∞

Thus C 1 (I) ⊂ H 1 ⊂ C(I) and

(2.8)

f 2H 1 ≤ 2πf 2∞ + 2πf˙2∞

if f ∈ C 1 (I).

Proof. Again the proof is standard and is found in [8].

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2517

Now we return to the general Cd -valued (nonscalar) case. By mild abuse of

we let L2 be the space of Cd -valued measurable functions f for which

notation,

1

2

|f (t)| (1−t2 )−1/2 dt < ∞; there is a corresponding inner product. Again by abuse

−1

∞

of the notation we let H 1 be the subspace of L2 for which k=0 (1 + k)2 |fˆk |2 < ∞,

where fˆk = Tˆk , f L2 ∈ Cd . We give H 1 the obvious inner product.

Since U f solves IVP (2.3), U maps C to C 1 and indeed U : H 1 → H 1 . We can

extract from (2.4) a useful estimate

of U H

1.

Lemma 2.6. Suppose ΦA (t)ΦA (s)−1 ≤ CA for all −1 ≤ s ≤ t ≤ 1. Let

a2 = 1 + A2∞ and c = 0.9062. Then

√

1/2 2

(2.9)

U H 1 ≤ 2πd caCA + B∞ c2 + πa2 CA

.

/2

t

Proof. Suppose f ∈ H 1 and let g(t) = −1 ΦA (s)−1 B(s)f (s) ds. Note that

U f H 1 ≤ ΦA (t)f (−1)H 1 + ΦA (t)g(t)H 1 . Letting ϕ(t) = ΦA (t)f (−1), we have a

bound from Lemma 2.5:

ϕ2H 1

≤ 2π

d

2 2

ϕk 2∞ + (Aϕ)k 2∞ ≤ 2πd CA

a |f (−1)|2 .

k=1

On the other hand, if ω(t) = ΦA (t)g(t), then ω˙ = Aω + Bf , and so by Lemma 2.5,

ω2H 1

= 2π

d

2

ωk 2∞ + ((Aω)k ∞ + (Bf )k ∞ ) .

k=1

But

ΦA (t)ΦA (s)−1 |B(s)| |f (s)| ds

−1 −1≤s≤t≤1

1

π

π

2

CA B∞ f L ≤

CA B∞ f H 1

|f (s)| ds ≤

≤ CA B∞

2

2

−1

|ω(t)k | ≤ |ω(t)| ≤

1

max

2 −1/2

by the

ds. Similarly, |(A(t)ω(t))k |

πCauchy–Schwarz inequality with weight (1−s )

1

A

C

B

f

.

On

the

other

hand,

|(B(t)f

(t))k | ≤ B∞ |f (t)| ≤

≤

∞

A

∞

H

2

cB∞ f H 1 . Thus

π

π

2

2

ω(t)2H 1 ≤ 2πdB2∞

+ A2∞ CA

+ c2 f 2H 1 .

CA

2

2

3. A Chebyshev collocation method for linear DDEs (and ODEs). First

we consider IVPs for (d ≥ 1)-dimensional ODE systems of the form

(3.1)

y(t)

˙ = A(t)y(t) + u(t)

for y(t), u(t) ∈ Cd , and A(t) a d × d matrix.

Recall that I = [−1, 1] and that C denotes Cd -valued continuous functions on

I. Denote f ∈ C by f = (f1 , . . . , fd ) . For f ∈ C let f ∞ = maxt∈I |f (t)|, where

“| · |” is the Euclidean norm on Cd . Note that | · | induces a norm on d × d matrices,

also denoted “| · |.” For continuous matrix-valued functions A(t) = (aij (t)) define

A∞ = maxt∈I |A(t)|.

2518

ED BUELER

To present our spectral method we need to recall the basics of, and give notation

for, polynomial interpolation and collocation at the Chebyshev points.

Definition 3.1. Let PN ⊂ C be the space of Cd -valued polynomials of degree

at most N . Note that PN has dimension l = d(N + 1). The Chebyshev collocation

(extreme) points in I are tj = {cos(jπ/N )} for j = 0, 1, . . . , N [33]. Note that

t0 = 1 > t1 > · · · > tN = −1.

On the function (vector) spaces C and PN we have collocation operators as follows.

Evaluation at N + 1 Chebyshev collocation points produces a vector in Cl . We regard

evaluation of continuous functions as a linear operator GN : C → Cl :

(3.2)

GN f = (f1 (t0 ), . . . , fd (t0 ), f1 (t1 ), . . . , fd (t1 ), . . . , . . . , . . . , f1 (tN ), . . . , fd (tN )) .

We will always order the scalar components of an element of Cl consistently with the

output of GN (if the element refers to the collocation values of a Cd -valued function).

Restricting GN to polynomials gives a bijection EN : PN → Cl . The inverse of EN ,

namely, PN : Cl → PN , “creates” a Cd -valued polynomial from collocation values;

see (3.3) below for a method for computing PN .

The composition of PN and GN is the interpolation operator IN = PN ◦ GN :

C → PN . That is, if p = IN f for f ∈ C, then p is a Cd -valued polynomial of degree

N such that p(tj ) = f (tj ).

One may implement polynomial interpolation by “discrete Chebyshev series.”

Concretely, suppose f ∈ C(I) is a scalar function. Recall that Tk (t) = cos(k arccos t)

are the standard Chebyshev polynomials. Then p = IN (f ) is given by

(3.3)

p(t) =

N

k=0

f˜k Tk (t),

f˜k =

N

Ckj f (tj ),

Ckj

j=0

2

=

cos

N γj γk

πjk

N

,

where γj = 2 if j = 0 or j = N and γj = 1 otherwise [26]. These formulas can also be

regarded as computing p = PN ({f (tj )}) if {f (tj )} is an arbitrary vector in Cl . They

may be implemented by a modification of the fast Fourier transform (FFT) [33].

A fundamental observation is that interpolation at the Chebyshev collocation

points is spectrally accurate for analytic functions. Indeed, it converges exponentially

with a known constant as follows. Note that an ellipse as described in the next lemma

always exists because the region of analyticity is open and contains I by assumption.

Lemma 3.2 (see [31]). Suppose f is analytic on the closed set I = [−1, 1]. That

is, suppose f is analytic in an open region R ⊂ C such that I ⊂ R. There exist

constants c > 0 and 0 ≤ ρ < 1 such that for all N ≥ 1 the interpolant p = IN f

satisfies f − p∞ ≤ cρN . Indeed, if E is an ellipse with foci ±1, and if the interior

of E is contained in R, and if the semimajor/-minor axes of E are of length S and

s, respectively, then f − p∞ ≤ c (S + s)−N for some c > 0.

The “Chebyshev (spectral) differentiation matrix” is the map

DN = EN ◦

d

◦ PN : C l → C l .

dt

The entries of DN are exactly known for all N [33]. In MATLAB, using cheb.m

from [33], DN = kron(cheb(N),eye(d)). The action of DN can also be computed

efficiently by a modification of the FFT [33].

The matrix DN is not invertible; it has kernel of dimension d. We do not quite

seek its inverse, however, though solving the differential equation (3.1) by the Chebyshev spectral method we are about to describe is a closely related task. We need to

2519

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

ˆ N as the

incorporate the initial condition from (3.1) before inverting, so we define D

invertible l × l matrix which is equal to DN in its first dN = l − d rows but has

1 ≤ k ≤ dN,

ˆ N )jk = 0,

(D

δj−dN,k−dN , dN + 1 ≤ k ≤ l,

ˆ N are zeroed, except that the identity

for dN + 1 ≤ j ≤ l. That is, the last d rows of D

Id is inserted in the lower right d × d block.

Now, recalling ODE (3.1), define a block-diagonal matrix and a vector

⎞

⎛

⎞

⎛

u(t0 )

A(t0 )

⎟

⎜

⎟

⎜

..

..

⎟

⎜

⎟

.

.

ˆA = ⎜

u

ˆ=⎜

M

⎟,

⎟.

⎜

⎠

⎝

⎝

A(tN −1 )

u(tN −1 )⎠

0d

y0

ˆN , M

ˆ A are l × l, while u

Here “0d ” denotes the d × d zero matrix. Both D

ˆ is l × 1.

Our approximation of the IVP for (3.1) is described by the following lemma. The

ˆN , M

ˆ A , and u

proof comes immediately from the definitions of D

ˆ.

Lemma 3.3 (our spectral method for ODE IVPs). Fix N ≥ 1. Suppose A(t)

is a continuous d × d matrix-valued function of t ∈ I, u(t) ∈ C, and y0 ∈ Cd . The

following are equivalent:

• p ∈ PN satisfies

p(t

˙ j ) = A(tj )p(tj ) + u(tj )

(3.4)

• v ∈ Cl satisfies

for 0 ≤ j ≤ N − 1

and

p(−1) = y0 ;

ˆN v = M

ˆ Av + u

D

ˆ.

The equivalence is p = PN v and v = EN p.

ˆN − M

ˆ A )−1 u

In practice one computes v = (D

ˆ by Gauss elimination to solve ODE

(3.1). For this spectral method we have the a posteriori estimates given in the next

theorem.

Theorem 3.4. Suppose A(t) is a continuous d × d matrix-valued function of

t ∈ I, u(t) ∈ C, and y0 ∈ Cd . Suppose y ∈ C satisfies the IVP (3.1). Suppose the

fundamental solution satisfies the bound

ΦA (t)ΦA (s)−1 ≤ CA for all − 1 ≤ s ≤ t ≤ 1.

(3.5)

Let N ≥ 1, let p ∈ PN be the Cd -valued polynomial described by Lemma 3.3, and let

˙

− A(−1)y0 − u(−1). Then

Rp = p(−1)

(3.6)

y − p∞ ≤ 2CA Ap − IN (Ap)∞ + u − IN (u)∞ + |Rp |

and

(3.7)

y˙ − p

˙ ∞ ≤ (2A∞ CA + 1) Ap − IN (Ap)∞ + u − IN (u)∞ + |Rp | .

The proof of Theorem 3.4 will be given momentarily, but some comments are in

order. The estimates on the right sides of (3.6) and (3.7) have the structure

(stiffness) [(sum of uniform interpolation errors) + (initial residual)].

2520

ED BUELER

In fact, we interpret CA as an estimate of the fastest possible exponential change in

solving y˙ = A(t)y, and we interpret Rp as the amount by which (3.1) is not satisfied

at the initial time −1. Section 8 gives practical methods for evaluating the uniform

interpolation errors in (3.6) and (3.7).

To prove Theorem 3.4 we need a bound on the degree N monic polynomial with

roots t0 , . . . , tN −1 . Such polynomials are uniformly exponentially small as N → ∞.

A proof of the following lemma, which seems not to appear in previous literature, is

given in [8].

Lemma 3.5. For N ≥ 1 let QN (t) = (t − t0 ) . . . (t − tN −1 ). For t = cos θ ∈ I =

[−1, 1], QN (t) = 21−N (cos(θ) − 1) sin(N θ)/ sin(θ). Thus QN (−1) = (−1)N N 22−N

and QN ∞ = N 22−N on I.

Proof of Theorem 3.4. Let q = IN (Ap) and w = IN (u). Since r = p˙ − q − w ∈ PN

and r(tj ) = 0 for j = 0, . . . , N − 1 by Lemma 3.3, it follows that there is z ∈ Cd

such that r = zQN . Evaluating at t = −1, we find Rp = z(−1)N N 22−N so |z| =

|Rp | 2N −2 /N . On the other hand,

y˙ − p˙ = A(y − p) + (Ap − q) + (u − w) − zQN ,

(3.8)

so

y(t) − p(t) =

t

−1

ΦA (t)ΦA (s)−1 (Ap(s) − q(s)) + (u(s) − w(s)) − zQN (s) ds.

Note that y(−1) = p(−1). Taking norms, and using Lemma 3.5 to see that zQN ∞ ≤

|Rp |,

|y(t) − p(t)| ≤ 2CA Ap − q∞ + u − w∞ + |Rp | .

This inequality implies (3.6). Finally, using (3.8) and Lemma 3.5, we find that (3.6)

implies (3.7).

Example 1. We apply Theorem 3.4 to the ODE IVP

(3.9)

y˙ = (2t + 1)y + (2t + 1) sin(3(t2 + t)),

y(−1) = 1.

The solution can be computed exactly by integration, and note that the solution

y(t) is entire because the coefficients are entire. The effectiveness of estimate (3.6) is

seen in Figure 3.1 below. As N increases, spectral convergence starts to occur only

when polynomial interpolation can “handle” the (complex) exponential rates present

in the fundamental solution ΦA (t) and the nonhomogeneity u(t) on the interval I; this

happens at N ≈ 10 here. The error y − p∞ decreases to approximately 10−14 , and

this is the level of rounding error because y∞ = 9.35. The main point, however, is

that the estimate from (3.6) nicely follows the error, though with a slowly growing

overestimation factor of roughly 103 when N = 40. Both the combined interpolation

error (the sum Ap−IN (Ap)∞ +u−IN (u)∞ ) and the initial residual Rp contribute

nontrivially to the estimate. Note that we use the optimal bound CA = e9/4 when

applying Theorem 3.4 in this example.

Now we return to DDE (2.1), which is our actual interest. Define

⎞

⎛

B(t0 )

⎟

⎜

..

⎟

.

ˆB = ⎜

M

⎟,

⎜

⎠

⎝

B(tN −1 )

Id

0d

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2521

3

10

0

10

−5

10

−10

10

−14

10

0

10

20

N

30

40

Fig. 3.1. Theorem 3.4 applied to scalar ODE (3.9) reveals spectral convergence in uniform

error y − p∞ on I (squares), where y is the solution and p is its polynomial approximation. The

a posteriori estimates (circles) (the right side of (3.6)) closely track the actual error. The combined

interpolation error (“+”) and the initial residual (“×”) are also shown; see the text.

where Id and 0d are the d × d identity and zero matrices, respectively. The insertion

of “Id ” in the lower left position represents the connection condition for the DDE

method of steps. The Chebyshev collocation approximation of DDE (2.1), or, more

particularly, of formula (2.3), is

(3.10)

ˆN v = M

ˆ Av + M

ˆBw

D

if vj ≈ z(tj ) and wj = f (tj ). Note the obvious fact that the IVP for DDE (2.1) is of

the same form as ODE (3.1), but with u = Bf . Theorem 3.4 can therefore be applied

to estimating errors in IVPs for (2.1).

The periodicity of the coefficients is not really relevant to solving a DDE of form

(2.1) by the method of steps, when we use method (3.10). (Without periodicity there

is no unique monodromy operator, however.) All that is required to use (3.10) to solve

ˆA

a nonperiodic DDE is that we must re-evaluate the collocated coefficient matrices M

ˆ

and MB on each successive interval. The length of the interval would usually be equal

to the delay if there is only one delay, in particular.

When the coefficients of (2.1) have period T = τ , the matrix which approximates

U is

−1

ˆN − M

ˆA

ˆ B ∈ Cl×l .

(3.11)

UN = D

M

In practice we need to assume that the matrix inverse in (3.11) exists in order to

find the entries of UN , but we naturally observe the computed condition number for

inversion. On the other hand, to compute the eigenvalues and eigenvectors of UN we

do not actually need the inverse. Vectors v such that UN v = λv evidently solve the

generalized eigenproblem

ˆBv = λ D

ˆN − M

ˆ A v.

(3.12)

M

The eigenvalues can be approximated by the QZ algorithm [17], for instance.

4. Bounds on ODE fundamental solutions. Before we move on to the main

goal of numerically approximating the monodromy operator and its eigenvalues, we

2522

ED BUELER

must find usable bounds on ODE fundamental solutions. In fact, we believe the results

of this section are of independent interest for those needing computable bounds on

the growth of solutions to variable-coefficient linear ODEs.

We recall existence and analytic continuation. The following result is proven by

a straightforward Picard iteration argument [8].

Lemma 4.1. Suppose A(z) ∈ Cd×d is (entrywise) analytic on a convex open set

E ⊃ I = [−1, 1]. Then there is a unique function ΦA (z), analytic on E, satisfying

z

A(ζ)ΦA (ζ) dζ.

(4.1)

ΦA (z) = Id +

−1

If |A(z)| ≤ α for z ∈ E, then |ΦA (z)| ≤ eα|z+1| for z ∈ E. Furthermore, Φ˙ A (t) =

A(t)ΦA (t) for t ∈ I.

One can easily show, in addition, that the transition matrix Ω(t) = ΦA (t)ΦA (s)−1 ,

˙

for −1 ≤ s ≤ t ≤ 1, has an a priori estimate. (Note that Ω satisfies Ω(t)

= A(t)Ω(t),

α(t−s)

˜

Ω(s) = Id .) By Gronwall’s inequality, in fact, |Ω(t)| ≤ e

if |A(τ )| ≤ α

˜ for

τ ∈ [s, t]. Unfortunately, this bound on Ω(t) is frequently not close to the actual maximum of |Ω(t)|. We can, however, use the collocation algorithm to approximate the

fundamental solution and then use a posteriori estimates from Theorem 3.4 to bound

the fundamental solution. There is a “bootstrapping” aspect to such a technique:

one must have some bound on the fundamental solution in order to apply Theorem

3.4, which leads to an improved bound. This is the content of the next lemma, for

which one should recall that ΨA (t) = (ΦA (t)−1 ) satisfies the “adjoint equation”

˙ A (t) = −A(t) ΨA (t) with ΨA (−1) = Id .

Ψ

Lemma 4.2. Consider the following IVPs:

(4.2)

y˙ s (t) = A(t)ys (t),

ys (−1) = es ,

s = 1, . . . , d,

where {es } is the standard basis for Cd .

Suppose that for each s we have a polynomial approximation ps of

ys , and that we y1 (t) . . . yd (t)

have estimates ys −ps ∞ ≤ μs ; see Theorem 3.4. Note that

Φ

(t)

=

A

is the fundamental solution to y˙ = A(t)y. Let ΦN (t) = p1 (t) . . . pd (t) , the apd

proximation of ΦA (t). If ξ 2 = s=1μ2s , then |ΦA (t) − ΦN (t)| ≤ ξ for all t ∈ I. Similarly, if ΨA (t) = z1 (t) . . . zd (t) is the fundamental solution to the adjoint equation z˙ = −A(t) z, and if ΨN (t) = q1 (t) . . . qd (t) , where qs (t) are polynomial

approximations to zs (t) satisfying zs − qs ∞ ≤ νs , so that ΨN (t) is the collocation

d

approximation of ΨA (t), then |ΨA (t) − ΨN (t)| ≤ ω for t ∈ I, where ω 2 = s=1 νs2 .

Also,

ΦA (t)ΦA (s)−1 ≤ (ξ + ΦN ∞ ) (ω + ΨN ∞ ) .

Proof. Note that

|ΦA (t)ΦA (s)−1 | ≤ (ΦA − ΦN ∞ + ΦN ∞ ) (ΨA − ΨN ∞ + ΨN ∞ ) .

But

1/2

d

d

us (ys (t) − ps (t)) ≤ max |u|

μ2s

=ξ

|ΦA (t) − ΦN (t)| = max |u|=1

|u|=1 s=1

s=1

using the Cauchy–Schwarz inequality, and similarly for |ΨA (t) − ΨN (t)|.

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2523

Example 2. Consider the second-order ODE x

¨ + x˙ + (10 + 9 cos(πt))x = 0. It is

a relatively stiff damped Mathieu equation corresponding to

0

1

A(t) =

−10 − 9 cos(πt) −1

in first-order form. A quick calculation gives A∞ ≈ 19.026. Thus C1 = e2A∞ ≈

3.5381 × 1016 is the a priori bound on |ΦA (t)ΦA (s)−1 | from Gronwall. We use the

collocation algorithm with N = 50 to find ΦN (t), ΨN (t) approximating ΦA (t), ΨA (t).

The a posteriori estimates from Theorem 3.4 are computed using CA = C1 , and,

as in Lemma 4.2, we find |ΦA (t)ΦA (s)−1 | ≤ C2 = 2.7117 × 109 . This is a significant

improvement, but also we can now iterate, using CA = C2 in the a posteriori estimates

to generate C3 , and so on. The result is a sequence of bounds

|ΦA (t)ΦA (s)−1 | ≤ 3.5381 × 1016 , 2.7117 × 109 , 19.627, 19.587, 19.587, . . . .

Each number on the right is a bound, with an a priori argument for the first and

a posteriori arguments for the remainder. Evidently they converge superlinearly to

about 19.6. By looking at the numerically approximated fundamental solution we see

that this is a nearly optimal bound. In any case, such improvements by many orders

of magnitude make further error estimation using Theorem 3.4 very practical.

In addition to the Lemma 2.6 estimate on the norm of U , we require, for the a posteriori estimation of eigenvalues, an a priori result on the polynomial approximation

of eigenfunctions of U .

These eigenfunctions each solve a homogeneous ODE. In fact, if U x = μx for

μ ∈ C, then y = μx solves y˙ = Ay + Bx. Thus if μ = 0, then x˙ = A + μ−1 B x.

Let Φμ (t) be the fundamental solution to this ODE, so Φ˙ μ = (A + μ−1 B)Φμ and

Φμ (−1) = Id . Note that x(t) = Φμ (t)x(−1).

Suppose A(z), B(z) are analytic on an ellipse E with foci ±1. (If A, B are analytic

on the compact set I = [−1, 1], then they have such a common regularity ellipse.)

Define M ∞E = maxz∈E |M (z)| for continuous matrix-valued functions M (z). From

Lemma 4.1

|Φμ (z)| ≤ exp A + μ−1 B∞E |z + 1|

is an a priori bound on the analytic continuation of Φμ (t) to z ∈ E. In fact, when we

consider below those multipliers μ with magnitude greater than a chosen level σ > 0

we will use the bound

(4.3)

|Φμ (z)| ≤ Cσ := exp (A∞E + σ −1 B∞E ) (S + 1) ,

where S is the major semiaxis of a common regularity ellipse E for A and B. This a

priori bound for |Φμ (z)| turns out to be one of the two troublesome constants in the

main Theorem 6.4 on multiplier estimation.

As announced, we now bound the interpolation error for eigenfunctions of U .

Lemma 4.3. Suppose A, B are analytic d × d matrix-valued functions with a

common regularity ellipse E ⊂ C with foci ±1 and sum of semiaxes eη = S + s > 1.

Suppose U x = μx for μ = 0 and xH 1 = 1. Let Φμ (z) be the unique analytic

continuation of Φμ (t) for z ∈ E and suppose Cμ is a bound of |Φμ (z)| for all z ∈ E.

If p = Ik x, then

√

(4.4)

x − pH 1 ≤ 8 d Cμ (sinh η)−1 k e−kη .

2524

ED BUELER

Proof. First, x(z) = Φμ (z)x(−1) is the analytic continuation of x(t), t ∈ I, to

z ∈ E. Furthermore, |x(z)| ≤ Cμ |x(−1)|. It follows from (4.16) of [31] that

x − p2H 1 ≤

d

8Cμ |x(−1)|(sinh η)−1 ke−kη

2

2

= d 8Cμ (sinh η)−1 ke−kη |x(−1)|2 .

j=1

Note that |x(−1)| ≤ 0.9062xH 1 = 0.9062 by Lemma 2.4.

Estimate (4.4), which bounds the polynomial approximation error for eigenfunctions of U , will be of great importance in controlling the eigenvalue approximation

error from our spectral method (Theorem I).

Definition 4.4. Let σ > 0. The a priori eigenfunction approximation error

bound for large eigenvalues of U, for k + 1 point Chebyshev interpolation, is

√

(4.5)

k = 8 dCσ (sinh η)−1 k e−kη ,

where Cσ > 0 is a bound on the analytic continuation of fundamental solutions:

|Φμ (z)| ≤ Cσ

for all z ∈ E and |μ| ≤ σ.

The crucial fact to note is that k decays exponentially with increasing k and that

the rate of decay is related to the size of the regularity ellipse E. Not surprisingly, in

other words, if the coefficients of the DDE are more regular, then the spectral method

is better at approximating the eigenfunctions. On the other hand, the constant Cσ

generally increases rapidly as E is expanded.

5. Eigenvalue perturbation for operators on Hilbert spaces. The eigenvalue perturbation result in this section generalizes the well-known Bauer–Fike theorem for matrices [4]. It may not be new. It is fairly close to the definition of the

eigenvalue condition number itself, introduced in [36]. It is also close to Proposition 1.15 in [11]. Nonetheless we need a precise statement, especially of Corollary 5.2,

and we cannot find such in the literature.

Recall that a separable infinite-dimensional Hilbert

isomor space is

isometrically

phic to the space of sequences l2 = {a = (a1 , a2 , . . . ) aj ∈ C,

|aj |2 < ∞}. Denote

the standard basis elements of l2 by δj . By definition, a bounded operator Λ ∈ L(l2 )

is diagonal in the standard basis if for each j, Λδj = λj δj for some λj ∈ C.

Theorem 5.1. Let X be a separable infinite-dimensional complex Hilbert space.

Suppose A ∈ L(X ) is diagonalizable in the sense that there is a linear, bounded map

V : l2 → X with bounded inverse and a diagonal (in the standard basis) operator

Λ ∈ L(l2 ) such that A = V ΛV −1 . Let B ∈ L(X ). If Bx = μx for μ ∈ C and x = 1,

then

min |μ − λ| ≤ V V −1 (B − A)x.

(5.1)

λ∈σ(A)

−1

Proof. If μ ∈ σ(A) there is nothing to prove. Otherwise, let RA = (A − μI) and

−1

RΛ = (Λ − μI) . Note that RA = V RΛ V −1 so that RΛ −1 ≤ V V −1 / RA .

Also, RΛ is diagonal and bounded with

−1

RΛ ≤ sup |λj − μ|

j

−1

= max |λ − μ|

λ∈σ(A)

=

−1

min |λ − μ|

,

λ∈σ(A)

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2525

where {λi } are the diagonal entries of Λ. Thus

min |λ − μ| ≤ RA −1 V V −1 .

(5.2)

λ∈σ(A)

On the other hand, if μ is an eigenvalue of B and x ∈ H 1 satisfies Bx = μx

−1

and x = 1, then (B − A)x = −(A − μI)x, or x = − (A − μI) (B − A)x. Taking

norms, it follows that 1 ≤ RA (B − A)x. Combine this with inequality (5.2) to

get estimate (5.1).

An easy modification of Theorem 5.1 applies to X ∼

= Cd , and the result obviously

implies the classical Bauer–Fike theorem. The hypotheses of Theorem 5.1 imply

that σ(A) is the closure of the set of diagonal entries of Λ. Because the spectrum

σ(A) ⊂ C is compact for any bounded operator A ∈ L(X ), the “min” in estimate

(5.1) is appropriate.

Theorem 5.1 can be generalized to Banach spaces which are isomorphic to sequence spaces (i.e., lp spaces). Specifically, we need to hypothesize an operator Λ, similar to A in the sense used in Theorem 5.1, for which (Λ − μI)−1 ≤ supλi |λi − μ|−1 ,

where {λi } is a dense subset of σ(A). Assuming A is similar to a diagonal operator

on lp suffices, for instance. (We will not use this generalization.)

Recall that if the coefficients A, B in DDE (2.1) are analytic, then there exist polynomials very close to the eigenfunctions of the monodromy operator U (see Lemma

4.3). This motivates the following corollary.

Corollary 5.2. Suppose the hypotheses of Theorem 5.1 hold. Suppose also that

p ∈ X satisfies x − p < . Then

min |μ − λ| ≤ V V −1 (B + A) + (B − A)p .

λ∈σ(A)

Proof. Note that (B − A)x ≤ (B − A)(x − p) + (B − A)p.

Let us describe how this corollary will be used, and in so doing sketch the strategy

for proving Theorem I. In section 3 we described a spectral method which produces

an l × l matrix approximation UN which approximates the monodromy operator U

for DDE (2.1). We will numerically diagonalize UN by standard methods for matrices

of modest size, for instance, by the QR or QZ algorithms for eigenvalues and inverse

iteration for eigenvectors [17]. Typically l is in the range 30 to 300 and UN is dense.

Thus it is a reasonable task to fully diagonalize UN in practice. (Note that in stating

our results we do not include errors made in these standard computations of finitedimensional linear algebra.)

We want to know how close the unknown eigenvalues of U (the multipliers) are

to the computed eigenvalues of UN . The operators U and UN act on different spaces,

the former on a Hilbert–Sobolev space H 1 and the latter on Cl . It turns out, how˜N which acts on H 1 . Furthermore, it

ever, that we can extend UN to an operator U

turns out (next section) that a diagonalization of UN can be boosted to an operator

˜N .

diagonalization of U

A numerical diagonalization of UN is, of course, an invertible matrix V ∈ Cl×l

and a diagonal matrix Λ ∈ Cl×l such that UN = V ΛV −1 . We do not assert that UN

is diagonalizable in every case. Rather, we assert that diagonalizability is the generic

case [34]. We necessarily incorporate the conditioning of the eigenvalue problem

for UN , and specifically the condition number V˜ V˜ −1 where V˜ is the operator

˜N . The above informal definitions, made precise

formed from the eigenfunctions of U

in the next section, allow us to outline our strategy for estimating the error in the

approximate multipliers:

2526

ED BUELER

(i) given a DDE of form (2.1), compute UN as described in section 3;

(ii) numerically diagonalize UN = V ΛV −1 ; denote the polynomial described by

the kth column of V by “pk ”;

˜N = V˜ Λ

˜ V˜ −1 , where U

˜N is an operator on the same

(iii) thereby diagonalize U

−1

˜

˜

space as U ; compute bounds for V , V (Lemma 6.2);

(iv) consider only the eigenvalues μ of U which satisfy |μ| ≥ σ for some σ > 0,

and, for the finitely many normalized eigenfunctions of U with multipliers μ

satisfying |μ| ≥ σ, note the a priori bound on degree k (≤ N ) polynomial

interpolation at the Chebyshev points (Lemma 4.3 and Definition 4.4);

(v) write each pk as a linear combination of the H 1 -normalized Chebyshev polynomials T˜0 , . . . , T˜N ;

(vi) by simply applying UN , approximately solve DDE IVPs with each initial

function T˜0 , . . . , T˜N ; record a posteriori estimates from Theorem 3.4;

˜N , and B = U ; estimate norm U (vii) use Corollary 5.2 with X = H 1 , A = U

˜N )p by estimates in steps

from Lemma 2.6; bound (B − A)p = (U − U

(iv) and (vi);

(viii) conclude with an upper bound on the distance min |μ − λi | as λi ranges over

the approximate multipliers (Theorem 6.4).

6. Complete statement and proof of Theorem I. The expression (3.11) for

UN , or of the corresponding generalized eigenproblem (3.12), is all that is needed to

rapidly approximate the monodromy operator and compute approximate multipliers.

This section is devoted to the additional task of producing computable error estimates

for the approximate multipliers.

We start with some definitions and a technical lemma in which we make the

˜N which acts on H 1 (as

diagonalized l × l matrix UN into a diagonalized operator U

does the monodromy operator U ). This will allow us to apply Corollary 5.2 with

˜N and B = U .

A=U

Recall that the polynomials PN are a subspace of our Hilbert space H 1 . Let

ΠN ∈ L(H 1 ) be orthogonal projection onto PN . Using the operator notation of

section 3, define the (finite rank) approximate monodromy operator

˜N = PN UN EN ΠN ∈ L(H 1 ).

U

(6.1)

˜N ) and an l × l matrix (UN ). Lemma

We now have two operators on H 1 (U and U

6.2 below shows that a numerical diagonalization of UN yields a diagonalization of

˜N . To prove this we will need to consider how to expand a Cd -valued polynomial

U

p(t) of degree N into the basis {T˜j ⊗ es } for j = 0, . . . , N and s = 1, . . . , d, where {es }

is the standard basis for Cd . In fact, if

zj

(6.2)

p(t) =

Tj (t) ⊗ es ,

γj,s T˜j (t) ⊗ es =

γj,s √

π(1 + j)

j, s

j=0,...,N

s=1,...,d

where zj =

(6.3)

√

2 if j ≥ 1 and z0 = 1, and if {e∗s } is the dual basis to {es }, then

γj,s =

√

π(1 + j)zj−1

N

Cjr e∗s (p(tr )) ,

r=0

where C = {Cjr } is the matrix in (3.3). Thus we can find the expansion coefficients

of p(t) given the values of p(t) at the collocation points. Formulas (6.2) and (6.3) are

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2527

perhaps memorable if we call them “H 1 -normalized discrete Chebyshev series for Cd valued polynomials.” The next definition gives notation for the expansion coefficients

associated to the diagonalization of UN .

Definition 6.1. Suppose UN = V ΛV −1 with Λ = (λj )lj=1 diagonal. Let vk be

the kth column of V (1 ≤ k ≤ l). Define pk (t) = PN vk , a Cd -valued polynomial of

degree N . Expand pk (t) in discrete Chebyshev series

pk (t) =

ΓVjd+s,k T˜j (t) ⊗ es ,

where the matrix of coefficients is defined by

ΓVjd+s,k =

√

π(1 + j)zj−1

N

Cjr e∗s (pk (tr )) =

√

π(1 + j)zj−1

r=0

N

Cjr (vk )rd+s ,

r=0

where C = {Cjr } is given by (3.3). Equivalently,

ΓV = C˜ ⊗ Id V, where C˜ = π/2 diag 2−1/2 , 2, 3, . . . , N + 1 C.

Note that ΓV is an invertible (l × l) matrix because V and C are invertible.

˜N . Recall that our meaning for

In the next lemma we diagonalize the operator U

such a diagonalization is given in the statement of Theorem 5.1. Denote a typical

element of l2 by a = (asj ), j = 0, 1, . . . , 1 ≤ s ≤ d, with asj ∈ C. Informally, the next

lemma describes an operator V˜ : l2 → H 1 which has matrix form

V

0

Γ

V˜ =

0 I

in the basis {T˜j ⊗ es } for H 1 and the standard basis for l2 . Here “I” is the isometry

on rows l + 1, l + 2, . . . , ∞.

Lemma 6.2. Let V˜ : l2 → H 1 be defined by

asj pjd+s (t) +

asj T˜j (t) ⊗ es .

V˜ a =

j=0,...,N

s=1,...,d

j>N

s=1,...,d

Then V˜ is bounded and boundedly invertible, and, recalling that“| · |” is the matrix

2-norm,

(6.4)

V˜ ≤ |ΓV | + 1,

V˜ −1 ≤ |(ΓV )−1 | + 1.

˜ ∈ L(l2 ) by (Λa)

˜ jd+s = λjd+s as if j < N and s = 1, . . . , d, while if k > l then

Define Λ

j

˜

˜

(Λa)k = 0. Then Λ is a diagonal operator on l2 of rank at most l, and we have the

˜N = V˜ Λ

˜ V˜ −1 .

diagonalization U

Proof. First we compute V˜ −1 : H 1 → l2 by its action on the basis {T˜j ⊗ es }:

V −1

V −1

(Γ

)

,

.

.

.

,

(Γ

)

,

0,

.

.

.

, 0 ≤ j ≤ N,

1,jd+s

l,jd+s

V˜ −1 T˜j ⊗ es =

δjd+s ,

j > N,

2

˜ −1 V˜ a = a for all a ∈ l2 .

if {δk }∞

k=1 is the standard basis for l . It is routine to check V

V

Estimates (6.4) follow from the definition of Γ and the fact that V˜ and V˜ −1 act

⊂ H 1 and the corresponding subspace of l2 .

isometrically between PN

2528

ED BUELER

Now, UN V = V Λ if and only if UN EN pk = λk EN pk for k = 1, . . . , l. Note that

V˜ δk = pk if k ≤ l and V˜ δk = T˜j ⊗ es , where k = jd + s, if k > l. Note that

k = jd + s > l if and only if j > N . Thus if k ≤ l, then

˜N V˜ δk = PN UN EN ΠN pk = PN UN EN pk = λk pk = V˜ Λ δk ,

U

˜N V˜ δk = PN UN EN ΠN (T˜j ⊗ es ) = 0. Thus U

˜N = V˜ Λ

˜ V˜ −1 .

while if k > l, then U

˜N ≤ (max1≤j≤l |λj |) V˜ V˜ −1 .

We will use the obvious bound U

To get a posteriori estimates on eigenvalues we need a posteriori estimates on

specific IVPs for DDE (2.1). The following lemma summarizes the application of

Theorem 3.4 for this purpose.

Lemma 6.3. Suppose f ∈ H 1 and let u(t) = B(t)f (t). Let p ∈ PN be the

approximation of y found from the spectral method applied to y˙ = Ay+u, y(−1) = f (1)

(Lemma 3.3). Then

(6.5)

˜ N f H 1 ≤

U f − U

√

1/2

2πd (2CA )2 + (2A∞ CA + 1)2

Z,

where CA satisfies (3.5) and where

˙

− A(−1)f (1) − B(−1)f (−1)|.

Z = Ap − IN (Ap)∞ + Bf − IN (Bf )∞ + |p(−1)

˜N f = p. Now combine the result of Theorem 3.4

Proof. Note that U f = y and U

with inequality (2.8) in Lemma 2.5.

We now give our main theorem on the multipliers of DDE (2.1).

Theorem 6.4 (precise form of Theorem I). Suppose that A, B in (2.1) are analytic d × d matrix-valued functions with common regularity ellipse E ⊃ I. Let N ≥ 1

and l = d(N + 1). Let σ > 0 and recall the definition of the a priori eigenfunction approximation error bounds for large eigenvalues, denoted k (Definition 4.4). Assume

that UN , defined by formula (3.11), is diagonalized: UN = V ΛV −1 with V invertible

l

and Λ = (λi )i=1 diagonal. Order the eigenvalues |λ1 | ≥ |λ2 | ≥ · · · ≥ 0. Suppose

we have a posteriori estimates for IVPs using the Chebyshev polynomials as initial

functions:

˜N (T˜j ⊗ es )H 1 ≤ ν s ,

U (T˜j ⊗ es ) − U

j

(6.6)

˜N is defined by (6.1)).

where j = 0, . . . , N and s = 1, . . . , d (Lemma 6.3; note that U

k

d

Let ξk2 = j=0 s=1 (νjs )2 .

Consider a large eigenvalue μ ∈ C of U : U x = μx for x ∈ H 1 , x = 1, and

|μ| ≥ σ. Let

ωk = k U + |λ1 | cond(V˜ ) + (1 + k )ξk

for k = 1, . . . , N . Then

(6.7)

min |μ − λi | ≤ min {ω1 , . . . , ωN } cond(V˜ ).

i=1,...,l

Note that U is estimated in Lemma 2.6 and cond(V˜ ) = V˜ V˜ −1 is estimated

in Lemma 6.2.

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2529

Proof. By Lemma 4.3, for each k = 1, . . . , N we have x − qk H 1 < k , where

˜N , B = U , p = qk , and = k :

qk = Ik x. Apply Corollary 5.2 with X = H 1 , A = U

˜N ) + (U − U

˜N )qk .

(6.8)

min |μ − λi | ≤ cond(V˜ ) k (U + U

k d

On the other hand, qk = j=0 s=1 αkjs T˜j ⊗ es is a linear combination of Chebyshev polynomials, so from the a posteriori bounds νjs ,

˜N )qk H 1 ≤

(U − U

k d

|αkjs |νjs ≤ qk H 1 ξk

j=0 s=1

≤ (xH 1 + qk − xk H 1 ) ξk ≤ (1 + k )ξk

by Cauchy–Schwarz. From (6.8) and Lemma 6.2 we conclude with estimate

(6.7).

The idea in Theorem 6.4 is that all quantities on the right side of inequality

(6.7) are known a priori, can be computed by a matrix eigenvalue package, or can

be computed a posteriori (Theorem 3.4). Furthermore, the quantities k and ξk are

exponentially small, though for the latter this is in an a posteriori and fixed-precisionlimited sense. Thus the computed eigenvalues of the matrix UN can be shown to be

exponentially close to those of the operator U as long as UN is “well diagonalizable”

in the sense that cond(V˜ ) is small (which follows if cond(V ) is small). Unfortunately,

cond(V˜ ), which depends on N , and Cσ , which does not, can be large. We observe

below in two examples that cond(V˜ ) grows slowly with N , however.

Bounds on fundamental solutions appear several times, at least implicitly, in

the statement of Theorem 6.4. Recall that Lemma 2.6 estimates U in terms of a

bound CA on |ΦA (t)ΦA (s)−1 |, s, t ∈ I, where ΦA (t) is the fundamental solution to

x(t)

˙

= A(t)x(t). Also, Theorem 3.4 uses CA in the a posteriori bounds on IVPs.

Fortunately, section 4 gives a technique for computing good bounds CA . Thus the

estimate for U is reasonable and the a posteriori quantities νjs are very small in

practice (within a few orders of magnitude of machine precision). On the other hand,

as noted, the bound Cσ on the analytic continuation Φμ (z) of the fundamental solution

Φμ (t) to x(t)

˙

= (A(t) + B(t)/μ) x(t), for the large multipliers with |μ| ≥ σ, which

appears in Definition 4.4 of k , is still a priori. Such a bound will inevitably be large.

The result is that k is large for small k but that k decreases exponentially with k.

The a posteriori quantities ξk are, in practice, bounded below because precision

is fixed. In Example 3 below using double precision, ξk ≈ 10−10 for k << N and thus

ωk ≥ 10−10 . But cond(V˜ ) ≈ 108 , so min{ωk } cond(V˜ ) ≈ 10−2 , which gives only two

digits of accuracy for the largest eigenvalue in that example. Application of Theorem

6.4 is a situation where 128-bit or 256-bit floating point representation would have

substantial consequences.

7. An example of the application of Theorem I. Let us apply Theorem 6.4

to the damped delayed Mathieu equation (1.1).

Example 3. Consider the parameter values (δ, b) = (1, 1/2) in (1.1).

Using the

√

2

(0)

same method as in Example 2, we find an a priori bound CA = e2 2+1.1 ≈ 35.99

−1

on the norm of Ω = ΦA (t)ΦA (s) . We improve this bound by a posteriori iterations

as in section 4 to a new bound CA = 4.613.

Recall that if x is an eigenfunction of U corresponding to eigenvalue μ, then

x˙ = (A + B/μ)x. Suppose we consider those eigenvalues |μ| ≥ σ = 0.2. Note that

2530

ED BUELER

20

10

10

10

εk

ξk

10

10

8

ωk

10

0

10

6

10

−10

10

4

10

−20

10

−30

10

0

2

20

40

k

60

80

10

0

20

40

60

80

100

N

Fig. 7.1. Behavior of constants (left figure) when Theorem 6.4 is applied to the delayed, damped

Mathieu equation (1.1) with (δ, b) = (1, 1/2) and N = 75. The minimum of ωk is about 5 × 10−11 .

Right figure: The estimate on cond(V˜ ) from Lemma 6.2 (circles) and the 2-norm condition number

of V (dots), as functions of N .

A, B are entire, so we are free to choose any common regularity ellipse E ⊃ I with

foci ±1. The analytic continuation Φμ (z) for z ∈ E of the fundamental solution Φμ (t)

of x˙ = (A + B/μ)x

√ is bounded by Cσ as in (4.3). Let S = 1.996 (a choice explained

below) and s = S 2 − 1 be semiaxes of E. Then from (4.3), Cσ = 1.303 × 1011 ; see

below. Note that eη = S + s = 3.724 determines the exponential rate of decrease

of k .

We now apply Theorem 6.4 with N = 75. The approximate multipliers are shown

in Figure 1.2. The right side of (6.7) gives 0.01595, which is the radius of the small

eigenvalue error bound circles in Figure 1.2.

The constants k , ξk , and ωk which appear in the statement of Theorem 6.4 are

shown in Figure 7.1. For this value of N the condition number estimate for V˜ given

in Lemma 6.2 is 3.5 × 108 . The estimate of cond(V˜ ) from Lemma 6.2 depends on N ,

as shown in Figure 7.1. We observe that it grows relatively slowly for large N . (The

conditioning of the multipliers will be addressed in section 8.) In any case, the error

bound (6.7) shows that the actual multipliers which exceed σ = 0.2 in magnitude lie

within the error bound circles around the approximate multipliers in Figure 7.1. The

DDE is, in particular, stable.

It is revealing to ask how to choose the regularity ellipse E ⊃ I in the case when

A(z), B(z) are analytic in large neighborhoods of I or even when A, B are both entire.

Such is the case if A, B are constant, for instance. The significance of the choice of

E to Theorem 6.4 is that the sum of its semiaxes eη = S + s controls the rate of

exponential decay of the a priori quantities k , but also that the size of E affects the

a priori bound Cσ which appears in Definition 4.4.

Fix σ > 0 and define Cσ as in Definition 4.4. Then, noting that the minor semiaxis

√

s = S 2 − 1 is a function of S, from (4.5) we find that k is a function of S:

(7.1)

√

exp ([A∞E + B∞E /σ](S + 1))

.

k (S) = 16 d k

((S + s)2 − 1) (S + s)k−1

In general, A∞E , B∞E depend on S.

Based on the evidence in examples like the one above, for sufficiently large N the

a posteriori estimates ξk on IVPs first increase significantly at about k = 3N/4. We

therefore choose S by seeking to minimize 3N/4 (S).

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2531

Example 4 (continuation of Example 3). For (1.1) with (δ, b) = (1, 1/2) we have

|A(z)|2F ≤ 2 + (1 + | cos πz|)2 . On E with foci ±1 and semiaxes S, s, it follows that

√

1/2

.

A∞E ≤ max |A(z)|F ≤ 2 + (1 + 1 + eπs )2

z∈E

The minor semiaxis s of E appears in this expression because the magnitude of cos(πz)

grows exponentially in the imaginary direction. Note that B∞E = 1/2. To find the

best S one must solve the problem

1/2

√

exp

2 + (1 + 1 + eπs )2

+ (2σ)−1 (S + 1)

min

.

S>1

((S + s)2 − 1) (S + s)(3N/4)−1

Upon inspection we see that this is a smooth and convex single-variable minimization

problem. The minimum S = 1.996 is used above.

8. Discussion. We start with another example of the use of the spectral method

to compute the multipliers of a DDE, and of Theorem 6.4 to estimate the errors in

the multipliers.

Example 5. Consider the simple scalar DDE

(8.1)

x˙ = −x + x(t − 2).

The multipliers are exactly known in the following sense [18, Theorem A.5]: The

characteristic equation of (8.1) is λ = −1 + e−2λ . The roots of this equation are the

“exponents,” and μ = e2λ are the multipliers. Supposing λ = α+iβ, the characteristic

equation can be written as a pair of real equations

(8.2)

α = −1 + e−2α cos 2β,

β = −e−2α sin 2β.

We find a single real exponent λ0 = 0. All other exponents are complex and have imaginary parts β satisfying the transcendental equation −β = sin(2β) exp(2 + 2β cot 2β),

which follows from (8.2). The solutions of this equation are easily seen to be simple

roots βk lying in the intervals (k − 1/2)π < βk < kπ for k = 1, 2, . . . . They can be

found by the usual highly reliable numerical methods (e.g., bisection). The multipliers are then μ0 = 1 and μ±k = exp(αk ∓ iβk ), where αk is found from βk by the

first equation of (8.2). Note that these multipliers are already ordered by decreasing

magnitude.

On the other hand, we can form UN by the methods of this paper and find its

(N )

N + 1 eigenvalues μk . The comparison of these numerical multipliers to the correct

values for N = 40 (N = 80) is seen in Figure 8.1. We observe that the largest 10

(30, respectively) multipliers are accurate to full precision. The remaining smaller

multipliers are quite inaccurate. This occurrence is no surprise as the eigenfunctions corresponding to the small multipliers are highly oscillatory and exponentially

damped. (It is well known that Chebyshev interpolation requires somewhat more

than 2 points per wavelength to achieve spectral accuracy [33, section 7].)

How does the impressive accuracy of the largest approximate eigenvalues compare

to estimate (6.7) from Theorem 6.4? As also shown in Figure 8.1, if σ = 0.1, then

estimate (6.7) decreases exponentially as a function of N to a minimum of 10−7 or

so at N ≈ 55. Thus the estimate remains roughly 8 orders of magnitude above the

actual errors in the large multipliers. This difference has two sources. First, the a

posteriori error estimates (6.6) coming from IVPs are too large by a few orders of

2532

ED BUELER

0

15

10

10

10

10

−5

10

5

10

0

10

−10

10

−5

10

−15

10

−10

20

40

k

60

10

80

0

20

40

N

60

80

Fig. 8.1. Left: Error in numerical multipliers for DDE (8.1) when N = 40 (circles) and N = 80

(dots). Right: Error bound (6.7) from Theorem 6.4, as a function of N, when σ = 0.1.

4

10

3

10

2

10

1

10

0

10

10

(N )

20

k

30

40

(N )

Fig. 8.2. Condition numbers sk of the approximate multipliers μk for N = 40; cond2 (V ) =

|V | |V −1 | (dashed line) and estimate of cond(V˜ ) (dotted line) from Lemma 6.2 are also shown.

magnitude; compare Example 1 in section 3. Second, the estimate of cond(V˜ ) is in

the range 103 –105 for 30 ≤ N ≤ 80.

In fact, how badly conditioned are the computed multipliers in the above example?

(N )

(N )

Recall that sk = |wk∗ vk |−1 are the condition numbers of the eigenvalues μk of UN

if wk and vk are the associated normalized left and right eigenvectors, respectively [36,

(N )

p. 68]. Figure 8.2 shows the computed sk for N = 40. We see that for k ≤ N/2 the

(N )

condition numbers sk are small (≈ 101 ). For k ≈ N , however, the condition numbers

reach a maximum greater than 103 . Recalling that these eigenvalues are ordered by

decreasing size, we see that the difficult-to-approximate eigenvalues are the very small

ones. These eigenvalues correspond to rapidly oscillating eigenfunctions for which we

cannot expect our spectral method to do a good job anyway. Note that a priori

estimates of these condition numbers, for the approximating matrix UN or for the

monodromy operator U , are not known.

A follow-up question is this: How does the conditioning of the individual eigenvalues relate to the matrix condition number used in Theorem 6.4? Figure 8.2

also shows cond2 (V ) = |V | |V −1 | for the numerical diagonalization UN = V ΛV −1 .

We have an illustration of a quite general phenomenon: for any matrix, max sk

is always within a small factor of cond2 (V ) [12]. Here the factor is about five.

ERROR BOUNDS FOR EIGENVALUES OF PERIODIC DDES

2533

The figure also shows our estimate of cond(V˜ ) = V˜ V˜ −1 from Lemma 6.2.

We see that our estimate of cond(V˜ ) is not problematic in this example. Indeed,

max sk ≤ (estimate of cond(V˜ ) from Lemma 6.2) ≤ cond2 (V ) in this and all other

examples computed by the author. (A general proof of this fact is not known.)

We are led to a question which we can state somewhat imprecisely as follows:

(N )

Let μk be the eigenvalues of a compact operator U , and let μk be the eigenvalues

of a matrix UN which approximates U . Is there a theorem which uses the computed

(N )

(N )

condition numbers sk to bound the errors |μk − μk |? One might say that estimate (6.7) is uniform in k, because our analysis is based on a Bauer–Fike-type result

(N )

(Corollary 5.2), while Figure 8.2 suggests |μk − μk | should depend on k.

Let us address the practical computation of the bounds in this paper. First, a

common task in computing the bound in Theorem 6.4 is the evaluation of a polynomial

on the interval I = [−1, 1] given its Chebyshev collocation values. This should be

done by barycentric interpolation [27, 6]. A comparison of barycentric interpolation

to more naive methods in computing the bounds here, which clearly shows the better

performance of the barycentric method, is given in [8].

Also, in using Theorem 3.4 we need estimates of α∞ where α(t) is a polynomial

or an analytic function on I. For such norms a transform technique is useful. Consider

the polynomial case first, as follows. Recall that Tk (t) = cos(k arccos t) is the standard

kth Chebyshev polynomial and that |Tk (t)| ≤ 1 for t ∈ I. If p is a polynomial and we

N

want p∞ , then we start by expanding p(t) = k=0 ak Tk (t) by discrete Chebyshev

series (3.3). It follows that

(8.3)

α∞ ≤

N

|ak |.

k=0

Estimate (8.3) uses the coefficients ak in the Chebyshev expansion of p. These are

easily calculated by the FFT [33]. Indeed, if v is a column vector of the collocation

values of p(t), then p∞ ≤ sum(abs(coefft(v))), where “coefft” is the MATLAB

function

function a = coefft(v)

N = length(v)-1; if N==0, a=v; return, end

U = fft([v; flipud(v(2:N))])/N;

% do t -> theta then FFT

a = ([.5 ones(1,N-1) .5])’.*U(1:N+1);

If α(t) is analytic on I, then we estimate α∞ by using (8.3) on a high degree

polynomial interpolant of α(t). Concretely, we start with a modest value of M for

(M )

Chebyshev interpolation and evaluate α(t) at collocation points tj

= cos(πj/M ),

j = 0, . . . , M . Efficiency in the FFT suggests using M which is one less than a power

of two, so perhaps M = 15. We use the FFT as above to calculate the coefficients ak

of the corresponding polynomial. We then determine whether the last few coefficients

are small. For example, if max{|aM −3 |, . . . , |aM |} < 10 m , where m is machine

precision, then we accept the estimate α∞ ≈ p∞ and use (8.3). If not, we double

M —actually, Mnew = 2(Mold + 1) − 1 for efficiency in the FFT—and try again. We

might stop if M = 212 − 1, for example. (A very similar issue to the one addressed in

this paragraph appears in [3], and the technique here mimics the one there.)

Example 6. Consider estimating u − I5 u∞ on I for u(t) = sin(2t). Our procedure with α = u − I5 u stops at N = 31 with estimate u − I5 u∞ ≤ 7.1 × 10−4 . By

contrast, the use of 1000 equally spaced points in [−1, 1] and barycentric interpolation

of p(t) = (I5 u)(t) yields u − I5 u∞ ≈ 6.8 × 10−4 at substantially greater cost.

2534

ED BUELER

A final technical concern is worth raising. It relates to the application of this paper

to some of the periodic-coefficient linear DDEs which appear in practice. If DDE (2.1)

came originally from an approximate periodic solution of a nonlinear DDE, as, for

instance, when using the techniques of [13] or [14], then the period T is known only

approximately (in general). The coefficients A, B are also known only approximately.

Errors in the multipliers of the linear DDE in such cases may well be dominated by

errors in computing the periodic solution to the original (nonlinear) DDE or by errors

in computing the coefficients in (2.1).

9. Conclusion. We have introduced what we believe is a new spectral method

for linear DDEs with a single fixed delay. The method uses collocation at the Chebyshev extreme points [33]. Extension of this method to multiple fixed delays is straightforward and has been implemented in MATLAB [9]. In the periodic-coefficient case,

with period equal to the delay for technical convenience, we use the spectral method

to compute a square matrix approximation to the monodromy operator associated to

the DDE. The eigenvalues of this matrix approximation are seen in examples to be

spectrally convergent to the eigenvalues of the monodromy operator (the multipliers).

Our main result uses new eigenvalue perturbation and a posteriori estimation

techniques to give computable error bounds on the eigenvalue approximation error.

Now, one would obviously like an a priori proof of the spectral convergence of our

collocation method when the coefficients of the DDE are analytic, but this is open.

That is, one would want to show spectral convergence, as the degree of polynomial

collocation increases, for the approximate solutions of ODEs and DDEs and for the

approximate eigenvalues of the monodromy operator.

We can list some open questions related to the approximation of the multipliers

by the technique of this paper:

• How can we get a better norm bound Cσ on Φμ (z), the analytical continuation

of the fundamental solution to x˙ = (A + B/μ)x, for |μ| ≥ σ > 0 and z in a

common regularity ellipse of A and B? (See Definition 4.4.)

• What is the best norm in which to compute cond(V˜ ), the condition number

of the infinite “matrix” of approximate eigenfunctions?

An additional question about the conditioning of the multipliers appears in section 8.

We have questions about the the monodromy operator U itself. For example,

under what conditions does U actually diagonalize? Better yet, what a priori estimates

can be computed for the conditioning of its eigenvalue problem? What can generally

be said about the pseudospectra [32] of U ? Do the eigenfunctions of U generically

form a Riesz basis [2, 16] or some other strongly linearly independent basis for H 1 ?

These latter questions do not affect our a posteriori methods, however.

Acknowledgments. This paper grows out of enjoyable work on engineering

applications of DDEs initiated and sustained by Eric Butcher. Our ideas developed