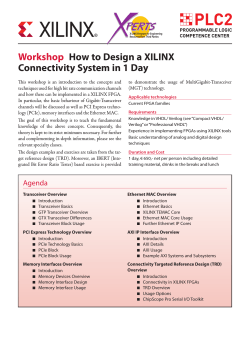

16nm UltraScale+ Devices Yield 2