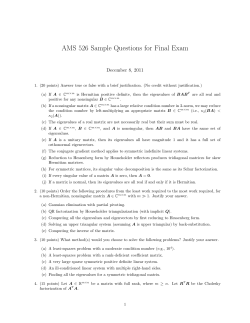

The boundary Riemann solver coming from the real - IMATI