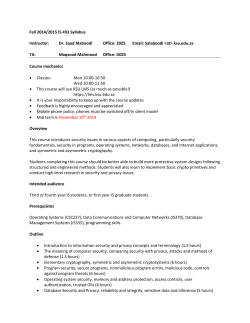

Internet research ethics - European Network of Research Integrity