Bayesian Inference Booth/UChicago Homework 4 RBG/Spring 2015

Solutions.

1. For R code see students.R. Three applications of the conjugate normal Bayesian posterior updating calculations are required. Once these have been done we can generate samples from the three posterior distributions, using the rgamma function for the variance parameters (under the usual conjugate parameterization, rgamma draws the precision 1/σ², which is then inverted) and then rnorm for the means. It then helps to take the square root of the variance samples, so that results can be reported on the standard deviation scale.
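The update-then-sample recipe described above can be sketched as follows. This is a minimal illustration, not the contents of students.R: the function name `post_sample`, the prior values, and the simulated data `y.demo` are all assumptions made for the example, and the parameterization is the common normal/inverse-gamma conjugate prior.

```r
# Conjugate normal model with unknown mean and variance (illustrative sketch).
# Assumed prior: 1/sigma^2 ~ gamma(nu0/2, nu0*s20/2),
#                mu | sigma^2 ~ normal(mu0, sigma^2/k0).
post_sample <- function(y, mu0, k0, s20, nu0, S = 10000) {
  n    <- length(y)
  ybar <- mean(y)
  s2   <- var(y)
  kn   <- k0 + n                      # posterior "prior sample size" for the mean
  nun  <- nu0 + n                     # posterior degrees of freedom
  mun  <- (k0 * mu0 + n * ybar) / kn  # posterior mean of mu
  s2n  <- (nu0 * s20 + (n - 1) * s2 + k0 * n * (ybar - mu0)^2 / kn) / nun
  # rgamma draws the precision; invert it to obtain variance samples.
  s2.mc <- 1 / rgamma(S, nun / 2, nun * s2n / 2)
  # Draw the mean conditional on each sampled variance.
  mu.mc <- rnorm(S, mun, sqrt(s2.mc / kn))
  list(mu = mu.mc, sd = sqrt(s2.mc))
}

set.seed(1)
y.demo <- rnorm(25, mean = 9, sd = 4)  # hypothetical data, not the homework data
ps <- post_sample(y.demo, mu0 = 5, k0 = 1, s20 = 4, nu0 = 2)  # assumed prior values

# Posterior mean and 95% interval for the mean and for the standard deviation.
c(mean = mean(ps$mu), quantile(ps$mu, c(0.025, 0.975)))
c(mean = mean(ps$sd), quantile(ps$sd, c(0.025, 0.975)))
```

Calling the function once per data set gives the three sets of samples that the later parts compare.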

(a) From the output of the code described above we get the following:

> mu
      mean       2.5%    97.5%
1 9.332052  1.1610551 17.19221
2 6.900416 -1.8619420 15.93767
3 7.775035 -0.1568245 15.62093
> sd
         m     2.5%    97.5%
1 3.991309 3.055323 5.317205
2 4.492500 3.405953 6.032971
3 3.841439 2.834764 5.242960
>

(b) The probabilities are obtained as:

> p123 <- mean(mu1.mc < mu2.mc & mu2.mc < mu3.mc)

> p132 <- mean(mu1.mc < mu3.mc & mu3.mc < mu2.mc)

> p231 <- mean(mu2.mc < mu3.mc & mu3.mc < mu1.mc)

> p213 <- mean(mu2.mc < mu1.mc & mu1.mc < mu3.mc)

> p321 <- mean(mu3.mc < mu2.mc & mu2.mc < mu1.mc)

> p312 <- mean(mu3.mc < mu1.mc & mu1.mc < mu2.mc)

> print(c(p123, p132, p231, p213, p321, p312))

[1] 0.0073 0.0042 0.6564 0.0921 0.2229 0.0171
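As an alternative sketch, all six ordering probabilities can be tabulated in one pass by applying `order()` to each joint draw. The matrix `draws` below is a hypothetical stand-in for `cbind(mu1.mc, mu2.mc, mu3.mc)`; its means and spreads are invented for illustration.

```r
set.seed(2)
S <- 10000
# Hypothetical stand-ins for the three columns mu1.mc, mu2.mc, mu3.mc.
draws <- cbind(rnorm(S, 9.3, 0.8), rnorm(S, 6.9, 0.9), rnorm(S, 7.8, 0.8))

# order() lists the indices from smallest to largest value,
# so the label "231" means mu2 < mu3 < mu1.
ord <- apply(draws, 1, function(r) paste(order(r), collapse = ""))
lev <- c("123", "132", "213", "231", "312", "321")
probs <- table(factor(ord, levels = lev)) / S
probs

# Cross-check one entry against the explicit comparison used above.
p231 <- mean(draws[, 2] < draws[, 3] & draws[, 3] < draws[, 1])
```

Fixing the factor levels keeps an ordering in the table even if it never occurs in the sample.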

(c) Before we can calculate similar probabilities for the predictive random variable(s)

we need to sample from the posterior predictive distribution(s).

> y1.mc <- rnorm(length(mu1.mc), mu1.mc, sd1.mc)

> y2.mc <- rnorm(length(mu2.mc), mu2.mc, sd2.mc)

> y3.mc <- rnorm(length(mu3.mc), mu3.mc, sd3.mc)

> py123 <- mean(y1.mc < y2.mc & y2.mc < y3.mc)

> py132 <- mean(y1.mc < y3.mc & y3.mc < y2.mc)

> py231 <- mean(y2.mc < y3.mc & y3.mc < y1.mc)

> py213 <- mean(y2.mc < y1.mc & y1.mc < y3.mc)

> py321 <- mean(y3.mc < y2.mc & y2.mc < y1.mc)

> py312 <- mean(y3.mc < y1.mc & y1.mc < y2.mc)

> print(c(py123, py132, py231, py213, py321, py312))

[1] 0.1079 0.1051 0.2616 0.1832 0.2087 0.1335

Observe that these numbers are closer to 1/6 than the ones for µ in part (b), above. This is because the predictive distribution has more uncertainty than the posterior distribution of the means, and therefore the distribution over the six possible orderings is closer to uniform.
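The extra uncertainty can be made explicit with the law of total variance. As a sketch, write $\tilde Y_j$ for a new observation from group $j$, with mean $\mu_j$ and variance $\sigma_j^2$ (the subscript $j$ is notation introduced here for illustration):

```latex
\mathrm{Var}(\tilde Y_j \mid y)
  = \mathrm{E}\!\left[\sigma_j^2 \mid y\right] + \mathrm{Var}\!\left(\mu_j \mid y\right)
```

The second term is the posterior variance of the mean that drives part (b). The first term is additional sampling noise, which is why the ordering of the predictive draws is harder to predict than the ordering of the means.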

(d) These probabilities are readily available from the µ and Ỹ sample(s).

> mean(mu1.mc > mu2.mc & mu1.mc > mu3.mc)

[1] 0.8793

> mean(y1.mc > y2.mc & y1.mc > y3.mc)

[1] 0.4703

Again, the probability for Ỹ is closer to the uniform value, which in this case is 1/3.

2. For R code see sens.R. The calculations are very similar to those for Question 1, above.

We set µ0 = 75 and σ02 = 100. It is important to use a very large MC sample size, S.

Otherwise the figures will be noisy relative to one another. See Figure 1 where S = 106

P(muA < muB | yA, yB)

●

●

●

0.65

●

●

0.60

probability

0.70

0.75

●

●

0.55

●

●

●

●

0

200

400

600

800

1000

k.0 = nu.0

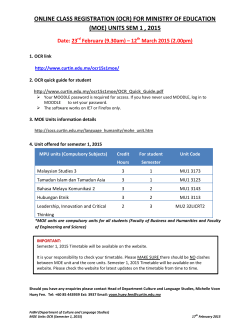

Figure 1: Posterior probability that µA is less than µB for a range of prior sample sizes.

is used. We can see that the prior sample size(s) must be several orders of magnitude

stronger than the data sample sizes before the posterior probability approaches 50/50.

People with reasonable prior uncertainty will conclude that µA < µB .
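The sensitivity analysis can be sketched as below. This is not sens.R: the summary statistics (`n`, `ybarA`, `sA`, `ybarB`, `sB`), the helper `draw_mu`, and the grid `k0s` are assumptions for illustration, and $S$ is reduced to $10^5$ to keep the example fast. Only $\mu_0 = 75$ and $\sigma_0^2 = 100$ come from the text.

```r
set.seed(3)
S   <- 1e5   # smaller than the 10^6 used for Figure 1, for speed
mu0 <- 75    # prior mean, from the text
s20 <- 100   # prior variance guess, from the text

# Assumed summary statistics (illustrative; substitute the actual data).
n <- 16
ybarA <- 75.2; sA <- 7.3
ybarB <- 77.5; sB <- 8.1

# Posterior draws of the mean under the conjugate normal model,
# computed from summary statistics only.
draw_mu <- function(ybar, s, n, k0, nu0) {
  kn  <- k0 + n
  nun <- nu0 + n
  mun <- (k0 * mu0 + n * ybar) / kn
  s2n <- (nu0 * s20 + (n - 1) * s^2 + k0 * n * (ybar - mu0)^2 / kn) / nun
  s2.mc <- 1 / rgamma(S, nun / 2, nun * s2n / 2)
  rnorm(S, mun, sqrt(s2.mc / kn))
}

# Sweep the prior sample size, setting k0 = nu0 as in Figure 1.
k0s <- c(1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024)
pAB <- sapply(k0s, function(k) {
  mean(draw_mu(ybarA, sA, n, k, k) < draw_mu(ybarB, sB, n, k, k))
})
round(pAB, 3)
```

Because both groups share the same prior mean, increasing `k0` pulls the two posterior means together and the probability drifts toward 1/2.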


3. For R code see election_2008.R. The idea behind the code is that, in each of $S = 5000$ total MC rounds, we sample (in turn) for each of the 51 electoral college “states” from the appropriate Dirichlet posterior distribution. The $\alpha$ parameter of the Dirichlet distribution, given “state” $i$’s poll percentages for McCain and Obama, $(m_i, o_i)$, is

$$\alpha_i = 500\,(m_i,\; o_i,\; 100 - m_i - o_i)/100 + (1, 1, 1).$$

Based on the collection of draws $\{\theta_i^{(s)}\}_{i=1,\dots,51}^{s=1,\dots,S}$ we may tally the number of electoral votes for Obama, counting a state's votes in the event that the random draw from its Dirichlet distribution is maximal in the second position. In other words,

$$\theta_i^{(s)} \sim \mathrm{Dirichlet}(\alpha_i), \quad \text{for } i = 1, \dots, 51,$$

$$\mathrm{ECO}^{(s)} = \sum_{i=1}^{51} \mathrm{EC}_i \cdot I\{\arg\max \theta_i^{(s)} = 2\}.$$

This assumes that each state uses a first-past-the-post, winner-takes-all system.
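The tally can be sketched in base R as follows. This is not election_2008.R: the three-row `polls` table is invented for illustration, and `rdirichlet1` is a hypothetical helper that draws from a Dirichlet distribution by normalizing independent gamma variates.

```r
set.seed(4)
S <- 5000

# Hypothetical poll percentages and electoral votes for three "states".
polls <- data.frame(m  = c(58, 42, 48),
                    o  = c(36, 53, 47),
                    EC = c(9, 55, 27))

# One Dirichlet(alpha) draw via normalized gamma variates.
rdirichlet1 <- function(alpha) {
  g <- rgamma(length(alpha), shape = alpha, rate = 1)
  g / sum(g)
}

# For each MC round, draw theta for every state and add up the electoral
# votes of the states where the second component (Obama) is the largest.
ECO <- replicate(S, {
  won <- sapply(seq_len(nrow(polls)), function(i) {
    a <- 500 * c(polls$m[i], polls$o[i], 100 - polls$m[i] - polls$o[i]) / 100 + 1
    which.max(rdirichlet1(a)) == 2
  })
  sum(polls$EC[won])
})

table(ECO) / S
```

With all 51 rows of real data in `polls`, the vector `ECO` would play the role of the samples $\mathrm{ECO}^{(s)}$ defined above.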

These samples may be used to make calculations based on the posterior predictive distribution of the number of EC votes for Obama. See Figure 2.

[Histogram: Obama's EC votes; x-axis: ECVo (250 to 400); y-axis: Frequency (0 to 1000); brackets mark the interval and "actual" marks the observed value.]

Figure 2: The posterior predictive distribution for the number of EC votes for Obama, a posterior 95% CI, and the actual value.

Observe from the figure that the 90% credible interval contains the true value, and that nearly all of the samples (> 99%) are bigger than 270, the number of EC votes needed to win. So the posterior probability of Obama winning is more than 99%.
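Given a vector of sampled totals, the interval and the winning probability reduce to a quantile call and a mean. In the sketch below `ECO` is a simulated stand-in (a rounded normal sample with invented mean and spread), not the output of the actual analysis.

```r
set.seed(5)
# Stand-in for the 5000 sampled EC totals; the values are hypothetical.
ECO <- round(rnorm(5000, mean = 350, sd = 20))

# Central 95% interval of the posterior predictive distribution.
ci <- quantile(ECO, c(0.025, 0.975))

# Monte Carlo estimate of the probability of reaching 270 EC votes.
p.win <- mean(ECO >= 270)

ci
p.win
```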
